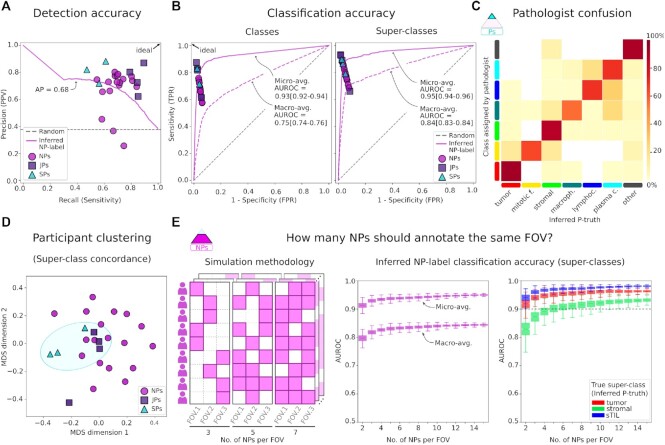

Figure 3: Accuracy of participant annotations. A. Detection precision-recall comparing annotations to inferred P-truth. Junior pathologists tend to have similar precision but higher recall than senior pathologists, possibly reflecting the tighter time constraints of senior pathologists. PPV: positive predictive value. B. Classification ROC for classes and super-classes. The overall classification accuracy of inferred NP-labels was high. However, class-balanced accuracy (macro-average) is notably lower because NPs are less reliable annotators of uncommon classes. FPR: false-positive rate. C. Confusion between pathologist annotations and inferred P-truth. D. Multidimensional scaling (MDS) analysis of interrater classification agreement. Some clustering by participant experience (blue ellipse) highlights the importance of modeling reliability during label inference. E. A simulation was used to measure how redundancy affects the classification accuracy of inferred NP-labels. While keeping the total number of NPs constant, we randomly retained annotations from a variable number of NPs per FOV. Accuracy in these simulations was class-dependent, with stromal nuclei requiring more redundancy for accurate inference. Each simulation is represented by one notched box plot, where the notches correspond to the bootstrapped 95% confidence interval around the median and the whiskers extend to 1.5× the interquartile range.
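The gap between overall and class-balanced accuracy noted for panel B can be illustrated with a minimal sketch. It assumes that "macro-average" means the unweighted mean of per-class recall; the figure itself may compute the macro-average over ROC curves instead. The function names and the toy labels are hypothetical and are not taken from the study.

```python
from collections import defaultdict


def overall_accuracy(truth, pred):
    """Fraction of all nuclei whose predicted class matches the truth."""
    return sum(t == p for t, p in zip(truth, pred)) / len(truth)


def class_balanced_accuracy(truth, pred):
    """Unweighted mean of per-class recall, so every class counts equally."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for t, p in zip(truth, pred):
        total[t] += 1
        correct[t] += int(t == p)
    return sum(correct[c] / total[c] for c in total) / len(total)


# Toy labels invented for illustration only (NOT data from the study):
# 90 nuclei of a common class and 10 of an uncommon class.
truth = ["tumor"] * 90 + ["plasma_cell"] * 10
pred = (
    ["tumor"] * 88 + ["plasma_cell"] * 2      # common class: 88 of 90 correct
    + ["tumor"] * 6 + ["plasma_cell"] * 4     # uncommon class: 4 of 10 correct
)

print(overall_accuracy(truth, pred))          # 0.92
print(class_balanced_accuracy(truth, pred))   # about 0.69
```

In this toy case the common class dominates the overall figure, while the poorly annotated uncommon class pulls the class-balanced figure down, which is the pattern the caption describes.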