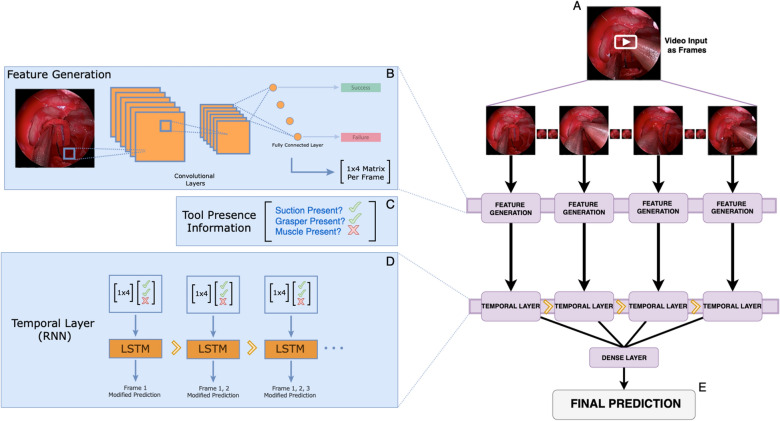

Figure 1.

SOCALNet architecture. Deep learning model used to predict blood loss and task success in a critical hemorrhage control task. (A) The video is split into individual frames. (B) A pretrained ResNet convolutional neural network (CNN) is fine-tuned on the SOCAL frames from (A) to predict blood loss and task success in each individual frame. The penultimate layer of the network is removed, and a 1 × 4 matrix of values predictive of success/failure or blood loss (BL) is obtained. Repeating this for all frames generates a new matrix with N rows (N = number of frames) and 4 columns. (C) Tool presence information (e.g. ‘Is suction present? Yes (check); is muscle present? No (X)’, etc.) is encoded as 8 binary values per frame (N × 8). The output matrix from (B) and the tool presence information from (C) are input into a temporal layer. (D) Temporal layer: a long short-term memory (LSTM) network, a modified recurrent neural network that allows temporal analysis across all frames. For each frame, the ResNet features and the tool presence information (‘check mark’, ‘X’) are fed into the temporal layer as a 2D matrix. All LSTM predictions are consolidated in one dense layer, and (E) a final prediction of success/failure and blood loss (in mL) is output.
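The data flow in panels (B) to (E) can be illustrated with a small, dependency-free sketch. This is not the authors' implementation. It assumes that the 4 per-frame CNN values and the 8 tool-presence flags are concatenated column-wise (N × 12). It also assumes that "consolidating all LSTM predictions" means mean-pooling the hidden states from every time step before a single dense layer with two outputs. The hidden size, the random untrained weights, and the helper names (`TinyLSTM`, `predict`) are all hypothetical.

```python
import math
import random

# From the caption: 4 CNN-derived values and 8 binary tool flags per frame.
CNN_DIM = 4
TOOL_DIM = 8
IN_DIM = CNN_DIM + TOOL_DIM  # assumed column-wise concatenation -> 12
HIDDEN = 6                   # hypothetical LSTM hidden size


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def rand_matrix(rows, cols, rng):
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]


def matvec(m, v):
    return [sum(w * x for w, x in zip(row, v)) for row in m]


class TinyLSTM:
    """Single-layer LSTM with random (untrained) weights, for illustration only."""

    def __init__(self, in_dim, hidden, rng):
        self.hidden = hidden
        # One weight matrix per gate (input, forget, output, candidate),
        # each acting on the concatenation [x_t, h_{t-1}].
        self.W = {g: rand_matrix(hidden, in_dim + hidden, rng) for g in "ifoc"}
        self.b = {g: [0.0] * hidden for g in "ifoc"}

    def run(self, seq):
        """Return the hidden state after every frame (one list per time step)."""
        h = [0.0] * self.hidden
        c = [0.0] * self.hidden
        states = []
        for x in seq:
            z = list(x) + h
            pre = {g: [a + b for a, b in zip(matvec(self.W[g], z), self.b[g])]
                   for g in "ifoc"}
            i = [sigmoid(v) for v in pre["i"]]
            f = [sigmoid(v) for v in pre["f"]]
            o = [sigmoid(v) for v in pre["o"]]
            cand = [math.tanh(v) for v in pre["c"]]
            c = [fv * cv + iv * gv for fv, cv, iv, gv in zip(f, c, i, cand)]
            h = [ov * math.tanh(cv) for ov, cv in zip(o, c)]
            states.append(h)
        return states


def predict(cnn_feats, tool_flags, lstm, dense_w, dense_b):
    """Hypothetical SOCALNet-style head: (N x 4) + (N x 8) -> success prob, BL (mL)."""
    assert len(cnn_feats) == len(tool_flags) > 0
    # Per-frame concatenation of CNN outputs and binary tool-presence flags.
    seq = [list(a) + [float(t) for t in b] for a, b in zip(cnn_feats, tool_flags)]
    states = lstm.run(seq)
    # Assumed consolidation: mean of the hidden states over all frames.
    pooled = [sum(col) / len(states) for col in zip(*states)]
    out = [a + b for a, b in zip(matvec(dense_w, pooled), dense_b)]
    success_prob = sigmoid(out[0])
    blood_loss_ml = max(0.0, out[1])  # assumption: clamp BL to non-negative
    return success_prob, blood_loss_ml


rng = random.Random(0)
n_frames = 5
cnn_feats = [[rng.random() for _ in range(CNN_DIM)] for _ in range(n_frames)]
tool_flags = [[rng.randint(0, 1) for _ in range(TOOL_DIM)] for _ in range(n_frames)]
lstm = TinyLSTM(IN_DIM, HIDDEN, rng)
p_success, bl_ml = predict(cnn_feats, tool_flags, lstm,
                           rand_matrix(2, HIDDEN, rng), [0.0, 0.0])
```

In practice the ResNet stage and LSTM would be built and trained in a deep learning framework. The pure-Python version above only makes the tensor shapes and the order of operations explicit.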