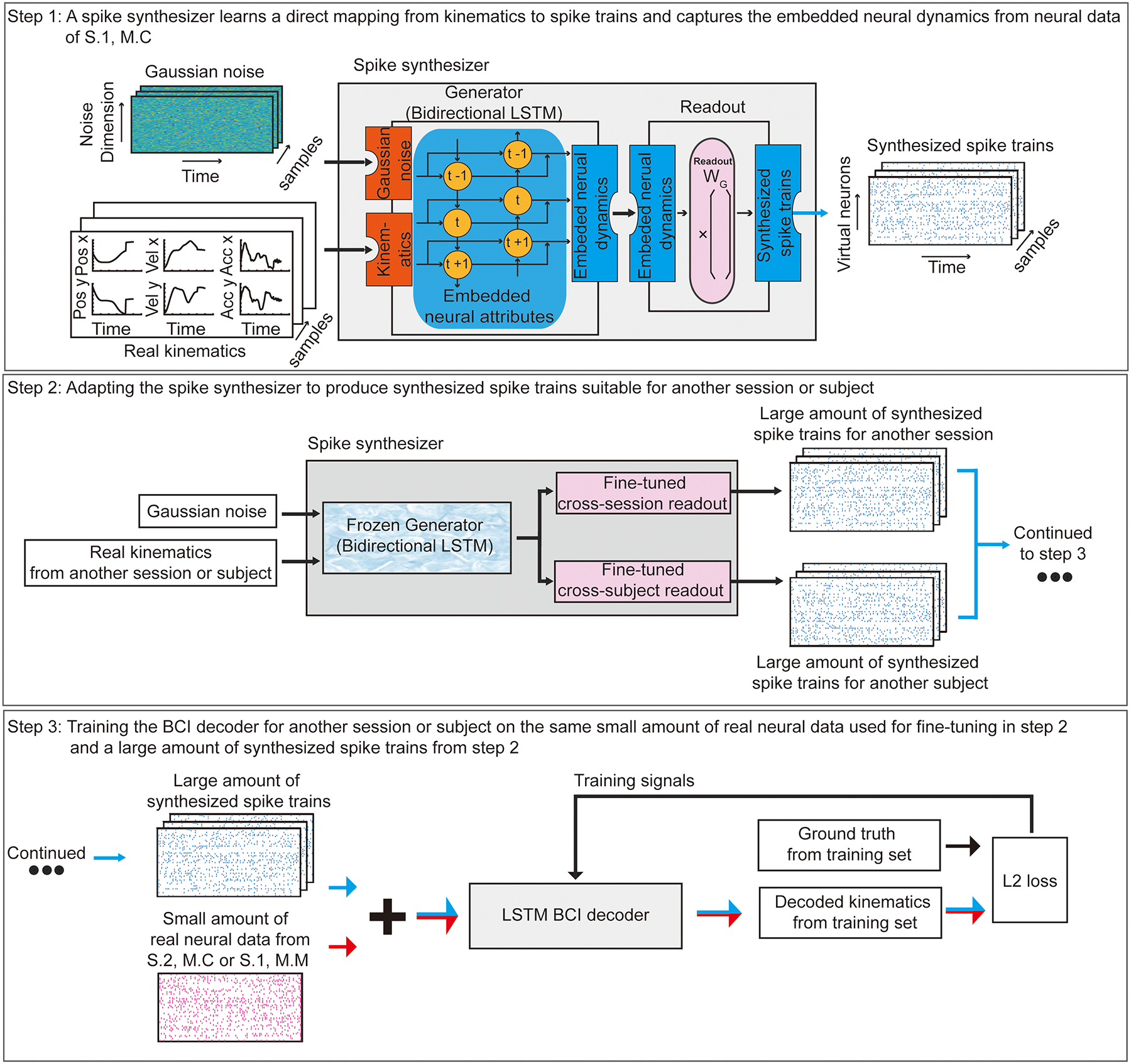

Fig. 2 | General Framework.

Step 1: Training a spike synthesizer on the neural data from session one of Monkey C (S.1, M.C) to learn a direct mapping from kinematics to spike trains and to capture the embedded neural attributes. Gaussian noise and real kinematics are input to the spike synthesizer, which consists of a Generator and a Readout. The spike synthesizer generates realistic synthesized spike trains by first learning the embedded neural attributes with the Generator (a bidirectional LSTM recurrent neural network) through a bidirectional, time-varying, generalizable internal representation (symbols t−1, t, t+1). Different instances of Gaussian noise combined with new kinematics yield different embedded neural attributes, all with properties similar to those used for training. The Readout then maps the embedded neural attributes to spike trains (using readout weight WG). Step 2: Adapting the spike synthesizer to produce synthesized spike trains suitable for another session or subject from real kinematics and Gaussian noise. We first freeze the Generator to preserve the previously learned embedded neural attributes, or virtual neurons. Then, we substitute and fine-tune the Readout modules using a limited amount of neural data from another session or subject (session two of Monkey C (S.2, M.C) or session one of Monkey M (S.1, M.M)). The fine-tuned Readout modules adapt the captured expression of these neural attributes into spike trains suitable for the other session or subject. Step 3: Training a BCI decoder for another session or subject using a combination of the same small amount of real neural data used for fine-tuning (in Step 2) and a large amount of synthesized spike trains (generated in Step 2).
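The three steps in this caption can be sketched in PyTorch as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the class name `SpikeSynthesizer`, the helper `adapt_to_new_session`, all layer sizes, the softplus rate nonlinearity, Poisson spike sampling, and the Poisson negative log-likelihood used for fine-tuning are hypothetical choices made for the example. The Step 1 training objective is not described in the caption and is omitted, and random tensors stand in for real recordings.

```python
import torch
import torch.nn as nn


class SpikeSynthesizer(nn.Module):
    """Generator (bidirectional LSTM) followed by a linear Readout."""

    def __init__(self, kin_dim, noise_dim, hidden_dim, n_neurons):
        super().__init__()
        self.noise_dim = noise_dim
        # Generator: maps kinematics + Gaussian noise to embedded neural attributes.
        self.generator = nn.LSTM(kin_dim + noise_dim, hidden_dim,
                                 batch_first=True, bidirectional=True)
        # Readout: maps embedded attributes to one output per recorded neuron.
        self.readout = nn.Linear(2 * hidden_dim, n_neurons)

    def forward(self, kinematics):
        # kinematics has shape (batch, time, kin_dim).
        batch, time, _ = kinematics.shape
        noise = torch.randn(batch, time, self.noise_dim, device=kinematics.device)
        attributes, _ = self.generator(torch.cat([kinematics, noise], dim=-1))
        # Assumption: softplus keeps the per-bin firing rates positive.
        return nn.functional.softplus(self.readout(attributes))


def adapt_to_new_session(synth, n_new_neurons):
    """Step 2: freeze the Generator and substitute a fresh Readout."""
    for p in synth.generator.parameters():
        p.requires_grad = False
    synth.readout = nn.Linear(synth.readout.in_features, n_new_neurons)
    return list(synth.readout.parameters())


torch.manual_seed(0)

# Step 1 (training loop omitted): a synthesizer for the source session.
synth = SpikeSynthesizer(kin_dim=2, noise_dim=4, hidden_dim=16, n_neurons=30)

# Step 2: fine-tune only the new Readout on a small amount of target data.
# Placeholder tensors stand in for real kinematics and binned spike counts.
real_kin = torch.randn(3, 50, 2)
real_spikes = torch.poisson(torch.ones(3, 50, 20))
trainable = adapt_to_new_session(synth, n_new_neurons=20)
optimizer = torch.optim.Adam(trainable, lr=1e-3)
loss = nn.functional.poisson_nll_loss(synth(real_kin), real_spikes,
                                      log_input=False)
optimizer.zero_grad()
loss.backward()
optimizer.step()

# Step 3: synthesize many spike trains and pool them with the real data.
with torch.no_grad():
    new_kin = torch.randn(100, 50, 2)
    rates = synth(new_kin)
    synth_spikes = torch.poisson(rates)  # assumption: Poisson spiking
train_kin = torch.cat([real_kin, new_kin], dim=0)
train_spikes = torch.cat([real_spikes, synth_spikes], dim=0)
```

Here `train_kin` and `train_spikes` would form the training set for the BCI decoder, whose architecture is not specified in this caption. Because only the Readout parameters are passed to the optimizer, the Generator's learned representation is left unchanged during fine-tuning.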