Abstract

Humans can rapidly adapt to new situations, even though they have redundant degrees of freedom (d.f.). Previous studies in neuroscience revealed that human movements could be accounted for by low-dimensional control signals, known as motor synergies. Many studies have suggested that humans use the same repertories of motor synergies among similar tasks. However, it has not yet been confirmed whether the combinations of motor synergy repertories can be re-used for new targets in a systematic way. Here we show that the combination of motor synergies can be generalized to new targets that each repertory cannot handle. We use the multi-directional reaching task as an example. We first trained multiple policies with limited ranges of targets by reinforcement learning and extracted sets of motor synergies. Finally, we optimized the activation patterns of sets of motor synergies and demonstrated that combined motor synergy repertories were able to reach new targets that were not achieved with either original policies or single repertories of motor synergies. We believe this is the first study that has succeeded in motor synergy generalization for new targets in new planes, using a full 7-d.f. arm model, which is a realistic mechanical environment for general reaching tasks.

Keywords: motor synergy, task generalization, multi-directional reaching, reinforcement learning, optimization

1. Introduction

Humans can generate complex movements (such as object manipulation and locomotion) in diverse situations, using a number of muscles and joints in a coordinated manner. Previous studies have revealed that human movements can be accounted for by a small number of primitive components, known as motor synergies [1–3]. The central nervous system is considered to simplify the control problem of complex and redundant musculoskeletal systems by linearly combining a small number of motor synergies [4,5]. Another feature of motor synergies is that the same synergy structures are often observed from various movements performed for different targets [6–9]. Such motor synergies are referred to as shared synergies, and they refer to the re-usability of motor synergy structures. It has also been reported that animals, including humans, explore combination patterns of previously acquired motor synergies during motor skill learning [10,11], suggesting that motor synergies can be combined and re-used to deal with new targets. Computationally, seeking motor synergy combinations can reduce the search space in motor learning.

Several previous studies have addressed the motor synergy structure computationally. The computational approach facilitates the analysis and evaluation of the role of each component using simple models that extract important factors of the actual system. For example, some studies have reported that motor synergy structures can be observed from trajectories acquired by machine learning and optimization: zero-moment point-based locomotion [12], feedback torque integration [13], optimal control [14,15], trajectory optimization [16], reinforcement learning for gait [17] and reaching [18]. These studies provide a methodology for approaching motor synergies without assuming how motor synergies are implemented in animals. However, task generalization through the obtained synergies has not been fully discussed. In addition, Rückert & D'Avella proposed a framework that explicitly implemented a shared-synergy structure based on dynamic movement primitives [19,20], movement representations similar to motor synergies [21]. Some studies have also examined the generalization ability of motor synergies. Al Borno et al. evaluated reaching tasks using a musculoskeletal model and reported that a single repertory of motor synergies obtained by trajectory optimization could be generalized to different initial poses and targets [22]. Hagio & Kouzaki demonstrated that a hard-wired motor synergy structure could accelerate motor learning against changes in musculoskeletal structures [23]. These studies reveal the generalization ability of single repertories of motor synergies; however, whether combinations of multiple motor synergy repertories can be generalized to new targets remains unclear. In this study, to address this question, we use multiple motor synergy repertories extracted from motor control agents (i.e. policies) that are individually trained with different targets, and we investigate a systematic generalization framework of motor synergies.

This study demonstrates that the combinations of motor synergies extracted from multiple policies can be re-used for generalization to new targets in multi-directional three-dimensional (3D) reaching tasks. We employed several types of target positions, each located on a different plane. The main contribution of this study is to demonstrate the generalization ability of motor synergies extracted from individually learned motor skills. In addition, this study provides a framework for explaining and reproducing the human ability to generalize to new situations by combining previously acquired skills, which will be beneficial to neuroscience, machine learning and robotics.

2. Material and methods

2.1. Overview

This study demonstrates that the ability of a single repertory of motor synergies can be extended by combining another repertory of motor synergies. In this study, we use spatio-temporal synergies to represent the motor synergies as follows:

$$\boldsymbol{m} = \sum_{l=1}^{L} h_l \boldsymbol{w}_l, \tag{2.1}$$

where $\boldsymbol{m}$, $\boldsymbol{w}_l$ and $h_l$ indicate the movement, the $l$th spatio-temporal synergy and its activity amplitude, respectively, and $L$ is the number of motor synergies. Considering this equation, it is expected that more diverse movements can be expressed by increasing the number of motor synergies. This study examines a more specific case: we evaluate how the representation ability can be improved by combining motor synergies extracted from different policies. To accomplish that, we construct a method that consists of (i) motor learning to acquire multiple policies, (ii) motor synergy extraction from individual policies, and (iii) generalization to new targets with combined motor synergy repertories.
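As a minimal sketch of the linear combination described above, the following NumPy snippet builds a movement from two toy synergies. The function name `combine_synergies`, the column-wise layout of `W` and all numerical values are illustrative assumptions, not taken from the study.

```python
import numpy as np

def combine_synergies(W, h):
    """Return sum_l h[l] * W[:, l], with one synergy per column of W."""
    W = np.asarray(W, dtype=float)
    h = np.asarray(h, dtype=float)
    if W.shape[1] != h.shape[0]:
        raise ValueError("need one activity amplitude per synergy")
    return W @ h

# Two toy synergies over a flattened 4-sample movement (illustrative values).
W = np.array([[1.0, 0.0],
              [0.5, 0.0],
              [0.0, 1.0],
              [0.0, 2.0]])
h = np.array([2.0, 0.5])          # activity amplitudes (hypothetical)
movement = combine_synergies(W, h)
```

Adding a column to `W` (and an entry to `h`) enlarges the set of movements that can be expressed, which is the property the combined repertories rely on.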

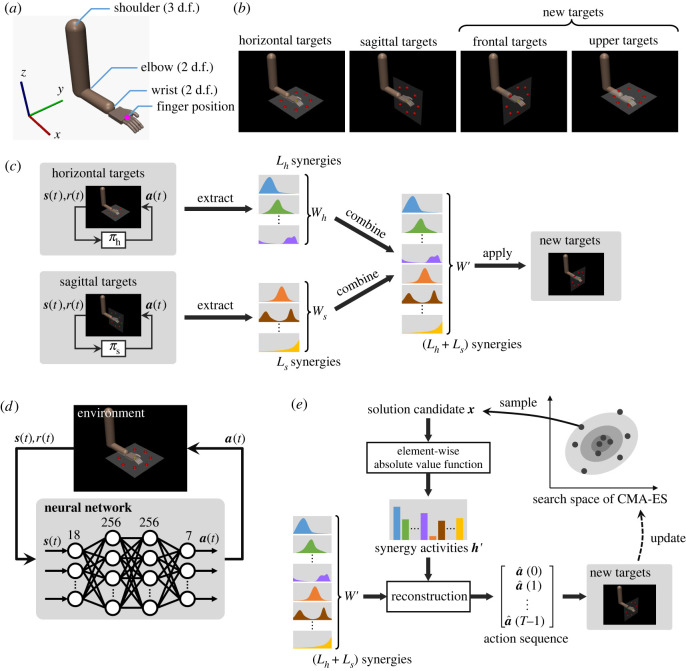

Figure 1 illustrates an overview of the method. We used the tasks illustrated in figure 1a,b. The method consists of motor learning, motor synergy extraction, combination, and optimization for new targets (figure 1c). First, multiple policies are trained for different types of targets using a deep reinforcement learning method (figure 1d). Thereafter, motor synergies are extracted from the time series of the joint torques produced by each policy. Finally, the repertories of motor synergies are combined linearly, and an optimization method adjusts the motor synergy activities to reach other types of targets, which are newly introduced to investigate task generalization (figure 1e).

Figure 1.

Overview of the method. (a) Seven-dimensional arm. (b) Reaching targets. The horizontal and sagittal targets are used for reinforcement learning, whereas the frontal and upper targets are regarded as new targets to be generalized. (c) The entire process in this study. First, multiple policies, πh and πs, are learned for the horizontal and sagittal targets, respectively. Thereafter, motor synergy repertories, Wh and Ws, are extracted. Finally, the motor synergies are integrated into W′, and its activation magnitude is optimized. (d) Set-up of reinforcement learning. (e) Optimization procedure for generalization. Solution candidates are first sampled from a Gaussian distribution. Thereafter, the solution candidates are converted to synergy activities, which are decoded into an action sequence with combined motor synergies, and the parameters of the Gaussian distribution are updated using the evaluation results.

2.2. Task description

We used a seven-degrees-of-freedom (d.f.) arm such that its kinematics resembled that of human arms. The overview of the arm is illustrated in figure 1a. The shoulder, elbow and wrist in this arm have three, two and two rotational joints, respectively. The joints are controlled by torque commands. We used MuJoCo [24] for physics simulation. The joint specifications are described in table 1.

Table 1.

Joint specifications.

| index | lower limit (rad) | upper limit (rad) |
|---|---|---|
| 1 | −1.57 | 0.70 |
| 2 | −0.85 | 1.57 |
| 3 | −0.85 | 0.85 |
| 4 | −1.50 | 1.05 |
| 5 | −1.50 | 1.57 |
| 6 | −0.50 | 0.50 |
| 7 | −1.05 | 1.05 |

This study handles a multi-directional reciprocating reaching task: the arm first moves to a target point at a specific time and then returns to the initial position at a predetermined finish time. The same type of task is often employed in studies of human arm reaching [3,25]. The observation at time t consists of the seven-dimensional joint angles, the seven-dimensional joint angular velocities, the three-dimensional target position and the normalized time. Here, the target position is fixed for each episode. The normalized time is represented as follows:

| 2.2 |

where tfinish indicates the time at which the task should be completed. The action consists of the seven-dimensional joint torque. The immediate reward r(t) is expressed as follows:

| 2.3 |

This reward function drives the arm tip to reach qtarget at ttarget and to return to qinitial at tfinish while reducing the joint torques during the task. Here, qtarget indicates the target position to be reached, and the position and velocity are those at the centre of gravity of the fingers. The coefficient values were set to k1 = 0.002 and k2 = 0.2. The sampling interval of the policy was set to Δt = 10 ms. Each episode consists of 100 samples of interaction (i.e. 1 s). In addition, ttarget and tfinish were set to 50 and 100 samples (i.e. 0.5 and 1 s), respectively.

We employed four types of target positions: cases in which the target points were on a horizontal, sagittal, frontal and upper-horizontal plane. We refer to them as horizontal, sagittal, frontal and upper targets. The target positions in each case are defined as follows:

| 2.4 |

| 2.5 |

| 2.6 |

| 2.7 |

where

| 2.8 |

| 2.9 |

| 2.10 |

The target positions are illustrated in figure 1b. As explained below, we use only the horizontal and sagittal targets for the initial policy acquisition, from which each motor synergy repertory is obtained, and the other targets are used for synergy-based optimization.

2.3. Motor synergy acquisition through reinforcement learning

We first trained policies using proximal policy optimization (PPO) [26], a reinforcement learning method. Each policy is a neural network with two hidden layers, as illustrated in figure 1d. Each hidden layer has 256 units with tanh activation. During training, critic networks with the same hidden-layer architecture as the policy networks were used. The remaining PPO settings are listed in table 2.

Table 2.

Parameters of reinforcement learning.

| item | value |
|---|---|
| no. training epochs | 3000 |
| steps per epoch | 10 000 |
| discount factor | 0.99 |
| clipping ratio | 0.2 |
| λ for generalized advantage estimator [27] | 0.97 |
| learning rate for the policy network | 0.0003 |
| learning rate for the critic network | 0.001 |
| number of gradient descent steps per epoch | 80 |
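For orientation, the settings in table 2 and the dimensions given in the task description can be gathered into a single configuration object. The sketch below is only a convenience for checking the derived quantities; the class and field names are hypothetical, and the episodes-per-epoch figure assumes that epochs contain whole 100-sample episodes.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class PPOSetup:
    # Values copied from table 2; field names are illustrative.
    n_epochs: int = 3000
    steps_per_epoch: int = 10_000
    discount: float = 0.99
    clip_ratio: float = 0.2
    gae_lambda: float = 0.97
    policy_lr: float = 3e-4
    critic_lr: float = 1e-3
    grad_steps_per_epoch: int = 80
    hidden_sizes: tuple = (256, 256)   # two tanh hidden layers
    episode_length: int = 100          # samples per episode (1 s at 10 ms)

    @property
    def episodes_per_epoch(self) -> int:
        # Assumes each epoch is filled with complete episodes.
        return self.steps_per_epoch // self.episode_length

    @property
    def total_steps(self) -> int:
        return self.n_epochs * self.steps_per_epoch

# Observation: joint angles (7) + angular velocities (7)
# + target position (3) + normalized time (1).
OBS_DIM = 7 + 7 + 3 + 1
ACT_DIM = 7  # joint torques

setup = PPOSetup()
```

Under these assumptions each policy sees 100 episodes per epoch and 3 × 10⁷ environment samples in total.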

After the policies were trained with PPO, motor synergies were extracted. We used non-negative matrix factorization (NMF) to extract spatio-temporal synergies.

Spatio-temporal synergies are primitive patterns of multi-d.f. movements that are linked both temporally and spatially. They can be extracted with NMF from a matrix of movements in multiple trials, $\boldsymbol{A}$, as follows:

$$\boldsymbol{A} \approx \boldsymbol{W}\boldsymbol{H}, \tag{2.11}$$

where $\boldsymbol{W}$ and $\boldsymbol{H}$ indicate the motor synergies and their activity amplitudes in individual trials, respectively. Among the various definitions of motor synergies [28], spatio-temporal synergies can compress both spatial and temporal patterns of the time series of actions.

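Before extracting motor synergies, it is necessary to pre-process the sequences of M-d.f. actions, which can take both positive and negative values, because NMF can only handle non-negative values. First, an action at time t in the nth trial, $\boldsymbol{a}_n(t)$, is converted to two non-negative values, $\boldsymbol{a}_n^{+}(t)$ and $\boldsymbol{a}_n^{-}(t)$, as follows:

$$\boldsymbol{a}_n^{+}(t) = \max\bigl(\boldsymbol{a}_n(t), \boldsymbol{0}\bigr) \tag{2.12}$$

and

$$\boldsymbol{a}_n^{-}(t) = \max\bigl(-\boldsymbol{a}_n(t), \boldsymbol{0}\bigr), \tag{2.13}$$

where the maximum is taken element-wise, and $\boldsymbol{a}_n^{+}(t)$ and $\boldsymbol{a}_n^{-}(t)$ indicate the positive and negative parts of $\boldsymbol{a}_n(t)$, respectively. By definition, $\boldsymbol{a}_n(t) = \boldsymbol{a}_n^{+}(t) - \boldsymbol{a}_n^{-}(t)$ holds. Thereafter, an action matrix is constructed as follows: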

| 2.14 |

where T and N indicate the number of actions in a trial and the number of trials, respectively. Because the positive and negative parts of each action can be regarded as M × 1 matrices, the action matrix has 2MT × N elements.

Finally, the action matrix can be decomposed into spatio-temporal synergies and their activities with NMF as in (2.11). Here, the synergy matrix has 2MT × L elements and the activity matrix has L × N elements, where L is the number of motor synergies. In this study, T = 100 and M = 7 are used.
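The extraction pipeline described in this section can be sketched as follows. This is an illustration under stated assumptions rather than the implementation used in the study: the row ordering inside the action matrix (positive parts followed by negative parts at each time step), the multiplicative-update NMF solver (Lee and Seung's rule) and the random stand-in torques are all choices made here for the example.

```python
import numpy as np

def split_signed(actions):
    """Split signed actions into non-negative positive and negative parts."""
    return np.maximum(actions, 0.0), np.maximum(-actions, 0.0)

def build_action_matrix(actions):
    """Turn actions of shape (N, T, M) into a non-negative (2*M*T, N) matrix.

    Assumed row order per trial: for each time step, the M positive parts
    followed by the M negative parts.
    """
    pos, neg = split_signed(actions)
    n_trials = actions.shape[0]
    stacked = np.concatenate([pos, neg], axis=2)   # (N, T, 2M)
    return stacked.reshape(n_trials, -1).T         # (2MT, N)

def nmf(A, n_synergies, n_iter=500, eps=1e-9, seed=0):
    """Factorize A ~= W @ H with multiplicative updates."""
    rng = np.random.default_rng(seed)
    W = rng.random((A.shape[0], n_synergies)) + eps
    H = rng.random((n_synergies, A.shape[1])) + eps
    for _ in range(n_iter):
        H *= (W.T @ A) / (W.T @ W @ H + eps)
        W *= (A @ H.T) / (W @ H @ H.T + eps)
    return W, H

# Stand-in data: N = 8 trials, T = 100 samples, M = 7 joints.
rng = np.random.default_rng(1)
actions = rng.normal(size=(8, 100, 7))
A = build_action_matrix(actions)
W, H = nmf(A, n_synergies=4)   # W: (1400, 4) synergies, H: (4, 8) activities
```

Each column of `W` is one spatio-temporal synergy covering all joints and all time steps, and each column of `H` holds the activities for one trial.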

2.4. Generalization to new targets

By training multiple policies on different targets, we can obtain multiple repertories of motor synergies. Suppose we have two sets of motor synergies for the horizontal and sagittal reaching targets, and let the numbers of motor synergies be Lh and Ls, respectively. Combining them, we can use L′ ≡ Lh + Ls motor synergies to acquire new movements.

Using these motor synergies, we optimized the magnitude of their activities (i.e. hl in equation (2.1)) for new targets. The optimization was performed using a numerical optimization method that explores solutions in the manner of evolutionary computation.

We optimized the magnitude of the L′ motor synergy activities, denoted as , using the covariance matrix adaptation evolution strategy (CMA-ES) [29,30]. An overview is illustrated in figure 1e. The covariance matrix adaptation evolution strategy is a stochastic optimization method that uses a Gaussian distribution to search for a solution. It searches an L′-dimensional space in which the coordinates correspond to the activities of motor synergies, and it maximizes the following accumulated reward G:

| 2.15 |

In each optimization step, candidates were sampled from a Gaussian distribution, and the parameters of the Gaussian distribution (i.e. the mean vector and covariance matrix) were updated through the evaluation of the sampled candidates. Here, indicates the floor function. The initial parameters of the Gaussian distribution were set to and σ = 10⁻³. The CMA-ES explores the entire L′-dimensional space, including negative values, whereas the motor synergy activity should be non-negative. To restrict the solutions to non-negative values, a sampled candidate was converted to the motor synergy activity using the element-wise absolute value function, , as follows:

| 2.16 |

The motor synergy activity, , was finally decoded to a time series of actions as follows:

| 2.17 |

and

| 2.18 |

where and indicate the motor synergies in the horizontal and sagittal targets, respectively.
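The decoding step can be illustrated with a short sketch: a sampled candidate is made non-negative with the element-wise absolute value, multiplied by the concatenated synergy matrix, and folded back into signed joint torques. The function and variable names are hypothetical, and the row layout (positive parts followed by negative parts at each time step) is an assumption of this example.

```python
import numpy as np

def decode_actions(x, W_h, W_s, n_steps, n_joints):
    """Decode a candidate x into a signed action sequence of shape (T, M).

    W_h and W_s have 2*M*T rows; their columns are the horizontal and
    sagittal synergies. Assumed row order: for each time step, M positive
    parts followed by M negative parts.
    """
    h = np.abs(np.asarray(x, dtype=float))   # non-negative activities
    W_comb = np.hstack([W_h, W_s])           # (2MT, L_h + L_s)
    flat = W_comb @ h                        # non-negative torque parts
    parts = flat.reshape(n_steps, 2, n_joints)
    return parts[:, 0, :] - parts[:, 1, :]   # positive minus negative

# Toy case with T = 3 samples and M = 2 joints (illustrative values).
T, M = 3, 2
W_h = np.zeros((2 * M * T, 1))
W_s = np.zeros((2 * M * T, 1))
W_h[0, 0] = 1.0   # positive part of joint 0 at t = 0
W_s[2, 0] = 1.0   # negative part of joint 0 at t = 0
actions = decode_actions([-3.0, 1.0], W_h, W_s, n_steps=T, n_joints=M)
```

Because of the absolute value, candidates that differ only in sign decode to the same action sequence.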

Here we used CMA-ES instead of PPO because the learning targets differ. As the motor synergy activities correspond one-to-one to trajectories, we did not need to optimize sequential decision making but only a single set of variables. In addition, we did not use neural networks but directly optimized the motor synergy activities. Thus, a numerical optimization method was suitable.
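The sample, evaluate and update loop of figure 1e can be sketched with a deliberately simplified Gaussian evolution strategy. This is not CMA-ES: it re-estimates only the mean and a diagonal standard deviation from the best half of each generation, and it has no step-size adaptation. The zero initial mean, the population-size rule (a common CMA-ES default), the larger initial σ and the quadratic stand-in for the accumulated reward G are all assumptions of this example.

```python
import numpy as np

def gaussian_es(objective, dim, sigma0, n_gen=200, seed=0):
    """Maximize `objective` with a simplified Gaussian evolution strategy."""
    rng = np.random.default_rng(seed)
    popsize = 4 + int(np.floor(3 * np.log(dim)))  # common CMA-ES default
    n_elite = max(1, popsize // 2)
    mean = np.zeros(dim)                          # assumed initial mean
    std = np.full(dim, float(sigma0))
    best_x, best_g = mean.copy(), objective(mean)
    for _ in range(n_gen):
        candidates = mean + std * rng.standard_normal((popsize, dim))
        scores = np.array([objective(x) for x in candidates])
        order = np.argsort(scores)[::-1]          # best candidate first
        elite = candidates[order[:n_elite]]
        if scores[order[0]] > best_g:
            best_x, best_g = candidates[order[0]].copy(), scores[order[0]]
        # Spread is measured around the old mean, then the mean is moved.
        std = np.sqrt(np.mean((elite - mean) ** 2, axis=0)) + 1e-12
        mean = elite.mean(axis=0)
    return best_x, best_g

h_star = np.array([1.0, 0.5, 2.0])   # hypothetical best activities

def accumulated_reward(x):
    h = np.abs(x)                    # activities are kept non-negative
    return -float(np.sum((h - h_star) ** 2))

# sigma0 is larger than the 10^-3 used with CMA-ES because this
# simplified variant cannot enlarge its step size.
x_best, g_best = gaussian_es(accumulated_reward, dim=3, sigma0=0.3)
h_best = np.abs(x_best)
```

In the study, each evaluation corresponds to one simulated episode of the arm driven by the decoded actions, so the search operates on L′ numbers per target rather than on network weights.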

3. Results

3.1. Motor synergy emergence by deep reinforcement learning

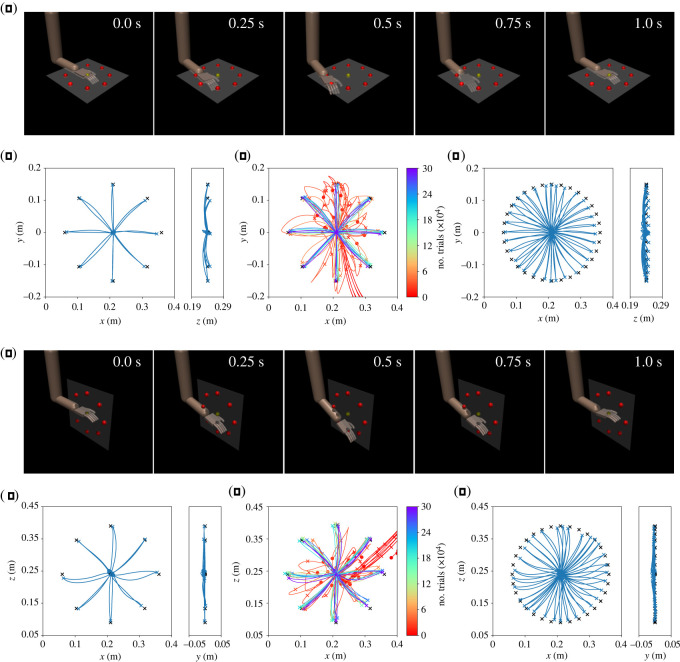

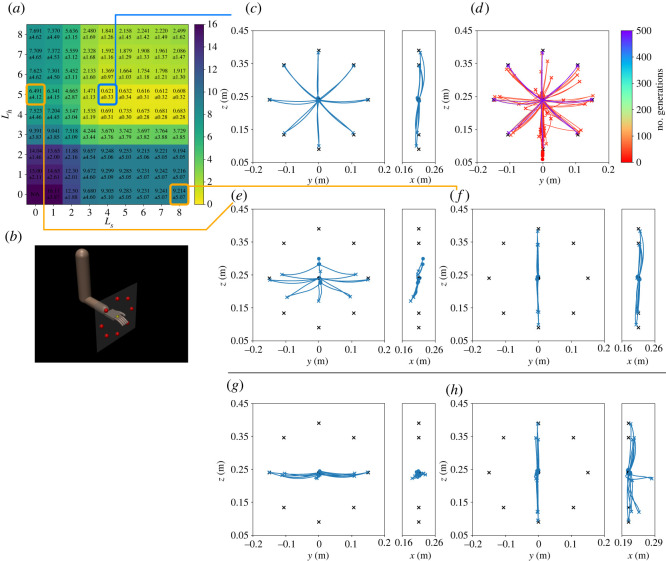

Two policies were trained with PPO for the horizontal and sagittal targets (figure 1b). We refer to these policies as the horizontal and sagittal policies, respectively. Figure 2a,e shows the snapshots of the horizontal and sagittal policies. The movements are also available in electronic supplementary material, movie S1. Also, figure 2b,f shows the trajectories performed by the horizontal and sagittal policies. In addition, the trajectories during the training are shown in figure 2c,g. The errors at t = ttarget, tfinish in the eight learned targets are summarized in table 3.

Figure 2.

Results in the horizontal and sagittal policies. (a–d) indicate the horizontal targets, and (e–h) indicate sagittal targets. (a) An example of snapshots in the horizontal targets. (b) Trajectories in the horizontal targets. Black cross symbols and bullets indicate qtarget and qinitial, respectively. (c) Learning progress. Trajectories at the 0, 10, 20, 30, 50, 100, 150, 200, 250 and 300 (×10³)th iterations are shown. (d) Trajectories in the various targets with different θ, including unlearned ones. (e) An example of snapshots in the sagittal targets. (f) Trajectories in the sagittal targets. (g) Learning progress. Trajectories at the 0, 10, 20, 30, 50, 100, 150, 200, 250 and 300 (×10³)th iterations are shown. (h) Trajectories in the various targets with different θ, including unlearned ones.

Table 3.

Reaching errors. Mean values and standard deviations are described. Italics type indicates that the mean error in is less than 1 cm.

| target type | method | error at t = ttarget (cm) | error at t = tfinish (cm) |
|---|---|---|---|
| horizontal targets | horizontal policy | 0.781 ± 0.494 | 0.419 ± 0.110 |
| sagittal targets | sagittal policy | 0.863 ± 0.310 | 0.890 ± 0.296 |
| frontal targets | optimization results (Lh = 5, Ls = 4) | 0.621 ± 0.331 | 0.411 ± 0.164 |
| | optimization results (Lh = 5, Ls = 0) | 6.49 ± 4.12 | 2.44 ± 2.06 |
| | optimization results (Lh = 0, Ls = 8) | 9.21 ± 5.07 | 0.492 ± 0.152 |
| | horizontal policy | 9.52 ± 5.69 | 0.618 ± 0.230 |
| | sagittal policy | 9.46 ± 5.51 | 0.667 ± 0.210 |
| upper targets | optimization results (Lh = 5, Ls = 5) | 0.754 ± 0.342 | 0.957 ± 0.438 |
| | optimization results (Lh = 8, Ls = 0) | 3.81 ± 0.577 | 1.69 ± 0.165 |
| | optimization results (Lh = 0, Ls = 6) | 9.50 ± 4.68 | 0.943 ± 0.380 |
| | horizontal policy | 4.97 ± 0.288 | 0.483 ± 0.177 |
| | sagittal policy | 9.55 ± 5.11 | 0.861 ± 0.331 |

To further confirm the ability of the policies, we evaluate other target positions using different target angles θ as follows:

| 3.1 |

of which 24 targets were not presented during training. Figure 2d,h shows the 32 trajectories performed by the horizontal and sagittal policies.

We extracted synergy structures from the actions (i.e. joint torques) performed by the horizontal and sagittal policies. Using the eight trials for each policy, we evaluated the R²-values to quantify the reconstruction performance for various numbers of motor synergies. The R²-values are computed as follows:

| 3.2 |

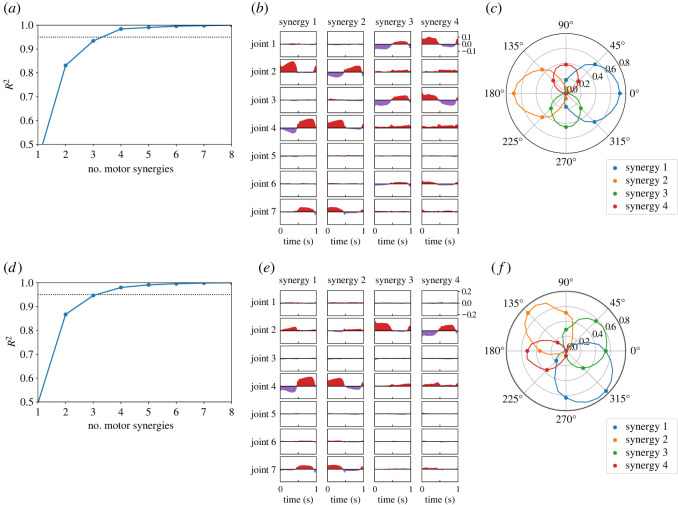

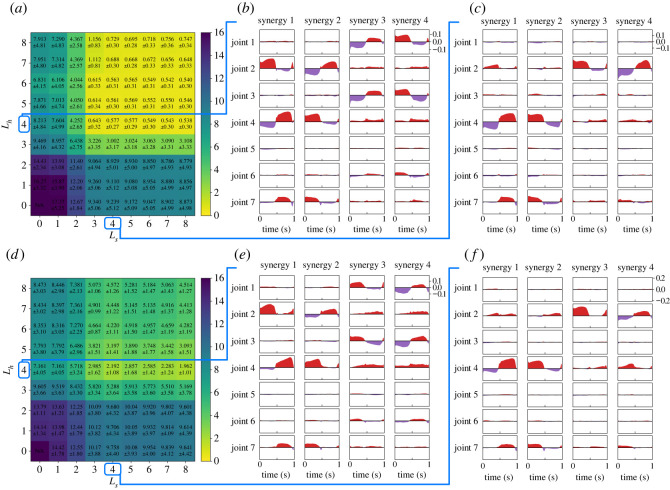

where and indicate the original and reconstructed actions at time t, respectively, and indicates the mean values of the actions over the trial. Figure 3a,d shows the R²-values of the motor synergies extracted from the horizontal and sagittal policies. Four or more motor synergies achieved R² > 0.95 in both the horizontal and sagittal policies.
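A reconstruction score of this kind can be computed as sketched below, using the standard coefficient of determination (one minus the ratio of residual to total sum of squares). The function name is hypothetical, and taking the mean per joint over the time steps of a trial is an assumption about how the mean action is defined.

```python
import numpy as np

def reconstruction_r2(actions, reconstructed):
    """R^2 between original and reconstructed actions of shape (T, M)."""
    actions = np.asarray(actions, dtype=float)
    reconstructed = np.asarray(reconstructed, dtype=float)
    # Assumed: mean action per joint over the time steps of the trial.
    mean_action = actions.mean(axis=0, keepdims=True)
    residual = np.sum((actions - reconstructed) ** 2)
    total = np.sum((actions - mean_action) ** 2)
    return 1.0 - residual / total

# Toy trial with T = 3 samples and M = 2 joints (illustrative values).
actions = np.array([[0.0, 1.0],
                    [2.0, 3.0],
                    [4.0, 5.0]])
perfect = reconstruction_r2(actions, actions)
baseline = reconstruction_r2(actions, np.tile(actions.mean(axis=0), (3, 1)))
```

A perfect reconstruction scores 1, while a reconstruction that only reproduces the mean torque of each joint scores 0.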

Figure 3.

Motor synergies extracted from the horizontal and sagittal policies. (a–c) Correspond to the results in the horizontal targets, whereas (d–f) correspond to results in the sagittal targets. (a,d) Reconstruction performance of motor synergies. (b,e) Waveforms of motor synergies. The red and purple waves correspond to the positive and negative components of the joint torque, respectively. (c) Synergy activities for the target directions θ in equation (2.4). and correspond to the x- and y-axes, respectively. In addition, the plots and curves correspond to the motor synergy activities of the 8- and 32-directional reaching movements, respectively. (f) Synergy activities for the target directions θ in equation (2.5). and correspond to the x- and z-axes, respectively.

Figure 3b,e shows the waveforms of the four motor synergies extracted from the eight reaching movements shown in figure 2b,f. In these panels, the red positive values correspond to the positive parts of the joint torque, whereas the purple negative values correspond to its negative parts. Moreover, figure 3c,f shows the synergy activities as functions of the target direction θ. In these panels, the marked points correspond to the learned targets shown in figure 2b,f, whereas the curved lines correspond to the 32 targets shown in figure 2d,h.

3.2. Generalization to new target positions with motor synergies

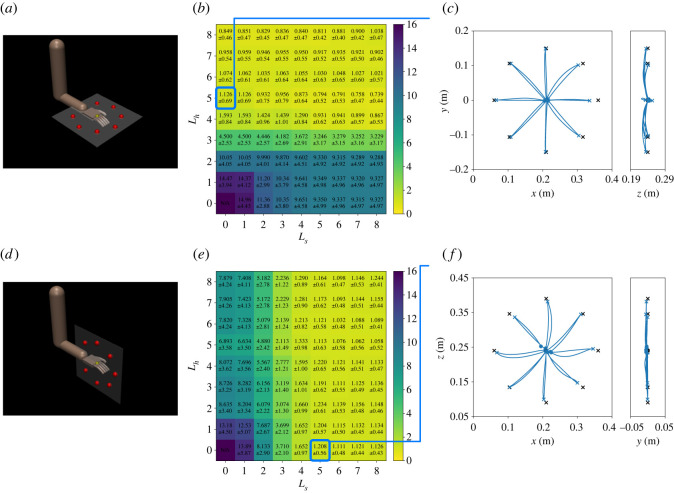

We optimized the magnitude of the motor synergy activities using the method illustrated in figure 1e to obtain new movements. First, we applied the method to the reaching tasks with the horizontal and sagittal targets to investigate the ability of the motor synergies. Figure 4 shows the results in the horizontal and sagittal targets, which were used in reinforcement learning. Figure 4b,e shows the reaching errors for varying numbers of motor synergies. The trajectories in the horizontal targets with Lh = 5 and Ls = 0 are shown in figure 4c. In addition, the trajectories in the sagittal targets with Lh = 0 and Ls = 5 are shown in figure 4f.

Figure 4.

Optimization results in learned targets; (a–c) correspond to the results in the horizontal targets, whereas (d–f) correspond to results in the sagittal targets. (a) Horizontal targets. (b) Error map in (cm). (c) Trajectories when Lh = 5 and Ls = 0. (d) Sagittal targets. (e) Error map in (cm). (f) Trajectories when Lh = 0 and Ls = 5.

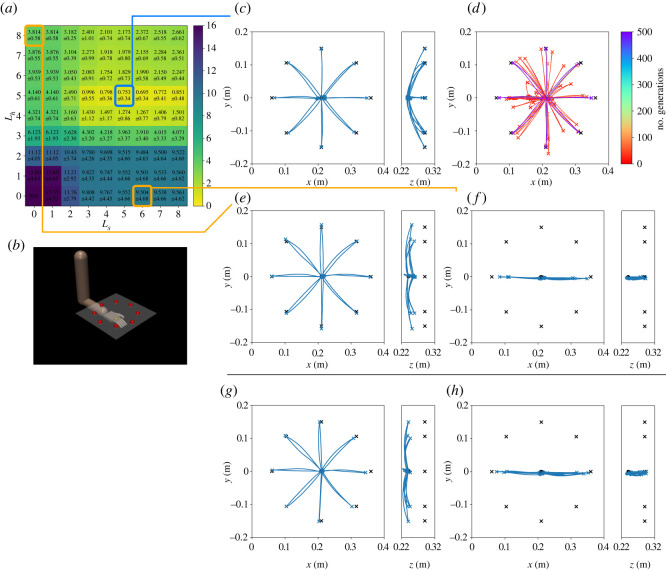

Subsequently, we evaluated the method in the frontal targets, which can be regarded as new targets in a new plane. Figure 5 shows the results. Figure 5a shows the reaching errors for varying numbers of motor synergies, Lh and Ls. Among these results, the case with Lh = 5 and Ls = 8 resulted in the smallest error. Figure 5c shows the trajectories with Lh = 5 and Ls = 4, where the average error is 0.621 cm. This was not the smallest error, but the difference from the smallest error was 0.013 cm, which was approximately 4% of the standard deviation. The movements with Lh = 5 and Ls = 4 are available in electronic supplementary material, movie S1. Figure 5d shows the trajectories during the optimization. Furthermore, when only a single motor synergy repertory was used, the cases with Lh = 5 (horizontal repertory only) and Ls = 8 (sagittal repertory only) resulted in the smallest errors. The trajectories are shown in figure 5e,f. For comparison, the trajectories obtained by the policies are shown in figure 5g,h. The errors at t = ttarget, tfinish are summarized in table 3.

Figure 5.

Optimization results in the frontal targets (new targets). (a) Error map in (cm). (b) Frontal targets. (c) Trajectories when Lh = 5 and Ls = 4. (d) Learning progress. Trajectories at the 0, 10, 20, 30, 40, 50, 100, 150, 200, 300, 400 and 500th generations are shown. (e) Trajectories when Lh = 5 and Ls = 0. (f) Trajectories when Lh = 0 and Ls = 8. (g) Trajectories by the horizontal policy. (h) Trajectories by the sagittal policy.

Moreover, the same evaluation was conducted for the upper targets, which can be regarded as new targets in a new plane obtained by sliding the horizontal plane. Figure 6a shows the reaching errors for varying numbers of motor synergies, Lh and Ls. Figure 6c shows the trajectories with Lh = 5 and Ls = 5, where the average error is 0.753 cm, and figure 6d shows the trajectories during the optimization. The movements with Lh = 5 and Ls = 5 are also available in electronic supplementary material, movie S1. Furthermore, when only a single motor synergy repertory was used, the cases with Lh = 8 (horizontal repertory only) and Ls = 6 (sagittal repertory only) resulted in the smallest errors. The trajectories are shown in figure 6e,f. For comparison, the trajectories obtained by the policies are shown in figure 6g,h. The errors at t = ttarget, tfinish are summarized in table 3.

Figure 6.

Optimization results in the upper targets (new targets). (a) Error map in (cm). (b) Upper targets. (c) Trajectories when Lh = 5 and Ls = 5. (d) Learning progress. Trajectories at the 0, 10, 20, 30, 40, 50, 100, 150, 200, 300, 400 and 500th generations are shown. (e) Trajectories when Lh = 8 and Ls = 0. (f) Trajectories when Lh = 0 and Ls = 6. (g) Trajectories by the horizontal policy. (h) Trajectories by the sagittal policy.

3.3. Transferability to different arms

We conducted an additional simulation to evaluate whether the results so far hold even when the arm models differ. In human studies, measured data usually contain movements of multiple subjects who have different kinematic parameters such as link lengths. Here, three arm models with different link lengths were used: the original model, a shorter-arm model and a longer-arm model. The link parameters are listed in table 4. First, policies were trained for the shorter- and longer-arm models by using PPO with the same hyper-parameters as in table 2. In policy learning, target positions were calculated with equations (2.4) and (2.5), whereas varied depending on the arm lengths. We then extracted motor synergies from the trajectories of each arm model and optimized their activities with CMA-ES for the original arm model.

Table 4.

Link lengths of arm models.

| model name | shoulder-to-elbow (m) | elbow-to-wrist (m) |
|---|---|---|
| original model | 0.36 | 0.27 |
| shorter-arm model | 0.31 | 0.22 |
| longer-arm model | 0.41 | 0.32 |

Figure 7 shows the results in the frontal targets. Compared with figure 5a, the motor synergies by the shorter-arm model brought about slightly smaller errors, whereas the motor synergies by the longer-arm model brought about larger errors. When the motor synergies from the shorter-arm model were used, the error was 0.577 ± 0.27 cm in Lh = Ls = 4. In contrast, when the motor synergies from the longer-arm model were used, the error was 2.192 ± 1.08 cm in Lh = Ls = 4; this was approximately three times larger than that observed in figure 5a.

Figure 7.

Optimization results in the frontal targets when different arm models were used in synergy extraction. Note that the original arm model was used in optimization. (a) Error map in (cm) when motor synergies of the shorter-arm model were applied to the original arm model. (b) Motor synergies when the shorter-arm model is reaching the horizontal targets. (c) Motor synergies when the shorter-arm model is reaching the sagittal targets. (d) Error map in [cm] when motor synergies of the longer-arm model were applied to the original arm model. (e) Motor synergies when the longer-arm model is reaching the horizontal targets. (f) Motor synergies when the longer-arm model is reaching the sagittal targets.

4. Discussion

4.1. Performance of the learned movements

The horizontal and sagittal policies trained with reinforcement learning demonstrated high performance and generalization ability; nonetheless, there were limitations. It can be observed that these policies have learned the reaching task and have demonstrated the generalization ability for the intermediate target positions between the trained ones (figure 2 and table 3). By contrast, when these policies are applied without a combination of policies, the performance is greatly degraded in the frontal and upper targets, as shown in figures 5g,h and 6g,h and table 3. The difference in the performance arises from whether the new targets are an interpolation or an extrapolation of the learned targets. Within the same target type (i.e. where only the angle θ is changed), targets are located at approximately the intermediate positions between the trained ones. In such cases, the policies can easily succeed in the reaching tasks by interpolating learned behaviours. By contrast, when the targets were changed to the frontal targets, most of the targets were no longer at the intermediate positions between the horizontal or sagittal targets. Therefore, the policies have to extrapolate from learned behaviours, which is generally difficult. It can be observed that the arm was moved to the points where the target is projected onto the horizontal/sagittal plane. The policies attempted to reach the frontal and upper targets by interpolating the learned behaviours.

The endpoint trajectories show a characteristic feature observed in human reaching movements. The trajectories were almost along straight lines to the targets. Although the trajectories drooped slightly in the z-direction owing to gravity, the policy compensated for most of the gravity effect. The gravity effect was not completely eliminated because of a trade-off with the reward term of energy minimization in (2.3). It has been reported that humans move their hand tips in a straight line to the target position [3]. This phenomenon has been reproduced computationally in previous studies [15,31]; nevertheless, the gravity force in these studies is compensated beforehand. By contrast, the policies in this study reproduce the same phenomenon in the presence of gravity. This indicates that the learned movements reflect the skills of the policies, such as gravity compensation.

The fact that the policies failed in new targets (i.e. frontal and upper targets) is important for the purpose of this study. We aimed to demonstrate the generalization ability of the combined motor synergies for new targets; therefore, it is preferable that the new targets are genuinely new for the agents (policies or humans). The performance degradation of the policies in new targets implies that these targets were totally new for the policies. Therefore, if motor synergies generalize to these new targets by combination, we can conclude that the generalization ability emerged from the motor synergy combinations, not the policies. By contrast, it is difficult to verify the same thing with human subjects, who have already obtained motor synergies for various motor tasks. In addition, given recent developments in deep reinforcement learning, the same approach is expected to be applicable to more complicated motor tasks.

4.2. Representation ability of motor synergies

Motor synergies are able to reconstruct the original actions (figure 3) and achieve the reaching task (figure 4). Only four motor synergies resulted in R² > 0.95 in both the horizontal and sagittal targets. Moreover, the average target errors could be reduced to approximately 1 cm in both the horizontal and sagittal targets with only five motor synergies. It can also be observed that the trajectories of motor synergies (figure 4) resemble those of the policies (figure 2). Because a single movement consists of 100 actions of seven joints, the motor synergies significantly and efficiently reduce the dimensionality of the movements.

We also examined the relationship between the reconstruction accuracy and performance. The selection of motor synergies with high reconstruction accuracy resulted in high performance, suggesting that the reconstruction accuracy could be used to determine the appropriate number of motor synergies for motor control. Previous studies that applied motor synergies to robot control employed reconstruction accuracy to determine the number of motor synergies to use [32–35]. However, the performance and R²-values did not completely correspond to each other. For example, the reaching errors with three and four motor synergies differed almost twofold, whereas the R²-values showed no such remarkable change, especially in the sagittal targets (figures 3d and 4e). A possible reason is the gap between signal reconstruction and performance reconstruction, as pointed out in [36].

Each repertory of the extracted motor synergies is considered to be specialized for the corresponding targets. Regarding the horizontal targets, the number of motor synergies from the horizontal policy remarkably affected the performance, whereas the number from the sagittal policy hardly changed the performance (figure 4b). Regarding the sagittal targets, the motor synergies from the sagittal policy affected the performance, whereas those from the horizontal policy did not (figure 4e). Moreover, neither repertory was able to improve the performance in the frontal and upper targets by itself (figures 5 and 6). It is notable that the motor synergy repertory from the horizontal targets alone was not able to reach the upper targets, where only the height was different. Such a difference in the contribution of the motor synergies between the targets suggests that motor synergies are related to specific motor skills.

Focusing on each of the extracted motor synergies, we can observe that they encode task information efficiently. The waveforms of the motor synergies shown in figure 3b,e exhibit changes in trend at t_target = 0.5 s. For example, in figure 3b, joint torque four in synergy zero changes from negative to positive values at t_target. Similarly, in figure 3e, joint torque two in synergy three decreases rapidly at t_target. These trend changes indicate that the movement direction changes at t_target. The motor synergies therefore efficiently encode the temporal information of the task, namely the change in reaching direction over time. This is in contrast to other motor representations proposed in the robotics field, such as dynamic movement primitives (DMP) [19,20], which do not depend on specific tasks. Because the motor synergies encode the specific skills of experts (e.g. humans and trained policies), they are considered suitable for handling a combination of multiple skills.

Not only the motor synergies themselves but also their activation patterns seem to encode the task information. According to figure 3c,f, the amplitudes of the motor synergy activities vary in a cosine-like manner with the target angle. Each motor synergy is strongly activated for a particular target direction, and movements toward intermediate directions can be represented by combinations of these motor synergies. Previous studies report similar activation patterns in human reaching movements [3,25,37], although the types of motor synergies are different. The motor synergy activities thus show regular spatial patterns, and explicitly incorporating these patterns into the design of motor command generators may improve their generalization ability.
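A cosine-like tuning of this kind can be quantified by ordinary least squares, because a·cos(θ − θ0) + b is linear in (cos θ, sin θ, 1). The sketch below fits this model to synthetic activation amplitudes. The eight target directions, the noise level and the function name `fit_cosine_tuning` are assumptions for illustration, not values taken from the study.

```python
import numpy as np

def fit_cosine_tuning(theta, amplitude):
    """Fit amplitude ~ a*cos(theta - theta0) + b by linear least squares."""
    # a*cos(theta - theta0) = (a*cos(theta0))*cos(theta) + (a*sin(theta0))*sin(theta)
    design = np.column_stack([np.cos(theta), np.sin(theta), np.ones_like(theta)])
    (c, s, b), *_ = np.linalg.lstsq(design, amplitude, rcond=None)
    a = np.hypot(c, s)           # tuning depth
    theta0 = np.arctan2(s, c)    # preferred target direction
    return a, theta0, b

# Hypothetical data: eight equally spaced target directions.
theta = np.linspace(0.0, 2.0 * np.pi, 8, endpoint=False)
true_a, true_theta0, true_b = 1.5, np.deg2rad(60.0), 0.2
rng = np.random.default_rng(1)
amplitude = (true_a * np.cos(theta - true_theta0) + true_b
             + 0.01 * rng.normal(size=theta.size))

a, theta0, b = fit_cosine_tuning(theta, amplitude)
```

The fitted `theta0` is the direction at which a synergy is most strongly activated, so synergies with different preferred directions can jointly cover the intermediate directions.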

4.3. Generalization by combination of motor synergy repertories

We demonstrated that the combination of multiple motor synergy repertories can be generalized to new targets, even in new planes. As shown in figures 5 and 6, the combination of four to five motor synergies from each of the horizontal and sagittal policies yields performance comparable to that of reinforcement learning (table 3). In addition, the trajectories are approximately straight lines, similar to those obtained by reinforcement learning (figures 2, 5 and 6). This suggests that the skills of the original policies, such as gravity compensation, are also reproduced. The same is suggested by the fact that straight trajectories started to appear early in the optimization process (figures 5d and 6d), in contrast to reinforcement learning, where complex curved trajectories are generated at the beginning of motor learning (figure 2c,g).

The performance was markedly improved only when the repertories of motor synergies from the horizontal and sagittal policies were combined (figures 5 and 6). For the frontal targets, when only a single repertory was used (i.e. Lh = 0 or Ls = 0), the minimum error was 6.49 cm at Lh = 5. In most of these cases, the trajectories do not seem to have reached the targets (figure 5e,f). With Lh = Ls = 3, the error was reduced to 4.24 cm, although the number of motor synergies was approximately the same as in the above case. The error was further reduced by adding one motor synergy from each of the two repertories, whereas it hardly changed when two motor synergies were added from a single repertory. A similar tendency was observed for the upper targets. These results indicate that the essential factor for generalization is not simply to increase the number of motor synergies but to combine multiple repertories of motor synergies.

Motor synergies, however, were not always effective for different arm models. The motor synergies extracted from the longer-arm model achieved lower performance than those of the original arm model (figures 5a and 7d). This is probably because the motor synergies depend on the inverse kinematics of the arm, which varies between arm models. By contrast, the motor synergies from the shorter-arm model resulted in performance similar to that of the original arm model. Thus, motor synergies may be transferable between different bodies in some cases and enable new bodies to perform the same task. Determining whether a repertory of motor synergies is transferable to another body is not straightforward and remains future work. In both cases, we still observe a tendency similar to that described above: the performance was improved only when both repertories of motor synergies were combined.

Linearity plays an important role in the combination of multiple motor synergy repertories. It makes it possible to extend the current repertory of motor synergies by adding other motor synergies. This study directly demonstrates this through the optimization for the frontal and upper targets (figures 5c and 6c). The motor synergy repertories extracted from the horizontal and sagittal policies enable x–y and x–z directional reaching, respectively. Neither repertory alone can realize y–z directional reaching movements; however, combining them yields a new skill that can perform y–z directional reaching. Whereas a previous study has addressed the extension of motor synergy repertories [38], our study extends the repertories with motor synergies that are extracted from individually learned movements.
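The role of linearity can be sketched with a toy example in which two repertories are stacked into one larger basis. The sketch assumes random synergy vectors and uses a least-squares fit to a known motor command as a stand-in for optimizing the activations on task performance. The repertories `syn_h` and `syn_s`, the command and the helper `best_fit_error` are hypothetical, and the toy ignores the nonlinear arm dynamics entirely.

```python
import numpy as np

DIM = 100 * 7   # flattened movement: 100 time steps x 7 joints (from the text)
rng = np.random.default_rng(2)

# Hypothetical repertories, five synergies each, stored as rows.
syn_h = rng.normal(size=(5, DIM))   # stands in for the horizontal repertory
syn_s = rng.normal(size=(5, DIM))   # stands in for the sagittal repertory

def best_fit_error(synergies, command):
    """Relative residual of the best linear combination of synergy rows."""
    weights, *_ = np.linalg.lstsq(synergies.T, command, rcond=None)
    residual = command - synergies.T @ weights
    return np.linalg.norm(residual) / np.linalg.norm(command)

# A motor command that needs components from both repertories.
command = np.ones(5) @ syn_h + np.ones(5) @ syn_s

err_h = best_fit_error(syn_h, command)                        # horizontal only
err_s = best_fit_error(syn_s, command)                        # sagittal only
err_both = best_fit_error(np.vstack([syn_h, syn_s]), command) # combined
```

Because the representation is linear, extending a repertory is just row concatenation (`np.vstack`). In this toy case each repertory alone leaves a large residual, while the combined repertory represents the command up to numerical precision.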

These findings can also contribute to machine learning and robotics. Our results suggest that a robot can construct new movements by re-using previously acquired skills through motor synergies. Although motor synergies may not work in robots with entirely different kinematics, such re-use can benefit the scalability of intelligent robots. It can therefore improve the efficiency of motor learning, at the cost of the additional effort required for motor synergy extraction.

4.4. Comparison with the findings in neuroscience

Although it is not our goal to describe the actual adaptation process in humans, it is possible to compare our results with past findings in neuroscience. Previous studies have suggested that humans employ the superposition of motor synergies to generate new movements. Ivanenko et al. and Clark et al. reported that approximately five motor synergies were able to represent human locomotion [39,40]. Moreover, Ivanenko et al. reported that additional motor synergies make it possible to represent other types of movements such as straddling-obstacle movements [41]. Similar findings are reported in multi-directional reaching tasks [4]. Although our study did not consider the timing of motor synergy activation, as in the above studies, we computationally reproduced a similar phenomenon in which new movements were generated by extending motor synergy repertories.

Our findings are also closely related to shared synergies, that is, motor synergies commonly observed in movements for different tasks [6,9]. Scano et al. showed that similar motor synergy structures were observed between reaching tasks with various target positions [9]. Israely et al. demonstrated that a set of motor synergies in a reaching task can account for other directional movements [8]. Those studies have shown the existence of shared synergies; by contrast, our study demonstrates a process in which motor synergies are re-used for new targets and thereby come to function as shared synergies. In this study, the motor synergies were not designed in advance to be shared across different types of targets. This is in contrast to other studies that directly designed shared-synergy structures [21,42].

However, there are differences between our method and the human adaptation process. One difference is that we only treated joint-level synergies, whereas human studies usually consider muscle-level synergies. As there are usually more muscles than joints, muscle-level synergies involve a higher dimensionality than that treated here. Muscle-level synergies also involve the redundancy of antagonistic muscles, which does not appear in joint-level synergies. On the other hand, the tasks in this study also have redundancy and nonlinearity due to the inverse kinematics. Moreover, previous studies pointed out that joint-level synergies are highly correlated with muscle-level synergies [43,44]. These points suggest that our results can also be applied to muscle-level synergies of more complex musculoskeletal systems.

Another difference is that in humans, the motor synergies themselves can change while learning new tasks. Motor synergy structures have been reported to change gradually during development or motor skill learning [45–47]. It has also been reported that the number of motor synergies increases in experts who have experienced long-term motor training in specific tasks [48]. By contrast, our method has no mechanism for modifying the motor synergies after extraction. Although the modification of motor synergies is slow and small [11,49], fine-tuning the motor synergy structure may improve the final performance in more complex tasks. In addition, we used spatiotemporal motor synergies, in which motor activities are determined in a feed-forward manner, whereas sensory feedback plays an important role in motor pattern generation in the central nervous system [14,50–53]. Although this study addresses a situation where feed-forward motor commands are sufficient, the extension to sensorimotor feedback remains a topic for future study.

It should be noted that the reaching task in this study is complex even compared with related works that used musculoskeletal systems because we treated a three-dimensional task. Although recent studies have dealt with simulated musculoskeletal systems with many muscles [22,54,55], most studies only handled planar physics, in which the rotational directions of joints are always constant (i.e. perpendicular to the plane). By contrast, in three-dimensional reaching, the rotational axes of joints vary depending on other joint angles; for example, the rotation direction of the elbow joint changes depending on shoulder-joint angles. Owing to such difficulty, there are still only a few studies that dealt with three-dimensional tasks with motor synergies [56].

5. Conclusion

This study reveals that a linear combination of motor synergies extracted from multiple policies can be generalized to new targets that cannot be achieved with either the original policies or single motor synergy repertories. We have demonstrated a new framework that consists of (i) motor learning to acquire individual skills, (ii) motor synergy extraction from the skills, and (iii) generalization to new targets by combining the motor synergies and optimizing their activities. We also found that the motor synergies efficiently encoded the movements achieving the tasks, and the representation ability of the motor synergies could be easily extended by superposition owing to the linearity. New targets in new planes could be realized with errors within 1 cm, even for the new plane that was perpendicular to the learned planes. These computational results suggest the advantages of motor synergies for the generalization of motor skills.

We believe this is the first study that has succeeded in motor synergy generalization for new targets in multi-directional reaching with a full seven-d.f. arm under gravity, a realistic mechanical environment for the motor task. Such generalization was previously difficult to verify through motion analysis of human subjects, who have already acquired motor synergies for various motor tasks. Moreover, our results provide important contributions to the fields of machine learning and robotics. Our findings highlight that the generalization ability of motor synergies supports the use of motor synergy representations in computational learning processes.

Acknowledgements

We would like to thank Editage (http://www.editage.com) for editing and reviewing this manuscript for English language.

Data accessibility

The codes to run simulations are available at https://doi.org/10.5281/zenodo.6395483.

Authors' contributions

K.K.: conceptualization, data curation, formal analysis, funding acquisition, investigation, methodology, project administration, resources, software, validation, visualization, writing—original draft, writing—review and editing; M.H.: conceptualization, funding acquisition, project administration, supervision, writing—original draft, writing—review and editing.

All authors gave final approval for publication and agreed to be held accountable for the work performed therein.

Conflict of interest declaration

We declare we have no competing interests.

Funding

This work was supported by JST, ACT-X grant no. JPMJAX20A8, Japan; and the JSPS Grant-in-Aid for Scientific Research on Innovative Areas (20H05458) Hyper-Adaptability project.

References

- 1. Lee WA. 1984. Neuromotor synergies as a basis for coordinated intentional action. J. Mot. Behav. 16, 135-170. (doi:10.1080/00222895.1984.10735316)

- 2. Santello M, Flanders M, Soechting JF. 1998. Postural hand synergies for tool use. J. Neurosci. 18, 10 105-10 115. (doi:10.1523/JNEUROSCI.18-23-10105.1998)

- 3. D’Avella A, Portone A, Fernandez L, Lacquaniti F. 2006. Control of fast-reaching movements by muscle synergy combinations. J. Neurosci. 26, 7791-7810. (doi:10.1523/JNEUROSCI.0830-06.2006)

- 4. D’Avella A, Lacquaniti F. 2013. Control of reaching movements by muscle synergy combinations. Front. Comput. Neurosci. 7, 42. (doi:10.3389/fncom.2013.00042)

- 5. Takei T, Confais J, Tomatsu S, Oya T, Seki K. 2017. Neural basis for hand muscle synergies in the primate spinal cord. Proc. Natl Acad. Sci. USA 114, 8643-8648. (doi:10.1073/pnas.1704328114)

- 6. D’Avella A, Bizzi E. 2005. Shared and specific muscle synergies in natural motor behaviors. Proc. Natl Acad. Sci. USA 102, 3076-3081. (doi:10.1073/pnas.0500199102)

- 7. Chvatal SA, Ting LH. 2013. Common muscle synergies for balance and walking. Front. Comput. Neurosci. 7, 48. (doi:10.3389/fncom.2013.00048)

- 8. Israely S, Leisman G, Machluf C, Shnitzer T, Carmeli E. 2017. Direction modulation of muscle synergies in a hand-reaching task. IEEE Trans. Neural Syst. Rehabil. Eng. 25, 2427-2440. (doi:10.1109/TNSRE.2017.2769659)

- 9. Scano A, Dardari L, Molteni F, Giberti H, Tosatti LM, D’Avella A. 2019. A comprehensive spatial mapping of muscle synergies in highly variable upper-limb movements of healthy subjects. Front. Physiol. 10, 125. (doi:10.3389/fphys.2019.01231)

- 10. Kargo WJ, Nitz DA. 2003. Early skill learning is expressed through selection and tuning of cortically represented muscle synergies. J. Neurosci. 23, 11 255-11 269. (doi:10.1523/JNEUROSCI.23-35-11255.2003)

- 11. Berger DJ, Gentner R, Edmunds T, Pai DK, D’Avella A. 2013. Differences in adaptation rates after virtual surgeries provide direct evidence for modularity. J. Neurosci. 33, 12 384-12 394. (doi:10.1523/JNEUROSCI.0122-13.2013)

- 12. Cunha T, Vieira PM, Costa K, Santos CP. 2016. Looking for motor synergies in Darwin-OP biped robot. In 2016 IEEE Int. Conf. on Robotics and Automation, Stockholm, Sweden, 16–21 May, pp. 1776-1781. IEEE. (doi:10.1109/ICRA.2016.7487322)

- 13. Hayashibe M, Shimoda S. 2018. Synergetic learning control paradigm for redundant robot to enhance error-energy index. IEEE Trans. Cogn. Dev. Syst. 10, 573-584. (doi:10.1109/TCDS.2017.2697904)

- 14. Todorov E, Jordan MI. 2002. Optimal feedback control as a theory of motor coordination. Nat. Neurosci. 5, 1226-1235. (doi:10.1038/nn963)

- 15. Chhabra M, Jacobs RA. 2006. Properties of synergies arising from a theory of optimal motor behavior. Neural Comput. 18, 2320-2342. (doi:10.1162/neco.2006.18.10.2320)

- 16. Groote FD, Jonkers I, Duysens J. 2014. Task constraints and minimization of muscle effort result in a small number of muscle synergies during gait. Front. Comput. Neurosci. 8, 697. (doi:10.3389/fncom.2014.00115)

- 17. Chai J, Hayashibe M. 2020. Motor synergy development in high-performing deep reinforcement learning algorithms. IEEE Robot. Autom. Lett. 5, 1271-1278. (doi:10.1109/LRA.2020.2968067)

- 18. Han J, Chai J, Hayashibe M. 2021. Synergy emergence in deep reinforcement learning for full-dimensional arm manipulation. IEEE Trans. Med. Robot. Bionics 3, 498-509. (doi:10.1109/TMRB.2021.3056924)

- 19. Schaal S. 2006. Dynamic movement primitives: a framework for motor control in humans and humanoid robotics. In Adaptive motion of animals and machines, pp. 261-280. Tokyo, Japan: Springer.

- 20. Ijspeert AJ, Nakanishi J, Hoffmann H, Pastor P, Schaal S. 2013. Dynamical movement primitives: learning attractor models for motor behaviors. Neural Comput. 25, 328-373. (doi:10.1162/NECO_a_00393)

- 21. Rückert E, D’Avella A. 2013. Learned parametrized dynamic movement primitives with shared synergies for controlling robotic and musculoskeletal systems. Front. Comput. Neurosci. 7, 138. (doi:10.3389/fncom.2013.00138)

- 22. Al Borno M, Hicks JL, Delp SL. 2020. The effects of motor modularity on performance, learning and generalizability in upper-extremity reaching: a computational analysis. J. R. Soc. Interface 17, 20200011. (doi:10.1098/rsif.2020.0011)

- 23. Hagio S, Kouzaki M. 2018. Modularity speeds up motor learning by overcoming mechanical bias in musculoskeletal geometry. J. R. Soc. Interface 15, 20180249. (doi:10.1098/rsif.2018.0249)

- 24. Todorov E, Erez T, Tassa Y. 2012. MuJoCo: a physics engine for model-based control. In 2012 IEEE/RSJ Int. Conf. on Intelligent Robots and Systems, Vilamoura-Algarve, Portugal, 7–12 October, pp. 5026-5033. IEEE. (doi:10.1109/IROS.2012.6386109)

- 25. D’Avella A, Portone A, Lacquaniti F. 2011. Superposition and modulation of muscle synergies for reaching in response to a change in target location. J. Neurophysiol. 106, 2796-2812. (doi:10.1152/jn.00675.2010)

- 26. Schulman J, Wolski F, Dhariwal P, Radford A, Klimov O. 2017. Proximal policy optimization algorithms. (https://arxiv.org/abs/1707.06347).

- 27. Schulman J, Moritz P, Levine S, Jordan MI, Abbeel P. 2016. High-dimensional continuous control using generalized advantage estimation. In 4th Int. Conf. on Learning Representations, San Juan, Puerto Rico, 2–4 May. See https://dblp.org/rec/journals/corr/SchulmanMLJA15.html?view=bibtex.

- 28. Chiovetto E, Berret B, Delis I, Panzeri S, Pozzo T. 2013. Investigating reduction of dimensionality during single-joint elbow movements: a case study on muscle synergies. Front. Comput. Neurosci. 7, 11. (doi:10.3389/fncom.2013.00011)

- 29. Hansen N, Ostermeier A. 2001. Completely derandomized self-adaptation in evolution strategies. Evol. Comput. 9, 159-195. (doi:10.1162/106365601750190398)

- 30. Hansen N, Auger A. 2014. Principled design of continuous stochastic search: from theory to practice. In Theory and principled methods for the design of metaheuristics (eds Y Borenstein, A Moraglio), pp. 145-180. Berlin, Germany: Springer. (doi:10.1007/978-3-642-33206-7_8)

- 31. Guigon E, Baraduc P, Desmurget M. 2007. Computational motor control: redundancy and invariance. J. Neurophysiol. 97, 331-347. (doi:10.1152/jn.00290.2006)

- 32. Geng T, Lee M, Hülse M. 2011. Transferring human grasping synergies to a robot. Mechatronics 21, 272-284. (doi:10.1016/j.mechatronics.2010.11.003)

- 33. Ben Amor H, Kroemer O, Hillenbrand U, Neumann G, Peters J. 2012. Generalization of human grasping for multi-fingered robot hands. In Proc. 2012 IEEE/RSJ Int. Conf. on Intelligent Robots and Systems, Vilamoura-Algarve, Portugal, 7–12 October, pp. 2043-2050. IEEE.

- 34. Furui A, Eto S, Nakagaki K, Shimada K, Nakamura G, Masuda A, Chin T, Tsuji T. 2019. A myoelectric prosthetic hand with muscle synergy-based motion determination and impedance model-based biomimetic control. Sci. Robot. 4, eaaw6339. (doi:10.1126/scirobotics.aaw6339)

- 35. Katyara S, Ficuciello F, Caldwell DG, Siciliano B, Chen F. 2021. Leveraging kernelized synergies on shared subspace for precision grasping and dexterous manipulation. IEEE Trans. Cogn. Dev. Syst. (Early Access) (doi:10.1109/TCDS.2021.3110406)

- 36. Alessandro C, Delis I, Nori F, Panzeri S, Berret B. 2013. Muscle synergies in neuroscience and robotics: from input-space to task-space perspectives. Front. Comput. Neurosci. 7, 43. (doi:10.3389/fncom.2013.00043)

- 37. D’Avella A, Fernandez L, Portone A, Lacquaniti F. 2008. Modulation of phasic and tonic muscle synergies with reaching direction and speed. J. Neurophysiol. 100, 1433-1454. (doi:10.1152/jn.01377.2007)

- 38. Alessandro C, Carbajal JP, D’Avella A. 2012. Synthesis and adaptation of effective motor synergies for the solution of reaching tasks. In From animals to animats, vol. 12 (eds T Ziemke, C Balkenius, J Hallam), pp. 33-43. Berlin, Germany: Springer. (doi:10.1007/978-3-642-33093-3_4)

- 39. Ivanenko YP, Poppele RE, Lacquaniti F. 2004. Five basic muscle activation patterns account for muscle activity during human locomotion. J. Physiol. 556, 267-282. (doi:10.1113/jphysiol.2003.057174)

- 40. Clark DJ, Ting LH, Zajac FE, Neptune RR, Kautz SA. 2010. Merging of healthy motor modules predicts reduced locomotor performance and muscle coordination complexity post-stroke. J. Neurophysiol. 103, 844-857. (doi:10.1152/jn.00825.2009)

- 41. Ivanenko YP, Poppele RE, Lacquaniti F. 2006. Motor control programs and walking. Neuroscience 12, 339-348. (doi:10.1177/1073858406287987)

- 42. Neumann G, Daniel C, Paraschos A, Kupcsik A, Peters J. 2014. Learning modular policies for robotics. Front. Comput. Neurosci. 8, 55. (doi:10.3389/fncom.2014.00062)

- 43. Tagliabue M, Ciancio AL, Brochier T, Eskiizmirliler S, Maier MA. 2015. Differences between kinematic synergies and muscle synergies during two-digit grasping. Front. Hum. Neurosci. 9, 1-17. (doi:10.3389/fnhum.2015.00165)

- 44. Esmaeili S, Karami H, Baniasad M, Shojaeefard M, Farahmand F. 2022. The association between motor modules and movement primitives of gait: a muscle and kinematic synergy study. J. Biomech. 134, 110997. (doi:10.1016/j.jbiomech.2022.110997)

- 45. Gentner R, Gorges S, Weise D, Buttmann M, Classen J. 2010. Encoding of motor skill in the corticomuscular system of musicians. Curr. Biol. 20, 1869-1874. (doi:10.1016/j.cub.2010.09.045)

- 46. Dominici N, et al. 2011. Locomotor primitives in newborn babies and their development. Science 334, 997-999. (doi:10.1126/science.1210617)

- 47. Cheung VCK, Cheung BMF, Zhang JH, Chan ZYS, Ha SCW, Chen CY, Cheung RTH. 2020. Plasticity of muscle synergies through fractionation and merging during development and training of human runners. Nat. Commun. 11, 4356. (doi:10.1038/s41467-020-18210-4)

- 48. Sawers A, Allen JL, Ting LH. 2015. Long-term training modifies the modular structure and organization of walking balance control. J. Neurophysiol. 114, 3359-3373. (doi:10.1152/jn.00758.2015)

- 49. Yang Q, Logan D, Giszter SF. 2019. Motor primitives are determined in early development and are then robustly conserved into adulthood. Proc. Natl Acad. Sci. USA 116, 12 025-12 034. (doi:10.1073/pnas.1821455116)

- 50. Latash ML. 2000. The organization of quick corrections within a two-joint synergy in conditions of unexpected blocking and release of a fast movement. Clin. Neurophysiol. 111, 975-987. (doi:10.1016/S1388-2457(00)00263-7)

- 51. Chvatal SA, Ting LH. 2012. Voluntary and reactive recruitment of locomotor muscle synergies during perturbed walking. J. Neurosci. 32, 12 237-12 250. (doi:10.1523/JNEUROSCI.6344-11.2012)

- 52. Safavynia SA, Ting LH. 2013. Long-latency muscle activity reflects continuous, delayed sensorimotor feedback of task-level and not joint-level error. J. Neurophysiol. 110, 1278-1290. (doi:10.1152/jn.00609.2012)

- 53. Nazifi MM, Yoon HU, Beschorner K, Hur P. 2017. Shared and task-specific muscle synergies during normal walking and slipping. Front. Hum. Neurosci. 11, 40. (doi:10.3389/fncom.2017.00040)

- 54. Chen J, Qiao H. 2020. Muscle-synergies-based neuromuscular control for motion learning and generalization of a musculoskeletal system. IEEE Trans. Syst. Man Cybern. Syst. 51, 3993-4006. (doi:10.1109/TSMC.2020.2966818)

- 55. Kambara H, Takagi A, Shimizu H, Kawase T, Yoshimura N, Schweighofer N, Koike Y. 2021. Computational reproductions of external force field adaption without assuming desired trajectories. Neural Netw. 139, 179-198. (doi:10.1016/j.neunet.2021.01.030)

- 56. Chen J, Zhong S, Kang E, Qiao H. 2019. Realizing human-like manipulation with a musculoskeletal system and biologically inspired control scheme. Neurocomputing 339, 116-129. (doi:10.1016/j.neucom.2018.12.069)
