Abstract

Early diagnosis of breast cancer is an important component of breast cancer therapy. A variety of diagnostic platforms, including image-based techniques, can provide valuable information about breast cancer patients. However, breast abnormalities are not always easy to identify. Mammography, ultrasound, and thermography are among the technologies developed to detect breast cancer. Using image processing and artificial intelligence techniques, computers help radiologists identify breast abnormalities more accurately. This article reviews various approaches to detecting breast cancer using artificial intelligence and image processing, and the authors present an innovative approach for identifying breast cancer using machine learning methods. Compared with current approaches such as CNN, our particle swarm optimized wavelet neural network (PSOWNN) method appears to be relatively superior. Machine learning methods are clearly beneficial in terms of performance, efficiency, and image quality, which are crucial to the most innovative medical applications. When the 905 images processed were compared with images of other illnesses, 98.6% of the disorders were correctly identified. PSOWNNs achieve a specificity of 98.8% and a precision of 98.6%. Despite the high number of women diagnosed with breast cancer, only 830 cases (95.2%) were diagnosed; in other words, 95.2% of the images were correctly classified. PSOWNNs are more accurate than the other machine learning algorithms compared, namely SVM, KNN, and CNN.

1. Introduction

Breast cancer is one of the leading health problems for women worldwide, second only to lung cancer in incidence. According to studies, one in every nine women will be diagnosed with breast cancer. Approximately 2,088,849 cases of breast cancer were diagnosed globally in 2018 (11.6 percent of all cancer diagnoses) [1, 2]. Breast cancer occurs when cells in the breast overgrow, resulting in lumps or tumors. Malignant tumors penetrate their surroundings more readily and are considered cancerous; benign tumors are less likely to do so [3]. Benign masses are usually left untreated if they do not cause discomfort or spread to neighboring tissues. Many types of benign lumps can be found in the breast, including cysts, fibroadenomas, phyllodes tumors, atypical hyperplasias, and fat necrosis. Malignant tumors can be invasive: in the absence of early diagnosis and treatment, these lesions spread and damage the surrounding breast tissue, leading to metastatic breast cancer [4, 5]. Metastatic breast cancer occurs when breast tumor cells spread through the bloodstream or lymphatic system to other organs, such as the liver, brain, bones, or lungs [6]. Breast tissue is mostly made up of glandular (milk-producing) and fatty tissue, as well as lobes and ducts. There are numerous types of breast cancer; ductal and lobular carcinomas are the two most common types of invasive breast cancer [7–9]. In addition to redness, swelling, scaling, and underarm lumps, patients may notice skin irritation, fluid leakage, and breast distortion. The five stages of breast cancer (stages 0 through IV) range from noninvasive cancer to aggressive, metastatic disease. In Asia, there are over 90,000 new cases of the disease every year, and 40,000 people die from it.
The rising death rate is partly due to a lack of awareness, low education levels, and widespread poverty, which delay diagnosis and consultation with physicians. Diagnosing the condition early can significantly increase the chance of survival and open up more effective treatment options. Mammography can reduce mortality by one-third in women over the age of 50 [10, 11].

Because breast cancer cannot be prevented, many manual and image-based examinations are used to identify and diagnose it. For early detection, women are advised to perform breast self-examination so that they become aware of unusual breast changes. Breast cancer screening uses a variety of imaging techniques, including X-ray mammography, ultrasound, MRI, thermography, and CT [12, 13]. Researchers can use these images to examine several breast cancer-related issues. On mammograms, breast cancer may appear as microcalcifications, masses, and architectural distortions, whereas whole-slide images (WSI) can also reveal abnormalities in the nuclei, epithelium, tubules, stroma, and mitotic activity of breast tissue [14]. In the absence of a tumor, architectural distortion is the hardest abnormality to detect on mammography. Expert radiologists often interpret medical breast images, such as mammograms, differently. The American College of Radiology developed the Breast Imaging Reporting and Data System (BI-RADS) to reduce this inconsistency and the subjectivity of radiologists when interpreting and describing the features of mammograms, ultrasound, and magnetic resonance imaging (MRI). In recent years, researchers have pioneered the development of artificial neural networks (ANNs) for breast cancer detection. An important question is how many aspects of the diagnostic procedure such networks can improve [15–17].

Additionally, automated breast cancer detection can mimic the behavior of the human brain, which can make it more effective than manual methods [18, 19]. An ANN-based cancer detection system is loosely modeled on the brain: it learns a mathematical mapping that approximates and solves difficult nonlinear problems. Furthermore, the predictive accuracy of ANN-based cancer diagnosis is better than that of classic statistical detection approaches, owing to the latter's reliance on parameter optimization [12, 20]. The performance of an ANN-based cancer detection method also depends on (a) feature selection, (b) the learning algorithm and its learning rate, (c) the number of hidden layers, (d) the number of nodes in each hidden layer, and (e) the initial weights used during optimization. Feature selection is perhaps the most important factor when developing ANN-based breast cancer detection systems, which rely heavily on the chosen feature subset [21, 22]. Moreover, the input feature subset and the design parameters of an ANN-based breast cancer diagnostic system are interdependent. Therefore, the ANN-based diagnostic process must be optimized jointly over the feature subset and the design parameters [23].
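The abstract names particle swarm optimization (PSO) as the optimizer in PSOWNN, and joint tuning of design parameters is exactly the kind of search PSO performs. The sketch below is a minimal global-best PSO in plain Python, not the authors' implementation: the inertia and acceleration coefficients (`w`, `c1`, `c2`), the swarm size, and the toy quadratic objective are assumptions. In a real pipeline the objective would be something like validation error, with each particle encoding hypothetical design parameters (for example a learning rate and a hidden-layer size rounded to an integer); selecting a feature subset would typically need a binary PSO variant instead.

```python
import random


def pso_minimize(objective, bounds, n_particles=20, n_iters=100,
                 w=0.7, c1=1.5, c2=1.5, seed=0):
    """Minimize `objective` over a box using a basic global-best PSO.

    bounds: list of (low, high) pairs, one per search dimension.
    Returns (best_position, best_value).
    """
    rng = random.Random(seed)
    dim = len(bounds)
    # Random initial positions inside the box; velocities start at zero.
    pos = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]
    pbest_val = [objective(p) for p in pos]
    g = min(range(n_particles), key=lambda i: pbest_val[i])
    gbest, gbest_val = pbest[g][:], pbest_val[g]

    for _ in range(n_iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                # Inertia + pull toward the particle's own best + pull toward the swarm's best.
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])
                             + c2 * r2 * (gbest[d] - pos[i][d]))
                lo, hi = bounds[d]
                pos[i][d] = min(max(pos[i][d] + vel[i][d], lo), hi)  # stay inside the box
            val = objective(pos[i])
            if val < pbest_val[i]:
                pbest[i], pbest_val[i] = pos[i][:], val
                if val < gbest_val:
                    gbest, gbest_val = pos[i][:], val
    return gbest, gbest_val


if __name__ == "__main__":
    # Toy stand-in for a validation-error surface, minimized at (3, -1).
    best, val = pso_minimize(lambda p: (p[0] - 3) ** 2 + (p[1] + 1) ** 2,
                             bounds=[(-10, 10), (-10, 10)])
    print(best, val)
```

The swarm shares only its best position, so each particle balances its own experience against the group's; with `w` below 1 the velocities shrink over time and the swarm settles near the optimum.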

The aim of this research is to reduce uncertainty in order to improve accuracy. Uncertainty has always played a role in decision-making, as the lack of clarity in many problems shows. Sometimes it is impossible to predict all of a system's parameters, which leads to incorrect choices. An artificial neural network takes radiological findings as input and produces a biopsy outcome as output, so it can be used to identify masses and predict their risk of being breast cancer. In mammography, machine learning methods identify abnormalities by classifying suspicious areas. The remainder of this article is organized as follows: Section 2 reviews related work, Section 3 describes breast cancer detection and diagnosis, Section 4 presents the proposed method, and the Conclusion presents a full assessment of the findings.

2. Related Work

Various deep-learning algorithms have been successfully used to build automated digital models in a variety of applications [24–27]. Beura et al. [28] developed a CAD model that combines discrete wavelet transform (DWT) and GLCM features with a back-propagation neural network (BPNN) classifier. Another CAD model combined DWT and GLCM features with a KNN classifier. Liu et al. proposed a model that reduces DWT features using principal component analysis (PCA) and classifies them with a support vector machine (SVM). Basheer et al. [29] suggested DWT- and SVM-based CAD models. The model described in [30] uses linear discriminant analysis (LDA) to extract salient features from discrete curvelet transform (DCT) coefficients, which are then classified with KNN. Muduli et al. [31] built a hybrid classifier from lifting wavelet transform features, an extreme learning machine (ELM), and the moth flame optimization algorithm; it produces better classification results with fewer features. Khan et al. [32] developed a CAD model with a Gabor filter bank optimized by particle swarm optimization (PSO) to extract important features, followed by SVM classification to improve accuracy. Machine-learning models have also been applied to breast cancer classification from ultrasound images: Xiao et al. [33] proposed a model in which a neural network classifies features based on autocorrelation coefficients.

Liu et al. [34] showed that style-based repair is a better way to restore damaged fonts; the font content produced by their CGAN-based repair network was comparable to the correct font content. Zhou et al. [35] described an efficient blind quality assessment approach for SCIs and NSIs based on a dictionary of learned local and global quality criteria. Li et al. [36] created an artificial intelligence technique for data-enhanced encryption at the endpoints and intermediate nodes of autonomous IoT networks. Yang et al. [37] presented a temporal model for page dissemination to reduce the disparity between the predictions of current models and actual code dissemination data. Eslami et al. [38] used attention-based multiscale convolutional neural networks (A+MCNN) to efficiently separate distress items from non-distress items in pavement photos. Liao et al. [39] developed an enhanced faster regions with CNN features (R-CNN) technique for semi-supervised SAR target identification that includes a decoding module and a domain-adaptation module, named FDDA. Liu et al. [40] developed a self-supervised CycleGAN to achieve perception consistency in ultrasound images. Sharifi et al. [41] showed how to distinguish tired from untired feet using digital footprint images. According to Zuo et al. [42], deep-learning technologies have improved optical metrology in recent years. He et al. [43] introduced several feature selection techniques for reducing the dimensionality of data. Ahmadi et al. [44] developed a new classifier based on the wavelet transform and fuzzy logic; ROC curve results show that it can accurately segment brain tumors. Zou et al. [45] used word embedding and deep neural networks to predict m6A from mRNA sequences, and Jin et al. [46] likewise developed word embedding and deep neural networks for m6A prediction from mRNA sequences. Yang et al. [47] sought to elucidate the mechanism behind the Na+/Ca2+-triggered movement of soy husk polysaccharide (SHP) in the mucus layer; Na+ had minimal influence on the viscosity of the polysaccharides, whereas Ca2+ increased it.

Chang et al. [48] proposed a multifeature extraction model that combines speckle-emphasis-based features with an SVM classifier and provides the best results. Zhou et al. [49] proposed a model that incorporates curvelet, shearlet, contourlet, wavelet, and gray-level co-occurrence matrix (GLCM) features. Liu et al. [50] proposed an interval-uncertainty-based strategy for optimal breast cancer detection, in which indeterminacy is accounted for using interval analysis, so that the approach is guaranteed to provide acceptable results regardless of alterations in the imaging system. Its core is an interval-based Laplacian of Gaussian filter that simulates intensity uncertainties. To demonstrate the method's effectiveness, it was applied to the MIAS database and compared with several established methods.
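To make the interval idea in [50] concrete, here is a minimal sketch of our own (not Liu et al.'s filter) that samples a Laplacian of Gaussian kernel and applies it to an image whose pixel intensities are known only as intervals [lo, hi]. It uses standard interval arithmetic: a positive weight pairs with the same bound and a negative weight with the opposite bound, so the output interval contains every filter response consistent with the inputs. The kernel radius, σ, and the ±0.5 intensity uncertainty in the usage example are assumptions.

```python
import math


def log_kernel(sigma=1.0, radius=2):
    """Sampled Laplacian-of-Gaussian kernel, shifted so its weights sum to zero."""
    k = []
    for y in range(-radius, radius + 1):
        row = []
        for x in range(-radius, radius + 1):
            r2 = (x * x + y * y) / (2.0 * sigma * sigma)
            row.append(-(1.0 - r2) * math.exp(-r2) / (math.pi * sigma ** 4))
        k.append(row)
    # Zero-sum kernel: flat regions give (numerically) zero response.
    mean = sum(map(sum, k)) / len(k) ** 2
    return [[v - mean for v in row] for row in k]


def interval_filter(lo_img, hi_img, kernel):
    """Filter an interval-valued image, pixel (i, j) lying in [lo_img[i][j], hi_img[i][j]].

    For each weight wt: wt * [lo, hi] = [wt*lo, wt*hi] if wt >= 0, else [wt*hi, wt*lo].
    Only 'valid' positions (kernel fully inside the image) are computed; the LoG kernel is
    symmetric, so correlation and convolution coincide here.
    Returns (out_lo, out_hi).
    """
    kh, kw = len(kernel), len(kernel[0])
    h, w = len(lo_img), len(lo_img[0])
    out_lo, out_hi = [], []
    for i in range(h - kh + 1):
        row_lo, row_hi = [], []
        for j in range(w - kw + 1):
            a = b = 0.0
            for u in range(kh):
                for v in range(kw):
                    wt = kernel[u][v]
                    lo, hi = lo_img[i + u][j + v], hi_img[i + u][j + v]
                    if wt >= 0:
                        a += wt * lo
                        b += wt * hi
                    else:
                        a += wt * hi
                        b += wt * lo
            row_lo.append(a)
            row_hi.append(b)
        out_lo.append(row_lo)
        out_hi.append(row_hi)
    return out_lo, out_hi


if __name__ == "__main__":
    k = log_kernel(sigma=1.0, radius=2)
    img = [[10.0] * 7 for _ in range(7)]
    delta = 0.5  # assumed intensity uncertainty
    lo, hi = interval_filter([[v - delta for v in r] for r in img],
                             [[v + delta for v in r] for r in img], k)
    print(lo[0][0], hi[0][0])
```

For a uniform uncertainty of ±δ, the output width is 2δ times the sum of the absolute kernel weights, so the bound widens with the strength of the filter rather than with the image content.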

Zuluaga et al. [51] proposed a CNN-based method for detecting breast cancer, which was enhanced by BreastNet. Before the image data were fed into the model, an expansion (augmentation) approach was used to enlarge the image data, and the hypercolumn technique was used to build an accurate classification system. To demonstrate the system's increased accuracy, the findings were compared with those of several recent approaches. Carvalho et al. [52] employed a different method to detect breast cancer in histopathology images: the authors used phylogenetic diversity indices to build models and classify histopathological breast images, and compared the approach with a variety of other recent methods to test its accuracy. Mahmood et al. [53] proposed a novel convolutional neural network (ConvNet) that uses deep learning to identify breast cancer tissue with dramatically lower human error. For identifying mammographic breast masses, the technique obtained a training accuracy of 0.98, an AUC of 0.99, a sensitivity of 0.99, and a test accuracy of 0.97. Zhang et al. [54] discussed the challenges and opportunities posed by different identification and detection methods, such as nucleic acid amplification, optical point-of-care testing (POCT), electrochemistry, lateral flow assays, microfluidics, enzyme-linked immunosorbent assays, and microarrays. Jiang et al. [55] focused on the tooth surface of the entire crown and showed that robot-assisted trajectory planning improves efficiency and relieves the burden of manual tooth preparation while staying within the margin of error, demonstrating its practicability and validity. Qin et al. [56] proposed a novel monitoring technique for robotic drilling noise based on focused velocity synchronous linear chirplet transforms. Mobasheri et al. [57] reviewed important immunological findings in COVID-19 and recent reports of autoimmune illnesses related to the condition.
Ala et al. [58] developed an approach based on the whale optimization algorithm (WOA), using a Pareto archive and the NSGA-II algorithm, to solve an appointment scheduling model with a simulation technique. Liu et al. [59] proposed an adaptive secondary sampling method based on machine learning for multiphase drive systems. Zheng et al. [60] chose image classification as the research task for examining how meta-learning rapidly acquires knowledge from a limited number of sample images. Liu et al. [61] developed an image stitching algorithm based on feature point pair purification. Kaur et al. [62] used a deep convolutional neural network (DCNN) and fuzzy support vector machines to develop two-class and three-class models for breast cancer detection and classification. The models were built from mammograms in DDSM and the Curated Breast Imaging Subset of DDSM (CBIS-DDSM) and evaluated using accuracy, sensitivity, AUC, F1-score, and the confusion matrix. With the DCNN and fuzzy SVM, the 3-class model reached an accuracy of 81.43 percent. With a 2-layer serial DCNN with fuzzy SVM, the first layer achieved accuracies of 99.61 percent and 100.00 percent, respectively, in binary prediction. Table 1 summarizes the related work.
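Accuracy, sensitivity, specificity, precision, F1-score, and AUC recur throughout this section and Table 1. The sketch below shows how each is computed from confusion-matrix counts and classifier scores; the function names are our own, and the counts in the usage example are made-up numbers, not results from any cited study.

```python
def binary_metrics(tp, fp, tn, fn):
    """Common binary-classification metrics from confusion-matrix counts."""
    sensitivity = tp / (tp + fn)   # recall, true positive rate
    specificity = tn / (tn + fp)   # true negative rate
    precision = tp / (tp + fp)     # positive predictive value
    return {
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": precision,
        "f1": 2 * precision * sensitivity / (precision + sensitivity),
    }


def auc(pos_scores, neg_scores):
    """ROC AUC as the probability that a random positive outscores a random negative.

    Ties count as one half (the Mann-Whitney formulation).
    """
    wins = 0.0
    for p in pos_scores:
        for n in neg_scores:
            wins += 1.0 if p > n else (0.5 if p == n else 0.0)
    return wins / (len(pos_scores) * len(neg_scores))


if __name__ == "__main__":
    # Hypothetical counts, for illustration only.
    print(binary_metrics(tp=90, fp=5, tn=95, fn=10))
    print(auc([0.9, 0.8, 0.4], [0.1, 0.5, 0.2]))
```

Precision and specificity answer different questions (how trustworthy a positive call is versus how well negatives are rejected), which is why papers usually report several of these numbers together.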

Table 1.

Summary of related work.

| Author | Year | Type | Network | Result | Advantages | Disadvantages |
|---|---|---|---|---|---|---|
| Dong et al. [22] | 2022 | Breast cancer diagnosis and classification | Random forest and regression tree | Machine learning techniques such as CART and random forests, coupled with geographical methodologies, provide a viable alternative for future inequality studies | (i) Low complexity (ii) High accuracy | (i) Possible overfitting (ii) Used classic feature extraction (iii) Low robustness |
| Guha et al. [19] | 2022 | Breast cancer risk factors | SEER-Medicare analysis | The incidence of AF in women after a breast cancer diagnosis is much higher. AF is strongly linked to a higher stage of breast cancer at diagnosis. Women newly diagnosed with breast cancer who develop AF have an increased risk of cardiovascular death but not of breast-cancer-related death | (i) Ability of risk assessment (ii) Technical assessment | (i) Needs feature extraction (ii) Unable to diagnose the patient |
| Chamieh et al. [63] | 2022 | Breast cancer diagnosis using fine-needle aspiration cytology | Begg and Greenes method | Irrespective of the recommended technique, the FNAC test's specificity was always greater than its sensitivity. For all approaches, the probability ratios were positive. Both positive and negative yields were high, demonstrating the test's exact discriminating qualities | (i) Technical assessment method (ii) Low complexity | (i) Unable to diagnose illness type (ii) Limited dataset |
| Thangarajan et al. [64] | 2022 | Breast cancer biomarkers validated in plasma | BC gene expression profiling | Methylation status of SOSTDC1, DACT2, and WIF1 can distinguish BC from benign and control conditions with 100% sensitivity and 91% specificity. Therefore, SOSTDC1, DACT2, and WIF1 may be used as a supplemental diagnostic tool to distinguish noninvasive and invasive breast cancer from benign breast conditions and healthy individuals | (i) Using biomarkers instead of mathematical features | (i) Lower sensitivity (ii) Unable to diagnose illness type |
| Chakravarthy et al. [65] | 2022 | Diagnosis of breast cancer | Multideep CNN | By fusing deep features, the highest classification accuracy among state-of-the-art frameworks was achieved for both datasets (97.93 percent for MIAS and 96.646 percent for INbreast). Applying PCA to the combined deep features did not improve classification performance but shortened execution time, lowering the computing cost | (i) Low complexity (ii) High accuracy (iii) Technical assessment method | (i) Possible overfitting (ii) Needs feature extraction (iii) Lower sensitivity (iv) Low robustness |
| Wang et al. [66] | 2022 | Forecasting axillary lymph node metastasis of breast cancer | CNN | The T2WI sequence outperformed the other three sequences in the testing set, with accuracy and AUC of 0.933/0.989. Compared with T1WI, which has accuracy and AUC of 0.691/0.806, the increase is substantial | (i) Ability of risk assessment (ii) Technical assessment | (i) Unable to diagnose the patient (ii) Used classic feature extraction (iii) Limited dataset (iv) Lower sensitivity |
| Melekoodappattu et al. [67] | 2022 | Breast cancer detection | CNN and texture feature-based approach | The ensemble method achieved 97.8% specificity and 98.6% accuracy for MIAS and 98.3% and 97.9% for DDSM. Experimental data indicate that the combination strategy improves the measurement metrics independently for each phase | (i) Low complexity (ii) High accuracy | (i) Unable to diagnose illness type |
| Gonçalves et al. [68] | 2022 | Breast cancer detection | CNNs | VGG-16 produced F1-scores greater than 0.90 for all three networks, an increase from 0.66 to 0.92. Furthermore, compared with earlier research, the F1-score of ResNet-50 improved from 0.83 to 0.90 | (i) Comparative study (ii) Used high-rank methods | (i) Unable to diagnose illness type (ii) High complexity |

Li et al. [69] used a wavelet for multiscale decomposition of the source and fused images into high-frequency and low-frequency components, and a deep convolutional neural network to learn the direct mapping between the high- and low-frequency components of the source and fused images, yielding clearer and more complete fused images. Their experiments showed that the approach produces good fused images that are superior, in both visual and objective assessments, to those of certain sophisticated image fusion algorithms. Zhang et al. [70] used a Gaussian pyramid to improve the basic ORB (oriented FAST and rotated BRIEF) approach. Their experiments showed that the pyramid-based method is invariant and resilient to scale and rotation changes, achieves high registration accuracy, and stitches images about ten times faster than SIFT. Shan et al. [71] employed a 2D/3D multimodal medical image registration technique based on normalized cross-correlation; the results demonstrate that a multiresolution strategy improves registration accuracy and efficiency by compensating for the inefficiency of the normalized cross-correlation algorithm. Xu et al. [72] proposed a technique for segmenting and classifying tongue images using a multitask learning (MTL) algorithm, and their experiments show that the combined strategy outperforms currently available tongue characterization techniques. Ahmadi et al. [73] used a CNN to segment tumors associated with seven types of brain disorders: glioma, meningioma, Alzheimer's, Alzheimer's plus, Pick, sarcoma, and Huntington's. Sadeghipour et al. [74] developed a new method that combines the firefly algorithm with an intelligent system to detect breast cancer.

Zhang et al. [75] explored a way to query clinical pathways in e-healthcare systems while preserving privacy. Sadeghipour et al. [76] designed a new system for diagnosing diabetes based on the XCSLA system. Ahmadi et al. [77] introduced a technique called QAIS-DSNN for segmenting and distinguishing brain malignancies in MRI images; simulation results on the BraTS2018 dataset indicate that it is 98.21 percent accurate. Chen et al. [78] developed a label limited convolutional factor analysis (LCCFA) model that combines factor analysis (FA), convolutional operations, and supervised learning; it outperforms other relevant models in classification accuracy for small samples on multiple benchmark datasets and on measured radar high-resolution range profile data. Rezaei et al. [79] created a data-driven technique for segmenting hand parts in depth maps that requires no additional work to obtain segmentation labels. Sadeghipour et al. [80] created a clinical system for diagnosing obstructive sleep apnea with the assistance of the XCSR classifier. Dorosti et al. [81] developed a generic model to determine the link between several characteristics and the location and size of a GC tumor. Abadi et al. [82] proposed a novel hybrid salp swarm algorithm and genetic algorithm (HSSAGA) model for scheduling and assigning nurses; results on test functions indicate that it outperforms state-of-the-art techniques. Zhou and Arandian [83] proposed a computer-aided technique for skin cancer detection that combines deep learning with the Wildebeest Herd Optimization (WHO) algorithm: features are first extracted with an Inception convolutional neural network, and the WHO algorithm then selects the relevant features to reduce the time complexity of the analysis. Finally, the complete diagnostic system was compared with other methods to determine its efficacy.

Davoudi et al. [84] examined the effect of statins on the severity of COVID-19 infection in patients who had been taking statins prior to infection. Hassantabar et al. [85] examined the effect of statins on the severity of COVID-19 infection in patients who had been taking statins prior to infection. Yu et al. [86] combined differential expression analysis with the biological relevance of genes from gene regulatory networks to obtain weighted differentially expressed genes for breast cancer detection; their binary classifier made decent predictions for unknown samples, and the test results confirmed the efficacy of the proposed methods. Thilagaraj et al. [87] proposed a convolutional neural network based on an artificial fish school technique. The breast cancer image dataset came from cancer imaging archives. In the preprocessing phase, the breast cancer images were filtered with a Wiener filter before classification. By determining the number of epochs and training images for the deep CNN, the optimization approach helped lower the error rate and increase performance efficiency.
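The multiscale wavelet decomposition used by Li et al. [69], like the DWT features in several of the CAD models above, starts from a single-level 2D transform. This sketch implements the simplest case, the orthonormal Haar wavelet, on an image with even height and width; it is an illustration only, and practical systems usually rely on a library such as PyWavelets and other wavelet families. Subband naming (LH versus HL) differs between sources; here the first letter refers to the filter applied along rows.

```python
def haar_dwt2(img):
    """One-level orthonormal 2D Haar DWT of an image with even height and width.

    Returns (LL, LH, HL, HH), each half the size of the input:
      LL: low-pass along rows and columns (approximation / low-frequency image)
      LH: low-pass along rows, high-pass down columns
      HL: high-pass along rows, low-pass down columns
      HH: high-pass in both directions
    """
    h, w = len(img), len(img[0])
    if h % 2 or w % 2:
        raise ValueError("image height and width must be even")
    LL, LH, HL, HH = [], [], [], []
    for i in range(0, h, 2):
        rows = ([], [], [], [])
        for j in range(0, w, 2):
            a, b = img[i][j], img[i][j + 1]          # top pair
            c, d = img[i + 1][j], img[i + 1][j + 1]  # bottom pair
            # Row and column filters each scale by 1/sqrt(2), giving an overall factor of 1/2.
            rows[0].append((a + b + c + d) / 2.0)
            rows[1].append((a + b - c - d) / 2.0)
            rows[2].append((a - b + c - d) / 2.0)
            rows[3].append((a - b - c + d) / 2.0)
        for band, row in zip((LL, LH, HL, HH), rows):
            band.append(row)
    return LL, LH, HL, HH


if __name__ == "__main__":
    image = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]
    LL, LH, HL, HH = haar_dwt2(image)
    print(LL, LH, HL, HH)
    # A second decomposition level is obtained by transforming LL again.
    print(haar_dwt2(LL))
```

Because the transform is orthonormal, the total energy (sum of squared values) is preserved across the four subbands, and a flat image produces all-zero detail subbands.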

3. Breast Cancer Detection and Diagnosis

Computer-aided detection and diagnosis of breast cancer rely largely on intermediate steps: segmentation (identifying breast lesions), feature extraction, and finally classification of the detected regions. Breast lesions can be detected either by delineating a suspicious region pixel by pixel in a breast image or by drawing a bounding box around the suspicious area. Alternatively, cancer can be detected by processing whole breast images instead of first extracting suspicious regions and then classifying them, which would incur an additional cost. To classify the lesions under investigation, features are extracted from the ROI or from the whole image, and a classification algorithm (ML or DL) uses these features to classify the samples.

3.1. Features Learning

Images depict many aspects of the lesions studied in this work. Segmenting and classifying images requires knowledge of the most informative and accurate features. Because benign and malignant lesions differ in many ways, a large and complex set of features is typically extracted. Selecting the right subset of features is therefore crucial, since too many features can degrade a classifier's performance and increase its complexity. To segment and classify breast lesions, many kinds of handcrafted features, such as texture, size, shape, intensity, and margins, were previously extracted from breast images [12].
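The handcrafted size, shape, and intensity features listed above can be illustrated for a segmented lesion. This sketch assumes a grayscale image and a binary lesion mask given as nested lists; the boundary-pixel perimeter and the circularity formula 4πA/P² are simple illustrative choices of ours, not the features used in [12].

```python
import math


def region_features(img, mask):
    """Simple handcrafted features of the region where mask is True."""
    h, w = len(img), len(img[0])
    pixels = [img[r][c] for r in range(h) for c in range(w) if mask[r][c]]
    area = len(pixels)
    if area == 0:
        raise ValueError("empty mask")
    mean = sum(pixels) / area
    std = math.sqrt(sum((p - mean) ** 2 for p in pixels) / area)

    def inside(r, c):
        return 0 <= r < h and 0 <= c < w and mask[r][c]

    # Perimeter: region pixels with at least one 4-neighbour outside the region.
    neighbours = ((1, 0), (-1, 0), (0, 1), (0, -1))
    perimeter = sum(1 for r in range(h) for c in range(w)
                    if mask[r][c] and not all(inside(r + dr, c + dc) for dr, dc in neighbours))
    # Circularity: higher for compact shapes, lower for elongated or irregular ones.
    # With this crude pixel-count perimeter it is only a relative measure and can exceed 1.
    circularity = 4 * math.pi * area / perimeter ** 2
    return {"area": area, "mean_intensity": mean, "std_intensity": std,
            "perimeter": perimeter, "circularity": circularity}


if __name__ == "__main__":
    image = [[float(r)] * 6 for r in range(6)]
    lesion = [[1 <= r <= 4 and 1 <= c <= 4 for c in range(6)] for r in range(6)]
    print(region_features(image, lesion))
```

Margin descriptors (for example, how spiculated a boundary is) would be built on the same boundary pixels but need more elaborate contour analysis.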

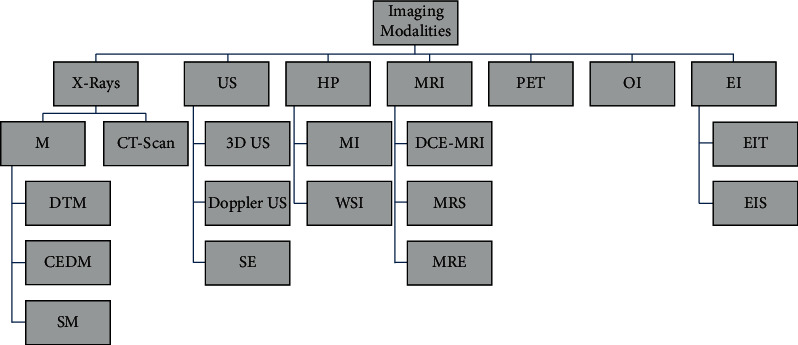

Deep learning has considerably improved the whole feature extraction process, thereby improving the performance of the subsequent stages (e.g., detection and classification). Deep features derived from a convolutional network trained on a large dataset can perform discriminative tasks far better than conventional approaches based on hand-engineered features or typical machine learning methods (see Figure 1).

Figure 1.

Modalities of imaging.

4. Proposed Method

The flow chart of the structure of the CNN used can be seen in Section 4.7. Our approach to improving Shafer's hypothesis combines several methods, as shown in the flow chart. Machine learning and neural networks are used to classify and diagnose tumors; for this purpose, CNN deep neural networks are individually trained and tested. This article discusses two feature extraction strategies: a CNN extracts deep features, and an artificial neural network is trained on gray-level co-occurrence matrix (GLCM) features extracted from the image. In the subsequent stages, a classifier determines the probability of each class.
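The text does not say how Shafer's hypothesis enters the pipeline. If it refers to Dempster-Shafer evidence theory, one plausible way to fuse the class evidence from the CNN and the GLCM-based ANN is Dempster's rule of combination, sketched below. The frame {benign, malignant}, the mass values, and the choice to assign some mass to the whole frame (to express ignorance) are illustrative assumptions, not the authors' settings.

```python
from itertools import product


def dempster_combine(m1, m2):
    """Combine two mass functions with Dempster's rule.

    Each mass function maps frozenset hypotheses to masses summing to 1.
    Returns (combined_masses, conflict), where conflict is the mass that fell
    on empty intersections and is renormalized away.
    """
    combined, conflict = {}, 0.0
    for (a, ma), (b, mb) in product(m1.items(), m2.items()):
        inter = a & b
        if inter:
            combined[inter] = combined.get(inter, 0.0) + ma * mb
        else:
            conflict += ma * mb
    if conflict >= 1.0:
        raise ValueError("total conflict: the sources cannot be combined")
    return {h: v / (1.0 - conflict) for h, v in combined.items()}, conflict


if __name__ == "__main__":
    B, M = frozenset({"benign"}), frozenset({"malignant"})
    either = B | M  # mass on the whole frame = "don't know"
    cnn = {M: 0.7, B: 0.2, either: 0.1}   # hypothetical CNN evidence
    ann = {M: 0.6, B: 0.3, either: 0.1}   # hypothetical GLCM-ANN evidence
    fused, k = dempster_combine(cnn, ann)
    print(fused, k)
```

With these made-up inputs the conflict is 0.33, and after renormalization the fused mass on "malignant" rises to about 0.82, higher than either source alone, because the two sources agree.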

4.1. Dataset

This study used screening mammograms from the Mini-MIAS database as input images. Data were gathered directly from hospitals and physicians, as well as from public sources, and are publicly available. The images are 256 × 256 pixels in PNG format. A total of 1824 images were used for analysis and simulation: 80% for training and 20% for validation.
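Section 4.1 does not say whether the 80/20 split was stratified. A stratified split, sketched below, keeps the benign/malignant ratio the same in both subsets, which matters when classes are imbalanced. The function name and seed are our own, and the class counts in the usage example (1000 benign, 824 malignant) are hypothetical; they simply add up to the 1824 images reported.

```python
import random


def stratified_split(labels, train_frac=0.8, seed=42):
    """Return (train_indices, val_indices), splitting each class separately."""
    rng = random.Random(seed)
    by_class = {}
    for idx, y in enumerate(labels):
        by_class.setdefault(y, []).append(idx)
    train, val = [], []
    for y in sorted(by_class):
        idxs = by_class[y]
        rng.shuffle(idxs)                      # random order within the class
        n_train = int(round(len(idxs) * train_frac))
        train += idxs[:n_train]
        val += idxs[n_train:]
    return sorted(train), sorted(val)


if __name__ == "__main__":
    labels = ["benign"] * 1000 + ["malignant"] * 824   # hypothetical class counts
    train_idx, val_idx = stratified_split(labels)
    print(len(train_idx), len(val_idx))
```

With these counts the split gives 1459 training and 365 validation images; a fixed seed makes the partition reproducible across runs.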

4.2. Analyzing Outliers and Reducing Dimensions

Principal component analysis (PCA) was used to reduce the dimensionality of the data. To evaluate the effect of dimensionality reduction on prediction accuracy and to choose an appropriate number of principal components, several machine learning models were fitted repeatedly to the transformed data, each time with a predefined number of components, using the Classification Learner app in the Statistics and Machine Learning Toolbox. PCA of the data was also used to identify the “base” model. To remove outliers, PCA was performed independently on benign and malignant tumors.
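The paper performed PCA with MATLAB tooling; the sketch below shows the same core steps in plain Python (mean-centering, sample covariance, and leading eigenvectors by power iteration with deflation) and adds one common outlier score, the reconstruction error, that is, each sample's distance from the principal subspace. The paper does not state its outlier criterion, so reconstruction error and any threshold on it are assumptions. Following the text, it would be run separately on the benign and malignant feature vectors.

```python
import math
import random


def pca(X, k, iters=1000, seed=0):
    """Return (mean, components, eigenvalues) for the top-k principal components of X.

    X is a list of samples (lists of floats). Components are found by power
    iteration on the sample covariance matrix, deflating after each one.
    """
    n, d = len(X), len(X[0])
    mean = [sum(row[j] for row in X) / n for j in range(d)]
    Xc = [[row[j] - mean[j] for j in range(d)] for row in X]
    C = [[sum(r[a] * r[b] for r in Xc) / (n - 1) for b in range(d)] for a in range(d)]
    rng = random.Random(seed)
    comps, vals = [], []
    for _ in range(k):
        v = [rng.uniform(0.1, 1.0) for _ in range(d)]
        norm = math.sqrt(sum(x * x for x in v))
        v = [x / norm for x in v]
        for _ in range(iters):
            wv = [sum(C[a][b] * v[b] for b in range(d)) for a in range(d)]
            norm = math.sqrt(sum(x * x for x in wv))
            if norm == 0.0:  # no variance left in the remaining directions
                break
            v = [x / norm for x in wv]
        lam = sum(v[a] * sum(C[a][b] * v[b] for b in range(d)) for a in range(d))
        comps.append(v)
        vals.append(lam)
        # Deflation: remove this component so the next pass finds the next one.
        C = [[C[a][b] - lam * v[a] * v[b] for b in range(d)] for a in range(d)]
    return mean, comps, vals


def reconstruction_errors(X, mean, comps):
    """Distance of each sample from the subspace spanned by comps (an outlier score)."""
    errs = []
    for row in X:
        xc = [x - m for x, m in zip(row, mean)]
        resid = xc[:]
        for v in comps:
            proj = sum(a * b for a, b in zip(xc, v))
            resid = [r - proj * vi for r, vi in zip(resid, v)]
        errs.append(math.sqrt(sum(r * r for r in resid)))
    return errs


if __name__ == "__main__":
    data = [[float(t), 2.0 * t] for t in range(-5, 6)] + [[0.0, 8.0]]  # last point is off the line
    mean, comps, vals = pca(data, 1)
    print(reconstruction_errors(data, mean, comps))
```

Samples whose error is much larger than the rest (here, the point off the line) are outlier candidates; a cutoff such as a multiple of the median error would have to be chosen for real data.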

4.3. ROI Preprocessing

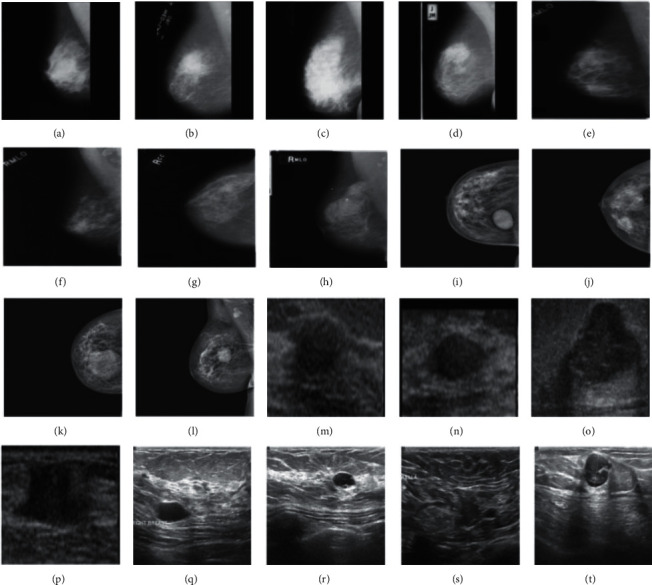

The mammograms in the database contain a variety of undesirable visual features, noise, and artifacts, resulting in low-quality images that inevitably lower classification accuracy. We therefore manually cropped each MIAS image to obtain the region of interest (ROI) containing the suspected abnormality, using the center and radius parameters provided by the radiologist in the dataset (Figure 1). The extracted ROIs are shown in Figure 2. Because ground-truth data were lacking for the INbreast, BUS-1, and BUS-2 datasets, their full images were resized instead. Figure 2 shows samples from the MIAS, DDSM, INbreast, BUS-1, and BUS-2 datasets [69].

Figure 2.

Sample benign images from different datasets: MIAS ((a) and (b)), DDSM ((e) and (f)), INbreast ((i) and (j)), BUS-1 ((m) and (n)), and BUS-2 ((q) and (r)). Sample malignant images from different datasets: MIAS ((c) and (d)), DDSM ((g) and (h)), INbreast ((k) and (l)), BUS-1 ((o) and (p)), and BUS-2 ((s) and (t)).
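Cropping an ROI from a center and radius, as described for MIAS above, reduces to clipped slicing. The sketch below is a hypothetical helper, not the authors' code. MIAS annotations are often described as measuring y from the bottom edge of the image, so an `origin_bottom_left` flag is provided; check the dataset's own notes before relying on either convention. Since Section 4.1 states that the images were used at 256 × 256 pixels, annotations made on a larger original would also need rescaling; `scale_annotation` is likewise hypothetical.

```python
def crop_roi(img, cx, cy, radius, origin_bottom_left=False):
    """Crop the square ROI of side 2*radius centred on (cx, cy), clipped to the image.

    img is a list of rows with row 0 at the top; cx is a column index. If
    origin_bottom_left is True, cy is measured upward from the bottom row and is
    converted to a row index first.
    """
    h, w = len(img), len(img[0])
    row_c = (h - 1 - cy) if origin_bottom_left else cy
    top, bottom = max(0, row_c - radius), min(h, row_c + radius)
    left, right = max(0, cx - radius), min(w, cx + radius)
    return [row[left:right] for row in img[top:bottom]]


def scale_annotation(cx, cy, radius, factor):
    """Rescale an annotation after the image was resized by `factor` (new_size / old_size)."""
    return round(cx * factor), round(cy * factor), max(1, round(radius * factor))


if __name__ == "__main__":
    image = [[r * 10 + c for c in range(10)] for r in range(10)]
    print(crop_roi(image, cx=5, cy=5, radius=2))
    print(scale_annotation(512, 400, 100, 0.25))
```

Clipping at the borders means an abnormality near the image edge yields a smaller, non-square ROI, which would then need padding or resizing before it is fed to a fixed-size classifier.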

4.4. Feature Extraction

Feature extraction is used to reduce the resources needed to describe a large amount of data accurately. When analyzing complex data, the number of variables involved is one of the most significant challenges: with many variables, the training examples require a large amount of memory and storage. Feature extraction refers to a wide range of methods for constructing compact descriptions of the data that still allow problems to be solved with high precision. In image analysis, the idea is to represent the fundamental components of an image distinctively. The fractal approach was used to generate gray-level vectors as feature vectors. Image features are then created by statistical analysis of the intensities of pixels at defined positions relative to each other in the image; these statistics depend on how frequently each combination of pixel intensities occurs. In this study, features are extracted with the GLCM model. Feature selection was then used to reduce the dimensionality and identify the critical attributes that best separate the classes when handling large amounts of input data [48]. The GLCM approach was combined with covariance analysis to extract eigenvalues and reduce the size of the image representation. The fractal approach requires input images of identical size, and each image is treated both as a two-dimensional matrix and as a single vector. Images must be grayscale and of a fixed resolution. Each image of N × N pixels is reshaped into a column vector of length N², and the M training images are stacked into an N² × M matrix. The average image is computed and subtracted from each original image to center the data. The covariance matrix of the centered images is then calculated, along with its eigenvalues and eigenvectors.
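The GLCM statistics described above, namely how often each pair of gray levels occurs at a given offset, can be sketched in a few lines. This is a plain-Python illustration, not the authors' feature extractor: the offset (one pixel to the right), the number of gray levels, symmetric counting, and the four Haralick-style features are assumptions. Libraries such as scikit-image provide optimized equivalents (`graycomatrix`, `graycoprops`).

```python
import math


def quantize(img, levels=8, max_value=255):
    """Map intensities in [0, max_value] to integer gray levels in [0, levels)."""
    return [[min(levels - 1, int(v * levels / (max_value + 1))) for v in row] for row in img]


def glcm(img, dx=1, dy=0, levels=8, symmetric=True):
    """Normalized gray-level co-occurrence matrix for the offset (dy, dx).

    P[i][j] is the relative frequency with which a pixel of level i has a
    neighbour of level j at the given offset.
    """
    P = [[0.0] * levels for _ in range(levels)]
    h, w = len(img), len(img[0])
    for r in range(h):
        for c in range(w):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < h and 0 <= c2 < w:
                i, j = img[r][c], img[r2][c2]
                P[i][j] += 1
                if symmetric:          # count the pair in both directions
                    P[j][i] += 1
    total = sum(map(sum, P))
    return [[v / total for v in row] for row in P] if total else P


def glcm_features(P):
    """A few Haralick-style texture features of a normalized GLCM."""
    n = len(P)
    cells = [(i, j, P[i][j]) for i in range(n) for j in range(n)]
    return {
        "contrast": sum(p * (i - j) ** 2 for i, j, p in cells),
        "asm": sum(p * p for _, _, p in cells),  # angular second moment
        "homogeneity": sum(p / (1 + (i - j) ** 2) for i, j, p in cells),
        "entropy": -sum(p * math.log2(p) for _, _, p in cells if p > 0),
    }


if __name__ == "__main__":
    roi = [[0, 0, 1, 1], [0, 0, 1, 1], [0, 2, 2, 2], [2, 2, 3, 3]]
    print(glcm_features(glcm(roi, levels=4)))
```

Smooth tissue concentrates the matrix near its diagonal (low contrast, high homogeneity), whereas heterogeneous texture spreads it out; some libraries report the square root of the ASM as "energy".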

4.5. Concept of Convolutional Neural Network (CNN)

The convolutional neural network (CNN) is an important technique in deep learning. A CNN typically consists of convolutional, max pooling, and fully connected layers, along with other layers that perform various functions. Training proceeds in two phases: forward propagation and backward propagation. In the forward phase, the data move from the input layer through the hidden layers to the output layer: the input image is fed into the network, and once the output is obtained, the error value is evaluated. In the backward phase, this error is propagated back through the network and used to update the network weights according to the cost function (see Figure 3). The types of hidden layers that make up a CNN are discussed below.

Figure 3.

A description of a CNN architecture.

Convolutional Layer. This is the core layer of a convolutional network. Its output is represented as a 3D matrix of neurons. In each convolutional layer, the CNN applies multiple kernels to the input image or to the incoming feature maps to produce new feature maps. The convolution process has three key advantages:

Weight sharing reduces the number of parameters in each feature map.

Local connectivity captures the relationships between adjacent pixels.

It makes the response equivariant to changes in the target's position (shift equivariance).
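The three properties listed above can be illustrated with a minimal single-channel NumPy sketch (an illustration under simplifying assumptions, not the network used in this study). The hypothetical function `conv2d_same` applies one 3 × 3 kernel with stride 1 and "same" zero padding, as in the convolution layers of Table 2, and the hypothetical `weight_counts` compares the weights of one shared kernel with those of a dense layer of the same input and output size.

```python
import numpy as np


def conv2d_same(image, kernel):
    """Single-channel 2-D convolution as used in CNN layers (technically a
    cross-correlation): stride 1 and zero padding "same", so the output has
    the same height and width as the input."""
    kh, kw = kernel.shape
    ph, pw = kh // 2, kw // 2
    padded = np.pad(image.astype(float), ((ph, kh - 1 - ph), (pw, kw - 1 - pw)))
    out = np.zeros(image.shape, dtype=float)
    for r in range(image.shape[0]):
        for c in range(image.shape[1]):
            # Local connection: each output pixel sees only a kh x kw window.
            # Weight sharing: the same kernel is used at every position.
            out[r, c] = np.sum(padded[r:r + kh, c:c + kw] * kernel)
    return out


def weight_counts(height, width, k=3):
    """Weights needed to map a height x width map to a same-size map."""
    shared = k * k                    # one k x k kernel reused at every position
    dense = (height * width) ** 2     # fully connected: every pixel to every pixel
    return shared, dense


if __name__ == "__main__":
    img = np.zeros((8, 8))
    img[3, 3] = 1.0
    shifted = np.zeros((8, 8))
    shifted[4, 4] = 1.0               # the same target moved by one pixel
    kernel = np.arange(9, dtype=float).reshape(3, 3)
    # Shift equivariance: moving the target moves the response by the same amount.
    print(np.allclose(conv2d_same(shifted, kernel)[1:, 1:],
                      conv2d_same(img, kernel)[:-1, :-1]))  # True
    print(weight_counts(256, 256))  # (9, 4294967296)
```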

Activation Functions. Activation functions are used in neural networks to introduce nonlinearity so that the network can produce the desired output. Various activation functions can be used; among the most significant are the sigmoid and tanh functions. The sigmoid function maps inputs in the range (−∞, +∞) to values between 0 and 1, whereas tanh maps them to values between −1 and 1. Another activation function, introduced in recent years, is the rectified linear unit (ReLU), g(x) = max(0, x), which is applied element-wise to all components. Its purpose is to give the network nonlinear behavior: applied to a feature map, it processes every pixel and sets all negative values to 0.
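The three activation functions named above can be written directly in NumPy. This is a minimal element-wise sketch; the function names are chosen here for illustration.

```python
import numpy as np


def sigmoid(x):
    """Maps (-inf, +inf) to (0, 1)."""
    return 1.0 / (1.0 + np.exp(-x))


def tanh(x):
    """Maps (-inf, +inf) to (-1, 1)."""
    return np.tanh(x)


def relu(x):
    """g(x) = max(0, x), applied element-wise: negative values become 0."""
    return np.maximum(x, 0.0)


if __name__ == "__main__":
    fmap = np.array([[-1.5, 0.2], [3.0, -0.1]])  # a tiny illustrative feature map
    print(relu(fmap))     # negative entries are replaced by 0
    print(sigmoid(fmap))  # every entry lies strictly between 0 and 1
```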

Max pooling: Max pooling has several benefits in a CNN. First, it helps the CNN to recognize the target despite small modifications to the input matrix. Second, it helps the CNN to recognize features in large images. Pooling summarizes the features in each region during subsampling, which reduces the size of the feature maps and allows the network to go deeper while retaining the important information. The most common forms of pooling are max pooling and average pooling.
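Both pooling forms can be sketched with a NumPy reshape, using the 2 × 2 window, stride 2, and zero padding listed in Table 2. The function name `pool2x2` is hypothetical, and dropping an odd trailing row or column is a simplifying assumption of this sketch.

```python
import numpy as np


def pool2x2(fmap, mode="max"):
    """2x2 pooling with stride 2 and no padding; an odd trailing row/column is dropped."""
    fmap = np.asarray(fmap)
    h, w = fmap.shape[0] // 2 * 2, fmap.shape[1] // 2 * 2
    # blocks[a, b, c, d] == fmap[2a + b, 2c + d]: one 2x2 window per (a, c)
    blocks = fmap[:h, :w].reshape(h // 2, 2, w // 2, 2)
    if mode == "max":
        return blocks.max(axis=(1, 3))
    if mode == "average":
        return blocks.mean(axis=(1, 3))
    raise ValueError(f"unknown pooling mode: {mode}")


if __name__ == "__main__":
    x = np.array([[1, 2, 5, 6],
                  [3, 4, 7, 8],
                  [9, 10, 13, 14],
                  [11, 12, 15, 16]])
    print(pool2x2(x))             # max of each 2x2 window
    print(pool2x2(x, "average"))  # mean of each 2x2 window
```

Each pooling step halves the height and width, which is why the 256 × 256 input of Table 2 reaches the third convolution at 64 × 64.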

Data augmentation: Preprocessing and data augmentation are among the most often overlooked issues. However, these steps are not always necessary, so it is worth checking whether a task actually requires preprocessing before applying any data processing.
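The paragraph above does not specify which augmentations were used. As one common example, assumed here purely for illustration, flips and 90-degree rotations turn each square image patch into eight variants; whether these transformations preserve the label for a given dataset should be checked. The function name `augment` is hypothetical.

```python
import numpy as np


def augment(image):
    """Return the 8 flip/rotation variants of a square image:
    4 rotations by multiples of 90 degrees, each with and without a mirror flip."""
    variants = []
    for k in range(4):
        rotated = np.rot90(image, k)
        variants.append(rotated)
        variants.append(np.fliplr(rotated))
    return variants


if __name__ == "__main__":
    patch = np.arange(16).reshape(4, 4)   # illustrative 4x4 patch
    out = augment(patch)
    print(len(out))                       # 8
```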

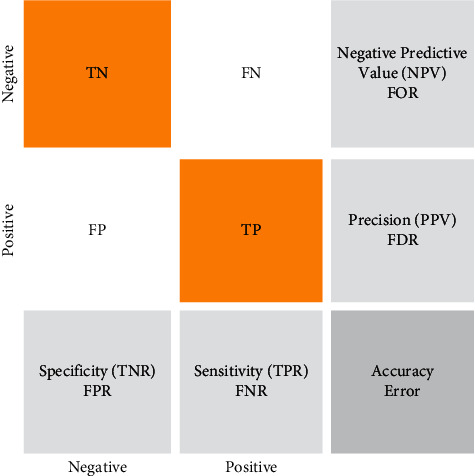

4.6. Classifier's Performance Analysis Metrics

The receiver operating characteristic (ROC) curve is obtained by plotting the true positive rate (TPR) against the false positive rate (FPR). In machine learning, TPR is also referred to as recall or probability of detection. Both rates are computed from the confusion matrix (see Figure 4), and each decision threshold yields one point on the ROC curve. How consistently a test assigns cases to the two categories can therefore be quantified in an informative way. This study places more emphasis on specificity than on recall (also known as sensitivity or TPR), because low specificity leads to therapeutic intervention for patients who do not need it. Recall should not be neglected either, however, because a false negative result could leave a malignancy untreated. The models were evaluated using recall, specificity, fivefold cross-validated accuracy, F1-score, and the Matthews correlation coefficient (MCC). Accuracy is the proportion of all cases (benign and malignant tumors) that are classified correctly; F1 is the harmonic mean of precision and recall and reflects the model's predictive ability. Precision is the proportion of cases predicted to be malignant that are actually malignant. Recall, specificity, accuracy, and precision each take values between 0 and 1. The MCC was used as a measure of classification quality because it takes into account true positives, true negatives, false positives, and false negatives, so it remains reliable when classes are imbalanced. Accuracy and F1-score are the most commonly used binary classification measures, including when models are assessed on unbalanced datasets.
A model obtains a high MCC in binary classification only when it predicts well in all four confusion matrix categories (true positives, true negatives, false positives, and false negatives). MCC provides more reliable estimates of classification quality when applied to the WBCD dataset. An MCC of 1 indicates perfect prediction, 0 indicates prediction no better than random, and −1 indicates total disagreement between predictions and observations. The formulas for recall, specificity, accuracy, precision, and F1-score are listed as follows.

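$$
\begin{aligned}
\text{Recall (sensitivity)} &= \frac{TP}{TP + FN}, \\
\text{Specificity} &= \frac{TN}{TN + FP}, \\
\text{Accuracy} &= \frac{TP + TN}{TP + TN + FP + FN}, \\
\text{Precision} &= \frac{TP}{TP + FP}, \\
F_1 &= \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}.
\end{aligned}
\tag{1}
$$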

Figure 4.

The confusion matrix.

In the above equations, TP, TN, FP, and FN denote the numbers of true positives, true negatives, false positives, and false negatives, respectively. Mixed-effects models were run in JMP to determine the effects of dimensionality reduction, outlier analysis, or their combination on cross-validated accuracy.
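The metrics defined above, together with the MCC, can be computed from the four confusion-matrix counts with a few lines of Python. This is a minimal sketch: the function name is hypothetical, the counts in the example are made up for illustration (they are not the study's results), and returning an MCC of 0 when a row or column of the confusion matrix is empty is a common convention assumed here.

```python
import math


def classification_metrics(tp, tn, fp, fn):
    """Recall, specificity, precision, accuracy, F1, and MCC from confusion-matrix counts."""
    sensitivity = tp / (tp + fn)                  # recall, TPR
    specificity = tn / (tn + fp)                  # 1 - FPR
    precision = tp / (tp + fp)
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    f1 = 2 * precision * sensitivity / (precision + sensitivity)  # harmonic mean
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denom if denom > 0 else 0.0
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": precision,
        "accuracy": accuracy,
        "f1": f1,
        "mcc": mcc,
    }


if __name__ == "__main__":
    # Hypothetical counts for illustration only
    for name, value in classification_metrics(tp=40, tn=45, fp=5, fn=10).items():
        print(f"{name:12s} {value:.3f}")
```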

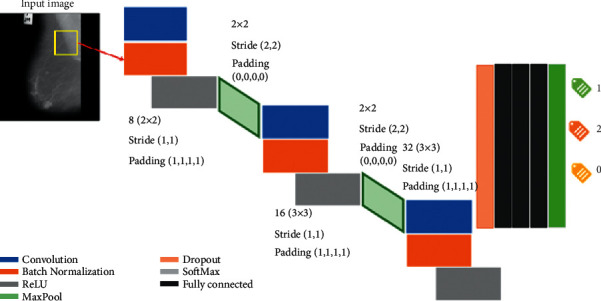

4.7. CNN Neural Network Structure

CNNs have been a major factor in the recent success of deep learning. In this network, the convolutional layers are followed by a fully connected top layer, and the weights are shared within each convolutional layer. This deep neural network performed better than earlier deep neural network designs. Furthermore, compared with fully connected feedforward networks, CNNs are simpler to train because they have fewer parameters to estimate. Convolutional neural networks consist of three primary types of layers (convolutional, pooling, and fully connected), each of which performs a distinct function. Training a convolutional neural network involves two steps: feedforward and backpropagation. In the feedforward step, the input image is fed into the network and the network output is calculated; this output is then used to compute the network's error. Backpropagation starts from the error value computed in the previous phase: the gradient of the error with respect to each parameter is computed using the chain rule, and each parameter is adjusted according to its influence on the network's error. The feedforward phase then begins again, and training stops after a certain number of iterations. The structure of our convolutional network is illustrated in Figure 5, and its 15 layers are listed in Table 2.
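The two training phases described above can be made concrete with a small NumPy sketch. To keep every chain-rule step visible, it uses a hypothetical two-layer fully connected network (ReLU hidden layer, sigmoid output, binary cross-entropy loss) on the four XOR points instead of the CNN of Table 2; the function name, learning rate, hidden size, and number of epochs are illustrative assumptions.

```python
import numpy as np


def train_two_layer(x, y, hidden=8, lr=0.5, epochs=500, seed=0):
    """Feedforward + backpropagation for a tiny 2-layer network.

    x: (n, d) inputs; y: (n,) labels in {0, 1}. Returns the loss at each epoch.
    """
    rng = np.random.default_rng(seed)
    w1 = rng.normal(0.0, 0.5, size=(x.shape[1], hidden))
    b1 = np.zeros(hidden)
    w2 = rng.normal(0.0, 0.5, size=hidden)
    b2 = 0.0
    eps = 1e-12                                   # avoids log(0)
    losses = []
    for _ in range(epochs):
        # Feedforward phase: input -> hidden (ReLU) -> output (sigmoid) -> error
        z1 = x @ w1 + b1
        h = np.maximum(z1, 0.0)
        p = 1.0 / (1.0 + np.exp(-(h @ w2 + b2)))
        loss = -np.mean(y * np.log(p + eps) + (1 - y) * np.log(1 - p + eps))
        losses.append(loss)
        # Backpropagation phase: chain rule from the loss back to every parameter
        d_logit = (p - y) / len(y)                # dLoss/dlogit for sigmoid + cross-entropy
        grad_w2 = h.T @ d_logit
        grad_b2 = d_logit.sum()
        d_h = np.outer(d_logit, w2)               # back through the output weights
        d_z1 = d_h * (z1 > 0)                     # back through the ReLU
        grad_w1 = x.T @ d_z1
        grad_b1 = d_z1.sum(axis=0)
        # Gradient-descent update of all weights
        w1 -= lr * grad_w1
        b1 -= lr * grad_b1
        w2 -= lr * grad_w2
        b2 -= lr * grad_b2
    return losses


if __name__ == "__main__":
    x = np.array([[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]])
    y = np.array([0.0, 1.0, 1.0, 0.0])
    losses = train_two_layer(x, y)
    print(f"loss: first epoch {losses[0]:.3f}, last epoch {losses[-1]:.3f}")
```

A CNN follows the same loop; only the layers differ, and frameworks compute the chain-rule gradients automatically.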

Figure 5.

The structure of CNN.

Table 2.

The presented CNN architecture layers.

| Layer | Name | Description |

|---|---|---|

| 1 | Image input | 256 × 256 × 1 images with “zero center” normalization |

| 2 | Convolution | 8 (3 × 3) convolutions with stride [1 1] and padding “same” |

| 3 | Batch normalization | Normalization |

| 4 | ReLU | Rectifier |

| 5 | Pooling | 2 × 2 max pooling with stride [2 2] and padding [0 0 0 0] |

| 6 | Convolution | 16 (3 × 3) convolutions with stride [1 1] and padding “same” |

| 7 | Batch normalization | Normalization |

| 8 | ReLU | Rectifier |

| 9 | Pooling | 2 × 2 max pooling with stride [2 2] and padding [0 0 0 0] |

| 10 | Convolution | 32 (3 × 3) convolutions with stride [1 1] and padding “same” |

| 11 | Batch normalization | Normalization |

| 12 | ReLU | Rectifier |

| 13 | Fully connected | Fully connected layer with 7 outputs |

| 14 | SoftMax | SoftMax |

| 15 | Classification output | Cross entropy |
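To make Table 2 easier to check, the sketch below traces the output shape and learnable-parameter count of each layer in plain Python. It rests on assumptions that are not stated in the table: entry 13 is read as a fully connected layer with 7 outputs, each convolution has one bias per filter, and each batch normalization layer learns one scale and one offset per channel. The function name `trace_table2` is hypothetical.

```python
def trace_table2(input_hw=(256, 256), in_channels=1, num_classes=7):
    """Walk through the layers of Table 2, returning (layer, output shape, learnable parameters)."""
    h, w, c = input_hw[0], input_hw[1], in_channels
    rows = [("image input", (h, w, c), 0)]
    for block, filters in enumerate((8, 16, 32)):
        conv_params = 3 * 3 * c * filters + filters   # 3x3 kernels plus one bias per filter
        c = filters                                    # padding "same", stride 1: h, w unchanged
        rows.append(("convolution", (h, w, c), conv_params))
        rows.append(("batch normalization", (h, w, c), 2 * c))  # scale and offset per channel
        rows.append(("relu", (h, w, c), 0))
        if block < 2:                                  # Table 2 pools after the first two blocks only
            h, w = h // 2, w // 2                      # 2x2 max pooling, stride 2
            rows.append(("max pooling", (h, w, c), 0))
    fc_params = h * w * c * num_classes + num_classes
    rows.append(("fully connected", (num_classes,), fc_params))
    rows.append(("softmax", (num_classes,), 0))
    rows.append(("classification output", (num_classes,), 0))
    return rows


if __name__ == "__main__":
    rows = trace_table2()
    for i, (name, shape, params) in enumerate(rows, start=1):
        print(f"{i:2d} {name:22s} {str(shape):16s} {params}")
    print("total learnable parameters:", sum(p for _, _, p in rows))  # 923511
```

Under these assumptions, the fully connected layer (64 × 64 × 32 inputs to 7 outputs) accounts for about 99% of the roughly 0.92 million learnable parameters.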

5. Results and Discussion

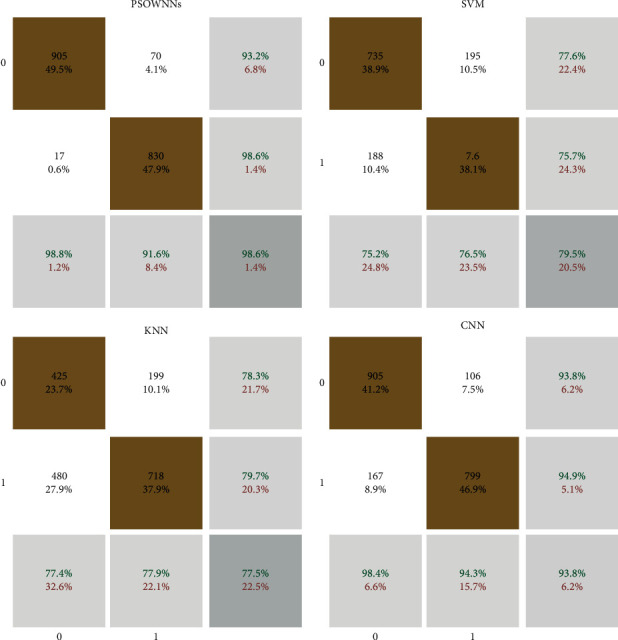

To evaluate the methods, we divided the test data into two categories, benign and malignant. The evaluation was performed on 64 samples from the benign class and 51 samples from the malignant class of the MIAS dataset. The first category contains the benign test data together with their probabilities of class membership; the second contains the malignant test data and their probabilities. We now discuss the ROC curves, confusion matrices, and comparison diagrams of the two classes. Figure 6 shows the ROC of the MLP with GLCM features, as well as of the CNN, for the benign and malignant classes.

Figure 6.

The confusion matrix of the deep learning methods used for breast cancer diagnosis.

For each class, the techniques were applied to 905 matrices with 22 features. Figure 6 shows the classification results as a confusion matrix, in which green cells indicate correct classifications and white cells indicate misclassifications. Of the 905 breast cancer images, 830 (47.2 percent) are diagnosed correctly, whereas 70 (4.1 percent of all images) are misdiagnosed. PSOWNNs therefore have a sensitivity of 91.6%; in other words, 91.6 percent of individuals with breast cancer are identified correctly. Moreover, the 905 images with other disorders were correctly diagnosed in 98.8 percent of cases, so PSOWNNs have a specificity of 98.8%. PSOWNNs also have a precision of 98.6%, meaning that 98.6% of the images classified as breast cancer are diagnosed correctly. Accuracy is another significant classifier parameter: PSOWNNs have an accuracy of 95.2%, meaning that 95.2% of the images are correctly classified. Compared with the other machine learning techniques, PSOWNNs are more accurate than SVM, KNN, and CNN. Like other neural networks, CNNs learn to build output maps by extracting increasingly complex, high-level features: input feature maps are convolved with convolutional kernels. CNNs exploit the fact that a feature is the same within a receptive field regardless of its location, so shifting the input shifts the resulting feature map accordingly. Because of this assumption, weight sharing is used to decrease the number of parameters. The results show that CNNs can learn more useful features than techniques that do not take this into account.

The methods used here are trained by gradient descent. Because each layer feeds into the next, the hierarchical features are optimized for the task at hand. SVMs and similar methods typically require a real-valued feature vector as input. By contrast, a CNN is usually trained end to end, ensuring that it responds appropriately to the problem it is attempting to solve. In the comparison with SVMs, KNNs, and CNNs, PSOWNNs are used as trainable feature detectors. Since SVMs are still widely used, different machine learning algorithms should be seen as complementing each other. Consequently, this article uses machine learning to identify breast cancer based on GLCM features. The confusion matrix of the CNN is depicted in Figure 6. According to the matrix, 799 of the 905 breast cancer images (94.9 percent) were diagnosed correctly, whereas 106 images were misdiagnosed. On the other hand, all patients with other disorders or negative test results are identified. The CNN approach has a sensitivity of 94.3 percent, a specificity of 93.8 percent, and an accuracy of 93.4 percent. Its precision, that is, the proportion of images identified as breast cancer that are true positives, is 95.9%.

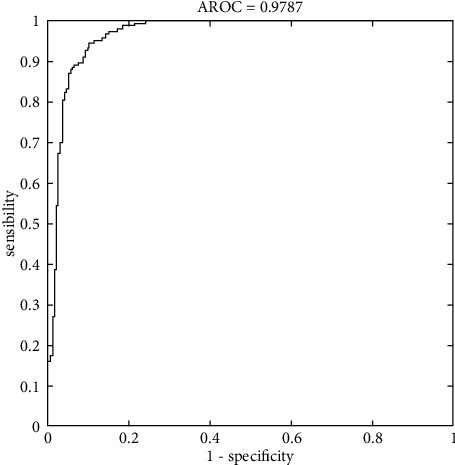

For classification, lower fall-out and higher sensitivity are preferable. CNN has a greater sensitivity than the other methods, whereas KNN is less sensitive than CNN (see Figure 7). Figure 8 shows the ROC curves used to compare the machine learning algorithms: fall-out (FPR) is plotted on the horizontal axis and sensitivity (TPR) on the vertical axis.
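The ROC construction described above can be sketched in NumPy: the decision threshold is swept from the highest score to the lowest, each threshold contributes one (fall-out, sensitivity) point, and the area under the curve is accumulated with the trapezoid rule. The function names and the example scores are hypothetical; tied scores are handled by using each distinct score once as a threshold.

```python
import numpy as np


def roc_curve_points(labels, scores):
    """Return (fpr, tpr) arrays, sweeping the decision threshold from high to low.

    labels: 1 = malignant (positive), 0 = benign; scores: higher = more likely malignant.
    """
    labels = np.asarray(labels)
    scores = np.asarray(scores, dtype=float)
    pos = labels.sum()
    neg = len(labels) - pos
    fpr, tpr = [0.0], [0.0]
    for t in np.unique(scores)[::-1]:           # distinct scores, highest first
        pred = scores >= t                      # predicted malignant at this threshold
        tpr.append(np.sum(pred & (labels == 1)) / pos)   # sensitivity
        fpr.append(np.sum(pred & (labels == 0)) / neg)   # fall-out
    return np.array(fpr), np.array(tpr)


def auc_trapezoid(fpr, tpr):
    """Area under the ROC curve by the trapezoid rule."""
    return float(np.sum(np.diff(fpr) * (tpr[1:] + tpr[:-1]) / 2.0))


if __name__ == "__main__":
    labels = [0, 0, 1, 1]                       # illustrative labels and scores
    scores = [0.1, 0.4, 0.35, 0.8]
    fpr, tpr = roc_curve_points(labels, scores)
    print(auc_trapezoid(fpr, tpr))              # 0.75
```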

Figure 7.

The training process of the CNN approach.

Figure 8.

ROC region for the breast tumor segmentation in the MIAS dataset.

Based on the performance analysis metrics shown in Table 3, the higher values belong to the CNN technique. The area under the ROC curve (AUC) is an essential metric for classifiers; for the CNN method, it is 99.97. Consequently, the highest accuracy belongs to CNN, followed by KNN, LDA, SVM, and NB.

Table 3.

The comparison between the presented methods.

| Methods | Sensitivity (%) | Specificity (%) | Precision (%) | AUC | Accuracy (%) |

|---|---|---|---|---|---|

| KNN | 77.9 | 77.4 | 79.7 | 79.43 | 77.5 |

| SVM | 78.5 | 75.2 | 75.7 | 78.54 | 79.5 |

| PSOWNNs | 91.6 | 98.8 | 98.6 | 95.43 | 95.2 |

| CNN | 94.3 | 93.4 | 94.9 | 93.65 | 93.8 |

6. Discussion

The improved SVM achieves the lowest scores on all criteria for the Mini-MIAS mammography dataset. ANNs, like KNNs and CNNs, were also considered; their major drawbacks are overfitting, and hence the need for more training data, as well as their inability to extract features. Machine learning methods are among the most sensitive and specific approaches. Because they are less sensitive to variations in the input samples, convolutional neural networks outperform SVM and KNN. The results show that PSOWNNs have the best classification performance and the lowest error rate. For the Mini-MIAS dataset, Figure 8 shows the receiver operating characteristic (ROC) area for the structure used. ROC analysis evaluates classification tasks at different threshold values, and it can be used to assess the accuracy of a statistical model and, more broadly, the performance of a system.

Figure 9 shows, for the Mini-MIAS mammography dataset, a visual representation of how the technique segments different tissues and tumors.

Figure 9.

Visual assessment of breast tumor segmentation by the CNN on the Mini-MIAS dataset.

7. Conclusion

Breast cancer, a complex illness, is recognized as the second leading cause of cancer-associated mortality in women. A growing body of data suggests that many factors (e.g., genetics and environment) may play a role in its onset and development. Image-based diagnostic methods are among the many diagnostic platforms that can provide valuable information on breast cancer patients. Unfortunately, breast abnormalities are not always easy to identify. Various technologies, such as mammography, ultrasound, and thermography, have been developed to screen for breast cancer. Using image processing and artificial intelligence (AI) techniques, computers help radiologists identify breast abnormalities more effectively. This article reviewed many approaches to detecting breast cancer using AI and image processing and presented an innovative machine learning approach for identifying breast cancer. The suggested method differs from previous techniques in that it is based on the support value in a neural network. A normalization technique has been implemented to improve the images in terms of performance, efficiency, and quality. According to our experiments, PSOWNNs are relatively superior to current approaches such as CNN. Machine learning methods are clearly beneficial in terms of performance, efficiency, and image quality, which are vital to the newest medical applications. Based on the results, PSOWNNs are 91.6% sensitive; that is, 91.6 percent of breast cancer patients are detected correctly. A further comparison of the 905 images with those of other illnesses shows that 98.8 percent of the other disorders are correctly identified, so PSOWNNs have a specificity of 98.8%. Furthermore, PSOWNNs have a precision of 98.6 percent, meaning that 98.6 percent of the images identified as breast cancer are correct, and an accuracy of 95.2%, meaning that 95.2% of all images are correctly classified.
PSOWNNs are more accurate than the other machine learning algorithms tested: SVM, KNN, and CNN.

Nomenclature

- ANN:

Artificial neural network

- BIRADS:

Breast Imaging Reporting and Data System

- CNN:

Convolutional neural network

- DCNN:

Deep convolutional neural network

- DL:

Deep learning

- FN:

False negative

- FP:

False positive

- FPR:

False positive rate

- GLCM:

Gray-level co-occurrence matrix

- KNN:

k-nearest neighbor

- LDA:

Linear discriminant analysis

- MCC:

Matthews correlation coefficient

- ML:

Machine learning

- NB:

Naïve Bayes

- PCA:

Principal component analysis

- PSOWNN:

Particle swarm optimized wavelet neural network

- ReLU:

Rectified linear unit

- ROC:

Receiver operating characteristic

- ROI:

Region-of-Interest

- SVM:

Support vector machine

- TN:

True negative

- TP:

True positive

- TPR:

True positive rate

Data Availability

Data are available from the corresponding author upon request by email (amin.valizadeh@mail.um.ac.ir).

Conflicts of Interest

The authors declare that they have no conflicts of interest.

References

- 1. Malvezzi M., Carioli G., Bertuccio P., et al. European cancer mortality predictions for the year 2019 with focus on breast cancer. Annals of Oncology. 2019;30(5):781–787. doi: 10.1093/annonc/mdz051.

- 2. Salguero-Aranda C., Sancho-Mensat D., Canals-Lorente B., Sultan S., Reginald A., Chapman L. STAT6 knockdown using multiple siRNA sequences inhibits proliferation and induces apoptosis of human colorectal and breast cancer cell lines. PLoS One. 2019;14(5):e0207558. doi: 10.1371/journal.pone.0207558.

- 3. Dunnwald L. K., Rossing M. A., Li C. I. Hormone receptor status, tumor characteristics, and prognosis: a prospective cohort of breast cancer patients. Breast Cancer Research. 2007;9(1):R6–R10. doi: 10.1186/bcr1639.

- 4. McGuire A., Brown J. A. L., Kerin M. J. Metastatic breast cancer: the potential of miRNA for diagnosis and treatment monitoring. Cancer & Metastasis Reviews. 2015;34(1):145–155. doi: 10.1007/s10555-015-9551-7.

- 5. Peart O. Metastatic breast cancer. Radiologic Technology. 2017;88(5):519M–539M.

- 6. Zarychta E., Ruszkowska-Ciastek B. Cooperation between angiogenesis, vasculogenesis, chemotaxis, and coagulation in breast cancer metastases development: pathophysiological point of view. Biomedicines. 2022;10(2):300. doi: 10.3390/biomedicines10020300.

- 7. Farrokh A., Goldmann G., Meyer-Johann U., et al. Clinical differences between invasive lobular breast cancer and invasive carcinoma of no special type in the German mammography-screening-program. Women & Health. 2022;62:1–13. doi: 10.1080/03630242.2022.2030448.

- 8. Fisher E. R., Gregorio R. M., Redmond C., Fisher B. Tubulolobular invasive breast cancer: a variant of lobular invasive cancer. Human Pathology. 1977;8(6):679–683. doi: 10.1016/s0046-8177(77)80096-8.

- 9. Kotsopoulos J., Chen W. Y., Gates M. A., Tworoger S. S., Hankinson S. E., Rosner B. A. Risk factors for ductal and lobular breast cancer: results from the nurses’ health study. Breast Cancer Research. 2010;12(6):R106–R111. doi: 10.1186/bcr2790.

- 10. Guan Y., Haardörfer R., McBride C. M., Lipscomb J., Escoffery C. Factors associated with mammography screening choices by women aged 40-49 at average risk. Journal of Women’s Health. 2022. doi: 10.1089/jwh.2021.0232.

- 11. Ding W., Zhang J. X. Deep transfer learning-based intelligent diagnosis of malignant tumors on mammography. Proceedings of the 2021 3rd International Conference on Industrial Artificial Intelligence (IAI); November 2021; Shenyang, China. IEEE; pp. 1–6.

- 12. Rezaei Z. A review on image-based approaches for breast cancer detection, segmentation, and classification. Expert Systems with Applications. 2021;182:115204. doi: 10.1016/j.eswa.2021.115204.

- 13. Kaur C., Garg U. Artificial intelligence techniques for cancer detection in medical image processing: a review. Materials Today: Proceedings. 2021. doi: 10.1016/j.matpr.2021.04.241.

- 14. Hamidinekoo A., Denton E., Rampun A., Honnor K., Zwiggelaar R. Deep learning in mammography and breast histology, an overview and future trends. Medical Image Analysis. 2018;47:45–67. doi: 10.1016/j.media.2018.03.006.

- 15. Ekici S., Jawzal H. Breast cancer diagnosis using thermography and convolutional neural networks. Medical Hypotheses. 2020;137:109542. doi: 10.1016/j.mehy.2019.109542.

- 16. Retsky M. W., Demicheli R., Hrushesky W. J. M., Baum M., Gukas I. D. Dormancy and surgery-driven escape from dormancy help explain some clinical features of breast cancer. APMIS. 2008;116(7-8):730–741. doi: 10.1111/j.1600-0463.2008.00990.x.

- 17. Zheng B., Yoon S. W., Lam S. S. Breast cancer diagnosis based on feature extraction using a hybrid of K-means and support vector machine algorithms. Expert Systems with Applications. 2014;41(4):1476–1482. doi: 10.1016/j.eswa.2013.08.044.

- 18. Al-Turjman F., Stephan T. An automated breast cancer diagnosis using feature selection and parameter optimization in ANN. Computers & Electrical Engineering. 2021;90:106958. doi: 10.1016/j.compeleceng.2020.106958.

- 19. Guha A., Fradley M. G., Dent S. F., et al. Incidence, risk factors, and mortality of atrial fibrillation in breast cancer: a SEER-Medicare analysis. European Heart Journal. 2022;43(4):300–312. doi: 10.1093/eurheartj/ehab745.

- 20. Cruz J. A., Wishart D. S. Applications of machine learning in cancer prediction and prognosis. Cancer Informatics. 2006;2. doi: 10.1177/117693510600200030.

- 21. Ahmad F., Mat Isa N. A., Hussain Z., Osman M. K., Sulaiman S. N. A GA-based feature selection and parameter optimization of an ANN in diagnosing breast cancer. Pattern Analysis & Applications. 2015;18(4):861–870. doi: 10.1007/s10044-014-0375-9.

- 22. Dong W., Bensken W. P., Kim U., Rose J., Berger N. A., Koroukian S. M. Phenotype discovery and geographic disparities of late-stage breast cancer diagnosis across U.S. Counties: a machine learning approach. Cancer Epidemiology Biomarkers & Prevention. 2022;31(1):66–76. doi: 10.1158/1055-9965.epi-21-0838.

- 23. Stephan P., Stephan T., Kannan R., Abraham A. A hybrid artificial bee colony with whale optimization algorithm for improved breast cancer diagnosis. Neural Computing & Applications. 2021;33(20):13667–13691. doi: 10.1007/s00521-021-05997-6.

- 24. Janiesch C., Zschech P., Heinrich K. Machine learning and deep learning. Electronic Markets. 2021;31(3):685–695. doi: 10.1007/s12525-021-00475-2.

- 25. Pouyanfar S., Sadiq S., Yan Y., et al. A survey on deep learning. ACM Computing Surveys. 2019;51(5):1–36. doi: 10.1145/3234150.

- 26. Dargan S., Kumar M., Ayyagari M. R., Kumar G. A survey of deep learning and its applications: a new paradigm to machine learning. Archives of Computational Methods in Engineering. 2020;27(4):1071–1092. doi: 10.1007/s11831-019-09344-w.

- 27. Lee S. M., Seo J. B., Yun J., et al. Deep learning applications in chest radiography and computed tomography. Journal of Thoracic Imaging. 2019;34(2):75–85. doi: 10.1097/RTI.0000000000000387.

- 28. Beura S., Majhi B., Dash R. Mammogram classification using two dimensional discrete wavelet transform and gray-level co-occurrence matrix for detection of breast cancer. Neurocomputing. 2015;154:1–14. doi: 10.1016/j.neucom.2014.12.032.

- 29. Liu J. P., Li C. L. The short-term power load forecasting based on sperm whale algorithm and wavelet least square support vector machine with DWT-IR for feature selection. Sustainability. 2017;9(7):1188. doi: 10.3390/su9071188.

- 30. Acharya U. R., Raghavendra U., Fujita H., et al. Automated characterization of fatty liver disease and cirrhosis using curvelet transform and entropy features extracted from ultrasound images. Computers in Biology and Medicine. 2016;79:250–258. doi: 10.1016/j.compbiomed.2016.10.022.

- 31. Muduli D., Dash R., Majhi B. Fast discrete curvelet transform and modified PSO based improved evolutionary extreme learning machine for breast cancer detection. Biomedical Signal Processing and Control. 2021;70:102919. doi: 10.1016/j.bspc.2021.102919.

- 32. Khan S., Hussain M., Aboalsamh H., Mathkour H., Bebis G., Zakariah M. Optimized Gabor features for mass classification in mammography. Applied Soft Computing. 2016;44:267–280. doi: 10.1016/j.asoc.2016.04.012.

- 33. Xiao Z., Yan Z. Radar emitter identification based on auto-correlation function and bispectrum via convolutional neural network. IEICE Transactions on Communications. 2021;E104.B(12):1506–1513. doi: 10.1587/transcom.2021ebp3035.

- 34. Liu R., Wang X., Lu H., et al. SCCGAN: style and characters inpainting based on CGAN. Mobile Networks and Applications. 2021;26(1):3–12. doi: 10.1007/s11036-020-01717-x.

- 35. Zhou W., Yu L., Zhou Y., Qiu W., Wu M.-W., Luo T. Local and global feature learning for blind quality evaluation of screen content and natural scene images. IEEE Transactions on Image Processing. 2018;27(5):2086–2095. doi: 10.1109/tip.2018.2794207.

- 36. Li B., Feng Y., Xiong Z., Yang W., Liu G. Research on AI security enhanced encryption algorithm of autonomous IoT systems. Information Sciences. 2021;575:379–398. doi: 10.1016/j.ins.2021.06.016.

- 37. Yang L., Xiong Z., Liu G., Hu Y., Zhang X., Qiu M. An analytical model of page dissemination for efficient big data transmission of C-ITS. IEEE Transactions on Intelligent Transportation Systems. 2021. doi: 10.1109/TITS.2021.3134557.

- 38. Eslami E., Yun H.-B. Attention-based multi-scale convolutional neural network (A+MCNN) for multi-class classification in road images. Sensors. 2021;21(15):5137. doi: 10.3390/s21155137.

- 39. Liao L., Du L., Guo Y. Semi-supervised SAR target detection based on an improved faster R-CNN. Remote Sensing. 2021;14(1):143.

- 40. Liu H., Liu J., Hou S., Tao T., Han J. Perception consistency ultrasound image super-resolution via self-supervised CycleGAN. Neural Computing and Applications. 2021;10:1–11.

- 41. Sharifi A., Ahmadi M., Mehni M. A., Jafarzadeh Ghoushchi S., Pourasad Y. Experimental and numerical diagnosis of fatigue foot using convolutional neural network. Computer Methods in Biomechanics and Biomedical Engineering. 2021;24(16):1828–1840. doi: 10.1080/10255842.2021.1921164.

- 42. Zuo C., Qian J., Feng S., et al. Deep learning in optical metrology: a review. Light: Science & Applications. 2022;11(1):1–54. doi: 10.1038/s41377-022-00714-x.

- 43. He S., Guo F., Zou Q., Ding H. MRMD2.0: a python tool for machine learning with feature ranking and reduction. Current Bioinformatics. 2021;15(10):1213–1221. doi: 10.2174/1574893615999200503030350.

- 44. Ahmadi M., Dashti Ahangar F., Astaraki N., Abbasi M., Babaei B. FWNNet: presentation of a new classifier of brain tumor diagnosis based on fuzzy logic and the wavelet-based neural network using machine-learning methods. Computational Intelligence and Neuroscience. 2021;2021:8542637. doi: 10.1155/2021/8542637.

- 45. Zou Q., Xing P., Wei L., Liu B. Gene2vec: gene subsequence embedding for prediction of mammalian N6-methyladenosine sites from mRNA. RNA. 2019;25(2):205–218. doi: 10.1261/rna.069112.118.

- 46. Jin K., Yan Y., Chen M., et al. Multimodal deep learning with feature level fusion for identification of choroidal neovascularization activity in age‐related macular degeneration. Acta Ophthalmologica. 2022;100(2):e512–e520. doi: 10.1111/aos.14928.

- 47. Yang L., Wu X., Luo M., et al. Na+/Ca2+ induced the migration of soy hull polysaccharides in the mucus layer in vitro. International Journal of Biological Macromolecules. 2022;199. doi: 10.1016/j.ijbiomac.2022.01.016.

- 48. Chuang H. C., Chen D. R., Huang Y. L. Using tumor morphology to classify benign and malignant solid breast masses: speckle reduction imaging (SRI) versus non-SRI ultrasound imaging. IEICE Technical Report. 2009;108(385):33–36.

- 49. Zhou S., Shi J., Zhu J., Cai Y., Wang R. Shearlet-based texture feature extraction for classification of breast tumor in ultrasound image. Biomedical Signal Processing and Control. 2013;8(6):688–696. doi: 10.1016/j.bspc.2013.06.011.

- 50. Liu Y., Zou R., Guo H. Risk explicit interval linear programming model for uncertainty-based nutrient-reduction optimization for the lake Qionghai watershed. Journal of Water Resources Planning and Management. 2011;137(1):83–91. doi: 10.1061/(asce)wr.1943-5452.0000099.

- 51.Zuluaga-Gomez J., Al Masry Z., Benaggoune K., Meraghni S., Zerhouni N. A CNN-based methodology for breast cancer diagnosis using thermal images. Computer Methods in Biomechanics and Biomedical Engineering: Imaging & Visualization . 2021;9(2):131–145. doi: 10.1080/21681163.2020.1824685. [DOI] [Google Scholar]

- 52.Carvalho E. D., Antonio Filho O. C., Silva R. R., et al. Breast cancer diagnosis from histopathological images using textural features and CBIR. Artificial Intelligence in Medicine . 2020;105 doi: 10.1016/j.artmed.2020.101845. [DOI] [PubMed] [Google Scholar]

- 53.Mahmood T., Li J., Pei Y., Akhtar F., Jia Y., Khand Z. H. Breast mass detection and classification using deep convolutional neural networks for radiologist diagnosis assistance. Proceedings of the 2021 IEEE 45th Annual Computers, Software, and Applications Conference (COMPSAC); 2021, July; Madrid, Spain. IEEE; pp. 1918–1923. [Google Scholar]

- 54.Zhang Z., Ma P., Ahmed R., et al. Advanced point‐of‐care testing technologies for human acute respiratory virus detection. Advanced Materials . 2022;34(1) doi: 10.1002/adma.202103646.2103646 [DOI] [PubMed] [Google Scholar]

- 55.Jiang J., Guo Y., Huang Z., Zhang Y., Wu D., Liu Y. Adjacent surface trajectory planning of robot-assisted tooth preparation based on augmented reality. Engineering Science and Technology: An International Journal . 2022;27101001 [Google Scholar]

- 56.Qin C., Xiao D., Tao J., et al. Concentrated velocity synchronous linear chirplet transform with application to robotic drilling chatter monitoring. Measurement . 2022;194 doi: 10.1016/j.measurement.2022.111090.111090 [DOI] [Google Scholar]

- 57.Mobasheri L., Nasirpour M. H., Masoumi E., Azarnaminy A. F., Jafari M., Esmaeili S.-A. SARS-CoV-2 triggering autoimmune diseases. Cytokine . 2022;154 doi: 10.1016/j.cyto.2022.155873.155873 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 58. Ala A., Alsaadi F. E., Ahmadi M., Mirjalili S. Optimization of an appointment scheduling problem for healthcare systems based on the quality of fairness service using whale optimization algorithm and NSGA-II. Scientific Reports. 2021;11(1):19816–19819. doi: 10.1038/s41598-021-98851-7.

- 59. Liu Z., Fang L., Jiang D., Qu R. A machine-learning based fault diagnosis method with adaptive secondary sampling for multiphase drive systems. IEEE Transactions on Power Electronics. 2022. doi: 10.1109/tpel.2022.3153797.

- 60. Zheng W., Liu X., Yin L. Research on image classification method based on improved multi-scale relational network. PeerJ Computer Science. 2021;7:e613. doi: 10.7717/peerj-cs.613.

- 61. Liu Y., Tian J., Hu R., et al. Improved feature point pair purification algorithm based on SIFT during endoscope image stitching. Frontiers in Neurorobotics. 2022;16:840594. doi: 10.3389/fnbot.2022.840594.

- 62. Kaur P., Singh G., Kaur P. Intellectual detection and validation of automated mammogram breast cancer images by multi-class SVM using deep learning classification. Informatics in Medicine Unlocked. 2019;16:100239. doi: 10.1016/j.imu.2019.100239.

- 63. El Chamieh C., Vielh P., Chevret S. Statistical methods for evaluating the fine needle aspiration cytology procedure in breast cancer diagnosis. BMC Medical Research Methodology. 2022;22(1):40–11. doi: 10.1186/s12874-022-01506-y.

- 64. Rajkumar T., Amritha S., Sridevi V., et al. Identification and validation of plasma biomarkers for diagnosis of breast cancer in South Asian women. Scientific Reports. 2022;12(1):100–113. doi: 10.1038/s41598-021-04176-w.

- 65. Sannasi Chakravarthy S. R., Bharanidharan N., Rajaguru H. Multi-deep CNN based experimentations for early diagnosis of breast cancer. IETE Journal of Research. 2022:1–16. doi: 10.1080/03772063.2022.2028584.

- 66. Wang Z., Sun H., Li J., et al. Preoperative prediction of axillary lymph node metastasis in breast cancer using CNN based on multiparametric MRI. Journal of Magnetic Resonance Imaging. 2022. doi: 10.1002/jmri.28082.

- 67. Melekoodappattu J. G., Dhas A. S., Kandathil B. K., Adarsh K. S. Breast cancer detection in mammogram: combining modified CNN and texture feature based approach. Journal of Ambient Intelligence and Humanized Computing. 2022:1–10. doi: 10.1007/s12652-022-03713-3.

- 68. Gonçalves C. B., Souza J. R., Fernandes H. CNN architecture optimization using bio-inspired algorithms for breast cancer detection in infrared images. Computers in Biology and Medicine. 2022;142:105205. doi: 10.1016/j.compbiomed.2021.105205.

- 69. Li J., Yuan G., Fan H. Multifocus image fusion using wavelet-domain-based deep CNN. Computational Intelligence and Neuroscience. 2019;2019:4179397. doi: 10.1155/2019/4179397.

- 70. Zhang Z., Wang L., Zheng W., Yin L., Hu R., Yang B. Endoscope image mosaic based on pyramid ORB. Biomedical Signal Processing and Control. 2022;71:103261.

- 71. Liu S., Yang B., Wang Y., Tian J., Yin L., Zheng W. 2D/3D multimode medical image registration based on normalized cross-correlation. Applied Sciences. 2022;12(6):2828. doi: 10.3390/app12062828.

- 72. Xu Q., Zeng Y., Tang W., et al. Multi-task joint learning model for segmenting and classifying tongue images using a deep neural network. IEEE Journal of Biomedical and Health Informatics. 2020;24(9):2481–2489. doi: 10.1109/jbhi.2020.2986376.

- 73. Ahmadi M., Sharifi A., Jafarian Fard M., Soleimani N. Detection of brain lesion location in MRI images using convolutional neural network and robust PCA. International Journal of Neuroscience. 2021:1–12. doi: 10.1080/00207454.2021.1883602.

- 74. Sadeghipour E., Sahragard N., Sayebani M.-R., Mahdizadeh R. Breast cancer detection based on a hybrid approach of firefly algorithm and intelligent systems. Indian Journal of Fundamental and Applied Life Sciences. 2015;5:S1.

- 75. Zhang M., Chen Y., Susilo W. PPO-CPQ: a privacy-preserving optimization of clinical pathway query for e-healthcare systems. IEEE Internet of Things Journal. 2020;7(10):10660–10672. doi: 10.1109/jiot.2020.3007518.

- 76. Sadeghipour E., Hatam A., Hosseinzadeh F. Designing an intelligent system for diagnosing diabetes with the help of the Xcsla system. Journal of Mathematics and Computer Science. 2015;14(1):24–32. doi: 10.22436/jmcs.014.01.03.

- 77. Ahmadi M., Sharifi A., Hassantabar S., Enayati S. QAIS-DSNN: tumor area segmentation of MRI image with optimized quantum matched-filter technique and deep spiking neural network. BioMed Research International. 2021;2021:16. doi: 10.1155/2021/6653879.

- 78. Chen J., Lan D., Guo Y. Label constrained convolutional factor analysis for classification with limited training samples. Information Sciences. 2021;544:372–394.

- 79. Rezaei M., Farahanipad F., Dillhoff A., Elmasri R., Athitsos V. Weakly-supervised hand part segmentation from depth images. Proceedings of the 14th PErvasive Technologies Related to Assistive Environments Conference; June 2021; Corfu, Greece. pp. 218–225.

- 80. Sadeghipour E., Hatam A., Hosseinzadeh F. An expert clinical system for diagnosing obstructive sleep apnea with help from the XCSR classifier. Journal of Mathematics and Computer Science. 2015;14(1):33–41. doi: 10.22436/jmcs.014.01.04.

- 81. Dorosti S., Jafarzadeh Ghoushchi S., Sobhrakhshankhah E., Ahmadi M., Sharifi A. Application of gene expression programming and sensitivity analyses in analyzing effective parameters in gastric cancer tumor size and location. Soft Computing. 2020;24(13):9943–9964. doi: 10.1007/s00500-019-04507-0.

- 82. Abadi M. Q. H., Rahmati S., Sharifi A., Ahmadi M., Ahmadi M. HSSAGA: designation and scheduling of nurses for taking care of COVID-19 patients using novel method of hybrid salp swarm algorithm and genetic algorithm. Applied Soft Computing. 2021;108:107449. doi: 10.1016/j.asoc.2021.107449.

- 83. Zhou B., Arandian B. An improved CNN architecture to diagnose skin cancer in dermoscopic images based on wildebeest herd optimization algorithm. Computational Intelligence and Neuroscience. 2021;2021:7567870. doi: 10.1155/2021/7567870.

- 84. Davoudi A., Ahmadi M., Sharifi A., et al. Studying the effect of taking statins before infection in the severity reduction of COVID-19 with machine learning. BioMed Research International. 2021;2021:12. doi: 10.1155/2021/9995073.

- 85. Hassantabar S., Ahmadi M., Sharifi A. Diagnosis and detection of infected tissue of COVID-19 patients based on lung X-ray image using convolutional neural network approaches. Chaos, Solitons & Fractals. 2020;140:110170. doi: 10.1016/j.chaos.2020.110170.

- 86. Yu Z., Wang Z., Yu X., Zhang Z. RNA-seq-based breast cancer subtypes classification using machine learning approaches. Computational Intelligence and Neuroscience. 2020;2020:4737969. doi: 10.1155/2020/4737969.

- 87. Thilagaraj M., Arunkumar N., Govindan P. Classification of breast cancer images by implementing improved DCNN with artificial fish school model. Computational Intelligence and Neuroscience. 2022;2022:6785707. doi: 10.1155/2022/6785707. [Retracted]


Data Availability Statement

The data are available from the corresponding author upon request by email (amin.valizadeh@mail.um.ac.ir).