Abstract

Accumulated findings from studies in which implicit-bias measures correlate with discriminatory judgment and behavior have led many social scientists to conclude that implicit biases play a causal role in racial and other discrimination. In turn, that belief has promoted and sustained two lines of work to develop remedies: (a) individual treatment interventions expected to weaken or eradicate implicit biases and (b) group-administered training programs to overcome biases generally, including implicit biases. Our review of research on these two types of remedies finds that neither has established methods that durably diminish implicit biases and that neither has reproducibly reduced discriminatory consequences of implicit (or other) biases. That disappointing conclusion prompted our turn to strategies based on methods that have been successful in the domain of public health. Preventive measures are designed to disable the path from implicit biases to discriminatory outcomes. Disparity-finding methods aim to discover disparities that sometimes have obvious fixes, or that at least suggest where responsibility should reside for developing a fix. Disparity-finding methods have the advantage of being useful in remediation not only for implicit biases but also for systemic biases. For both of these categories of bias, causes of discriminatory outcomes are understood as residing in large part outside the conscious awareness of individual actors. We conclude with recommendations to guide organizations that wish to deal with biases for which they have not yet found solutions.

Keywords: implicit bias, systemic bias, public health, disparity finding, prevention

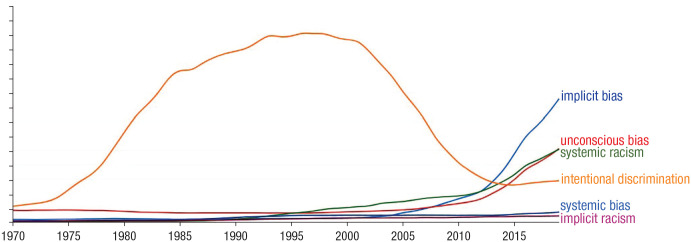

Figure 1 plots, over a 50-year period, appearances in English-language books of six discrimination-related terms. This historical record reveals a 40-year dominance and subsequent decline of “intentional discrimination” relative to the other five. The 1970 to 2010 dominance of “intentional discrimination” likely had roots in Title VII of the Civil Rights Act of 1964, which declared it illegal to discriminate on the basis of “race, color, religion, sex, or national origin.” Especially in the first several decades after Title VII became law, its phrase “on the basis of” was treated by most courts in the United States as requiring evidence of a decision maker’s intent to discriminate. The decline in uses of “intentional discrimination” after 2000, combined with rises in the use of three other terms—“unconscious bias,” “implicit bias,” and “systemic racism”—signals a substantial change in both scientific and public understanding of discrimination.

Fig. 1.

Usage, from 1969 through 2019, of six concepts prominent in scholarly understanding of intergroup discrimination. This plot was produced in Google Ngram (https://books.google.com/ngrams/) by entering the six two-word terms, separated by commas, into the Ngram Viewer’s search box.

Implicit bias was developed in psychology as a label for mental associations that, when triggered by demographic characteristics such as race, gender, or age, can influence judgment and behavior. The “implicit” modifier marks two characteristics of implicit bias: It is a bias that (a) manifests (and can be measured) indirectly and (b) can operate without those who perpetrate discrimination needing to be aware either of their biased associations or of the role those associations play in guiding their judgment and action.

Explicit bias (not shown in Fig. 1, but used less than “systemic bias”) identifies intergroup attitudes and stereotypes of which their possessors are aware. This awareness allows for direct measurement using self-report (e.g., survey) measures. Before the first use of “implicit bias” with its current meaning (Greenwald & Banaji, 1995), “unconscious bias” was used in legal scholarship on discrimination, albeit without an established scientific understanding of what “unconscious” meant in the legal context. “Unconscious bias” continues to be used in legal scholarship and elsewhere as an approximate synonym of implicit bias.

Within a few years after first publication of the Implicit Association Test (IAT; Greenwald et al., 1998), the IAT’s most active developers stopped using the words “prejudice” and “racism” to describe implicit biases that could be measured with the IAT. The reason for this change was that nothing about the IAT’s procedure should prompt research subjects, while their classification latencies are being measured, to bear in mind the animus (hostility or antipathy) that is a central ingredient in most definitions of racism and prejudice (see 24 of these in Greenwald & Pettigrew, 2014). 1 In contrast with IAT measures, most self-report measures of (explicit) racial attitudes oblige subjects to actively contemplate hostile or disparaging sentiments about out-groups while reporting their agreement or disagreement with statements of those sentiments. Figure 1 suggests that researchers’ early choice not to use “implicit racism” may have kept use of that term to the low level it presently has.

Like “implicit racism,” “systemic racism” combines a first word that implies no intent (systemic) with a second one that implies hostile intent (racism). So that this article’s treatment will not be hampered by this potentially confusing juxtaposition, we proceed by using “systemic bias” where many others continue to use “systemic racism.” Although “systemic bias” already has established usage (see Fig. 1), its usage is vanishingly small in comparison with that of “systemic racism.” We use “systemic bias” to denote societal structures and processes that create, sustain, or exacerbate intergroup inequities without hostile intent. This includes phenomena for which the terms “institutional bias” and “structural bias” are also being used (unobjectionably). Systemic biases reside within the social system, not necessarily in the thought and decision processes of the actors who occupy the societal roles that produce the discriminatory consequences denoted by “systemic bias.”

As an example of systemic bias that occurs with no intent to harm, consider the home-buying decision of a family that happens to be White. If that family seeks high-quality public education—which depends on school funding, which depends on the school district’s tax revenues, which often depend on local property values, which themselves are influenced by a possibly long past history of racial segregation—that family will likely find a home in a neighborhood that is disproportionately White. Although the White family is not making their home-buying decision on the basis of race, the choice they make will likely be to invest in a predominantly White community, which will help to perpetuate existing residential racial segregation.

Preview

The concepts described and defined in this introduction will be used in the following five sections, headings of which are given here with only brief elaboration. Correctible Misunderstandings of Implicit Bias presents and corrects misunderstandings that have been propagated in public media and in some scientific treatments. What Is Known About Implicit Bias presents well-established findings of research, minimizing technical details. Research on Remedies for Implicit Bias reviews research on methods to remediate implicit biases, including the two methods that have received greatest research attention: experimental mental-debiasing interventions and group-administered trainings. We find inadequate evidence for effectiveness of either of these two approaches, a conclusion that motivates our presentation in Treating Discriminatory Bias as a Public-Health Problem, which describes the usefulness of remedies modeled on effective public-health strategies. Recommendations describes four strategies expected to reduce discriminatory consequences of implicit and systemic biases, concluding with an organizational self-test to assess the extent to which an organization has already adopted remedial approaches consistent with this article’s recommendations.

Correctible Misunderstandings of Implicit Bias

Media descriptions of psychological research on implicit bias have created public awareness that discrimination can be perpetrated by persons who lack intent to discriminate. These media presentations often describe implicit bias as a recognized cause of disparities associated with differences in race, gender, ethnicity, age, disability, socioeconomic status, sexual orientation, and other demographic characteristics. Accompanying the public-education value of many of these media presentations, there have been some problematic side effects in the form of misunderstandings, which are described and corrected here. As will be seen, scientists are not free of responsibility for occasional misunderstandings.

Misunderstanding 1: The IAT and other indirect measures assess prejudice and racism

Correction: Indirect measures capture associative knowledge about groups, not hostility toward them

This misunderstanding surfaced early, appearing in a few research reports by those working most actively to develop the IAT as a research procedure. As noted in our introduction, active efforts to correct this misunderstanding were made as soon as it became apparent that it was a mistake to equate implicit bias with racism or prejudice.

A related misunderstanding is that good people do not possess implicit biases. To the contrary, the mental associations that constitute implicit biases are unavoidably acquired from the cultural atmosphere in which one is immersed daily. This cultural immersion includes literature, visual entertainment media, and audio and print news media and is also embodied in long-established practices of many public and private institutions, including the gender typing and race typing associated with many occupations. Short of imposing severe social deprivation, shielding children from their cultural environment appears not only unachievable but also possibly quite undesirable because valuable cultural content would be lost along with the stereotypes that one might avoid. There is no evidence that scrupulously nondiscriminatory beliefs and practices of parents effectively shield their children from passive acquisition of the stereotypes and attitudinal associations that pervade the larger societal environment.

The foregoing notwithstanding, any account of the connection between implicit race bias and explicit forms of racism must take into account the striking evidence for a large-scale decline in explicit race bias in the United States and elsewhere during the second half of the 20th century. 2 Recent events (in 2020 and 2021) have made it widely apparent that hostile racism has not disappeared in the United States, regardless of what survey data reveal. Nevertheless, it is widely understood that the United States is currently characterized by a systemic form of White-favoring bias that is embodied in social institutions, policies, and practices. If one accepts the plausible and widely held assumption that implicit biases arise from widely shared, lifelong exposure to a surrounding culture (i.e., a social system), then it may be reasonable to understand implicit bias as one of the forms in which systemic bias occurs.

Misunderstanding 2: Implicit measures predict spontaneous (automatic) behavior but do not predict deliberate (controlled, rational) behavior

Correction: Implicit measures predict both spontaneous and deliberate behavior

Responsibility for this misunderstanding belongs more to scientists than to journalists. The origin of this belief was Fazio’s (1990) influential two-process theory, which proposed that attitudes could operate both via a spontaneous/automatic route and via a more effortful/deliberative route. The prediction that many took from Fazio’s theory (even though Fazio did not) was that implicit (presumed automatic) measures should therefore predict spontaneous but not deliberate behavior and that explicit (self-report) measures should predict deliberate but not spontaneous behavior (e.g., Asendorpf et al., 2002; Dovidio et al., 2002; Payne & Gawronski, 2010). The portion of this prediction having to do with explicit measures—that they predict deliberate, but not spontaneous, behavior—has been substantially confirmed in three meta-analyses (Cameron et al., 2012; Greenwald et al., 2009; Kurdi et al., 2019). However, the same three meta-analyses also found that the portion of the prediction having to do with implicit measures was not supported. All three meta-analyses found that implicit measures were equally effective in predicting both deliberate and spontaneous behavior.

Misunderstanding 3: Implicit and explicit biases are unrelated to each other

Correction: Implicit and explicit biases are almost invariably positively correlated

Knowing only that implicit race biases are more pervasive than explicit race biases, many (including many psychologists) have assumed that implicit and explicit biases are unrelated. This assumption has now been discredited in six substantial meta-analyses that examined correlations between implicit and explicit measures of a wide variety of attitudes and stereotypes. The finding in all six meta-analyses was that parallel implicit and explicit measures of individual attitudes or stereotypes are almost invariably positively intercorrelated (for a review, see Greenwald & Lai, 2020). These same meta-analyses also established that correlations of implicit intergroup attitudes and stereotypes (implicit biases) with parallel self-report measures are smaller than correlations of implicit and explicit measures in nonintergroup domains, such as political attitudes and consumer attitudes (Greenwald et al., 2009). The finding that average implicit–explicit correlations are weaker for intergroup attitudes and stereotypes than for other attitudes and stereotypes has been widely misconstrued as indicating that implicit–explicit correlations for intergroup attitudes and stereotypes are approximately zero. In actuality, these intergroup correlations are almost invariably numerically positive. A widely suspected explanation of the smaller magnitude of intergroup (than other) implicit–explicit correlations is that explicit (self-report) measures of intergroup attitudes have additional influences, such as self-presentation concerns, that do not affect implicit measures.
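The suspected explanation can be made concrete with a small simulation. It is a sketch under one loudly stated, hypothetical assumption: that self-presentation concerns add variance to the self-report measure that is unrelated to the implicit measure. The function names and parameter values (`base_r`, `self_presentation_sd`) are invented for illustration and are not estimates from any study.

```python
import random

def pearson_r(xs, ys):
    """Pearson correlation of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / (sxx * syy) ** 0.5

def simulated_r(self_presentation_sd, n=20_000, base_r=0.4, seed=1):
    """Simulated implicit-explicit correlation (all parameters hypothetical).

    Assumption: apart from self-presentation, the two measures correlate at
    base_r; self-presentation adds independent noise to the explicit score only.
    """
    rng = random.Random(seed)
    implicit, explicit = [], []
    for _ in range(n):
        i = rng.gauss(0, 1)
        e = base_r * i + (1 - base_r ** 2) ** 0.5 * rng.gauss(0, 1)
        e += rng.gauss(0, self_presentation_sd)  # affects self-report only
        implicit.append(i)
        explicit.append(e)
    return pearson_r(implicit, explicit)

for sd in (0.0, 1.0, 2.0):
    print(sd, round(simulated_r(sd), 2))
```

Under these assumptions the expected correlation is `base_r / sqrt(1 + sd**2)`, roughly .40, .28, and .18 for the three values printed: smaller as the added influence grows, but still positive.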

Misunderstanding 4: It is scientifically established that long-established implicit biases are durably modifiable

Correction: With only occasional exceptions, experimental attempts to reduce long-established biases have not found that they are durably modifiable

The term “debiasing” is frequently used in discussions of remedies for implicit bias. In the case of implicit biases, debiasing has two goals: (a) reducing scores on measures of implicit biases (mental debiasing) and (b) reducing discriminatory behavior that might result from implicit biases (behavioral debiasing). Most experimental studies have been limited to the mental-debiasing goal, which is almost always measured in the same session as administration of the intervention being tested. As a result, there is very little evidence for durability of modifications in implicit biases produced by interventions.

Between the publication of a literature review by Blair (2002) and a collection of 17 experimental studies by Lai et al. (2014), empirical findings from studies of implicit-bias-reducing interventions were widely interpreted as supporting a conclusion that implicit biases are malleable (meaning durably modifiable). However, almost all of the mental-debiasing interventions in these numerous published reports were conducted in single-session studies, and posttests were typically obtained within 15 min after relatively brief interventions. Understanding of these results changed after Lai et al. (2016) compared effects from identical interventions as measured in within-session posttests and in posttests delayed by a day or more. The study established that interventions that were reliably effective when tested within the intervention session showed no effects when the posttests were delayed. This evidence and its interpretation are reviewed later under the heading Research on Remedies for Implicit Bias.

Misunderstanding 5: Group-administered procedures (often called antibias or diversity training) that are widely offered to reduce implicit race (and other) biases are effective methods of mitigating discriminatory bias

Correction: Scholarly reviews of the effectiveness of group-administered antibias or diversity-training methods have not found convincing evidence for their mental or behavioral debiasing effectiveness

Outside the laboratory, many who identify themselves as trainers offer group-administered interventions that they describe at least in part as designed to produce mental or behavioral debiasing. The absence of evidence for the effectiveness of either of these types of debiasing from group-administered trainings is understandable, given that few trainers have had professional training in the skills needed to design and conduct useful evaluations of the trainings they offer and that conducting such evaluations is rarely requested by their organizational clients.

Our encounters with descriptions of group-administered training strategies yielded the following list of advertised mental-debiasing methods: (a) exposure to counterstereotypic exemplars of members of stereotyped groups; (b) instruction to form and to remember intentions to avoid bias; (c) advice to act slowly when making decisions that might be biased (e.g., pausing to think, meditating); (d) learning about the existence and pervasiveness of implicit biases; and (e) discovering one’s own implicit biases by taking available online IAT measures. With the exception of counterstereotypic exemplars and remembering intentions, these methods have not been substantially tested for effectiveness in experiments. The two methods that have been researched have shown effects on IAT measures in within-session posttests but have not been found to yield reproducibly durable mental debiasing. Evidence for effectiveness of group-administered training is considered in detail later in this article under the Research on Remedies for Implicit Bias heading.

What Is Known About Implicit Bias

This section divides what is known about implicit bias into (a) plausible assertions that deliberately go a bit beyond what has been established by empirical research (three “quasiconclusions”), (b) knowledge based solidly on research evidence from studies using the IAT, and (c) critiques (both resolved and unresolved) of IAT research, followed by a brief list of important questions that await research answers.

Three quasiconclusions about implicit bias

“Quasi” can be defined as “almost, but not quite.” The causation, pervasiveness, and awareness statements in this section are all well rooted in reproducible empirical findings. At the same time, each of these statements at least mildly exceeds what can be empirically established either now or in the near future. These shortcomings do not prevent the three statements from being useful for practitioners interested in remediation of problems in which implicit biases are likely to be involved.

Causation: Implicit bias is a plausible cause of discriminatory behavior

A large body of research on how implicit-bias measures succeed or do not succeed in predicting discriminatory judgment and behavior has been summarized in three meta-analyses (Greenwald et al., 2009; Kurdi et al., 2019; Oswald et al., 2013). These meta-analyses collectively established that implicit-bias measures reliably predict discriminatory judgments and behavior. The observed correlations (rs) were typically small to moderate, meaning that most were between .05 and .30. Combined over the three meta-analyses, predictive-validity correlations averaged .165. Oswald et al. argued that these correlations were too small to be of practical significance, repeatedly characterizing them as “poor predictors” (pp. 171, 179, 182, 186). (That concern is evaluated under the Controversies heading later in this section.) Greenwald et al. (2015) showed that even correlations substantially smaller than the observed aggregate of .165 were “large enough to explain discriminatory impacts that are societally significant either because they can affect many people simultaneously or because they can repeatedly affect single persons” (p. 553).
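One conventional way to see what a correlation of this size can mean in practice, not used by the authors here, is the binomial effect size display (BESD). Under its idealizing assumption that both variables are split at 50%, it re-expresses r as two outcome rates, 0.5 − r/2 and 0.5 + r/2. The sketch below also multiplies the rate difference by a number of decisions to illustrate, hypothetically, how a small per-decision effect accumulates when it affects many people; the decision count and function names are invented for illustration.

```python
def besd_rates(r):
    """Binomial effect size display: outcome rates of 0.5 - r/2 and 0.5 + r/2."""
    return 0.5 - r / 2, 0.5 + r / 2

def excess_outcomes(r, n_decisions):
    """Hypothetical number of additional outcomes in the higher-rate group,
    per n_decisions decisions in each group, under the BESD idealization."""
    low, high = besd_rates(r)
    return (high - low) * n_decisions

low, high = besd_rates(0.165)
print(f"{low:.4f} vs {high:.4f}")
print(round(excess_outcomes(0.165, 10_000)))
```

For r = .165 this prints rates of 0.4175 versus 0.5825 and an excess of about 1,650 outcomes per 10,000 decisions per group, even though r² is only about .027.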

“Correlation does not equal causation” is a widely known (and valid) social science aphorism. Even so, predictive-validity correlations are consistent with a causal interpretation, and this correlational evidence can be compelling about causation when there are no plausible alternative interpretations. For implicit biases, there is at least one plausible alternative interpretation—that implicit-bias measures are themselves shaped by the same developmental experiences that produce discriminatory behavior. Alternatively stated, implicit biases and discriminatory behavior have one or more shared causes. The choice between a direct-cause interpretation and a shared-cause interpretation will likely remain unresolved until methods are available to study implicit biases as they emerge in early childhood. In the present circumstance of likely continued ignorance, the causal interpretation can be regarded as useful because it is (a) simple (parsimonious), (b) intuitive (plausible), and (c) almost certain not to be empirically refuted in the foreseeable future.
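Why correlational data alone cannot separate the two interpretations can be shown with a minimal simulation. In the hypothetical shared-cause structure, a common cause (named `experience` here purely for illustration) influences both a bias measure and a behavior measure, with no path between them. In the direct-cause structure, bias affects behavior directly. The loadings are arbitrary assumptions, chosen so that both structures should yield a correlation near .16.

```python
import random

def pearson_r(xs, ys):
    """Pearson correlation of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / (sxx * syy) ** 0.5

def shared_cause(n, a, b, rng):
    """Bias and behavior both depend on a common cause; neither affects the other."""
    bias, behavior = [], []
    for _ in range(n):
        experience = rng.gauss(0, 1)  # hypothetical shared developmental cause
        bias.append(a * experience + (1 - a ** 2) ** 0.5 * rng.gauss(0, 1))
        behavior.append(b * experience + (1 - b ** 2) ** 0.5 * rng.gauss(0, 1))
    return pearson_r(bias, behavior)

def direct_cause(n, c, rng):
    """Bias directly affects behavior with standardized path coefficient c."""
    bias, behavior = [], []
    for _ in range(n):
        x = rng.gauss(0, 1)
        bias.append(x)
        behavior.append(c * x + (1 - c ** 2) ** 0.5 * rng.gauss(0, 1))
    return pearson_r(bias, behavior)

rng = random.Random(42)
print(round(shared_cause(20_000, 0.4, 0.4, rng), 2))  # expected near 0.4 * 0.4 = 0.16
print(round(direct_cause(20_000, 0.16, rng), 2))      # expected near 0.16
```

Both printed correlations should be close to .16, so the observed correlation by itself does not reveal which structure generated the data.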

Pervasiveness: Implicit bias is considerably more widespread than is generally expected

Studies using parallel IAT and self-report (explicit) measures of the same biases have found consistently, even if not invariably, that implicit measures indicate greater attitudinal or stereotype bias than do parallel self-report measures. When the two types of measures are compared in standard-deviation units, implicit measures most often show stronger biases, measured as greater differences from neutrality. It follows that implicit biases must be possessed by many who lack explicit biases. Egalitarians who are unaware of well-established research findings might expect or assume that implicit bias is no more widespread than explicit bias and that those who lack explicit race preference (such as themselves) should not expect that they possess any implicit race preferences. Unsurprisingly, a good portion of egalitarians—persons who lack explicit race bias—are distressed to obtain a race-attitude IAT score that classifies them as having moderate or strong automatic preference for White relative to Black. 3
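A comparison “in standard-deviation units” can be sketched by dividing each measure’s mean distance from its neutral point by its standard deviation (a one-sample standardized difference). The sketch assumes each measure has a defined neutral point (0 for an IAT D score; the midpoint of a rating scale). The score lists are invented solely to show the computation and are not research data.

```python
from statistics import mean, stdev

def d_from_neutral(scores, neutral=0.0):
    """Mean distance from the neutral point, in sample-SD units."""
    return (mean(scores) - neutral) / stdev(scores)

# Hypothetical scores, for illustration only
iat_scores = [0.6, 0.2, 0.5, -0.1, 0.4, 0.3]   # IAT D scores, neutral point = 0
rating_scores = [4, 5, 4, 3, 4, 5]             # 1-7 self-report rating, neutral point = 4

print(round(d_from_neutral(iat_scores), 2))
print(round(d_from_neutral(rating_scores, neutral=4), 2))
```

Standardizing this way puts measures with different raw scales on a common footing, so their distances from neutrality can be compared directly.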

This pervasiveness conclusion is plausible to many scientists as well as to laypersons who are familiar with some of the scientific research on implicit bias. Nevertheless, the part of the conclusion expressed as “more widespread than generally expected” cannot be evaluated empirically because there are no empirical studies of expected pervasiveness of implicit biases. For those who wish to be correspondingly cautious, the pervasiveness conclusion might instead be stated as “expressions of implicit bias are considerably more widespread than are expressions of explicit bias.”

Awareness: Implicit bias may produce discriminatory behavior in persons who are unaware of being biased

Statement of this awareness belief prompts a question: How does a person become aware of possessing an implicit bias? One possible route is to have an implicit bias revealed by taking an IAT (preferably more than once). A second route is to suspect that one possesses an implicit bias after having learned about the pervasiveness of implicit biases. A third route is to use knowledge of one’s explicit attitude or stereotype as the basis for guessing the parallel implicit attitude or stereotype. The consistently observed positive correlations of IAT measures with parallel self-report (explicit) measures (described later in this section) can make this third strategy moderately effective, even though implicit biases are often possessed by people who lack explicit biases (see Note 3). Hahn et al. (2014) have suggested a fourth route, hypothesizing that people have introspective access to their implicit biases. Their conclusion poses the challenge of identifying methods that can produce convincing evidence for or against their hypothesis. (This topic is returned to under this section’s concluding heading, What Is Not Yet Known.)

Validity of IAT measures

Some of the material in this section may be difficult to follow for a reader without some understanding of the IAT’s procedure. Most references to IAT measures in this article are to the standard form of the IAT, which has seven sets (blocks) of trials, each of which presents a stimulus (exemplar) belonging to one of the IAT’s two target categories or to one of its two attribute categories. Four of the seven blocks (ordinally, Blocks 3, 4, 6, and 7) present combined tasks in which exemplars from one pair of categories appear on all odd-numbered trials and exemplars from the other pair appear on all even-numbered trials. (A full description of the standard form of the IAT appears in Appendix A. For those who have not encountered an IAT measure, it may be even more useful to go to a website where one can try out one or more IAT measures: https://implicit.harvard.edu/implicit/.)
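The seven-block structure can be summarized in a small sketch. Blocks 3, 4, 6, and 7 are the combined tasks described above. Blocks 1, 2, and 5 are assumed here to be practice blocks for targets alone, attributes alone, and targets with switched key sides, as in the standard procedure; Appendix A is the authoritative description. Which category pair fills odd-numbered trials is left as a parameter because the text leaves it open. The class, function, and field names are hypothetical, and trial counts are omitted.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Block:
    number: int
    task: str               # "target", "attribute", or "combined"
    targets_reversed: bool  # True once target-category key sides are switched

# Assumed layout of the standard seven-block IAT (Appendix A is authoritative)
STANDARD_BLOCKS = [
    Block(1, "target", False),
    Block(2, "attribute", False),
    Block(3, "combined", False),
    Block(4, "combined", False),
    Block(5, "target", True),
    Block(6, "combined", True),
    Block(7, "combined", True),
]

def combined_trial_pairs(n_trials, odd_pair="target"):
    """Category pair supplying the exemplar on each trial of a combined block.

    Trials are numbered from 1; one pair fills all odd-numbered trials and the
    other pair fills all even-numbered trials.
    """
    even_pair = "attribute" if odd_pair == "target" else "target"
    return [odd_pair if t % 2 == 1 else even_pair for t in range(1, n_trials + 1)]

combined = [b.number for b in STANDARD_BLOCKS if b.task == "combined"]
print(combined)                 # [3, 4, 6, 7]
print(combined_trial_pairs(4))  # ['target', 'attribute', 'target', 'attribute']
```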

The respondent’s only instructed task during an IAT measure is to press a left key or a right key to classify each exemplar into its proper category. The same two response keys are used to classify target and attribute concepts. Between the first combined task (Blocks 3 and 4) and the second combined task (Blocks 6 and 7), the correct response sides for the two target categories are switched, while those for the two attribute categories stay the same. The implicit measure is determined mainly by the latency difference between these two combined tasks, which provides the numerator of the IAT’s D measure, computed with a scoring algorithm established in 2003 (Greenwald et al., 2003). The standard IAT procedure is described fully in Appendix A. The scoring method is described fully in Appendix B of Greenwald et al. (2021). An interpretation of how the measure succeeds follows.
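The core of the D computation can be sketched as follows. This is a simplified illustration, not the reference implementation. It keeps only these steps: dropping latencies above 10,000 ms; computing the mean latency difference between corresponding blocks of the two combined tasks (6 minus 3, 7 minus 4); dividing each difference by the standard deviation of all latencies in both of its blocks; and averaging the two ratios. It omits the exclusion of respondents with many very fast responses and assumes error trials are already scored with a built-in error penalty. Appendix B of Greenwald et al. (2021) gives the full method. The input format and function name are hypothetical.

```python
from statistics import mean, stdev

def iat_d_score(latencies, max_latency_ms=10_000):
    """Simplified IAT D score (sketch).

    latencies: dict mapping block numbers 3, 4, 6, 7 to lists of trial latencies (ms).
    Positive values mean responding was faster in the first combined task
    (Blocks 3 and 4) than in the second (Blocks 6 and 7).
    """
    # Drop implausibly long trials
    kept = {b: [t for t in ts if t <= max_latency_ms] for b, ts in latencies.items()}
    ratios = []
    for first, second in ((3, 6), (4, 7)):
        # "Inclusive" SD: computed over all trials from both blocks together
        inclusive_sd = stdev(kept[first] + kept[second])
        ratios.append((mean(kept[second]) - mean(kept[first])) / inclusive_sd)
    return mean(ratios)

example = {3: [600, 700], 6: [800, 900], 4: [600, 700], 7: [800, 900]}
print(round(iat_d_score(example), 2))  # 1.55
```

Dividing by an inclusive SD, rather than using a raw millisecond difference, scales each respondent’s latency difference by that respondent’s own variability.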

A theory of what the IAT measures

In the introductory presentation of the IAT, Greenwald et al. (1998) described it as a measure of association strengths with minimal elaboration. For example:

The usefulness of the IAT in measuring association strength depends on the assumption that when the two concepts that share a response are strongly associated, the sorting task is considerably easier than when the two response-sharing concepts are . . . weakly associated. (Greenwald et al., 2002, p. 8; stated similarly in Greenwald et al., 1998, p. 1469)

Multiple alternatives to the association-strength interpretation offered by others are treated later in this section under the heading Critiques of Measurement and Interpretation.

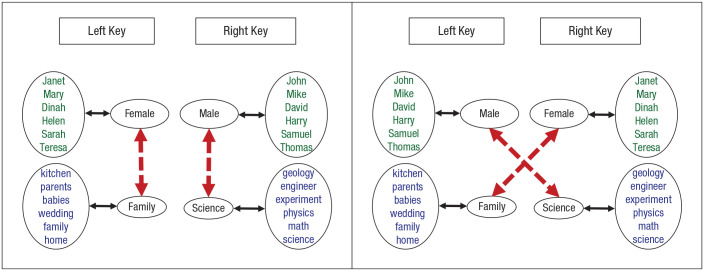

Figure 2 is an initial effort (19 years after the simple statement given above) at schematic description of how association strengths are theorized to be involved in responding to an IAT procedure. Figure 2 uses the category labels and exemplar stimuli of a gender–science stereotype IAT for a person who is assumed to have associations of male with science and of female with family. The figure depicts two levels of associations: (a) associations between categories (heavier double-ended arrows) and (b) associations of exemplar stimuli to categories (lighter double-ended arrows). The associations of category labels to exemplar stimuli are assumed to have been established by many experiences of contiguity in text and speech between the exemplars and their associated category labels. The stereotype-consistent associations between categories in Figure 2 (female with family; male with science) are assumed to have been formed by many life experiences, perhaps including more frequent encounters with male people in scientific roles and with female people in family roles. These category-level associations are what make it easy to give the same key-press response to exemplars of two associated categories. When instructions assign the same key to female and science, the (often strong) female = family association will interfere with producing that shared key press on trials that present female exemplars, because family is then assigned to the other key. In combination, these facilitation and interference effects of association strengths produce the difference in combined-task performance speeds that is captured in the IAT’s scoring method.
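The facilitation-and-interference account can be made concrete with a deliberately simple toy model. Every quantitative detail is a hypothetical assumption, not the authors’ model. Latency starts from a base value, is shortened in proportion to the category’s association with the category sharing its key, and is lengthened in proportion to its associations with categories on the other key. Exemplar-to-category associations (the lighter arrows) are ignored. The association strengths and coefficients are invented; the sketch shows only why the stereotype-consistent pairing should yield faster combined-task responding.

```python
def assoc(strengths, a, b):
    """Symmetric association strength between two categories (0 if none given)."""
    return strengths.get(frozenset((a, b)), 0.0)

def mean_latency(keys, strengths, base=700.0, facilitation=200.0, interference=200.0):
    """Toy mean latency (ms) for a combined block (hypothetical model).

    keys: two sets of categories; categories in the same set share a response key.
    """
    latencies = []
    for same_key, other_key in ((keys[0], keys[1]), (keys[1], keys[0])):
        for category in same_key:
            helps = sum(assoc(strengths, category, c) for c in same_key if c != category)
            hurts = sum(assoc(strengths, category, c) for c in other_key)
            latencies.append(base - facilitation * helps + interference * hurts)
    return sum(latencies) / len(latencies)

# Hypothetical association strengths for a person holding the male-science stereotype
strengths = {
    frozenset(("male", "science")): 0.8,
    frozenset(("female", "family")): 0.8,
    frozenset(("male", "family")): 0.2,
    frozenset(("female", "science")): 0.2,
}
consistent = mean_latency(({"male", "science"}, {"female", "family"}), strengths)
inconsistent = mean_latency(({"female", "science"}, {"male", "family"}), strengths)
print(consistent, inconsistent)
```

With these invented values the stereotype-consistent block averages about 580 ms and the inconsistent block about 820 ms; the gap comes from facilitation and interference acting together, as in the account above.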

Fig. 2.

Representation of associations involved in responding to an Implicit Association Test gender–science stereotype measure. The left panel shows four categories in a stereotype-consistent structure; associations link all categories and exemplars for the instructions that request a response to each key. The red arrows represent the stereotype-consistent associations. In the right panel, these associations cross between the keys, comprising a source of interference in providing the instructed responses.

Construct validity of IAT measures

Cronbach and Meehl (1955) described construct validity of psychological traits as resting on a nomological network, which they defined as “the interlocking system of laws which constitute a theory” (p. 290). They further wrote, “Construct validation is possible only when some of the statements in the network lead to predicted relations among observables” (p. 300). The evidence for construct validity of IAT measures therefore rests on studies of correlations of IAT-measured constructs with measures of other constructs with which they should, in theory, be correlated. This subsection summarizes evidence concerning the correlational nomological network in which IAT measures reside. 4

IAT measures predict discrimination in judgment and behavior

After the IAT’s initial publication, most IAT researchers, including the measure’s creators, withheld judgment on whether the IAT provided useful measures of implicit biases. Greatest interest was in answering the predictive-validity question: Does the IAT predict intergroup discrimination? Publications appearing in the early 2000s provided a mixture of “yes” answers (statistically significant correlations) and “no” answers (nonsignificant correlations). It was only when enough research had accumulated to conduct a quantitative (meta-analytic) review that this question began to be answered. The first meta-analysis was published in 2009 (Greenwald et al., 2009). Three of nine areas of research reviewed by Greenwald et al. involved intergroup discriminatory behavior. Subsequently, two other predictive-validity meta-analyses focused on intergroup discriminatory behavior were published (Kurdi et al., 2019; Oswald et al., 2013). The findings of all three meta-analyses revealed consistently small to moderate effect sizes of predictive-validity correlations. Greenwald et al. (2009) reported an average correlation (r) for intergroup domains of .21 (62 samples). Oswald et al. (2013) found an average predictive-validity correlation (r) of .14 (86 samples). Kurdi et al. (2019) did not report an average predictive-validity correlation for their meta-analysis. In a personal communication, Kurdi reported that this average correlation was .10 (253 samples).
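Each meta-analysis combines many sample correlations into one average, and the three cited here did not necessarily use the same method. One common approach, sketched below, converts each r to Fisher’s z, weights each z by n − 3 (approximately the inverse of its sampling variance), averages, and converts back to r. The correlations and sample sizes are hypothetical, not values from these meta-analyses.

```python
import math

def fisher_z_mean(rs, ns):
    """Inverse-variance-weighted mean correlation via Fisher's z transformation."""
    zs = [math.atanh(r) for r in rs]   # r -> z
    weights = [n - 3 for n in ns]      # var(z) is approximately 1 / (n - 3)
    z_bar = sum(w * z for w, z in zip(weights, zs)) / sum(weights)
    return math.tanh(z_bar)            # z -> r

# Hypothetical samples: correlations and sample sizes
rs = [0.05, 0.30, 0.12, 0.20]
ns = [80, 40, 200, 120]
print(round(fisher_z_mean(rs, ns), 3))
```

Weighting by sample size lets larger, more precise samples count for more; other meta-analytic conventions (for example, averaging raw r weighted by n) give similar but not identical results.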

Many expected known-groups differences are confirmed

Known-groups IAT studies are tests of differences in an IAT measure between two groups of subjects who differ in a characteristic that should be associated with either an attitude (association with valence) or an identity (association with self). Even though typically reported as a test of the difference between means for two groups, the statistical outcome is equivalent to that for a correlation in which one of the two correlated variables is a dichotomy. Expected group differences in both implicit attitudes and implicit identities are readily observed on IAT measures when the two groups differ in (among many other things) race, gender, ethnicity, political attitudes, consumer brand preferences, religion, nationality, and university affiliations. When exceptions occur, they are informative about the consequences of stigmatized identities. The best-known case of this is the surprising absence of a difference, as a function of respondents’ own age, in the strong IAT-measured association of old age (relative to young age) with negative valence (see top row of Table 5 in Nosek et al., 2007).
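The equivalence between a two-group comparison and a correlation with a dichotomous variable can be checked numerically. The sketch computes the pooled-variance t statistic for two groups and the Pearson (point-biserial) correlation between group membership, coded 0/1, and scores. The two are linked by r = t / sqrt(t² + df), with df = n₁ + n₂ − 2. The scores are invented for illustration.

```python
from statistics import mean, variance

def pooled_t(group0, group1):
    """Two-sample t statistic with pooled variance (group1 minus group0)."""
    n0, n1 = len(group0), len(group1)
    sp2 = ((n0 - 1) * variance(group0) + (n1 - 1) * variance(group1)) / (n0 + n1 - 2)
    return (mean(group1) - mean(group0)) / (sp2 * (1 / n0 + 1 / n1)) ** 0.5

def point_biserial(group0, group1):
    """Pearson r between a 0/1 group code and the scores."""
    xs = [0] * len(group0) + [1] * len(group1)
    ys = list(group0) + list(group1)
    mx, my = mean(xs), mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / (sxx * syy) ** 0.5

# Hypothetical IAT D scores for two known groups
g0 = [0.10, 0.25, -0.05, 0.30, 0.15]
g1 = [0.45, 0.60, 0.35, 0.50, 0.20, 0.55]
t = pooled_t(g0, g1)
df = len(g0) + len(g1) - 2
print(round(point_biserial(g0, g1), 4), round(t / (t ** 2 + df) ** 0.5, 4))
```

The two printed values agree, which is why known-groups differences can be read as part of the same correlational network as other validity evidence.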

IAT attitude measures and parallel self-report measures are nearly uniformly positively correlated

This well-established conclusion is supported by findings from six large studies that reported correlations between parallel implicit (IAT) and self-report (explicit) measures for many attitudes. In a meta-analysis of 126 studies, Hofmann et al. (2005) reported that the average implicit–explicit correlation (r) was .24. In Nosek et al.’s (2007) study reporting on Project Implicit’s Internet-obtained volunteer data, implicit–explicit correlations were positive for 17 IAT measures (a few of them of stereotypes), and the weighted average r was .27. Greenwald et al.’s (2009) meta-analysis, based on 155 independent samples, found average implicit–explicit correlations of .21. In 57 experimental studies, Nosek (2005) reported an average implicit–explicit correlation of .36. For 95 experimental studies conducted via Internet, Nosek and Hansen (2008) reported an average implicit–explicit correlation of .36. Kurdi et al. (2019, personal communication) found an average implicit–explicit correlation of .12 for 160 studies limited to the domain of intergroup behavior.

Because of the generally positive correlations between IAT and self-report measures, these two types of measures very often agree in direction, meaning that both have means on the same side of their zero points. Nevertheless, it is possible (although relatively infrequent) for the means of parallel IAT and self-report measures to disagree in direction. The best-known example is for measures of White versus Black attitudinal preference, for which IAT and self-report are positively correlated (e.g., r = .207 in Nosek et al., 2007, Table 2). This positive correlation notwithstanding, demographically diverse research samples often show explicit preferences in the pro-Black direction, on average, accompanying moderate to strong White preferences on IAT measures. Samples limited to African American respondents often show the same directional discrepancy with the magnitudes reversed: small White preferences on the race-attitude IAT, on average, accompanying strong Black preferences on explicit attitude measures.
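That a positive correlation can coexist with means on opposite sides of zero follows from the fact that a correlation is unaffected by shifting either variable's mean. The simulation below is a sketch with hypothetical parameters (correlation .2, implicit mean +0.7, explicit mean −0.3, where positive means White preference); none of these values is taken from a specific study.

```python
import math
import random


def pearson_r(x, y):
    """Pearson correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def simulate(n, rho, mean_implicit, mean_explicit, seed=1):
    """Draw n standardized (implicit, explicit) pairs with correlation rho,
    then shift each variable to the requested mean."""
    rng = random.Random(seed)
    implicit, explicit = [], []
    for _ in range(n):
        z1, z2 = rng.gauss(0, 1), rng.gauss(0, 1)
        implicit.append(mean_implicit + z1)
        explicit.append(mean_explicit + rho * z1 + math.sqrt(1 - rho ** 2) * z2)
    return implicit, explicit


# Hypothetical values: positive = White preference, negative = Black preference.
implicit_scores, explicit_scores = simulate(
    n=20000, rho=0.2, mean_implicit=0.7, mean_explicit=-0.3
)

print("r =", round(pearson_r(implicit_scores, explicit_scores), 2))
print("implicit mean =", round(sum(implicit_scores) / len(implicit_scores), 2))
print("explicit mean =", round(sum(explicit_scores) / len(explicit_scores), 2))
```

The correlation comes out near .2 while the two means fall on opposite sides of zero, mirroring the race-attitude pattern described above.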

IAT findings give birth to balanced identity theory

The earliest IAT studies focused on measures of attitudes, of self-esteem, and of stereotypes, often with interest in comparing the results of these IAT measures and parallel self-report measures. An early IAT study of gender stereotypes (Rudman et al., 2001) produced unexpected findings with remarkable theoretical implications. The unexpected findings were that (a) for a gender–warmth stereotype IAT, only women associated female (more than male) with warm (relative to cold), whereas men reversed the expected stereotype, associating male more than female with warm, and (b) for a gender–potency stereotype IAT, only men showed the expected stereotype of associating male (more than female) with strong (relative to weak), whereas women associated male and female equally with strong. Rudman et al. concluded that “people possess implicit gender stereotypes in self-favorable form because of the tendency to associate self with desirable traits” (p. 1164). Because their IATs used mostly positively valenced words for “warm” and “strong” and mostly negatively valenced words for “cold” and “weak,” these IATs confounded valence with trait (a practice now understood as one to be avoided; see Greenwald et al., 2021). The finding that the confound worked in different directions for men and women provided inspiration for balanced identity theory (BIT; Greenwald et al., 2002), which used a balance–congruity principle to predict relations among interrelated trios of measures of identity, self-esteem (or self-concept), and attitude (or stereotype). A meta-analysis recently reported by Cvencek et al. (2021) found that the novel correlational predictions of BIT’s balance–congruity principle were consistently confirmed in 36 studies, involving 12,733 subjects, that had tested these predictions with both IAT and self-report measures.

Balanced identity theory predicts the frequently observed positive correlation between self-esteem and social identity

Greenwald et al. (2002) described BIT as an intellectual descendant of several affective–cognitive consistency theories that flourished in the 1950s and 1960s. BIT’s most significant impact may be that it provided a new understanding of the interrelations among the central constructs of personality psychology (identities, self-concepts, and self-esteem) and the central constructs of social psychology (attitudes and stereotypes).

This new understanding was provided by BIT’s balance–congruity principle. The balance–congruity principle translates to the proposition that when two categories (A and B) are associated with the same third category (C), an association between A and B should strengthen as a multiplicative function of the strengths of the A–C and B–C associations. The balance–congruity principle’s name has roots in Heider’s (1958) balance theory and Osgood and Tannenbaum’s (1955) congruity theory. The role of self-esteem (association of self with positive valence) is important in applications of the principle because most people have strongly positive self-esteem. Consider a young girl with strongly positive self-esteem. For this girl, both female (A) and positive valence (B) are associated with self (C). It follows from the balance–congruity principle that the association between female (A) and positive valence (B) should strengthen, producing a positive attitude toward female. Any identity (e.g., male, young, Catholic, Swedish) can replace female in the category A role, generating the expectation that people with high self-esteem should have positive attitudes toward all of their identities. Further, the strength of the positive attitude should be predicted jointly by the strength of the identity association and the strength of the positive self-valence (self-esteem) association. The strength of positive attitudes toward one’s identities is therefore moderated by the magnitude of one’s self-esteem.
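The multiplicative form of the balance–congruity principle, and the moderation it implies, can be illustrated with a toy function. This is our simplification for exposition, not BIT's formal model: association strengths are hypothetical numbers on a 0 to 1 scale, and the scaling constant `k` is an assumption not found in the source.

```python
def predicted_attitude(identity_strength, self_esteem, k=1.0):
    """Toy multiplicative reading of the balance-congruity principle.

    identity_strength: strength of the A-C association (e.g., female-self), 0..1
    self_esteem: strength of the B-C association (self-positive valence), 0..1
    k: hypothetical scaling constant, not taken from the source
    Returns a predicted strength for the A-B (identity-positive valence) association.
    """
    return k * identity_strength * self_esteem


levels = (0.2, 0.5, 0.9)  # hypothetical association strengths
for esteem in levels:
    row = [round(predicted_attitude(ident, esteem), 2) for ident in levels]
    print(f"self-esteem {esteem}: attitude by identity strength {row}")

# Moderation: the slope of attitude on identity strength equals k * self_esteem,
# so the same increase in identity strength raises the predicted attitude more
# for people with higher self-esteem.
```

Because the prediction is a product, neither association alone determines the attitude; each one scales the effect of the other, which is what "moderated by the magnitude of one's self-esteem" means here.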

The correlational findings summarized in the foregoing paragraphs constitute the “nomological network” of “predicted relations among observables” on which construct validity of IAT measures rests (Cronbach & Meehl, 1955, p. 300). Two types of correlational evidence have been described. The first is evidence establishing expected positive correlations of IAT measures with (a) measures of discriminatory behavior and judgment; (b) membership in groups that are expected to differ in attitudes, identities, or both; and (c) parallel self-report measures. This evidence supports treating IAT measures as valid measures of attitudes and stereotypes but does not bear on theoretical interpretation of IAT measures as measures of association strengths. The second type of evidence comes from tests of BIT’s predictions for correlations within specified triads of measures of attitudes, identities, stereotypes, self-concepts, and self-esteem. Because those predictions depend on BIT’s definition of those social–cognitive constructs as having the form of mental associations, their confirmations support that associative theoretical understanding.

Critiques of IAT measurement and interpretation

This section presents questions that have been raised about interpretations of IAT data; most of these bear on construct validity. Each subsection starts with a brief statement of a “standard view” on a question, which is often a position contained in one or more of the early publications of IAT findings. This is followed by a paragraph briefly describing published critiques of the standard view and then by an evaluation of the merits of the contrasted views.

What psychological construct is measured by the IAT?

Standard view

The IAT measures strengths of associations between two dimensions, each identified by a contrasted pair of categories. For the Black–White race-attitude IAT, this would be (a) a valence dimension defined by the contrast of pleasant versus unpleasant valence and (b) a race dimension defined by the contrast of racial Black with racial White.

Critique

Alternative interpretations started to appear soon after the IAT’s initial 1998 publication. These included (in chronological order) differential familiarity of items in different categories (Ottaway et al., 2001), criterion shift (Brendl et al., 2001), figure-ground asymmetry (Rothermund & Wentura, 2001), task switching (Mierke & Klauer, 2001), salience asymmetry (Rothermund & Wentura, 2004), quadruple-process model (Conrey et al., 2005), category recoding (Rothermund et al., 2009), multinomial model (Meissner & Rothermund, 2013), and executive function (Ito et al., 2015; Klauer et al., 2010). Further description of each is available in Greenwald et al. (2020).

Evaluation

Most of the alternative conceptions predict the same findings as the association-strength interpretation. For the few that provide bases for competing predictions (differential familiarity, salience asymmetry, executive function), empirical tests of the competing predictions have not supported the critiques (see Greenwald et al., 2020). The alternatives that make at least a subset of the same predictions as the association-strength interpretation share with it a more general principle: IAT measures capture two opposed processes or representations in a way that yields a relative strength measure that correlates with other indicators of attitudes, stereotypes, identities, and self-esteem. What distinguishes the association-strength interpretation is that it is the only theoretical interpretation that can produce the novel predictions of BIT (Greenwald et al., 2002) that were described under the preceding Construct Validity heading. Consistent empirical confirmation of the BIT predictions (reviewed by Cvencek et al., 2021) favors the association-strength interpretation.

Do IAT measures have adequate test–retest reliability?

Standard view

Average test–retest reliability (r) of .50 for IAT measures was recently reported in a meta-analysis by Greenwald and Lai (2020). Similar results had been reported in previous reviews, including Nosek et al. (2007; r = .56) and Gawronski et al. (2017; r = .41). See also Payne et al. (2017, pp. 233–234).

Critique

The IAT’s test–retest reliability is too low for it to have individually diagnostic value. Payne et al. (2017) observed that “the temporal stability of [implicit] biases is so low that the same person tested 1 month apart is unlikely to show similar levels of bias” (p. 233).

Evaluation

Payne et al. (2017) did not provide data or statistical analysis to support their assertion that two administrations separated in time are “unlikely to show similar levels of bias.” Data from large-sample studies of IAT bias measures are useful in evaluating their assertion. Those studies have shown that intergroup-bias measures have means averaging about 0.7 SD from the zero values that indicate absence of bias (data from many respondents were first presented by Nosek et al., 2007, Table 2). Using the r = .50 estimate for test–retest reliability of IAT measures, application of well-known statistical properties of normal distributions produces conclusions that an individual-subject observation that is 0.7 SD from zero at Time 1 has (a) a 76% chance of being on the same side of zero at Time 2 and (b) a 58% chance of being greater than 0.5 SD from zero in the same direction at Time 2.
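The two percentages can be reproduced with the normal cumulative distribution function. The text does not spell out the computation; the sketch below assumes (our reading) that the Time 2 score is treated as normal with mean 0.7 SD and SD 1. For comparison, it also computes a bivariate-normal conditional version: because 0.7 SD is also the population mean, the conditional mean at Time 2 stays at 0.7 and the conditional SD shrinks to sqrt(1 − r²). That alternative is our addition, not the authors' stated method.

```python
import math


def normal_cdf(x):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1 + math.erf(x / math.sqrt(2)))


def prob_above(threshold, mean, sd):
    """P(X > threshold) for X ~ Normal(mean, sd)."""
    return 1 - normal_cdf((threshold - mean) / sd)


time1 = 0.7  # Time 1 score, in SD units from the zero (no-bias) point
r = 0.50     # test-retest reliability

# Reading that reproduces the figures in the text (our assumption):
# Time 2 ~ Normal(mean=0.7, sd=1).
p_same_side = prob_above(0.0, time1, 1.0)
p_beyond_half = prob_above(0.5, time1, 1.0)

# Bivariate-normal conditional alternative: sd shrinks to sqrt(1 - r^2).
cond_sd = math.sqrt(1 - r ** 2)
p_same_side_cond = prob_above(0.0, time1, cond_sd)
p_beyond_half_cond = prob_above(0.5, time1, cond_sd)

print(round(p_same_side, 2), round(p_beyond_half, 2))  # 0.76 0.58
print(round(p_same_side_cond, 2), round(p_beyond_half_cond, 2))
```

The conditional version yields somewhat higher probabilities, so under these assumptions the 76% and 58% figures appear to be on the conservative side.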

Does the IAT assess individual differences among persons or situation differences?

Standard view

In the article that introduced the IAT, Greenwald et al. (1998) described the IAT as a measure of “individual differences in implicit cognition.” This remains the standard view.

Critique

Payne et al. (2017) wrote that “most of the systematic variance in implicit biases appears to operate at the level of situations” and that “measures of implicit bias . . . are meaningful, valid, and reliable measures of situations rather than persons” (p. 236).

Evaluation

In the journal issue containing Payne et al.’s (2017) article, several of the invited commenters distributed influence on IAT measures more equally between person and situation than did Payne et al. In a later commentary, Connor and Evers (2020) pointed out that Payne et al. had interpreted the difference between correlations involving individual IAT data and large-N aggregates as indicating more “systematic variance” (p. 1331) in the group aggregates than in individual respondent data. This was incorrect, because aggregating multiple IAT scores into a mean score for the aggregate necessarily loses the (approximately) 50% of the systematic variability of individual scores that is due to between-person differences. That 50% figure, as Connor and Evers (2020) pointed out, is based on the expected test–retest reliability (r = .50) of individual IAT scores. Statistical tests using large-N aggregates have great statistical power to detect small effects in data from which individual-person variance has been removed by aggregation. As an alert undergraduate statistics student will know, the variance of a mean of N independent observations can be estimated by dividing the variance of individual observations by N.
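The variance-of-the-mean rule (σ²/N) can be checked by simulation. The sketch below uses hypothetical numbers (individual-score SD of 1, aggregates of 25 independent normal observations) and compares the empirical variance of many aggregate means with σ²/N. It illustrates only the statistical rule, not any particular IAT data set.

```python
import random
import statistics


def variance_of_means(pop_sd, n, reps, seed=0):
    """Empirical variance of `reps` sample means, each over n normal draws."""
    rng = random.Random(seed)
    means = [
        statistics.fmean([rng.gauss(0, pop_sd) for _ in range(n)])
        for _ in range(reps)
    ]
    return statistics.pvariance(means)


pop_sd, n = 1.0, 25  # hypothetical: individual-score SD of 1, aggregates of 25
observed = variance_of_means(pop_sd, n, reps=20000)
print(round(observed, 4), pop_sd ** 2 / n)  # observed vs. sigma^2 / N = 0.04
```

The observed value lands close to 0.04, showing how sharply aggregation shrinks the variability that individual differences contribute.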

Even setting aside the article by Payne et al. (2017) and its critiques, there is much support for the individual-differences interpretation of IAT measures from well-established correlational research reviewed under this article’s Construct Validity heading, including many published reports of (a) known-groups differences in IAT-measured attitudes, (b) known-groups differences in IAT measures of self-concepts or identities, (c) demonstrations of the IAT’s predictive validity, and (d) positive correlations of self-report measures of attitudes with parallel IAT attitude measures. All of these findings are consistent with the interpretation of IAT measures as measures of individual differences among persons.

Does the zero value of an IAT implicit-bias measure indicate absence of bias?

Standard view

The zero value for IAT attitude measures was described in the initial IAT publication as indicating “absence of preference” (Greenwald et al., 1998, p. 1476). As the IAT was extended to measurement of stereotypes, self-concepts, and identities, an interpretation of absence of difference between contrasted associations involved in the IAT’s combined tasks was maintained. For example, the zero value of a widely used IAT measure of gender–career stereotype is assumed to indicate equal association of male and female with career (relative to family).

Critique

The absence-of-difference interpretation of the IAT’s zero point has been criticized as being “arbitrary” (Blanton & Jaccard, 2006) and as “‘right biased,’ such that individuals who are behaviorally neutral tend to have positive IAT scores” (Blanton et al., 2015, p. 1468).

Evaluation

This critique is important because if the IAT’s zero point is misplaced, a corrective relocation could substantially alter the estimated proportion of a subject population that scores in a range indicative of nontrivial bias. The critiques by Blanton and colleagues stop short of identifying an alternative location for the indifference point of IAT measures. Until recently, there was no theoretically derived basis for empirically confirming the absence-of-difference (in association strengths) interpretation of the IAT’s zero value. The standard view was based on the intuition that equal speeds of performance in the IAT’s two combined tasks should indicate equal strengths of the associations assumed to be drawn on in the two combined tasks (see Fig. 2). The recent development is the publication by Cvencek et al. (2021) of a meta-analysis of studies of BIT that confirms the validity of the IAT measure’s zero value as indicating absence of preference. Development of the theoretical basis for these confirmations is given in both Greenwald et al. (2020, pp. 33–37) and Cvencek et al. (2021, pp. 191–194).
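The intuition behind the zero point (equal speeds in the IAT's two combined tasks yield a score of zero) can be illustrated with a simplified difference score. The function below is a hypothetical sketch loosely modeled on the widely used D measure: the mean latency difference between the two combined tasks divided by the SD of all latencies pooled. It deliberately omits the error handling, trial exclusions, and block-wise averaging of the published scoring algorithm, and the latencies are made up.

```python
import statistics


def simplified_difference_score(task1_ms, task2_ms):
    """Hypothetical, simplified IAT-style score:
    (mean latency in combined task 2 - mean latency in combined task 1)
    divided by the SD of all latencies pooled across both tasks.
    Omits the error handling, trial exclusions, and block-wise averaging
    of the published scoring algorithm."""
    diff = statistics.fmean(task2_ms) - statistics.fmean(task1_ms)
    pooled_sd = statistics.stdev(list(task1_ms) + list(task2_ms))
    return diff / pooled_sd


# Made-up latencies in milliseconds.
equal = simplified_difference_score([700, 750, 800], [700, 750, 800])
slower_task2 = simplified_difference_score([700, 750, 800], [800, 850, 900])
print(equal, round(slower_task2, 2))
```

Equal mean latencies give exactly zero, and slower performance in one combined task moves the score away from zero in that task's direction. Whether zero on such a score also marks equal association strengths is the substantive question that the BIT-based analyses address.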

Are the IAT’s correlations with measures of discriminatory judgment or behavior too small to be of any practical use?

Standard view

There is no standard view that identifies a correlational effect size for predictive validity that should be considered of practical importance. The criterion for significance in early IAT predictive-validity studies did not go beyond traditional use of Type I error probabilities (p values) to evaluate statistical significance.

Critique

In interpreting their meta-analysis’s finding of an aggregate correlation (r) of .140 for predictive validity of IAT measures of implicit bias, Oswald et al. (2013) concluded that “the IAT provides little insight into who will discriminate against whom” (p. 188). Oswald et al. (2015) similarly concluded that “IAT scores are not good predictors of ethnic or racial discrimination, and explain, at most, small fractions of the variance in discriminatory behavior in controlled laboratory settings” (p. 562).

Evaluation

In response to Oswald et al.’s (2013) initial critique, Greenwald et al. (2015) presented statistical simulations establishing that effect sizes even substantially smaller than the aggregate magnitudes found in the three published meta-analyses of IAT predictive validity (Greenwald et al., 2009; Kurdi et al., 2019; Oswald et al., 2013) “were large enough to explain discriminatory impacts that are societally significant either because they can affect many people simultaneously or because they can repeatedly affect single persons” (p. 553). Although not contesting the validity of Greenwald et al.’s simulations, Oswald et al. (2015) restated their prior observation that aggregate predictive-validity correlations were not “large enough” to have “substantial societal significance” (p. 565).
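One standard way to make correlations of this size concrete, different from the simulation approach of Greenwald et al. (2015), is the binomial effect size display (BESD). It re-expresses a correlation r as the "success" rates 0.5 + r/2 and 0.5 − r/2 for two equal-sized groups. The sketch applies it to the average correlations reported earlier in this article. It is an illustrative device only; it assumes dichotomized outcomes and is not the authors' analysis.

```python
def besd(r):
    """Binomial effect size display: re-expresses a correlation r as two
    'success' rates, 0.5 + r/2 and 0.5 - r/2, for two equal-sized groups."""
    return 0.5 + r / 2, 0.5 - r / 2


# Average predictive-validity correlations reported in the text.
for source, r in [("Greenwald et al. (2009)", 0.21),
                  ("Oswald et al. (2013)", 0.14),
                  ("Kurdi et al. (2019, personal communication)", 0.10)]:
    higher, lower = besd(r)
    print(f"{source}: r = {r:.2f} -> {higher:.1%} vs. {lower:.1%}")
```

Read this way, even r = .14 corresponds to a 57% versus 43% split, a difference that can matter when it applies to many decisions.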

The last three of the five critiques of IAT measures evaluated in this section appear to be largely resolved. However, the first two have potential to produce informative new empirical developments. For the first one (Are IAT measures tapping associative knowledge?), the viable alternatives have not yet generated enough empirical evidence to demonstrate that they are superior to the association-strength interpretation of IAT measures. For the second (Do IAT measures have adequate reliability?), the current answer is “yes, but.” The “but” is that research could be more efficient if reliability were stronger. A straightforward way to strengthen reliability is to use two or more administrations of an IAT measure within a research session, at the cost of increased data-collection time. Greater research efficiency may be achievable by finding methods to reduce the inherent statistical noise of latency-based measures. Success in this endeavor would be welcomed by the sizable community of cognition and social-cognition researchers who rely on latency measures.
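How much repeated administration might help can be estimated with the Spearman–Brown prophecy formula, which predicts the reliability of the average of k parallel measurements from the reliability of one. The sketch starts from the r = .50 single-administration estimate cited above. It assumes the administrations are strictly parallel, with equal reliability and independent errors; that assumption may not hold exactly for repeated IATs within one session.

```python
def spearman_brown(r_single, k):
    """Predicted reliability of the average of k parallel administrations,
    given the reliability r_single of a single administration."""
    return k * r_single / (1 + (k - 1) * r_single)


for k in (1, 2, 3, 4):
    print(k, round(spearman_brown(0.50, k), 3))
```

Under these assumptions, two administrations raise the expected reliability from .50 to about .67 and three to .75, with diminishing returns for each additional administration.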

What is not yet known about implicit bias

Although the first three questions listed after this paragraph have been discussed in multiple publications, they have not received confident answers. The last two have not yet received substantial attention. Investigations of all of these questions have potential both to afford new theoretical insights and to provide bases for useful applications. Some words of partial elaboration are added only for the last two. Further discussion of all of these questions is available in the consideration of a larger set of unanswered questions by Greenwald et al. (2020, pp. 39–45):

1. How do the association strengths measured by the IAT influence social behavior?
2. When, in child development, are implicit biases formed, and what are the experiences that form them?
3. Are implicit biases introspectively (consciously) accessible?
4. What are the effects of possessing implicit stereotypes tied to one’s own identities?
5. What is the effect of a person having implicit and explicit attitudes that differ?

Question 4 is suggested by an understanding of stereotype threat (Steele, 1997) as anxiety experienced by members of a stigmatized group who seek to excel in a domain in which their group is negatively stereotyped (e.g., pressure on women to perform well on a computer science test). To date, this form of conflict between an identity and a stereotype has been investigated almost exclusively in terms of explicit identities and stereotypes.

For Question 5, because of the typically positive correlation between implicit and explicit measures, large implicit–explicit discrepancies are not expected. However, there are some circumstances in which these discrepancies appear more than occasionally. The best-known example is that a substantial minority of Black respondents to the Black–White race-attitude IAT display the combination of White preference on the race-attitude IAT and strong Black preference on the parallel self-report attitude measure. There have been multiple studies with as-yet-inconclusive results seeking correlates of discrepancies between implicit and explicit self-esteem (see Cvencek et al., 2020, pp. 2–5). There has not yet been substantial research focused on consequences of other implicit–explicit discrepancies.

Research on Remedies for Implicit Bias

This section reviews methods considered as plausible or possible means of achieving mental or behavioral debiasing. The five subsections evaluate evidence on methods ranging from laboratory experimental interventions provided to one person at a time to educational approaches provided to assembled groups of participants.

Effects of experimental interventions

A variety of procedures designed to reduce implicit biases have been tested in experimental studies. The biases tested in these studies are often ones believed to be acquired early in life (for an overview of evidence for early acquisition, see Hailey & Olson, 2013). Such long-established implicit attitudes and stereotypes are mostly those associated with easily detected demographic characteristics (e.g., age, gender, and race). With the aid of IAT procedures adapted for use with preschool children, these implicit biases have been observed at ages as young as 4½ years (Cvencek et al., 2011). Unfortunately, the IAT method is not yet available for use earlier in childhood, when implicit biases are likely being established. Early established associations are likely not only to be strong but also to be sustained by interconnections within an associative-knowledge structure centered on the self (Greenwald et al., 2002). There is no theoretical basis for expecting these long-established implicit attitudes and stereotypes to be easily modifiable. By contrast, novel implicit attitudes or stereotypes (e.g., ones associated with previously unfamiliar persons or previously unknown groups) can easily be created and modified in experimental studies (e.g., Gregg et al., 2006). Such newly formed associations can even be reversed in valence when sufficient new information is provided (Mann et al., 2020; see also Cone et al., 2017).

Single-session experimental interventions

The first review of single-session implicit-bias interventions (Blair, 2002) included 24 studies that used the two most widely investigated measures of implicit biases, the IAT and sequential priming (Fazio et al., 1986). Only one of the 24 studies reviewed by Blair used a measure of treatment impact obtained at a time other than the intervention session, and it assessed impact just one day later (Dasgupta & Greenwald, 2001). Somewhat surprisingly, this heavy emphasis on testing the immediate impact of interventions persisted. In a 2019 meta-analysis of interventions designed to alter implicit measures, Forscher et al. (2019) found that only 38 of 598 studies (6.4%) included a posttest that was obtained other than within the session in which the intervention was administered (p. 530). Forscher et al. reported that this small proportion indicated “a lack of research interest in change beyond the confines of a single experimental session” (p. 542).

The first direct comparison of impact for immediate and delayed tests of single-session interventions was Lai et al.’s (2016) report of multiple tests involving eight interventions. Those eight interventions were ones that, in a previous collection of multiple single-session experiments by Lai et al. (2014), were found to reduce implicit race bias on immediate posttests. The eight effective interventions were in three categories: exposure to counterstereotypical exemplars, intentional strategies to overcome bias, and evaluative conditioning. Three categories that did not produce immediate impact were taking others’ perspectives, appeals to egalitarian values, and inducing emotion. Although Lai et al.’s 2016 study confirmed the previous observation of immediate effectiveness for all eight of the previously tested interventions, none of the eight displayed significant impact on posttests conducted after delays of just 1 or 2 days. Across all eight, the effects on delayed posttests averaged near zero (see Lai et al., 2016, Figure 2). Their 2016 results led Lai et al. to conclude that the interventions were “not changing implicit preferences per se, but are instead changing nonassociative factors that are related to IAT performance” (p. 1013) and that the findings were “a testament to how [implicit biases] remain steadfast in the face of efforts to change them” (p. 1014).

If the immediate changes in implicit-bias measures produced by at least three categories of brief interventions are not actually changes in implicit-bias associations, those changes must have some plausible theoretical explanation. We are aware of one plausible explanation: The observed ephemeral changes on implicit-bias measures may be priming effects of types that have been well established in social psychology since the 1970s. Social-psychological priming studies typically use brief interventions that activate a subset of representations associated with a mental category—for example, female scientists (a subset of females) or Black entertainers (a subset of all Black persons). The priming hypothesis is that the activated subcategory temporarily replaces a superordinate category that would have been activated but for the effect of the intervention. The activated subcategory can then be responsible for the observed temporary changes in IAT or other indirect measures of bias.

Interventions based on the contact hypothesis

Pettigrew and Tropp’s (2006) meta-analysis found broad support for the hypothesis that contact between members of two groups should be associated with increased liking or decreased disliking between the two groups. Largely because all of the 515 studies of intergroup contact research reviewed by Pettigrew and Tropp were reported before 2001, their review included no studies using implicit measures of attitudes or stereotypes. Because only 5% of the 713 independent samples analyzed by the authors were true experiments (i.e., with random assignment to contact versus control conditions; Pettigrew & Tropp, 2006, p. 755), the causal role of contact was indeterminate for the great majority of studies in their review.

Fortunately, more than a dozen intergroup-contact studies using dependent measures of implicit bias have been published since Pettigrew and Tropp’s review. These studies have found that more frequent or more favorable intergroup contact is associated with lower levels of implicit bias. However, most of these studies were correlational, either cross-sectionally (e.g., Turner et al., 2007) or longitudinally (e.g., Onyeador et al., 2020). To avoid the causal ambiguity of nonexperimental studies, we limit attention here to five studies that used experimental designs.

Turner and Crisp (2010) found that an intervention of imagined interaction, either with an elderly person or with a Muslim person, produced a lower level of implicit bias than was observed in their control conditions. Because their only posttest was within the same session as the intervention, there is insufficient basis for treating their observed treatment effect as a durable change in an implicit bias. This limitation did not apply to Vezzali et al.’s (2012) experiment with Italian fifth-grade students. After a 3-week intervention using imagined interactions with immigrants, their delayed posttest found that children who received that intervention displayed significantly lower implicit bias than did children in their control condition. Unfortunately, a subsequent replication study (Schuhl et al., 2019) did not reproduce Vezzali et al.’s finding of change remaining evident on a delayed posttest. Another imagined-contact study found reduced implicit bias at the end of the intervention session only for subjects who were initially high in prejudice (West et al., 2017); yet another found that imagined contact reduced implicit bias in a second session 24 hr after the experimental manipulation, but only when implicit-bias feedback was part of the intervention (Pennington et al., 2016). In summary, imagined contact has shown encouraging effectiveness in attenuating implicit bias under a limited set of conditions, but the durability of that effect has not been robustly established.

One of the most interesting and ambitious experimental tests of the contact hypothesis on implicit-bias reduction was a longitudinal study of White college students who were randomly paired with either a White or an African American dormitory roommate at the beginning of their first year in college (Shook & Fazio, 2008). The dependent measure of implicit racial attitudes was a sequential priming measure, administered both in the first 2 weeks (pretest) and in the final 2 weeks (posttest) of the 3-month academic term. The posttest revealed a significant difference between the two roommate conditions: The African American–roommate condition showed a significantly (p = .04) greater reduction in implicit race bias from pretest to posttest relative to the White-roommate condition. Given the high interest value of this result, it is disappointing that this finding has remained unreplicated for more than a decade. Replication would provide valuable support for the conclusion that prolonged intergroup racial contact enables durable reduction of implicit race bias.

Multisession laboratory interventions

Effects on implicit race biases of interventions extended across time have been reported in a small number of laboratory studies. The first of these (Devine et al., 2012) tested the effectiveness of bias-reduction training administered in six sessions over a 12-week period. This study reported “the first evidence that a controlled, randomized intervention can produce enduring reductions in implicit bias” (p. 1271). Disappointingly, the finding was not reproduced in a replication study from the same laboratory that tripled the initial study’s sample size (Forscher et al., 2017). The authors reported that the 2017 study’s multisession intervention produced effects on self-report measures, suggesting that the intervention had produced durable changes in “knowledge of and beliefs about race-related issues” (Forscher et al., 2017, p. 133).

In a two-session experiment, Stone et al. (2020) sought to reduce first-year medical students’ implicit negative stereotype of Hispanic Americans as medically noncompliant. Each of two workshop sessions incorporated multiple implicit-bias-reduction strategies, along with active-learning exercises relevant to medical settings. A posttest IAT was administered 3 to 7 days after the second workshop. On the IAT pretest, White medical students and those from non-Hispanic racial and ethnic minority groups significantly associated medical noncompliance with Hispanic American (relative to White American) ethnicity. In results comparing preworkshop and postworkshop measurements, White medical students showed a significant decrease in the implicit noncompliance stereotype of Hispanic patients, whereas Hispanic medical students and those from other racial/ethnic groups did not.

Interventions in field settings

Relatively few investigations of bias-reducing interventions have been conducted in settings that allow assessment of effectiveness beyond the laboratory.

A series of field experiments conducted by Dasgupta and colleagues was unusual in embedding interventions in everyday situations and in administering those interventions in person rather than via computer. These characteristics may explain why these studies, described in the next three paragraphs, found durable effects that have otherwise been extremely difficult to obtain.

In a study investigating possibilities for remedying the underrepresentation of women in professional leadership roles, Dasgupta and Asgari (2004) took advantage of the geographical proximity of two similarly sized liberal arts colleges, one an all-women’s college and the other a coeducational college. Their study of women’s implicit gender–leader stereotypes began when the women arrived on campus for their first year. Initially there were 82 women participants, 41 at each school. One year later, 63% of the sample was available for posttests, which revealed that the male = leader stereotype had been significantly weakened among women at the women’s college, an effect that was strongest for those with the greatest exposure to female faculty. At the coeducational college, where the women had greater exposure to male professors in their science, technology, engineering, and math (STEM) classes, the opposite effect was observed: The male = leader stereotype had strengthened.

A second longitudinal study by Dasgupta’s group examined whether contact with women professors of mathematics would change students’ implicit attitudes toward math and their implicit math self-concept (Stout et al., 2011). Women students whose calculus course was taught by a female (rather than a male) professor showed significantly more positive implicit attitudes toward mathematics and stronger implicit identification with the discipline (self–math association). Men’s responses were not affected by the professor’s gender. This difference remained apparent 3 months later, indicating that the effect was durable.

A third longitudinal field experiment from the same laboratory (Dennehy & Dasgupta, 2017) investigated the consequences of randomly assigning first-year female engineering majors to a mentor who was a male senior engineering student, a mentor who was a female senior engineering student, or no mentor. Explicit measures of belonging, self-efficacy, and threat revealed statistically significant benefits of having a female mentor, both during the first-year mentoring experience and 1 year after the mentoring had ended. IAT results were directionally consistent with these findings, reaching statistical significance in some cases and marginal significance in others.

Large-scale field experiments

Carnes et al. (2015) reported a study in which 2,290 faculty members from 92 medicine, science, or engineering departments at the University of Wisconsin participated. Faculty members in the 46 departments randomly assigned to the “gender-bias habit-reducing training workshop” condition received a 2.5-hr training session administered to their department as a unit. (Content of the workshop is described in detail on pp. 222–223 of Carnes et al., 2015.) These faculty members received a survey that assessed 13 outcome measures 2 days before, 3 days after, and 3 months after their department’s workshop. Each control department received the same surveys at the same times as its matched workshop-receiving department. The results showed no effect of the workshop on the IAT-measured gender–leader stereotype. However, significant benefits of the intervention (treatment vs. control comparisons) appeared on self-report measures of (a) confidence in personal ability to behave in a gender-equitable fashion and (b) awareness of personal gender bias. A follow-up report by Devine et al. (2017) compared the university’s rates of hiring women in the 2 years before the workshop with those in the 2 years after it. This analysis found “modest [p = .07] evidence that, whereas the proportion of women hired by control departments remained stable over time, the proportion of women hired by intervention departments increased” (p. 213).

Chang et al. (2019) reported results of a large field experiment at a multinational corporation. The corporation recruited 3,016 employees in 63 countries to complete an hour-long online diversity-training session in which “participants learned about the psychological processes that underlie stereotyping and research that shows how stereotyping can result in bias and inequity in the workplace, completed and received feedback on an Implicit Association Test assessing their associations between gender and career-oriented words, and learned about strategies to overcome stereotyping in the workplace” (p. 7779). Participants were randomly assigned to one of three conditions: gender-bias training, general-bias training, or a control training focused on psychological safety and active listening. Measures of training effectiveness included attitudes assessed in an end-of-training survey and unobtrusively measured workplace behaviors observed for several months after the training. The workplace behaviors included nominating fellow employees for service awards and volunteering to informally mentor junior employees over coffee.

Chang and coauthors sought to demonstrate improvements in attitudes and behaviors supporting gender equality among training recipients who, at baseline, at most weakly supported the advancement of women’s careers. They did not find the desired impact on behavior, although they did find some increase in self-reported attitudes favorable to gender equity. The only effect on behavior (with little accompanying effect on attitude) was found for women in the United States who initially were most supportive of women’s careers (Chang et al., 2019, p. 7781). The researchers concluded that stand-alone trainings such as the one they used are not likely to be “solutions for promoting equality in the workplace, particularly given their limited efficacy among those groups [especially men] whose behaviors policymakers are most eager to influence” (p. 7778).

Paluck et al. (2021, pp. 550–553) reviewed seven other large-sample field experiments that used novel and imaginative measures (although no implicit measures) to assess behavioral consequences of experimental interventions. Paluck et al. evaluated the designs and executions of these studies very favorably but characterized their takeaways, collectively, as “sobering” because “effects are often limited in size, scope, or duration” (p. 553). In particular, effect sizes were described as being “much smaller than those reported on average in the corresponding laboratory literature using theoretically similar interventions” (p. 553). On a more positive note, Paluck et al. observed that “the prejudice-reduction interventions often seem more successful at changing discriminatory behaviors than at reducing negative stereotypes or animus” (p. 553).

Effects of group-administered trainings

Widespread recognition of implicit bias as a contributor to discriminatory disparities has prompted the development of many commercially provided training programs that are offered to large organizations as ways to overcome undesired consequences of implicit (and other) biases. These offerings often have three components: (a) defining implicit bias as a source of unintended discrimination, (b) describing the pervasiveness of implicit biases, and (c) advocating remedial strategies. Educational components (a) and (b) often draw accurately on scientific understanding. However, the remedial component (c) is generally not an evidence-based procedure and is typically administered with no follow-up effort to document effectiveness.

Trainers’ advocacy of unsubstantiated remedies has the potential to create an unwarranted appearance that the organization receiving the training is operating in a bias-free fashion. Kaiser et al. (2013) concluded that an “illusory sense of fairness” can be created when a corporation offers a program identified as “diversity training” (p. 504). This illusion can encourage training recipients to “legitimize the status quo by becoming less sensitive to discrimination targeted at underrepresented groups and reacting more harshly toward underrepresented group members who claim discrimination” (p. 504).

The most efficient way to consider what is known about group-administered trainings is to draw on the conclusions of a few excellent scholarly reviews that have considered the impact of group-administered training either on behaviors that can affect equal opportunity among an organization’s personnel or on behaviors that can improve equal opportunity in hiring. We describe these important reviews, concluding each description with the authors’ interpretation of their findings.

Kalev et al. (2006) obtained data for 708 private U.S. companies sampled from a database maintained by the U.S. Equal Employment Opportunity Commission (EEOC). They identified three categories of diversity-oriented strategies rooted in “theories of how organizations achieve goals, how stereotyping shapes hiring and promotion, and how networks influence careers” (p. 589): (a) group-administered training, (b) networking with mentoring, and (c) establishing organizational responsibility. Data on employee diversity as it changed over the period covered by the study (1971–2002) were obtained from the companies’ required annual reports to the EEOC. Data on the companies’ use of seven strategies within these three categories over the same 32-year period were obtained from survey interviews with company human resources (HR) managers.

The seven types of diversity-improving practices evaluated by Kalev et al. (2006) were (a) affirmative action plans, (b) diversity committees and taskforces, (c) diversity managers, (d) diversity training, (e) diversity evaluations for managers, (f) networking programs, and (g) mentoring programs. Kalev et al.’s judgment about the effectiveness of the practices most relevant here, diversity training and diversity evaluations, is quoted from the Conclusion section of their article:

Practices that target managerial bias through feedback (diversity evaluations) and education (diversity training) show virtually no effect in the aggregate. They show modest positive effects when responsibility structures are also in place and among federal contractors. But they sometimes show negative effects otherwise. (Kalev et al., 2006, p. 611)

Kalev et al. (2006) concluded, “Efforts to moderate managerial bias through diversity training and diversity evaluations are least effective at increasing the share of White women, Black women, and Black men in management” (p. 589). They found that “establishing organizational responsibility” was the only category of methods that gave indications of effectiveness in increasing demographic diversity. A more recent review by Dobbin and Kalev (2013) gave a summary statement very similar to that in Kalev et al. (2006): “[We] find that diversity training (offered to all employees or to all managers) has little aggregate effect on workforce diversity” (p. 268).

Other review articles in organizational psychology journals (e.g., Leslie, 2019; Mor Barak et al., 2016; Nishii et al., 2018) have, like Kalev et al. (2006), found little clear evidence for the effectiveness of diversity-training programs. The conclusion of Nishii et al. (2018) is representative:

The pattern of results is filled with inconsistencies that severely limit our understanding of which diversity practices should be used, how they should be implemented, for what purpose, and to what effect. There is little theory that helps scholars and practitioners integrate disparate research results. (p. 38)

Paluck and Green (2009) reviewed 985 studies of prejudice reduction reported between 2003 and 2008 (28% of them unpublished). They sorted these into three major categories: observational (nonexperimental, 60%), laboratory experimental (29%), and field experimental (11%). They regarded the nonexperimental studies as important because “the vast majority of real-world interventions—in schools, businesses, communities, hospitals, police stations, and media markets—have been studied with nonexperimental methods” (p. 345). Nevertheless, Paluck and Green concluded that the body of nearly 600 nonexperimental studies “cannot answer the question of ‘what works’ to reduce prejudice in these real-world settings,” largely because only about a third of those studies were quasi-experimental (i.e., included comparison groups but lacked random assignment), and of those 207 studies, only 12 afforded any basis for drawing conclusions about causal impact or its absence (p. 345).

Paluck and Green (2009) were similarly ambivalent about the laboratory experimental studies included in their review. They concluded that “those interested in creating effective prejudice-reduction programs must remain skeptical of the recommendations of laboratory experiments until they are supported by research of the same degree of rigor outside of the laboratory” (p. 351). Field experimental studies received the most favorable comment (“the field experimental literature on prejudice reduction suggests some tentative conclusions and promising avenues for reducing prejudice”; p. 356). Even so, their assessment of the field experimental studies remained equivocal:

The strongest conclusion to be drawn from the field experimental literature . . . concerns the dearth of evidence for most prejudice-reduction programs. Few [of these] programs . . . have been evaluated rigorously. . . . Entire genres of prejudice-reduction interventions, including moral education, organizational diversity training [emphasis added], advertising, and cultural competence in the health and law enforcement professions, have never been [rigorously] tested. (p. 356)