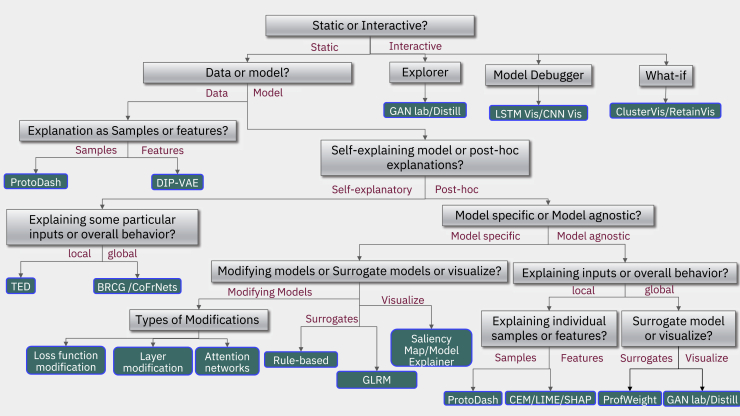

Figure 1. Taxonomy tree for explainability in AI models

To help identify the most appropriate explanation method, we propose a taxonomy of questions represented as a decision tree. The green leaf nodes represent algorithms that are in the current release of AI Explainability 360. When the goal is to understand the data, the choices depend on how the data are represented: if understanding is based on features, theory can yield disentangled representations, as in Disentangled Inferred Prior Variational AutoEncoders (DIP-VAEs) [32]; otherwise, a sample-based approach using ProtoDash [33] is possible, which provides a way to do case-based reasoning. If the goal is to explain models instead of data, the next question is whether a local explanation for individual samples or a global explanation for the entire model is needed. Following the local path, the next question is whether the method should be post hoc or self-explaining. On the self-explaining branch, TED (teaching explanations for decision making) [34] is one option; a global method, such as BRCG (Boolean rule sets with column generation) [35], is another. On the model-agnostic post hoc branch, the choice between explaining in terms of samples or features comes up again. On the sample side, prototypes are again an option, whereas on the feature side the contrastive explanations method (CEM) [36] and popular algorithms such as LIME [37] and SHAP [21] are available. Finally, on the post hoc global side, surrogate models, such as ProfWeight, are available. On the model-specific branch, one has to choose among modifying models, surrogate models, or simply visualizations. Going back up to global explanations for the entire model, the question again is whether a post hoc method or a directly interpretable model is needed. A directly interpretable model, such as a Boolean rule set learned with BRCG or a generalized linear rule model (GLRM) [38], can yield the answer.
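The walk through the tree can be sketched as a small lookup function. This is only an illustrative sketch, not part of AI Explainability 360: the function name `suggest_methods`, its parameters, and the answer strings are hypothetical. The placement of each algorithm follows one reading of the description above, assuming that TED sits on the local self-explaining branch and that BRCG and GLRM are treated as directly interpretable global models.

```python
from typing import List, Optional


def suggest_methods(
    target: str,                     # "data" or "model"
    scope: Optional[str] = None,     # "local" or "global" (models only)
    approach: Optional[str] = None,  # "self-explaining", "post-hoc", or "direct"
    basis: Optional[str] = None,     # "features" or "samples"
) -> List[str]:
    """Return candidate algorithm names for one path through the tree.

    Hypothetical helper: the questions and leaves mirror the figure
    description, but the encoding itself is an assumption.
    """
    if target == "data":
        # Data understanding: feature-based vs. sample-based.
        if basis == "features":
            return ["DIP-VAE"]
        if basis == "samples":
            return ["ProtoDash"]
    elif target == "model":
        if scope == "local":
            if approach == "self-explaining":
                return ["TED"]
            if approach == "post-hoc":
                # Post hoc local explanations: samples vs. features.
                if basis == "samples":
                    return ["ProtoDash"]
                if basis == "features":
                    return ["CEM", "LIME", "SHAP"]
        elif scope == "global":
            if approach == "direct":
                # Directly interpretable models.
                return ["BRCG", "GLRM"]
            if approach == "post-hoc":
                # Surrogate models.
                return ["ProfWeight"]
    raise ValueError(
        f"No leaf for target={target!r}, scope={scope!r}, "
        f"approach={approach!r}, basis={basis!r}"
    )


if __name__ == "__main__":
    # Example: a feature-based, post hoc explanation of a single prediction.
    print(suggest_methods("model", scope="local",
                          approach="post-hoc", basis="features"))
```

Encoding the tree as nested conditionals keeps each question visible in the order the figure asks it; a path that does not end in a leaf raises an error instead of returning a guess.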