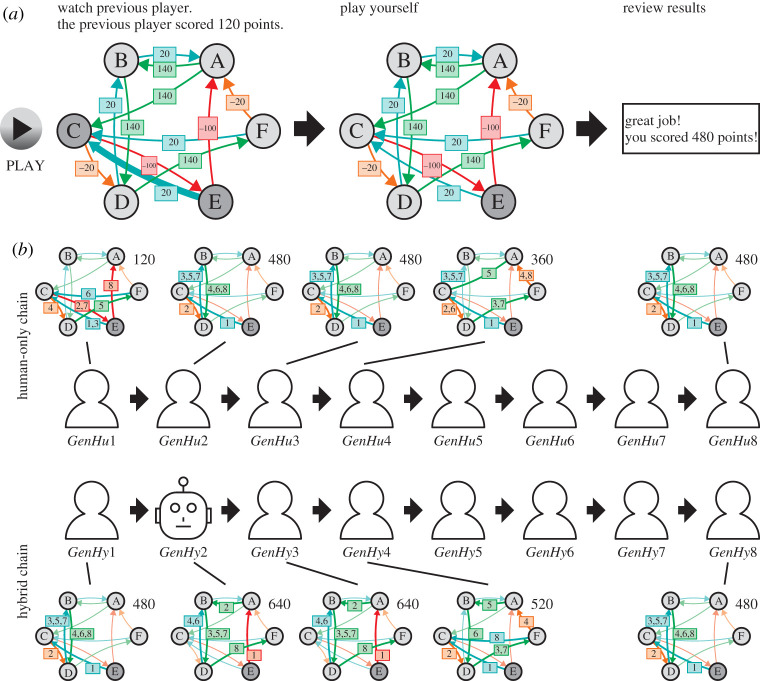

Figure 1.

(a) In the first stage of the task, participants saw an animation of the solution entered by the previous player (left-hand side); the snapshot shows the transition from node E to node C. In the second stage, participants entered a path by clicking on nodes in sequence (centre). The node with a grey background marks the node the participant is currently on. In the last stage, the total score of the player’s sequence is revealed (right-hand side). The network shown here is classified as human-regretful. (b) For each environment class, we constructed two chains of eight generations of players. In hybrid chains, the second-generation player was replaced by an algorithm. The networks depict the solutions of the first four generations and of the last generation for one selected environment (the one shown in (a)). The integer on each arrow denotes the step at which the player made that move, and the cumulative reward is shown in the upper right corner of each graphic. In this example, the cumulative reward in the human-only chain increases at first but quickly reaches a plateau. In the hybrid chain, the algorithm achieves a higher cumulative reward than observed in the human-only chain, but this improvement is lost over subsequent human generations. (Online version in colour.)
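The scoring and chain layout described in this caption can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: each move between two connected nodes is assumed to carry a fixed reward, and a player's total score is assumed to be the sum of the rewards of its moves. The node labels, reward values and the helper names `cumulative_reward` and `chain_roles` are hypothetical and not taken from the study.

```python
from typing import Dict, List, Tuple

# Hypothetical edge type: (from_node, to_node)
Edge = Tuple[str, str]


def cumulative_reward(path: List[str], rewards: Dict[Edge, int]) -> int:
    """Sum the (assumed) rewards of consecutive moves along a path of node labels."""
    total = 0
    # Step numbers start at 1, matching the integers drawn on the arrows.
    for step, (src, dst) in enumerate(zip(path, path[1:]), start=1):
        if (src, dst) not in rewards:
            raise ValueError(f"step {step}: no edge from {src} to {dst}")
        total += rewards[(src, dst)]
    return total


def chain_roles(n_generations: int = 8, hybrid: bool = False) -> List[str]:
    """List who fills each generation; in a hybrid chain generation 2 is an algorithm."""
    return [
        "algorithm" if hybrid and g == 2 else "human"
        for g in range(1, n_generations + 1)
    ]


# Illustrative reward values only; they are not the study's networks.
rewards = {("A", "E"): -20, ("E", "C"): 140, ("C", "B"): 20, ("B", "D"): -100}
print(cumulative_reward(["A", "E", "C", "B", "D"], rewards))  # 40
print(chain_roles(hybrid=True))
```

Keeping the chain as a plain list of roles makes the only difference between the two chain types explicit: the hybrid chain differs from the human-only chain solely in who occupies the second generation.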