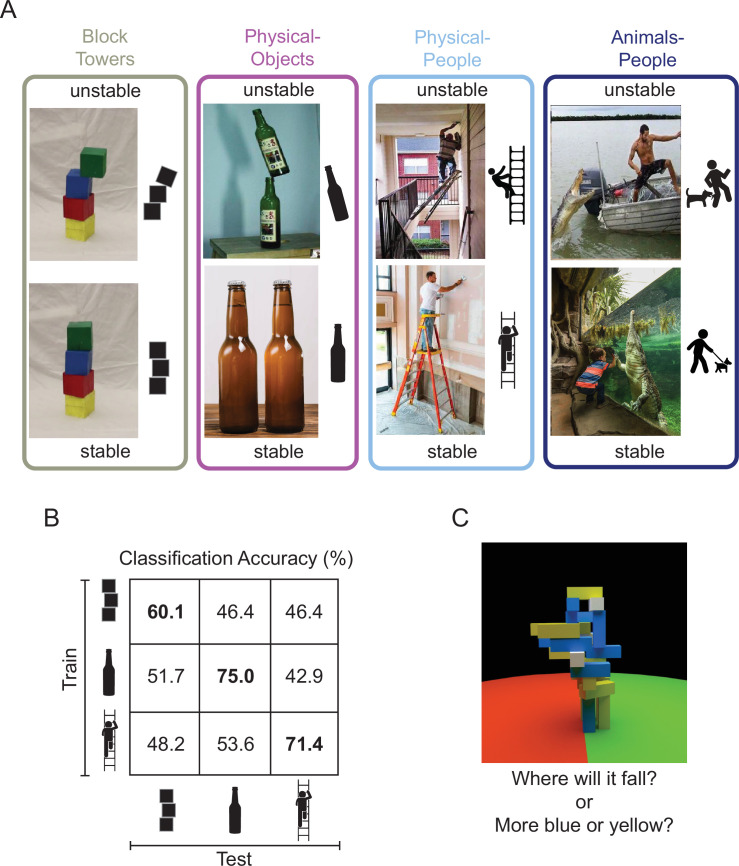

Figure 1. Design of studies conducted on machines and brains.

(A) Image sets depicting (1) unstable versus stable block towers (left), (2) objects in unstable versus stable configurations (middle left, the Physical-Objects set), (3) people in physically unstable versus stable situations (middle right, the Physical-People set), and (4) people in perilous (‘unstable’) versus relatively safe (‘stable’) situations with respect to animals (right, the Animals-People set). Note that by ‘physically unstable’ we refer to situations that are currently unchanging, but that would likely change dramatically after a small perturbation like a nudge or a puff of wind. The first three scenarios (1–3) were used to study the generalizability of feature representations in a deep convolutional neural network (CNN), and the last three scenarios (2–4) were used in the human fMRI experiment. (B) Accuracy of a linear support vector machine (SVM) classifier trained to distinguish between CNN feature patterns for unstable versus stable conditions, as a function of Test Set. Accuracy within a scenario (i.e., train and test on the same scenario) was computed using a fourfold cross-validation procedure. (C) A functional localizer task contrasted physical versus nonphysical judgments on visually identical movie stimuli (adapted from Figure 2A of Fischer et al., 2016). During the localizer task, subjects viewed movies of block towers from a camera viewpoint that panned 360° around the tower, and answered either ‘where will it fall?’ (physical) or ‘does the tower have more blue or yellow blocks?’ (nonphysical).
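The classification analysis in panel B can be illustrated with a minimal sketch. This is not the authors' code: it assumes scikit-learn is available, substitutes randomly generated vectors for the CNN feature patterns, and uses hypothetical names (`make_scenario`, `accuracy`, `scenarios`). Evaluating every train/test pairing is also an assumption; the caption specifies only that within-scenario accuracy uses fourfold cross-validation.

```python
import numpy as np
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.svm import SVC

rng = np.random.default_rng(0)


def make_scenario(n_per_class=40, n_features=50, shift=3.0):
    """Synthetic stand-in for CNN feature patterns (hypothetical data)."""
    stable = rng.normal(0.0, 1.0, (n_per_class, n_features))
    unstable = rng.normal(0.0, 1.0, (n_per_class, n_features))
    # Assumption: the stability signal lives on a few feature dimensions
    # that are shared across scenarios.
    unstable[:, :5] += shift
    X = np.vstack([stable, unstable])
    y = np.array([0] * n_per_class + [1] * n_per_class)  # 0 = stable, 1 = unstable
    return X, y


# The three scenarios used in the CNN analysis, filled with synthetic features.
scenarios = {
    name: make_scenario()
    for name in ("Blocks", "Physical-Objects", "Physical-People")
}


def accuracy(train_name, test_name):
    """Linear-SVM accuracy for one train/test pairing of scenarios."""
    X_train, y_train = scenarios[train_name]
    clf = SVC(kernel="linear")
    if train_name == test_name:
        # Within a scenario: fourfold cross-validation, as in the caption.
        cv = StratifiedKFold(n_splits=4, shuffle=True, random_state=0)
        return float(cross_val_score(clf, X_train, y_train, cv=cv).mean())
    # Across scenarios: fit on one scenario, score on another.
    X_test, y_test = scenarios[test_name]
    return float(clf.fit(X_train, y_train).score(X_test, y_test))


results = {(tr, te): accuracy(tr, te) for tr in scenarios for te in scenarios}
for (tr, te), acc in results.items():
    print(f"train={tr:17s} test={te:17s} accuracy={acc:.2f}")
```

With real data, each `make_scenario` call would be replaced by the feature patterns extracted from the network for that image set. Above-chance accuracy on a scenario that was not used for training would indicate that the features distinguishing stable from unstable images generalize.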