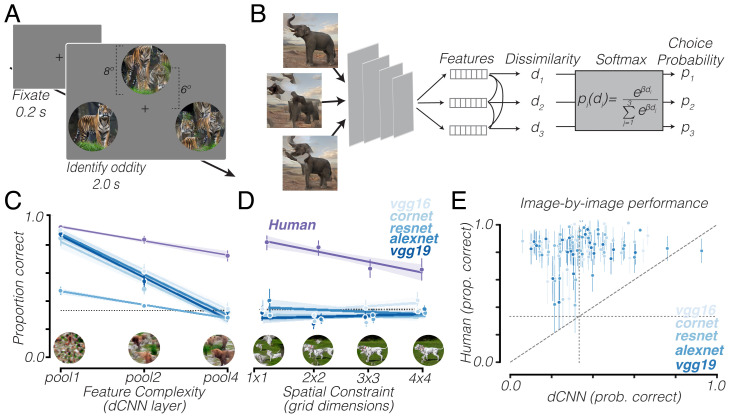

Fig. 2.

Human perception of objects is sensitive to feature complexity and spatial arrangement, while dCNN observer models are insensitive to spatial arrangement. (A) Oddity detection task. Subjects saw three images, one natural and two synths, and chose the odd one out by key press. (B) Schematic of the dCNN observer model fit to the oddity detection task. Features are activations for each image extracted from the last convolutional layer of a dCNN. The Pearson distances between the images’ feature vectors are computed (d1 to d3) and converted into choice probabilities (p1 to p3) using a softmax function with free parameter β, which controls how sensitive the model is to feature dissimilarity. (C) The dCNN performance (blue) compared to human performance (purple) as a function of synths’ feature complexity, averaged across all observers, images, and spatial constraint levels. Performance indicates the proportion of trials in which the natural image was chosen as the oddity. Example synths from each feature complexity level are shown at the bottom. (D) The dCNN performance (blue) compared to human performance (purple) as a function of synths’ spatial constraints, across all observers and images for pool4 feature complexity. Example synths from each spatial constraint level are shown at the bottom. (E) The dCNN performance vs. human performance, image by image, for the 1 × 1 pool4 condition. Vertical and horizontal dotted lines in C–E represent chance level. The diagonal dashed line in E is the line of equality. Error bars in C–E indicate bootstrapped 95% CIs across trials. prob., probability; prop., proportion.
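
The observer model in panel B can be sketched roughly as follows. This is a minimal illustration, not the authors' implementation: the function name `oddity_choice_probs`, the use of 1 minus the Pearson correlation as the Pearson distance, and the choice to score each image by its mean distance to the other two are all assumptions made for the example. The caption specifies only that distances d1 to d3 are passed through a softmax with free parameter β.

```python
import numpy as np


def oddity_choice_probs(features, beta):
    """Return the probability of choosing each image as the odd one out.

    features: array of shape (n_images, n_features), one row per image
              (e.g. flattened activations from a dCNN layer).
    beta:     softmax sensitivity; 0 gives uniform (chance) choices.
    """
    features = np.asarray(features, dtype=float)
    n_images = features.shape[0]

    # Pairwise Pearson distance, taken here as 1 - Pearson correlation.
    dist = 1.0 - np.corrcoef(features)

    # ASSUMPTION: each image's dissimilarity d_i is its mean distance
    # to the other images (the diagonal contributes approximately zero).
    d = dist.sum(axis=1) / (n_images - 1)

    # Softmax over beta * d, shifted by the max for numerical stability.
    z = beta * d
    z = z - z.max()
    p = np.exp(z)
    return p / p.sum()


# Toy example with synthetic vectors standing in for dCNN features:
# image 0 plays the "natural" image, images 1 and 2 play two similar "synths".
rng = np.random.default_rng(0)
base = rng.normal(size=512)
natural = rng.normal(size=512)
synth_a = base + 0.1 * rng.normal(size=512)
synth_b = base + 0.1 * rng.normal(size=512)

probs = oddity_choice_probs([natural, synth_a, synth_b], beta=10.0)
chance = oddity_choice_probs([natural, synth_a, synth_b], beta=0.0)
```

In this toy case the two synth vectors are highly correlated with each other and nearly uncorrelated with the natural vector, so the natural image receives the highest choice probability. Setting β to 0 flattens the probabilities to chance (1/3 each), which is the sense in which β controls sensitivity to feature dissimilarity.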