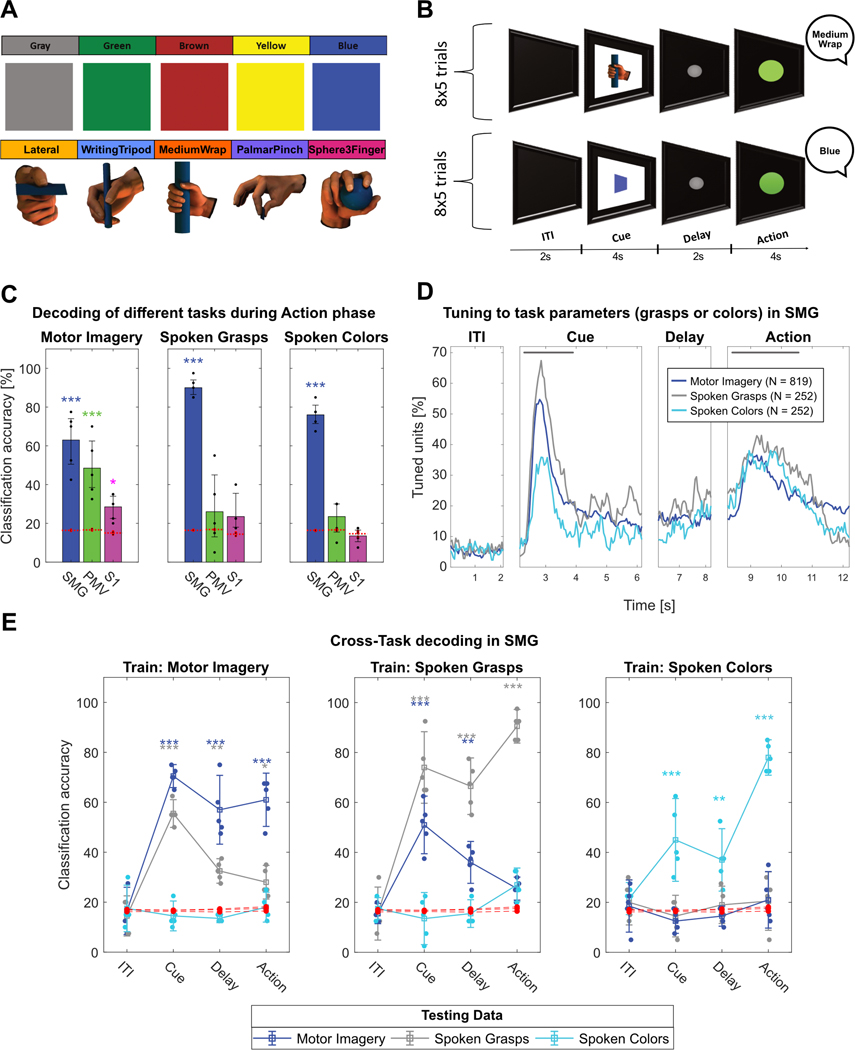

Figure 4. SMG encodes speech.

A) Grasp images cued the “Motor Imagery” and “Spoken Grasps” tasks; colored squares cued the “Spoken Colors” task. B) Each trial consisted of an inter-trial interval (ITI), a cue phase displaying the image of one of the grasps or colored squares, a delay phase, and an action phase. During the action phase, the participant vocalized the name of the cued grasp or color once. C) For all tasks, classification was performed separately for each session day using leave-one-out cross-validation (black dots), with PCA used for feature selection. 95% confidence intervals (c.i.s) of the session mean were computed. Significance was assessed by comparing the results on the actual data to a shuffle distribution (averaged shuffle results = red dots; * = p < 0.05, ** = p < 0.01, *** = p < 0.001). SMG, PMv, and S1 showed significant classification of motor imagery. Only SMG data significantly classified spoken grasps and spoken colors. D) Percentage of SMG units tuned to grasps or colors, computed in 50 ms time bins for each task. The gray lines mark the cue and action analysis windows used in Figure S2A,B. E) Cross-task classification was performed by training a classification model on one task (e.g., Motor Imagery) and evaluating it on all three tasks, separately for each phase. Confidence intervals and significance were computed as described in Figure 4C. During the cue phase, generalization was observed between the tasks that used the same image cue (“Motor Imagery” and “Spoken Grasps”). During the action phase, generalization was weak (*) or absent.
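The decoding steps in panels C and E (leave-one-out cross-validation with PCA feature selection, a shuffle test for significance, and cross-task generalization) can be sketched as below. This is a minimal NumPy sketch under stated assumptions, not the authors' implementation: the caption does not name the classifier, so a nearest-centroid classifier stands in for it; the number of PCA components, the shuffle count, and all function names (`pca_fit`, `loocv_accuracy`, `shuffle_p_value`, `cross_task_accuracy`) are hypothetical; and the usage lines run on synthetic data, not recordings. PCA is refit inside every fold so the held-out trial never influences feature selection.

```python
import numpy as np


def pca_fit(X, n_components):
    """Fit PCA on training trials only; returns (mean, projection matrix)."""
    mu = X.mean(axis=0)
    # Right singular vectors of the centered data are the principal axes.
    _, _, vt = np.linalg.svd(X - mu, full_matrices=False)
    return mu, vt[:n_components].T


def nearest_centroid_predict(X_train, y_train, X_test):
    """Assumed classifier (hypothetical stand-in): pick the closest class mean."""
    classes = np.unique(y_train)
    centroids = np.stack([X_train[y_train == c].mean(axis=0) for c in classes])
    dists = ((X_test[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    return classes[dists.argmin(axis=1)]


def loocv_accuracy(X, y, n_components=5):
    """Leave-one-out CV; PCA is refit in each fold to avoid information leakage."""
    n = len(y)
    correct = 0
    for i in range(n):
        train = np.arange(n) != i
        mu, W = pca_fit(X[train], n_components)
        Z_train = (X[train] - mu) @ W
        z_test = (X[i:i + 1] - mu) @ W
        correct += int(nearest_centroid_predict(Z_train, y[train], z_test)[0] == y[i])
    return correct / n


def shuffle_p_value(X, y, n_shuffles=100, n_components=5, seed=0):
    """Compare actual accuracy with a null built by permuting trial labels."""
    rng = np.random.default_rng(seed)
    actual = loocv_accuracy(X, y, n_components)
    null = np.array([loocv_accuracy(X, rng.permutation(y), n_components)
                     for _ in range(n_shuffles)])
    # +1 correction keeps the p-value strictly above zero.
    p = (np.sum(null >= actual) + 1) / (n_shuffles + 1)
    return actual, null.mean(), p


def cross_task_accuracy(X_a, y_a, X_b, y_b, n_components=5):
    """Train on task A, test on task B. Assumes both tasks share label codes
    (e.g., a hypothetical one-to-one grasp-to-color mapping)."""
    mu, W = pca_fit(X_a, n_components)
    pred = nearest_centroid_predict((X_a - mu) @ W, y_a, (X_b - mu) @ W)
    return float(np.mean(pred == y_b))


# Usage on synthetic data: 5 classes x 8 trials x 40 units.
rng = np.random.default_rng(1)
y = np.repeat(np.arange(5), 8)
class_means = rng.normal(0, 1, size=(5, 40))
X = class_means[y] + rng.normal(0, 0.5, size=(len(y), 40))
X_other_task = class_means[y] + rng.normal(0, 0.5, size=(len(y), 40))

acc, null_mean, p = shuffle_p_value(X, y)
gen_acc = cross_task_accuracy(X, y, X_other_task, y)
```

Because the synthetic "other task" shares the same class means, `gen_acc` illustrates generalization; a task with unrelated class structure would drop toward chance, mirroring the weak or absent generalization in the action phase.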