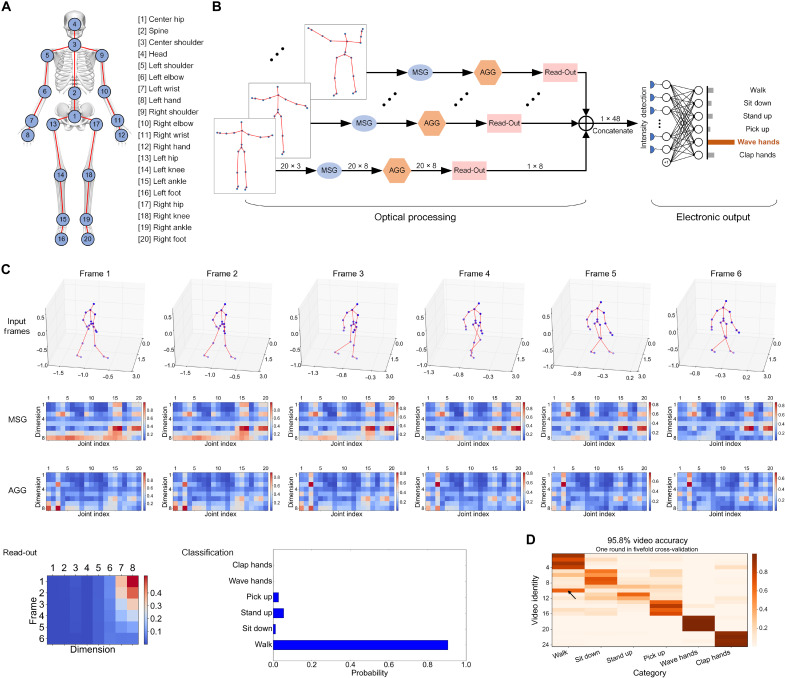

Fig. 4. Graph classification of the DGNN on the task of action recognition.

(A) Graph structure of skeleton data captured by Kinect V1. (B) Schematic of the DGNN architecture for skeleton-based human action recognition. (C) Visualization of the results for a selected subsequence from the test set belonging to the action category "walk". The normalized amplitude of each frame after optical MSG(·) and AGG(·), the L2-normalized intensity values after optical Read-Out(·), and the classification result are shown. (D) Inference results for all test subsequences in one round of fivefold cross-validation. The arrow marks the only misclassified video in the database.