SUMMARY

We evaluated laboratory reports as early indicators of West Nile virus (WNV) disease cases in Texas. We compared WNV laboratory results in the National Electronic Disease Surveillance System Base System (NBS) to WNV disease cases reported to the state health department from 2008 to 2012. We calculated sensitivity and positive predictive value (PPV) of NBS reports, estimated the number of disease cases expected per laboratory report, and determined lead and lag times. The sensitivity and PPV of NBS laboratory reports were 86% and 77%, respectively. For every 10 positive laboratory reports, we expect 9·0 (95% confidence interval 8·9–9·2) reported disease cases. Laboratory reports preceded case reports with a lead time of 7 days. Electronic laboratory reports provided longer lead times than manually entered reports (P < 0·01). NBS laboratory reports are useful estimates of future reported WNV disease cases and may provide timely information for planning public health interventions.

Key words: West Nile virus, public health surveillance, epidemiology, laboratory diagnoses

INTRODUCTION

West Nile virus (WNV) is a mosquito-borne virus that causes annual seasonal outbreaks in the USA which peak during the summer months but vary in size and location from year to year [1, 2]. A large multistate outbreak occurred in 2012, with more cases reported nationally than in any year since 2003; one-third of all cases reported in 2012 were from Texas [3].

In Texas, positive WNV laboratory results are reported to the Department of State Health Services (DSHS) by participating laboratories through an electronic laboratory reporting system, the National Electronic Disease Surveillance System Base System (NBS). Detection of anti-WNV immunoglobulin (Ig)M antibodies in serum or cerebrospinal fluid is the most common way to diagnose WNV infection [4, 5]. The presence of anti-WNV IgM antibodies usually indicates recent infection, while the presence of IgG antibodies alone typically indicates past infection. The detection of WNV RNA indicates recent infection, but it is rarely identified in immunocompetent patients with clinical illness [6, 7]. Immunohistochemical staining can be used to detect WNV antigens in fatal cases [8].

During the 2012 WNV outbreak, Texas DSHS needed timely information on WNV disease cases to respond to public inquiries, inform stakeholders, and plan public health interventions. The objectives of our analysis were to evaluate if WNV laboratory reports in NBS accurately predict subsequent WNV disease cases, determine the sensitivity and positive predictive value (PPV) of WNV laboratory reports for WNV disease cases, and assess if laboratory reports provide more timely information about trends in disease cases than the traditional case-reporting mechanism in Texas.

METHODS

Case definitions

We defined an NBS suspect case as a person with evidence of recent WNV infection based on a laboratory result reported to Texas NBS. Evidence of recent WNV infection included a positive WNV IgM antibody, RNA, or viral antigen test result. A WNV disease case was defined as a Texas resident with a clinically compatible illness and laboratory evidence of recent WNV infection according to the national surveillance case definition [9]. A matched case was defined as any person who was an NBS suspect case and was also reported to Texas DSHS as a WNV disease case.

Case-finding and data extraction

WNV disease is a nationally notifiable condition and Texas laboratories are required to report positive test results to the state or local health department. Texas NBS is used by the health departments to receive electronic laboratory results for WNV and other notifiable conditions. Positive laboratory results are uploaded into NBS using electronic messages received from participating laboratories where the testing is performed. Negative test results are sometimes entered into NBS if they are linked to positive results on the same specimen (e.g. negative IgM and positive IgG antibody results on the same specimen might both be included and reported as two results). For laboratories that do not use electronic reporting, positive laboratory results are sent to the local health department. These results can be entered manually into NBS for local tracking, although this is not required to report a case. Healthcare providers also can report suspect cases to their local health department. After receiving a positive laboratory result through NBS or other means, the local health department conducts a case investigation to collect demographic and clinical information from interviews with the patient and medical providers. Patients meeting the national surveillance case definition are reported as disease cases [9]. All local and regional health departments in Texas report WNV disease cases to DSHS through a paper-based system. Although the state health department can use NBS to report nationally notifiable conditions to the Centers for Disease Control and Prevention (CDC), through 2012, DSHS reported WNV disease cases to CDC using ArboNET, the national arboviral surveillance system [10–12].

Since 2005, Texas DSHS has managed WNV laboratory results through NBS. In 2005 and 2006, all laboratory reports were entered manually. Electronic laboratory reporting in Texas was piloted in several laboratories in 2007 and two high-volume laboratories that provide WNV testing were added beginning in 2008. We limited our analysis to 2008–2012 to include years when laboratory results were routinely reported to NBS electronically.

WNV laboratory results were extracted from NBS using a query of 28 logical observation identifiers names and codes (LOINC) in the resulted and ordered test fields that represented tests for WNV IgM antibody, RNA, or antigen. To identify patients with evidence of recent WNV infection, we filtered the reports to exclude: (1) negative test results for any test type; (2) positive results for test types that do not meet the national surveillance case definition (e.g. WNV IgG and neutralizing antibody tests alone); and (3) subsequent reports when multiple positive laboratory reports were available for the same patient identification number (e.g. one cerebrospinal fluid and one serum, or two sera). Each person for whom a report remained after this filtering process was classified as an NBS suspect case. Variables obtained from NBS included: (1) patient's name, sex, date of birth, and contact address; (2) specimen collection date; (3) test type and results; (4) date the results were reported to NBS; and (5) whether the results were reported to NBS electronically or manually.
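The three exclusion steps above can be sketched in Python. This is a minimal illustration and not the query used in the study: the `LabReport` record, its field names, and the test-type labels are hypothetical, and we assume that the report kept for each patient is the one with the earliest NBS report date.

```python
from dataclasses import dataclass
from datetime import date

# Hypothetical labels; the study identified test types from LOINC codes.
RECENT_INFECTION_TESTS = {"IgM", "RNA", "antigen"}


@dataclass(frozen=True)
class LabReport:
    patient_id: str
    test_type: str   # e.g. "IgM", "IgG", "RNA", "antigen"
    positive: bool
    reported: date   # date the result was reported to NBS


def filter_suspect_cases(reports):
    """Return one report per patient: the earliest positive result
    for a test type that indicates recent infection."""
    first_by_patient = {}
    for r in sorted(reports, key=lambda rep: rep.reported):
        if not r.positive:
            continue  # (1) exclude negative results
        if r.test_type not in RECENT_INFECTION_TESTS:
            continue  # (2) exclude IgG and other non-qualifying test types
        # (3) keep only the first qualifying report for each patient
        first_by_patient.setdefault(r.patient_id, r)
    return list(first_by_patient.values())
```

Each report returned by `filter_suspect_cases` would correspond to one NBS suspect case under these assumptions.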

We obtained data for all WNV disease cases reported to Texas DSHS from 2008 to 2012 from the statewide database used for reporting to ArboNET. Variables obtained on WNV disease cases included (1) patient's name, sex, date of birth, and county of residence; (2) illness onset date; (3) clinical syndrome; (4) outcome; and (5) date the case was reported to Texas DSHS.

Data analysis

Matching was performed to determine the overlap between NBS suspect cases and WNV disease cases reported to Texas DSHS. Because no identification numbers were shared between NBS and the WNV disease case database, we used the SPEDIS function in SAS 9.2 (SAS Institute Inc., USA) to match records based on patients' first and last names and birthdates. A case was considered to match if there was (1) a complete match by first and last name or (2) a match by either first or last name and an exact match by birthdate. Less than 1% of NBS suspect cases were missing the patient's first name, last name, or date of birth.
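The matching rule can be illustrated with a short Python sketch. It is not the SAS code used in the analysis: `difflib.SequenceMatcher` stands in for the SPEDIS spelling-distance function, the 0.85 similarity cut-off is an assumed value (no threshold is reported here), and the dictionary keys `first`, `last`, and `dob` are hypothetical.

```python
from datetime import date
from difflib import SequenceMatcher

SIMILARITY_THRESHOLD = 0.85  # assumed cut-off, not taken from the study


def names_match(a: str, b: str) -> bool:
    """Approximate name comparison (a stand-in for SAS SPEDIS)."""
    a, b = a.strip().lower(), b.strip().lower()
    if not a or not b:
        return False  # missing names never match
    return SequenceMatcher(None, a, b).ratio() >= SIMILARITY_THRESHOLD


def is_matched_case(lab: dict, case: dict) -> bool:
    """Apply the two matching rules to one NBS record and one case record."""
    first = names_match(lab["first"], case["first"])
    last = names_match(lab["last"], case["last"])
    same_birthdate = lab["dob"] is not None and lab["dob"] == case["dob"]
    # Rule 1: both names match. Rule 2: one name matches and birthdate is exact.
    return (first and last) or ((first or last) and same_birthdate)
```

A looser threshold would raise the number of matched cases at the cost of more false matches, so any threshold of this kind would need to be checked against manually reviewed pairs.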

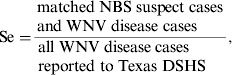

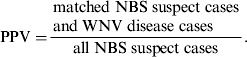

To evaluate the association between NBS suspect cases and WNV disease cases, we calculated sensitivity (Se) and PPV using the following equations:

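$$\mathrm{Se} = \frac{\text{number of matched cases}}{\text{number of WNV disease cases reported to Texas DSHS}}$$

$$\mathrm{PPV} = \frac{\text{number of matched cases}}{\text{number of NBS suspect cases}}$$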

We used the ratio of the number of WNV disease cases to the number of NBS suspect cases to estimate the number of disease cases expected for each NBS suspect case. To assess the impact of filtering results on the sensitivity and PPV, we also determined the sensitivity and PPV of the unfiltered NBS laboratory reports, which include all WNV test types, negative results, and multiple reports from individual patients.
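As a worked illustration of these measures, the following Python sketch applies them to the 2008–2012 totals reported in Table 1. The function names are ours and are used only for illustration; the confidence interval calculation is not reproduced because its method is not described here.

```python
def sensitivity(matched: int, disease_cases: int) -> float:
    """Matched cases divided by all WNV disease cases reported to Texas DSHS."""
    return matched / disease_cases


def ppv(matched: int, suspect_cases: int) -> float:
    """Matched cases divided by all NBS suspect cases."""
    return matched / suspect_cases


def expected_cases_per_10_reports(disease_cases: int, suspect_cases: int) -> float:
    """Disease cases expected for every 10 NBS suspect cases."""
    return 10 * disease_cases / suspect_cases


# Totals for 2008-2012 as reported in Table 1
matched, disease, suspect = 1848, 2161, 2389

print(f"Se  = {sensitivity(matched, disease):.0%}")   # Se  = 86%
print(f"PPV = {ppv(matched, suspect):.0%}")           # PPV = 77%
print(f"Expected cases per 10 reports = "
      f"{expected_cases_per_10_reports(disease, suspect):.1f}")  # 9.0
```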

To evaluate the timing of reporting events, we assessed matched cases with available reporting dates. We defined the lead time as the difference between the date an NBS suspect case was entered into NBS and the date it was reported as a WNV disease case. We defined the lag time as the difference between the date of a WNV disease case symptom onset and the date an NBS suspect case was entered into NBS.
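These two intervals can be written as simple date differences. The sketch below is illustrative only: the function names and example dates are hypothetical, and we assume a sign convention in which the lead time is positive when the laboratory result is entered into NBS before the case report.

```python
from datetime import date


def lead_time_days(nbs_entry: date, case_report: date) -> int:
    """Days from NBS entry to the case report (positive if the lab report came first)."""
    return (case_report - nbs_entry).days


def lag_time_days(symptom_onset: date, nbs_entry: date) -> int:
    """Days from symptom onset to NBS entry."""
    return (nbs_entry - symptom_onset).days


# Hypothetical patient: onset 1 Aug, NBS entry 15 Aug, case report 22 Aug
onset, entered, reported = date(2012, 8, 1), date(2012, 8, 15), date(2012, 8, 22)
print(lag_time_days(onset, entered))      # 14
print(lead_time_days(entered, reported))  # 7
```

Under this convention, a laboratory result entered after the case report yields a negative lead time.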

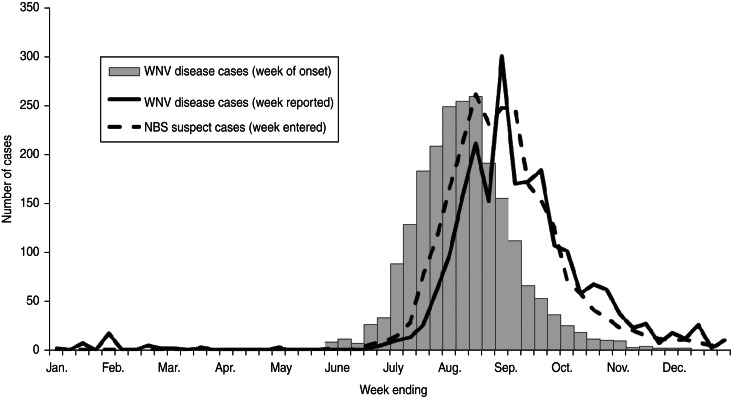

We compared the distributions of WNV disease cases and NBS suspect cases by graphing NBS suspect cases by date reported and WNV disease cases by date of illness onset and date of report to Texas DSHS. Dates were plotted by week because of marked fluctuations in daily data, especially for electronic laboratory reports, which reflect the days on which laboratories perform tests and report results.
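Weekly aggregation of this kind can be sketched as follows. The week definition used for the figures is not stated here, so ISO 8601 weeks (Monday to Sunday) are an assumption, and `weekly_counts` is a hypothetical helper.

```python
from collections import Counter
from datetime import date


def weekly_counts(dates):
    """Count events per (ISO year, ISO week), sorted chronologically."""
    counts = Counter()
    for d in dates:
        iso_year, iso_week, _ = d.isocalendar()
        counts[(iso_year, iso_week)] += 1
    return dict(sorted(counts.items()))


# Hypothetical report dates: three in one week, one in the next
example = [date(2012, 8, 6), date(2012, 8, 8), date(2012, 8, 12), date(2012, 8, 13)]
print(weekly_counts(example))
```

Applying the same function to report dates and onset dates gives series that can be plotted on a common weekly axis.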

For laboratory report lead and lag times, we determined the median and interquartile range (IQR). The Wilcoxon rank sum test was used to compare lead and lag times for electronic vs. manual laboratory reports and lag times for 2012 vs. other years. Linear regression was used to examine trends in lead time by year and the number of cases per year; logistic regression was used to examine trends in electronic reporting over time; and the Cochran–Armitage trend test was used to examine trends in sensitivity and PPV over time. All analyses were performed using SAS 9.2 and a two-sided P < 0·05 was used to determine statistical significance.
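The descriptive summary can be illustrated with the Python standard library. This is a sketch, not the SAS procedure used in the analysis: quartile definitions differ between software packages, so the `inclusive` method chosen here is an assumption and may give slightly different quartiles from SAS for small samples.

```python
from statistics import median, quantiles


def median_iqr(values):
    """Return the median and the (first quartile, third quartile) pair."""
    q1, _, q3 = quantiles(values, n=4, method="inclusive")
    return median(values), (q1, q3)


# Hypothetical lead times in days
print(median_iqr([1, 2, 3, 4, 5, 6, 7]))  # (4, (2.5, 5.5))
```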

RESULTS

Case identification and characterization

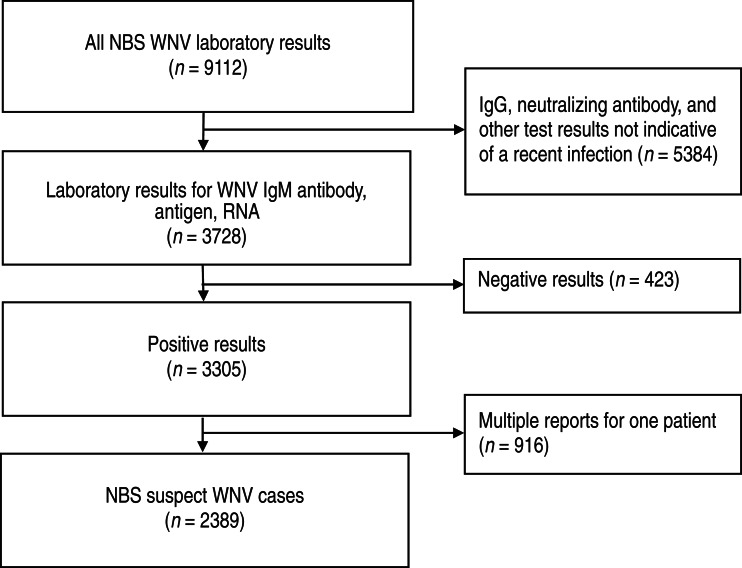

From 2008 to 2012, a total of 9112 WNV test results were reported to NBS in Texas (Fig. 1). Of those, 3728 (41%) were results for test types that are consistent with recent WNV infection, including 3613 IgM antibody, 115 RNA, and no viral antigen tests based on LOINC codes. The remaining 5384 (59%) WNV test results had insufficient evidence of recent infection. These included 5231 IgG antibody results and 153 results that represented other test types that could not be classified based on the LOINC code alone. Of the 3728 results for test types used to identify recent infections, 423 (11%) reports represented negative results that were entered along with a positive result (e.g. negative IgM and positive IgG results) from the same patient and 916 (25%) reports represented multiple reports from the same patient.

Fig. 1.

National Electronic Disease Surveillance System Base System (NBS) suspect West Nile virus (WNV) cases identified from laboratory results, Texas, 2008–2012.

After removing negative and duplicate reports, the individuals with the remaining 2389 reports were classified as NBS suspect WNV cases; of these, 2142 (90%) were reported electronically (Table 1). The proportion of NBS suspect WNV cases reported electronically each year did not change over the 5-year period (median 90%, range 86–98%; P = 0·77). During the same time period that the 2389 suspect cases were reported to NBS, 2161 WNV disease cases were reported to Texas DSHS (Table 1). The majority of both NBS suspect cases (88%, 2108/2389) and WNV disease cases (86%, 1865/2161) during the 5-year time period were reported during 2012.

Table 1.

Sensitivity and positive predictive value (PPV) of West Nile virus (WNV) suspect cases reported to the National Electronic Disease Surveillance System Base System (NBS) compared to WNV disease cases reported to the Texas Department of State Health Services (DSHS), by year, Texas, 2008–2012

| Year | NBS suspect cases | WNV disease cases | Matched cases* | Sensitivity† | PPV‡ | NBS suspect cases reported electronically, no. (%) |
|---|---|---|---|---|---|---|
| 2008 | 69 | 65 | 34 | 52% | 49% | 61 (88) |
| 2009 | 77 | 115 | 57 | 50% | 74% | 70 (91) |
| 2010 | 93 | 89 | 66 | 74% | 71% | 80 (86) |
| 2011 | 42 | 27 | 21 | 78% | 50% | 41 (98) |
| 2012 | 2108 | 1865 | 1670 | 90% | 79% | 1890 (90) |
| Total | 2389 | 2161 | 1848 | 86% | 77% | 2142 (90) |

\* A person who was an NBS suspect case and was also reported to Texas DSHS as a WNV disease case.

† Sensitivity = matched cases/all WNV disease cases reported to Texas DSHS.

‡ PPV = matched cases/all NBS suspect cases.

Sensitivity and PPV for NBS suspect cases

Of the 2161 WNV disease cases and 2389 NBS suspect cases, 1848 were present in both systems, 541 NBS suspect cases were not reported as WNV disease cases, and 313 WNV disease cases were not NBS suspect cases. Given this, the sensitivity of NBS reports was 86% (1848/2161) and the PPV of NBS reports was 77% (1848/2389) (Table 1). Sensitivity increased during the study period, from 52% in 2008 to 90% in 2012 (P < 0·01). PPV also increased during the study period, from 49% in 2008 to 79% in 2012 (P < 0·01). Using the ratio of WNV disease cases to NBS suspect cases, we estimate that for every 10 suspect cases reported to NBS, 9·0 [95% confidence interval (CI) 8·9–9·2] WNV disease cases were reported to Texas DSHS.

We also examined the impact that filtering NBS laboratory reports had on sensitivity and PPV. When all 9112 WNV laboratory reports to NBS from 2008 to 2012 were used, including all test types, positives, negatives, and multiple tests for the same patient, the sensitivity for identifying WNV disease cases remained stable at 88% (1892/2161) but the PPV decreased substantially to 21% (1892/9112). As a result, for every 10 WNV laboratory reports to NBS, only 2·4 (95% CI 2·3–2·5) WNV disease cases were reported to Texas DSHS.
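The effect of filtering can be seen by placing the two sets of calculations side by side. The block below only restates the counts reported above; the subscripts are our labels.

```latex
\begin{align*}
\mathrm{Se}_{\text{filtered}} &= \frac{1848}{2161} \approx 86\%, &
\mathrm{PPV}_{\text{filtered}} &= \frac{1848}{2389} \approx 77\% \\
\mathrm{Se}_{\text{unfiltered}} &= \frac{1892}{2161} \approx 88\%, &
\mathrm{PPV}_{\text{unfiltered}} &= \frac{1892}{9112} \approx 21\%
\end{align*}
```

Filtering leaves the numerator almost unchanged (1848 vs. 1892 matched cases) while the PPV denominator falls from 9112 to 2389, which is why sensitivity is nearly constant and PPV changes markedly.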

Lead and lag times

Of 1846 cases that were reported to both systems and had available reporting dates, WNV laboratory results were reported to NBS with a median lead time of 7 days (IQR 3–15 days) before the corresponding disease case was reported to Texas DSHS (Table 2). Although some laboratory results were reported to NBS after the case report, most (94%, 1726/1846) laboratory results were entered prior to the case report. The lead time from NBS report to when a case was reported to DSHS did not change over the period from 2008 to 2012 (P = 0·49) and was not associated with the number of disease cases reported in a given year (P = 0·37). Electronic laboratory reports provided a longer lead time (median 8 days, IQR 4–16 days) than manually entered reports (median 2 days, IQR 0–4 days; P < 0·01).

Table 2.

Lead time and lag time for matched cases by year, Texas, 2008–2012*

| Year | Lead time (days)†, median (IQR) | Lag time (days)‡, median (IQR) |
|---|---|---|
| 2008 | 9 (3–26) | 18 (13–25) |
| 2009 | 13 (6–21) | 20 (15–35) |
| 2010 | 8 (3–21) | 16 (12–21) |
| 2011 | 16 (5–35) | 19 (14–25) |
| 2012 | 7 (3–14) | 14 (10–20) |
| Total | 7 (3–15) | 14 (10–20) |

IQR, Interquartile range.

\* A matched case was defined as a person who was a National Electronic Disease Surveillance System Base System (NBS) suspect case and was also reported to Texas Department of State Health Services as a West Nile virus (WNV) disease case.

† Lead time was the difference between the date an NBS suspect case was entered into NBS and the date it was reported as a WNV disease case.

‡ Lag time was the difference between the date of a WNV disease case symptom onset and the date an NBS suspect case was entered into NBS.

Of 1847 matched cases that had a recorded date of onset in the Texas DSHS dataset and a reporting date in NBS, the median lag time between illness onset and reporting of a laboratory result to NBS was 14 days (IQR 10–20 days) (Table 2). The lag time was significantly shorter in 2012 (median 14 days, IQR 10–20 days) compared to previous years (median 18 days, IQR 13–25 days; P < 0·01). Electronic laboratory reports also provided a shorter lag time (median 14 days, IQR 10–20 days) than manually entered reports (median 19 days, IQR 13–29 days; P < 0·01). Overall, the median time from illness onset to specimen collection was 7 days (IQR 4–12 days). There was a median of 5 days (IQR 4–7 days) from specimen collection until a laboratory result was available and a median of only 1 day (IQR 0–2 days) from when the laboratory result was available until it was reported to NBS. The time from illness onset to reporting of results to NBS was the same for patients with neuroinvasive disease (i.e. meningitis, encephalitis, or acute flaccid paralysis) (median 14 days, IQR 10–20 days) and non-neuroinvasive disease (e.g. WNV fever) (median 14 days, IQR 10–21 days). However, the time from illness onset to specimen collection was shorter for neuroinvasive disease cases (median 6 days, IQR 3–10 days) compared to non-neuroinvasive disease cases (median 7 days, IQR 4–13 days; P < 0·01).

The distribution of NBS suspect cases by week reported was similar to the distribution of WNV disease cases by week of illness onset with the laboratory reports shifted later by ~2 weeks due to the lag time (Fig. 2). NBS suspect cases also had a similar distribution to WNV disease cases by week reported to Texas DSHS with the laboratory reports preceding the case reports by ~1 week due to the lead time.

Fig. 2.

West Nile virus (WNV) disease cases by week of onset and week reported and National Electronic Disease Surveillance System Base System (NBS) suspect cases by week entered, Texas, 2008–2012.

DISCUSSION

We found that NBS laboratory reports were useful surrogates for WNV disease cases that have already occurred and will be reported to Texas DSHS. From 2008 to 2012, most of the WNV disease cases reported to Texas DSHS had a corresponding NBS laboratory report. Laboratory reports provided ~1 week lead time for information about both the number and distribution of subsequent WNV disease cases. The data were most useful after being filtered to eliminate laboratory reports that did not satisfy the criteria of the case definition. Because filtering NBS laboratory results did not substantially alter the sensitivity but greatly improved the PPV, we recommend filtering NBS laboratory results before using them to assess disease trends. Using unfiltered data would substantially overestimate the number of subsequent WNV disease cases. This finding also highlights the fact that positive IgG antibodies alone are evidence of previous and not recent infection and do not meet the criteria for the national surveillance case definition for WNV disease [9].

The PPV of NBS laboratory reports in this analysis (77%) was lower than that in a previous study, which found a PPV of 85% for laboratory reports from a managed care organization in California for seven notifiable conditions (Campylobacter jejuni, Chlamydia trachomatis, Cryptosporidium parvum, hepatitis A, Neisseria meningitidis, Neisseria gonorrhoeae, Salmonella spp.) [13]. In that study, the PPV was reduced by patients with positive laboratory results who were not reported to the state health department as disease cases because of laboratory reporting errors, data-entry errors, failures to share information between county health departments, and disease coding. While we did not have this type of information for WNV laboratory reports, these same errors may have occurred and reduced the PPV of the NBS reports. Conversely, a study that evaluated laboratory reports for Lyme disease in New Jersey found a PPV of only 18%, suggesting that laboratory reports may be poor surrogates for cases in diseases with complex case definitions and laboratory confirmation requirements [14]. The national surveillance case definition for WNV includes both clinical and laboratory criteria [9].

Our finding that electronically submitted laboratory results provided more lead time than manually entered results is consistent with the results of two previous studies. In Hawaii, electronic laboratory reports for five notifiable conditions (Salmonella spp., Shigella spp., Giardia spp., vancomycin-resistant enterococcus, Streptococcus pneumoniae) were submitted 4 days (95% CI 3–5 days) faster than paper reports [15]. Similarly, reporting of sexually transmitted infections, tuberculosis, and other communicable diseases to the New York City Department of Health and Mental Hygiene improved by a median of 11 days (range 3–42 days) when electronic laboratory reporting was used [16]. Increases in the proportion of laboratories using electronic reporting and sharing data electronically between health departments will likely decrease data errors and loss, and potentially improve both PPV and the lead time provided by laboratory reports [13, 15, 16]. However, laboratory reports alone lack some of the information gathered during traditional case reporting, such as symptoms, clinical syndrome, and outcome. The sensitivity and PPV of NBS reports both increased over time, causing the expected number of WNV disease cases for each NBS suspect case to remain relatively constant. However, this measure should be periodically re-evaluated as it may be affected by changes in Texas's electronic surveillance system [11].

Although we did not observe any trends in the lead time from laboratory result report date to disease case report date by year or the number of cases in a year, the median lag time from onset of illness to laboratory result report date was 4 days shorter in 2012 compared to previous years. This may be due to patients seeking care or healthcare providers ordering WNV testing earlier in the course of illness because of increased awareness of the disease during a large outbreak, or because of changes in laboratory testing schedules due to an increase in WNV test orders. In fact, with electronic laboratory reporting the rate-limiting steps in the timeliness of receiving results appear to be the time it takes for people to seek care, healthcare providers to order tests, and the laboratory to complete the testing. Patients with neuroinvasive disease appear to seek care or have laboratory tests ordered earlier in their course of illness but the difference is small and does not significantly impact the time to reporting of results. Laboratory test orders and results should be evaluated further to determine if they provide an early indicator of where WNV activity is occurring or when a seasonal outbreak has peaked.

This study was subject to several limitations. The results of this study may not be generalizable to other diseases investigated by Texas DSHS or to other states with different processes for investigating and reporting suspect WNV disease cases. We were also unable to classify all WNV laboratory results as to whether they provided sufficient evidence of recent infection, so some NBS suspect cases may have been missed. The lack of a common patient or sample identifier in the reporting systems could have led to errors in matching NBS laboratory reports and WNV disease cases. The relatively small numbers of cases reported from 2008 to 2011 might have impacted the precision of sensitivity and PPV calculations for those years. Finally, there was a lack of information on NBS suspect cases that were not reported as WNV disease cases and WNV disease cases that did not have NBS reports, so we were unable to assess these data for errors or find ways to improve the sensitivity or PPV of the system.

At least 42 states now have the capacity to receive electronic laboratory reports [11]. This number is expected to rise as more states comply with Centers for Medicare and Medicaid Services rules for meaningful use of health information technology. Therefore, electronic laboratory reports could increasingly be used to complement traditional case-reporting systems and provide more timely information about disease trends and outbreaks. We found that NBS laboratory reports could provide timely information about disease trends and may be useful during outbreaks for making decisions about public health interventions, such as insecticide spraying. Further analysis is warranted for WNV and other diseases and systems in other states to explore the usefulness and limitations of electronic laboratory reports as a complementary approach to infectious disease surveillance for the timely detection of cases.

ACKNOWLEDGEMENTS

We thank Doug Hamaker, Jim Schuermann, and Dawn Hesalroad for their assistance with this analysis. The findings and conclusions of this report are those of the authors and do not necessarily represent the views of the Centers for Disease Control and Prevention.

DECLARATION OF INTEREST

None.

REFERENCES

- 1. Centers for Disease Control and Prevention. Surveillance for human West Nile virus disease – United States, 1999–2008. Morbidity and Mortality Weekly Report 2010; 59 (SS-2): 1–17.

- 2. Petersen LR, Fischer M. Unpredictable and difficult to control: West Nile virus enters adolescence. New England Journal of Medicine 2012; 367: 1281–1284.

- 3. Centers for Disease Control and Prevention. West Nile virus and other arboviral diseases – United States, 2012. Morbidity and Mortality Weekly Report 2013; 62: 513–520.

- 4. Janusz KB, et al. Laboratory testing practices for West Nile virus in the United States. Vector Borne and Zoonotic Diseases 2011; 11: 597–599.

- 5. Martin DA, et al. Standardization of immunoglobulin M capture enzyme-linked immunosorbent assays for routine diagnosis of arboviral infections. Journal of Clinical Microbiology 2000; 38: 1823–1826.

- 6. Lanciotti RS. Molecular amplification assays for the detection of flaviviruses. Advances in Virus Research 2003; 61: 67–99.

- 7. Lanciotti RS, et al. Rapid detection of West Nile virus from human clinical specimens, field-collected mosquitoes, and avian samples by a TaqMan reverse transcriptase-PCR assay. Journal of Clinical Microbiology 2000; 38: 4066–4071.

- 8. Guarner J, et al. Clinicopathologic study and laboratory diagnosis of 23 cases with West Nile virus encephalomyelitis. Human Pathology 2004; 35: 983–990.

- 9. Centers for Disease Control and Prevention. Arboviral diseases, neuroinvasive and non-neuroinvasive: 2011 case definition. Atlanta, GA: US Department of Health and Human Services, CDC; 2011 (http://wwwn.cdc.gov/NNDSS/script/casedef.aspx?CondYrID=616&DatePub=1/1/201112:00:00AM). Accessed 27 September 2013.

- 10. Centers for Disease Control and Prevention. National Notifiable Diseases Surveillance System (NNDSS) (http://wwwn.cdc.gov/nndss/script/nedss.aspx). Accessed 27 September 2013.

- 11. Centers for Disease Control and Prevention. State electronic disease surveillance systems – United States, 2007 and 2010. Morbidity and Mortality Weekly Report 2011; 60: 1421–1423.

- 12. National Electronic Disease Surveillance System Working Group. National Electronic Disease Surveillance System (NEDSS): a standards-based approach to connect public health and clinical medicine. Journal of Public Health Management and Practice 2001; 7: 43–50.

- 13. Backer HD, Bissell SR, Vugia DJ. Disease reporting from an automated laboratory-based reporting system to a state health department via local county health departments. Public Health Reports 2001; 116: 257–265.

- 14. Centers for Disease Control and Prevention. Effect of electronic laboratory reporting on the burden of Lyme disease surveillance – New Jersey, 2001–2006. Morbidity and Mortality Weekly Report 2008; 57: 42–45.

- 15. Effler P, et al. Statewide system of electronic notifiable disease reporting from clinical laboratories: comparing automated reporting with conventional methods. Journal of the American Medical Association 1999; 282: 1845–1850.

- 16. Nguyen TQ, et al. Benefits and barriers to electronic laboratory results reporting for notifiable diseases: the New York City Department of Health and Mental Hygiene experience. American Journal of Public Health 2007; 97 (Suppl. 1): S142–145.