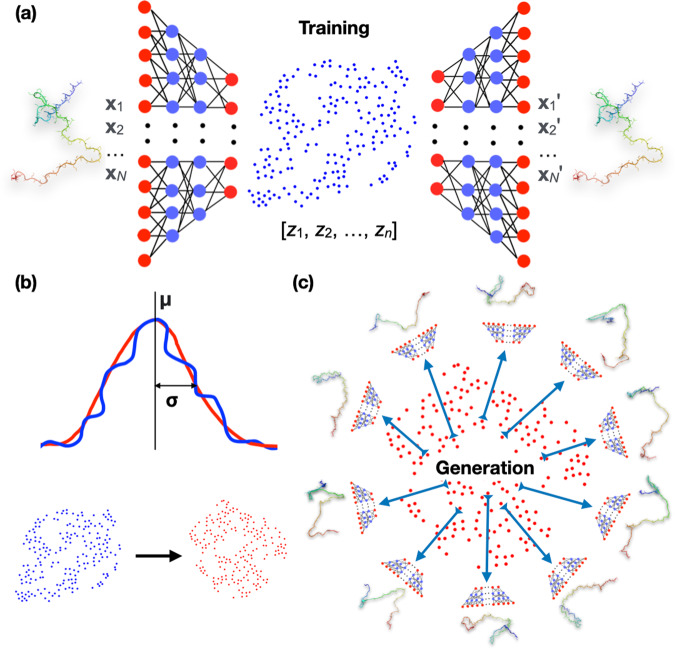

Fig. 1. Design of generative autoencoders.

a Illustration of the architecture of an autoencoder. The encoder part of the autoencoder represents the conformations of an IDP, specified by the Cartesian coordinates [x1, x2, …, xN] of N heavy atoms, as n-dimensional vectors [z1, z2, …, zn] in the latent space. The decoder then maps the latent vectors back to conformations in Cartesian coordinates, [x1′, x2′, …, xN′]. During training, the weights of the neural networks are tuned to minimize the deviation of the reconstructed conformations from the original ones. b Modeling of the distribution of the latent vectors (blue) of the training set by a multivariate Gaussian (red). The mean vector and covariance matrix of the Gaussian are those of the training latent vectors. The curves illustrate a Gaussian fit to the distribution of the training data; the scatter plots compare the training data with the Gaussian model. c Generation of new conformations. Vectors sampled from the multivariate Gaussian are fed to the decoder to generate new conformations. IDP structures are shown in a color spectrum with blue at the N-terminus and red at the C-terminus.
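
The steps in panels b and c can be sketched in a few lines of NumPy. This is only an illustrative sketch, not the implementation behind the figure: the function names `fit_latent_gaussian` and `generate_conformations` are hypothetical, the training latent vectors are random placeholder data, and `toy_decoder` is a random linear map standing in for a trained decoder network.

```python
import numpy as np


def fit_latent_gaussian(latent):
    """Panel b: the Gaussian's mean vector and covariance matrix are
    those of the training latent vectors (one row per conformation)."""
    mean = latent.mean(axis=0)
    cov = np.cov(latent, rowvar=False)  # columns are latent dimensions
    return mean, cov


def generate_conformations(decoder, mean, cov, n_samples, seed=0):
    """Panel c: sample latent vectors from the multivariate Gaussian
    and feed them to the decoder."""
    rng = np.random.default_rng(seed)
    z = rng.multivariate_normal(mean, cov, size=n_samples)
    return decoder(z)


# --- Placeholder data and decoder (assumptions, for illustration only) ---
rng = np.random.default_rng(42)
n_latent, n_atoms = 4, 10  # hypothetical latent dimension and atom count

# Stand-in for the encoder output on the training set.
train_latent = rng.normal(size=(500, n_latent))

# Stand-in for a trained decoder: a fixed random linear map from the
# latent space to 3 * n_atoms Cartesian coordinates.
W = rng.normal(size=(n_latent, 3 * n_atoms))


def toy_decoder(z):
    return (z @ W).reshape(len(z), n_atoms, 3)


mean, cov = fit_latent_gaussian(train_latent)
new_confs = generate_conformations(toy_decoder, mean, cov, n_samples=100)
print(new_confs.shape)  # (100, 10, 3)
```

In practice `toy_decoder` would be replaced by the decoder half of the trained autoencoder, and `train_latent` by the encoder's output on the training conformations.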