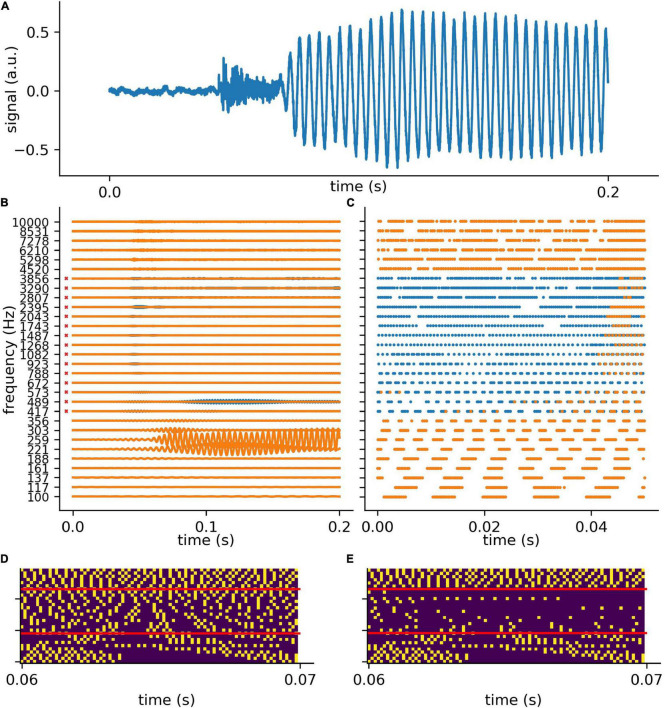

FIGURE 4.

Exemplary processing of a word by the cochlea and DCN model. (A) The first 0.2 s of audio data of the German word “die” (“the”). (B) The 30 frequency components (blue, without hearing loss; orange, with hearing loss) after the first part of the model, which represents the cochlea and the spiral ganglion (Figure 1A). A virtual hearing loss is applied by attenuating the signal in a given frequency range (e.g., by 30 dB between 400 Hz and 4 kHz). The bandpass-filtered signal (a matrix of 30 frequency channels × fs · signal duration samples) is fed to the LIF neurons (refractory time ≈ 0.25 ms), which generate the spike trains (C). These spike trains are down-sampled by a factor of 5 and fed to the deep neural network (D). (E) The same signal as in panel D, with a 30 dB hearing loss added in the speech-relevant frequency range of 400 Hz–4 kHz.
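The processing chain in this caption (virtual hearing loss, LIF spike generation, down-sampling) can be sketched in Python/NumPy as below. This is a minimal sketch under stated assumptions, not the authors' implementation. Only the 30 channels, the 30 dB attenuation between 400 Hz and 4 kHz, the ≈ 0.25 ms refractory time, and the down-sampling factor of 5 come from the caption. The helper names, the 16 kHz sampling rate, the channel center frequencies, the LIF time constant and threshold, the half-wave rectified input current, and the use of a per-window maximum for down-sampling are hypothetical choices, and the cochlear filterbank is replaced by one sinusoid per channel.

```python
import numpy as np

# Hypothetical helper names; parameter values not stated in the caption are assumptions.


def apply_virtual_hearing_loss(channels, center_freqs, f_lo=400.0, f_hi=4000.0, atten_db=30.0):
    """Attenuate every channel whose center frequency lies in [f_lo, f_hi] by atten_db dB."""
    gain = 10.0 ** (-atten_db / 20.0)  # 30 dB amplitude attenuation -> factor of about 0.0316
    in_band = (center_freqs >= f_lo) & (center_freqs <= f_hi)
    out = channels.copy()
    out[in_band] *= gain
    return out


def lif_spikes(channels, fs, tau=1e-3, threshold=1.0, t_ref=0.25e-3):
    """One leaky integrate-and-fire neuron per channel, integrated with forward Euler.

    tau and threshold are assumed values; t_ref is the caption's ~0.25 ms refractory time.
    """
    n_ch, n_t = channels.shape
    dt = 1.0 / fs
    ref_steps = int(round(t_ref * fs))  # refractory period in samples
    drive = np.maximum(channels, 0.0)  # assumed: half-wave rectified signal as input current
    v = np.zeros(n_ch)
    refractory = np.zeros(n_ch, dtype=int)
    spikes = np.zeros((n_ch, n_t), dtype=np.uint8)
    for k in range(n_t):
        active = refractory == 0
        # dv/dt = (-v + I) / tau, only for neurons outside their refractory period
        v[active] += dt / tau * (drive[active, k] - v[active])
        fired = active & (v >= threshold)
        spikes[fired, k] = 1
        v[fired] = 0.0  # reset membrane potential after a spike
        refractory[fired] = ref_steps
        refractory[~active] -= 1  # count down neurons that were already refractory
    return spikes


def downsample_spikes(spikes, factor=5):
    """Down-sample by `factor`, keeping a spike if any sample in the window spiked (assumed rule)."""
    n_ch, n_t = spikes.shape
    n_win = n_t // factor
    trimmed = spikes[:, : n_win * factor]
    return trimmed.reshape(n_ch, n_win, factor).max(axis=2)


# Toy input: 0.2 s at an assumed 16 kHz, 30 channels with hypothetical center frequencies.
fs = 16_000
t = np.arange(int(round(0.2 * fs))) / fs
center_freqs = np.geomspace(100.0, 8000.0, 30)
# Stand-in for the filterbank output: one sinusoid per channel at its center frequency.
channels = 3.0 * np.sin(2.0 * np.pi * center_freqs[:, None] * t)

spikes = lif_spikes(channels, fs)
spikes_hl = lif_spikes(apply_virtual_hearing_loss(channels, center_freqs), fs)
dnn_input = downsample_spikes(spikes, factor=5)        # 30 x 640 input (normal hearing)
dnn_input_hl = downsample_spikes(spikes_hl, factor=5)  # 30 x 640 input (with hearing loss)
```

With the assumed 16 kHz sampling rate, the 0.25 ms refractory time spans 4 samples, so consecutive spikes in a channel are at least 5 samples apart and each down-sampling window holds at most one spike; taking the window maximum therefore loses no spikes. In this toy setup the attenuated channels never drive the membrane potential above threshold, so their rows in `spikes_hl` stay silent while the other channels are unchanged.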