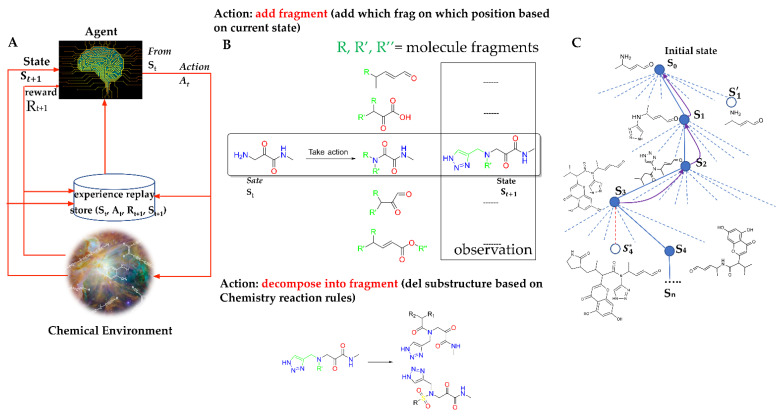

Figure 2.

(A) Framework of the ADQN–FBDD, which consists of a reinforcement learning (RL) agent, a prioritized experience replay algorithm, and a chemical environment that together perform chemical structure generation. At each step, the agent selects an action (insertion, deletion, or none) for the intermediate molecular fragment, with the goal of generating a new molecule that maximizes the cumulative reward. The prioritized experience replay algorithm lets the agent repeatedly learn from stored molecule-generation experiences as its reward estimates are updated. The chemical environment evaluates the agent's actions according to predefined chemical rules and returns rewards. (B) Example of fragment-based actions. (C) Solid lines represent actions taken during an episode, including the addition or deletion of different fragments; dashed lines represent actions the RL agent considered but did not take. The red dashed line marks an exploratory action, taken even though a sibling action (the one leading to S*) was ranked higher. The exploratory action produced no learning, but the other actions did, producing updates (curved arrows) in which estimated values were passed back up the tree from later nodes to earlier nodes.
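The caption names the framework's components but not their mechanics. The following is a minimal sketch of how such pieces are commonly wired together in a DQN-style agent, assuming the standard proportional variant of prioritized experience replay, epsilon-greedy action selection over the three fragment actions, and a one-step Q-learning target. It is not the ADQN–FBDD implementation: `ACTIONS`, `PrioritizedReplayBuffer`, `select_action`, `td_target`, and the hyperparameters `alpha`, `beta`, `epsilon`, and `gamma` are hypothetical names, and molecules are treated as opaque transitions with no chemistry involved.

```python
import random

# Hypothetical action set mirroring "insertion, deletion, or none" (assumption).
ACTIONS = ("insert", "delete", "none")


class PrioritizedReplayBuffer:
    """Hypothetical proportional prioritized replay: P(i) = p_i**alpha / sum_k p_k**alpha."""

    def __init__(self, capacity, alpha=0.6, eps=1e-6):
        self.capacity = capacity
        self.alpha = alpha  # 0 gives uniform sampling; larger values favor high-priority items
        self.eps = eps      # keeps every priority strictly positive
        self.data = []
        self.priorities = []
        self.pos = 0        # next slot to overwrite once the buffer is full

    def add(self, transition):
        # New transitions receive the current maximum priority so they are likely replayed soon.
        p = max(self.priorities, default=1.0)
        if len(self.data) < self.capacity:
            self.data.append(transition)
            self.priorities.append(p)
        else:
            self.data[self.pos] = transition
            self.priorities[self.pos] = p
        self.pos = (self.pos + 1) % self.capacity

    def probabilities(self):
        scaled = [p ** self.alpha for p in self.priorities]
        total = sum(scaled)
        return [s / total for s in scaled]

    def sample(self, batch_size, beta=0.4):
        probs = self.probabilities()
        n = len(self.data)
        idx = random.choices(range(n), weights=probs, k=batch_size)
        # Importance-sampling weights offset the bias of non-uniform sampling;
        # here they are normalized by the largest weight in the batch.
        weights = [(n * probs[i]) ** (-beta) for i in idx]
        w_max = max(weights)
        return idx, [self.data[i] for i in idx], [w / w_max for w in weights]

    def update_priorities(self, idx, td_errors):
        # Larger temporal-difference errors lead to more frequent replay.
        for i, err in zip(idx, td_errors):
            self.priorities[i] = abs(err) + self.eps


def select_action(q_values, epsilon):
    """Epsilon-greedy: with probability epsilon, take a random (exploratory) action."""
    if random.random() < epsilon:
        return random.randrange(len(q_values))
    return max(range(len(q_values)), key=lambda a: q_values[a])


def td_target(reward, next_q_values, done, gamma=0.99):
    """One-step Q-learning target: r + gamma * max_a' Q(s', a'), or just r at episode end."""
    return reward if done else reward + gamma * max(next_q_values)
```

With `epsilon=0.0` the agent always picks the highest-valued action; a positive `epsilon` occasionally yields exploratory moves such as the red dashed line in panel (C). The absolute TD errors computed from `td_target` would be fed back through `update_priorities`, so transitions whose value estimates changed most are revisited most often.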