Abstract

Background: Colon capsule endoscopy (CCE) is an alternative for patients who are unwilling to undergo, or have contraindications to, conventional colonoscopy. Colorectal cancer screening may benefit greatly from widespread acceptance of a non-invasive tool such as CCE. However, reviewing CCE exams is a time-consuming process, with a risk of overlooking important lesions. We aimed to develop an artificial intelligence (AI) algorithm using a convolutional neural network (CNN) architecture for the automatic detection of colonic protruding lesions in CCE images. An anonymized database of CCE images collected from a total of 124 patients was used. This database included images of patients with colonic protruding lesions, patients with normal colonic mucosa, and patients with other pathologic findings. A total of 5715 images were extracted for CNN development. Two image datasets were created and used for training and validation of the CNN. The area under the receiver operating characteristic curve (AUROC) for detection of protruding lesions was 0.99. The sensitivity, specificity, positive predictive value (PPV) and negative predictive value (NPV) were 90.0%, 99.1%, 98.6% and 93.2%, respectively. The overall accuracy of the network was 95.3%. The developed deep learning algorithm accurately detected protruding lesions in CCE images. The introduction of AI technology to CCE may increase its diagnostic accuracy and its acceptance for screening of colorectal neoplasia.

Keywords: colon capsule endoscopy, artificial intelligence, convolutional neural network, colorectal neoplasia

1. Introduction

Capsule endoscopy (CE) is a primary diagnostic tool for the investigation of patients with suspected small bowel disease. Colon capsule endoscopy (CCE) has recently been introduced as a minimally invasive alternative to conventional colonoscopy for evaluation of the colonic mucosa [1,2]. This system overcomes some of the drawbacks associated with conventional colonoscopy, including the potential for pain, the use of sedation, and the risk of adverse events such as bleeding and perforation [3]. The clinical application of this tool has been most extensively studied in the setting of colorectal cancer screening, particularly for patients with a previous incomplete colonoscopy or for whom colonoscopy is contraindicated, unfeasible or unwanted [4,5]. The role of CCE as an alternative to conventional colonoscopy in colorectal cancer screening is growing. A recent meta-analysis by Vuik and coworkers reported similar performance levels for conventional colonoscopy and CCE, as well as the superiority of CCE over computed tomography colonography (virtual colonoscopy) [6]. However, a single full-length CCE video may produce over 50,000 images, and reviewing these images is a monotonous and time-consuming task, requiring approximately 50 min for completion [2]. Furthermore, any given frame may capture only a fragment of a mucosal abnormality, and lesions may be depicted in a very small number of frames. Therefore, the risk of overlooking important lesions is not negligible [2].

The combination of enhanced computational power with large clinical datasets has fostered the research and development of artificial intelligence (AI) tools for clinical implementation. The application of automated algorithms to diverse medical fields has provided promising results for disease identification and classification [7,8,9]. Convolutional neural networks (CNN) are a type of multi-layered deep learning algorithm tailored for image analysis. Their application to small bowel CE has provided promising results in the detection of several types of lesions [10,11,12,13]. In conventional colonoscopy, the introduction of AI tools for real-time detection of colorectal neoplasia has suggested a high diagnostic yield for CNN-based algorithms [14]. In contrast, the impact of AI algorithms for detection of colorectal neoplasia in CCE images has been scarcely evaluated. Enhanced reading of CCE images with these tools may improve the diagnostic accuracy of CCE for colorectal neoplasia, which is currently unsatisfactory [2]. Importantly, automated algorithms may also help to reduce the time required for reading a single CCE exam. The aim of this study was to develop and validate a CNN-based algorithm for the automatic detection of colonic protruding lesions in CCE images.

2. Materials and Methods

2.1. Study Design

A multicenter study was performed to develop and validate a CNN for the automatic detection of colonic protruding lesions. CCE images were retrospectively collected from two different institutions: São João University Hospital (Porto, Portugal) and ManopH Gastroenterology Clinic (Porto, Portugal). One hundred and twenty-four CCE exams (124 patients; 24 from São João University Hospital and 100 from ManopH Gastroenterology Clinic), performed between 2010 and 2020, were included. The full-length videos of all participants were reviewed, and a total of 5715 frames of the colonic mucosa were ultimately extracted. Relevant frames were included regardless of image quality and bowel cleansing quality. Inclusion and classification of frames were performed by three gastroenterologists experienced in CCE (Miguel Mascarenhas, Hélder Cardoso and Miguel Mascarenhas Saraiva), each with experience of more than 1500 CE exams prior to this study. A final decision on frame labelling required the agreement of at least two of the three researchers.

This study was approved by the ethics committee of São João University Hospital (No. CE 407/2020). The study protocol was conducted in accordance with the original Declaration of Helsinki and its subsequent revisions. This study is retrospective and non-interventional; thus, the output provided by the CNN had no influence on the clinical management of any included patient. Any information that could identify the included patients was omitted, and each patient was assigned a random number to guarantee effective data anonymization for the researchers involved in CNN development. A team with Data Protection Officer (DPO) certification (Maastricht University) confirmed the non-traceability of the data and conformity with the General Data Protection Regulation (GDPR).

2.1.1. Colon Capsule Endoscopy Procedure

For all patients, CCE procedures were conducted using the PillCam™ COLON 2 system (Medtronic, Minneapolis, MN, USA). This system consists of three major components: the endoscopic capsule, an array of sensors connected to a data recorder, and software for frame review. The capsule measures 32.3 mm in length and 11.6 mm in width. It has two high-resolution cameras, each with a 172° angle of view. The frame rate varied automatically between 4 and 35 frames per second, depending on bowel motility. Each frame had a resolution of 512 × 512 pixels. The capsule battery has an estimated life of ≥10 h [2]. This system was launched in 2009 and has not undergone hardware updates since then; thus, no significant changes in image quality were evident during the study period. The images were reviewed using PillCam™ software version 9.0 (Medtronic, Minneapolis, MN, USA). Each frame was processed to remove information allowing patient identification (name, operating number, date of procedure).

Each patient received bowel preparation according to previously published guidelines [15]. Briefly, patients started a clear liquid diet on the day preceding capsule ingestion and fasted overnight before the examination. A polyethylene glycol solution was used in split dosage (2 L in the evening before and 2 L on the morning of capsule ingestion). Prokinetic therapy (10 mg domperidone) was administered if, upon real-time image review on the recorder, the capsule remained in the stomach 1 h after ingestion. Two boosters consisting of a sodium phosphate solution were administered after the capsule had entered the small bowel, 3 h apart. Only complete CCE exams were included; an exam was considered complete if the capsule was excreted.

2.1.2. Development of the Convolutional Neural Network

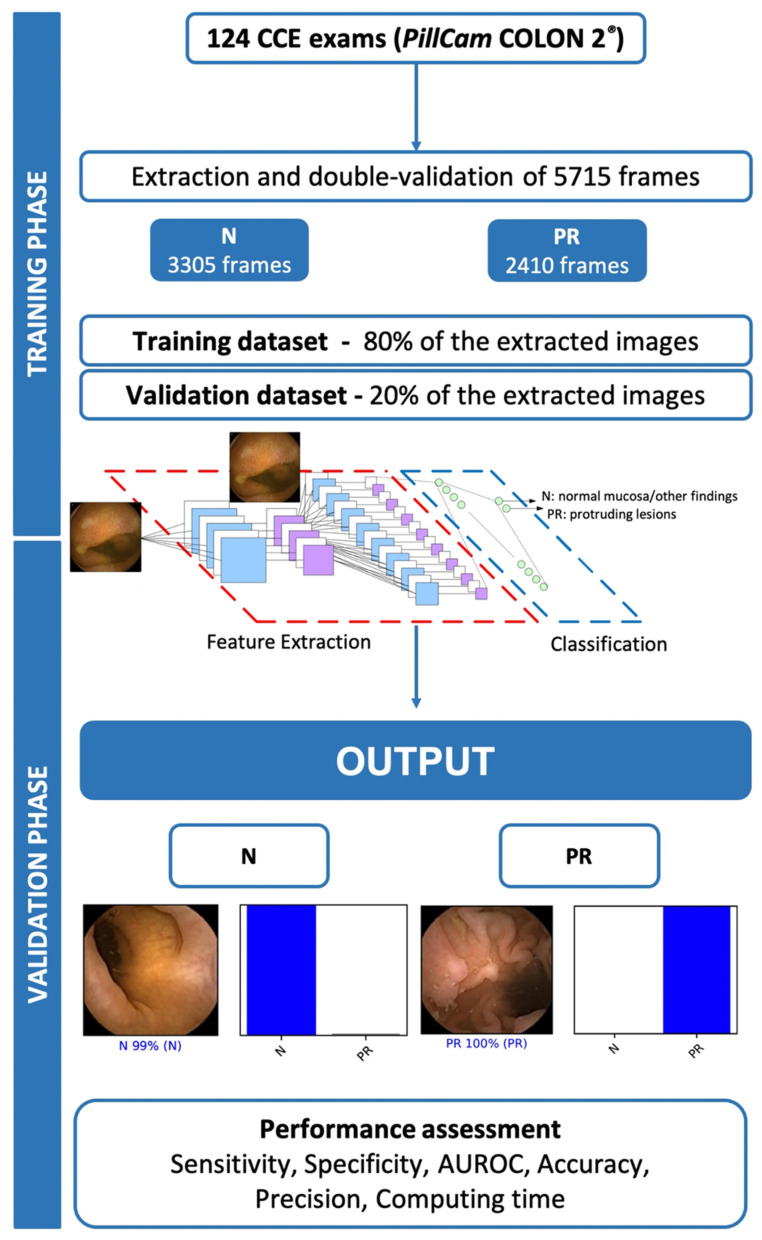

A deep learning CNN was developed for the automatic detection of colonic protruding lesions. Protruding lesions included all polyps, epithelial tumors, and subepithelial lesions. From the collected pool of images (n = 5715), 2410 showed protruding lesions and 3305 displayed normal mucosa or other mucosal lesions (ulcers, erosions, red spots, angiectasia, varices and lymphangiectasia). This pool of images was split to constitute the training and validation image datasets. The training dataset comprised 80% of the consecutively extracted images (n = 4572). The remaining 20% (n = 1143) formed the validation dataset, which was used for assessing the performance of the CNN (Figure 1).
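The consecutive 80/20 split can be sketched as follows. This is a minimal illustration under stated assumptions, not the study's code: the frame identifiers and function names are hypothetical, and we assume the cut was applied within each class in extraction order (the text states only that the training set comprised 80% of the consecutively extracted images). Applying the cut per class happens to reproduce the validation composition reported in Section 3.1 (482 protruding-lesion and 661 normal/other frames).

```python
from typing import Dict, List, Sequence, Tuple


def consecutive_split(frames: Sequence[str], train_fraction: float = 0.8) -> Tuple[List[str], List[str]]:
    """Keep extraction order: the first part trains the model, the remainder validates it."""
    cut = round(len(frames) * train_fraction)
    return list(frames[:cut]), list(frames[cut:])


def split_per_class(frames_by_class: Dict[str, Sequence[str]], train_fraction: float = 0.8):
    """Apply the consecutive 80/20 cut separately to each class (an assumption)."""
    train: Dict[str, List[str]] = {}
    val: Dict[str, List[str]] = {}
    for label, frames in frames_by_class.items():
        train[label], val[label] = consecutive_split(frames, train_fraction)
    return train, val


# Hypothetical frame identifiers, in extraction order, with the class sizes reported in the text
frames = {
    "PR": [f"pr_{i:05d}" for i in range(2410)],
    "N": [f"n_{i:05d}" for i in range(3305)],
}
train, val = split_per_class(frames)
print({label: len(v) for label, v in train.items()})  # {'PR': 1928, 'N': 2644}
print({label: len(v) for label, v in val.items()})    # {'PR': 482, 'N': 661}
```

A consecutive (rather than shuffled) cut keeps frames from the same stretch of video together, but whether frames from one patient can still fall on both sides of the cut is not stated in the text.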

Figure 1.

Summary of study design for the training and validation phases. PR—protruding lesion; N—normal mucosa or other findings.

To create the CNN, we modified the Xception model, using its weights pretrained on ImageNet (a large-scale image dataset designed for the development of object recognition software). To transfer this learning to our data, we kept the convolutional layers of the model. We replaced the last fully connected layers with two dense layers of size 2048 and 1024, respectively, and then attached a fully connected layer sized to the number of classes used to classify our endoscopic images. To avoid overfitting, a dropout layer with a drop rate of 0.3 was added between the convolutional and classification components of the network. We applied gradient-weighted class activation mapping (Grad-CAM) to the last convolutional layer [16] to highlight the features important for predicting protruding lesions. Each image was resized to 300 × 300 pixels. A learning rate of 0.0001, a batch size of 128 and 30 training epochs were set by trial and error. We used the TensorFlow 2.3 and Keras libraries to prepare the data and run the model. The analyses were performed on a computer equipped with a 2.1 GHz Intel® Xeon® Gold 6130 processor (Intel, Santa Clara, CA, USA) and two NVIDIA Quadro® RTX™ 8000 graphics processing units (NVIDIA Corporation, Santa Clara, CA, USA).
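A minimal Keras sketch of the architecture described above, assuming a recent TensorFlow 2.x release (layer locations may differ from the TensorFlow 2.3 used in the study). Several details are not reported and are our assumptions, flagged in the comments: global average pooling after the convolutional base, ReLU activations in the two dense layers, rescaling of pixel values to [-1, 1], the Adam optimizer, and a sparse categorical cross-entropy loss. The function name `build_protruding_lesion_classifier` is hypothetical.

```python
from typing import Optional

import tensorflow as tf

IMG_SIZE = 300  # frames resized to 300 x 300 pixels, as stated in the text


def build_protruding_lesion_classifier(num_classes: int = 2,
                                       weights: Optional[str] = "imagenet") -> tf.keras.Model:
    """Xception convolutional base plus a new dense classification head (illustrative sketch)."""
    # Convolutional layers of Xception without the original ImageNet classifier.
    # Global average pooling is an assumption; the text does not name the pooling step.
    base = tf.keras.applications.Xception(
        include_top=False,
        weights=weights,
        input_shape=(IMG_SIZE, IMG_SIZE, 3),
        pooling="avg",
    )

    inputs = tf.keras.Input(shape=(IMG_SIZE, IMG_SIZE, 3))
    # Assumed preprocessing: scale RGB values from [0, 255] to [-1, 1], the range Xception expects.
    x = tf.keras.layers.Rescaling(1.0 / 127.5, offset=-1.0)(inputs)
    x = base(x)
    # Dropout (rate 0.3) between the convolutional and classification components.
    x = tf.keras.layers.Dropout(0.3)(x)
    # Two dense layers of size 2048 and 1024 (ReLU activation is an assumption).
    x = tf.keras.layers.Dense(2048, activation="relu")(x)
    x = tf.keras.layers.Dense(1024, activation="relu")(x)
    # Output layer sized to the number of classes (protruding lesion vs. normal/other findings).
    outputs = tf.keras.layers.Dense(num_classes, activation="softmax")(x)

    model = tf.keras.Model(inputs, outputs)
    # Adam is an assumed optimizer; only the learning rate (0.0001) is reported.
    model.compile(
        optimizer=tf.keras.optimizers.Adam(learning_rate=1e-4),
        loss="sparse_categorical_crossentropy",
        metrics=["accuracy"],
    )
    return model
```

Training would then call `model.fit` on batched datasets, for example `model.fit(train_ds, validation_data=val_ds, epochs=30)` with `train_ds` and `val_ds` (hypothetical names) batched at 128 frames. Passing `weights=None` skips the ImageNet download, which is useful only for quick structural checks.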

2.1.3. Model Performance and Statistical Analysis

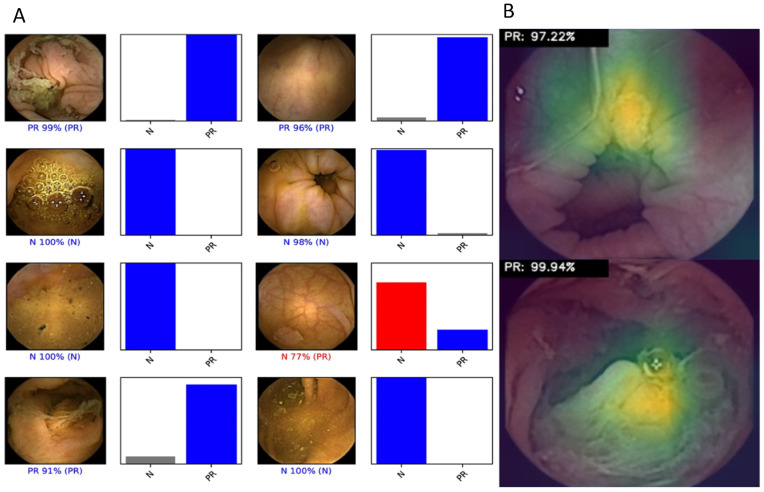

The primary outcome measures included sensitivity, specificity, positive and negative predictive values, and accuracy. We also used receiver operating characteristic (ROC) curve analysis and the area under the ROC curve (AUROC) to measure the performance of our model in distinguishing between the categories. For each image, the trained CNN calculated the probability of each category (protruding lesions vs. normal colonic mucosa or other findings). A higher probability value translated into greater confidence in the CNN prediction. The software generated heatmaps that localized the features that predicted a class probability (Figure 2A). The category with the highest probability score was output as the CNN's predicted classification (Figure 2B). The output provided by the network was compared with the specialists' classification (gold standard). Sensitivities, specificities, and positive and negative predictive values for the primary analysis were obtained using one iteration and are presented as percentages. We additionally performed a 3-fold cross-validation: the entire dataset was split into three even-sized image groups, and training and validation datasets were created for each of the three groups at a proportion of 80% and 20%, respectively. Cross-validation results are presented as means ± standard deviations (SD). The image processing performance of the network was determined by measuring the time required for the CNN to provide output for all images in the validation image dataset. Statistical analysis was performed using scikit-learn v0.22.2 [17].
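The decision rule and the AUROC described above can be illustrated without any library. This is a dependency-free sketch, not the study's code: the study used scikit-learn, where `sklearn.metrics.roc_auc_score` computes the same quantity; the class ordering in `CLASSES` and the function names are hypothetical. The AUROC is written in its rank (Mann-Whitney) form: the probability that a randomly chosen protruding-lesion frame receives a higher lesion probability than a randomly chosen normal/other frame.

```python
from typing import Sequence

# Class order is an assumption for illustration; index 0 is the lesion class.
CLASSES = ("protruding lesion", "normal mucosa/other findings")


def predict_class(probabilities: Sequence[float]) -> str:
    """Return the category with the highest CNN probability score."""
    best = max(range(len(probabilities)), key=lambda i: probabilities[i])
    return CLASSES[best]


def auroc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """Rank-based AUROC: probability that a random positive outscores a random negative.

    labels: 1 = protruding lesion (expert gold standard), 0 = normal mucosa/other findings.
    scores: CNN probability assigned to the protruding-lesion class.
    Ties count as half a correctly ordered pair (Mann-Whitney formulation).
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUROC needs at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


print(predict_class([0.8, 0.2]))                    # protruding lesion
print(auroc([1, 1, 0, 0], [0.9, 0.4, 0.6, 0.1]))  # 0.75
```

The pairwise loop is quadratic in the number of frames, which is fine for a validation set of about a thousand images; library implementations sort the scores instead.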

Figure 2.

Heatmaps (A) and output (B) obtained from the application of the convolutional neural network. (A) Examples of heatmaps showing CCE features of protruding lesions as identified by the CNN. (B) The bars represent the probability estimated by the network.
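The heatmaps in Figure 2A are produced with Grad-CAM [16]. For reference, the method's two defining equations are reproduced below in the notation of Selvaraju et al., which is not used elsewhere in this paper:

$$
\alpha_k^{c} = \frac{1}{Z}\sum_{i}\sum_{j}\frac{\partial y^{c}}{\partial A^{k}_{ij}},
\qquad
L^{c}_{\text{Grad-CAM}} = \mathrm{ReLU}\left(\sum_{k}\alpha_k^{c}A^{k}\right)
$$

Here $A^{k}$ is the $k$-th feature map of the last convolutional layer, $y^{c}$ is the network's score for class $c$ (here, protruding lesion) before the softmax, and $Z$ is the number of spatial positions $(i, j)$ in the feature map. The weights $\alpha_k^{c}$ average the gradients over space, and the ReLU keeps only regions that increase the class score; the resulting coarse map is upsampled to the input resolution for display over the frame.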

3. Results

3.1. Construction of the Network

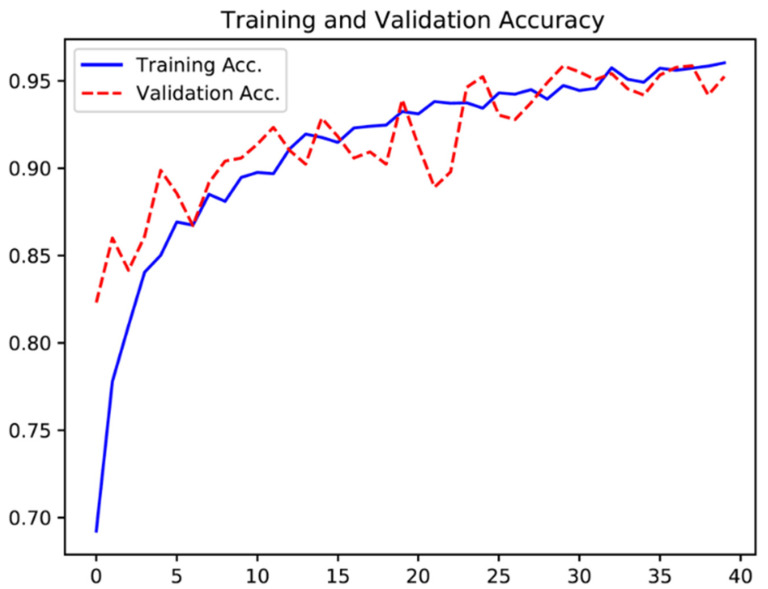

One hundred and twenty-four patients underwent CCE and were enrolled in this study. A total of 5715 frames were extracted: 2410 showing protruding lesions (2303 polyps, 8 subepithelial lesions and 99 epithelial tumors) and 3305 showing normal colonic mucosa or other findings. The training dataset comprised 80% of the total image pool. The remaining 20% of frames (n = 1143) were used for testing the model. This validation dataset was composed of 482 (42.2%) images with evidence of protruding lesions and 661 (57.8%) images with normal colonic mucosa/other findings. The CNN evaluated each image and predicted a classification (protruding lesions vs. normal mucosa/other findings), which was compared with the classification provided by the gastroenterologists. The accuracy of the CNN improved as the data were repeatedly fed to the network (Figure 3).

Figure 3.

Evolution of the accuracy of the convolutional neural network during the training and validation phases, as the training and validation datasets were repeatedly fed into the network.

3.2. Overall Performance of the Network

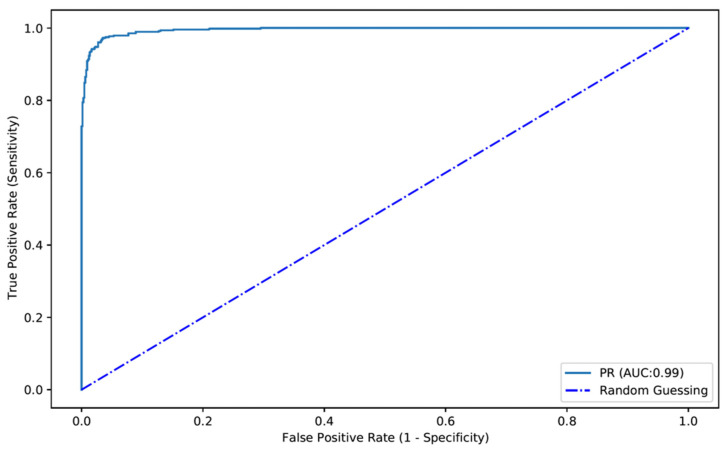

The confusion matrix between the trained CNN and expert classifications is shown in Table 1. Overall, the developed model had a sensitivity and specificity for the detection of protruding lesions of 90.0% and 99.1%, respectively. The positive and negative predictive values were, respectively, 98.6% and 93.2%. The overall accuracy of the network was 95.3% (Table 1). The AUROC for detection of protruding lesions was 0.99 (Figure 4).

Table 1.

Confusion matrix and performance metrics.

| CNN classification | Expert classification: protruding lesion | Expert classification: normal mucosa/other findings |
|---|---|---|
| Protruding lesion | 434 | 6 |
| Normal mucosa/other findings | 48 | 655 |

| Performance metric | Value |
|---|---|
| Sensitivity | 90.0% |
| Specificity | 99.1% |
| PPV | 98.6% |
| NPV | 93.2% |
| Accuracy | 95.3% |

Abbreviations: CNN—convolutional neural network; PPV—positive predictive value; NPV—negative predictive value.
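The metrics reported in Table 1 follow directly from the four confusion-matrix counts, with protruding lesion as the positive class. The sketch below recomputes them; the class and method names are illustrative and not taken from the study's code.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class ConfusionMatrix:
    """Binary confusion matrix with protruding lesion as the positive class."""

    tp: int  # CNN: protruding lesion, expert: protruding lesion
    fp: int  # CNN: protruding lesion, expert: normal mucosa/other findings
    fn: int  # CNN: normal mucosa/other findings, expert: protruding lesion
    tn: int  # CNN: normal mucosa/other findings, expert: normal mucosa/other findings

    def metrics(self) -> Dict[str, float]:
        total = self.tp + self.fp + self.fn + self.tn
        return {
            "sensitivity": self.tp / (self.tp + self.fn),
            "specificity": self.tn / (self.tn + self.fp),
            "ppv": self.tp / (self.tp + self.fp),
            "npv": self.tn / (self.tn + self.fn),
            "accuracy": (self.tp + self.tn) / total,
        }


table1 = ConfusionMatrix(tp=434, fp=6, fn=48, tn=655)
for name, value in table1.metrics().items():
    print(f"{name}: {100 * value:.1f}%")
# sensitivity: 90.0%, specificity: 99.1%, ppv: 98.6%, npv: 93.2%, accuracy: 95.3%
```

Note that PPV and NPV, unlike sensitivity and specificity, depend on the proportion of lesion frames in the evaluated set (42.2% here), so they would shift if the same model were applied to a dataset with a different class balance.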

Figure 4.

ROC analysis of the network's performance in the detection of protruding lesions vs. normal colonic mucosa/other findings. ROC—receiver operating characteristic; PR—protruding lesion.

We performed a 3-fold cross-validation, in which the entire dataset was randomized and split into three equally sized parts. The performance results for the three experiments are shown in Table 2. Overall, the estimated model accuracy was 95.6 ± 1.1%. The mean sensitivity and specificity of the model were 87.4 ± 4.6% and 96.1 ± 1.4%, respectively. The algorithm had a mean AUC of 0.976 ± 0.006.
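The randomized partition into three equal parts, each divided 80%/20% into training and validation sets as described in Section 2.1.3, can be sketched as follows. This is an illustration under assumptions: the frame indices and seed are hypothetical, and the text does not state whether the shuffle was stratified by class or grouped by patient, so this sketch does neither.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def random_groups(items: Sequence[T], k: int = 3, seed: int = 0) -> List[List[T]]:
    """Shuffle items reproducibly and deal them into k groups whose sizes differ by at most one."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # seed value is an arbitrary, hypothetical choice
    return [shuffled[i::k] for i in range(k)]


def train_val_split(group: Sequence[T], train_fraction: float = 0.8) -> Tuple[List[T], List[T]]:
    """Split one group into training (first 80%) and validation (remaining 20%) subsets."""
    cut = round(len(group) * train_fraction)
    return list(group[:cut]), list(group[cut:])


# Hypothetical frame indices standing in for the 5715 extracted frames
groups = random_groups(range(5715))
for i, group in enumerate(groups, start=1):
    train, val = train_val_split(group)
    print(f"group {i}: {len(group)} frames -> {len(train)} training / {len(val)} validation")
# each group: 1905 frames -> 1524 training / 381 validation
```

Because frames are shuffled individually, consecutive and near-identical frames from the same video can land in both the training and validation subsets of a group; a patient-level split would avoid this at the cost of less even group sizes.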

Table 2.

Three-fold cross validation.

| Fold | Sensitivity (%) | Specificity (%) | PPV (%) | NPV (%) | Accuracy (%) | AUC |
|---|---|---|---|---|---|---|
| Fold 1 | 82.8 | 97.5 | 62.6 | 99.1 | 96.9 | 0.980 |
| Fold 2 | 87.4 | 95.9 | 57.1 | 99.2 | 95.4 | 0.970 |
| Fold 3 | 92.1 | 94.7 | 48.4 | 99.6 | 94.6 | 0.980 |
| Overall, mean ± SD | 87.4 ± 4.6 | 96.1 ± 1.4 | 56.0 ± 7.1 | 99.3 ± 0.2 | 95.6 ± 1.1 | 0.976 ± 0.006 |

Abbreviations: SD—standard deviation; PPV—positive predictive value; NPV—negative predictive value; AUC—area under the receiver operating characteristic curve.
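The summary row of Table 2 can be recomputed from the per-fold values. This sketch assumes the sample standard deviation (n - 1 denominator), which comes close to the reported spreads; the population SD of the sensitivity values, for instance, would be about 3.8 rather than 4.6. Because the per-fold entries are rounded, recomputed values can differ from the summary row in the last reported digit.

```python
from statistics import mean, stdev
from typing import Sequence, Tuple

# Per-fold values transcribed from Table 2
FOLDS = {
    "Sensitivity (%)": [82.8, 87.4, 92.1],
    "Specificity (%)": [97.5, 95.9, 94.7],
    "PPV (%)": [62.6, 57.1, 48.4],
    "NPV (%)": [99.1, 99.2, 99.6],
    "Accuracy (%)": [96.9, 95.4, 94.6],
    "AUC": [0.980, 0.970, 0.980],
}


def summarize(values: Sequence[float]) -> Tuple[float, float]:
    """Return (mean, sample standard deviation) of per-fold values."""
    return mean(values), stdev(values)


for metric, values in FOLDS.items():
    m, sd = summarize(values)
    digits = 3 if metric == "AUC" else 1  # match the precision used in Table 2
    print(f"{metric}: {m:.{digits}f} ± {sd:.{digits}f}")
```

With only three folds, the SD is itself a very imprecise estimate, so the spreads in Table 2 are best read as a rough indication of fold-to-fold variability.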

3.3. Computational Performance of the CNN

The CNN completed the reading of the testing dataset in 17.5 s (approximately 15.4 ms/frame). This translates into an approximate reading rate of 65 frames per second. At this rate, the CNN would complete the review of a full-length CCE video containing an estimated 50,000 frames in approximately 13 min.
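The reading-rate figures follow from simple arithmetic on the reported values, using the 50,000-frame video length estimated in the Introduction:

$$
\frac{1143\ \text{frames}}{17.5\ \text{s}} \approx 65.3\ \text{frames/s},
\qquad
\frac{50{,}000\ \text{frames}}{65.3\ \text{frames/s}} \approx 766\ \text{s} \approx 12.8\ \text{min}
$$

This estimate covers network inference only; it excludes video export, frame extraction and the clinician's review of the frames flagged by the CNN.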

4. Discussion

Over the last decade, the exploration of AI algorithms applied to conventional endoscopic techniques for the automatic detection of colorectal neoplasia has produced promising results. The development and implementation of these systems has recently been endorsed (although with limitations) by the European Society of Gastrointestinal Endoscopy [18]. Furthermore, a recent meta-analysis has suggested that the application of AI models for adenoma and polyp identification may substantially increase the adenoma detection rate and the number of adenomas detected per colonoscopy [19]. These improvements in commonly used performance metrics were not affected by factors known to influence detection by the human eye, including the size and morphology of the lesions [19]. Artificial intelligence is expected to play a major role in improving the acceptability and the diagnostic yield of CCE [20]. These systems may help in several steps of the CCE process, from predicting the quality of bowel cleansing to lesion detection and the differentiation of colorectal lesions [20,21,22].

In our study, we developed a deep learning tool based on a CNN architecture for the automatic detection of protruding lesions in the colonic lumen using CCE images. This study has several highlights. First, our model demonstrated high levels of performance, with a sensitivity of 90.0%, a specificity of 99.1%, an accuracy of 95.3% and an AUROC of 0.99. Second, the model achieved fairly high sensitivity and negative predictive value while maintaining a high specificity, which is paramount for CNN-assisted reading systems designed to lessen the probability of missing lesions. Third, our network had a remarkable image processing performance, being capable of reading 65 images per second.

The precise role of CCE in everyday clinical practice is yet to be defined. Thus far, most studies highlight its potential in the setting of colorectal cancer screening. Although colonoscopy remains the undisputed gold standard, studies have suggested that CCE could be viewed as a non-invasive complement to, rather than a substitute for, conventional colonoscopy, particularly in the setting of a previous incomplete colonoscopy [23]. Current guidelines on colorectal cancer screening list CCE as a valid alternative to colonoscopy for screening an average-risk population [15]. Studies comparing the diagnostic yield of CCE with that of another non-invasive screening test, CT colonography, have shown the superiority of CCE [24]. Moreover, following a first positive fecal immunochemical test, CCE may reduce the need for the more invasive conventional colonoscopy [25]. Although conflicting evidence exists, some studies have shown that adopting CCE as a screening method may lead to a higher uptake rate than conventional colonoscopy [26]. CCE may thus be seen not only as an alternative to conventional colonoscopy but also as a complementary solution in programmed screening settings. Indeed, CCE may help to shorten waiting lists, decrease hospital appointments and make screening available in remote areas [27]. In this setting, the cost-effectiveness of CCE appears to be greater when the prevalence of colorectal cancer is lower and the uptake rate is superior to that of conventional colonoscopy [28]. However, the use of CCE is hampered by its purely diagnostic character, the need for a rigorous bowel cleansing protocol, and the time required for reading each CCE exam.

The development of AI tools for detection of colorectal neoplasia in CCE images has been poorly explored. Automatic detection of these lesions is limited by the poor resolution of CCE images combined with the variable morphology, size and color of the lesions. To our knowledge, only two other studies have assessed the application of CNN models to CCE images. Yamada et al. were the first to explore the implementation of AI algorithms for the identification of colorectal neoplasia in frames extracted from CCE exams. Their network was developed using a relatively large pool of CCE images (17,783 frames from 178 patients). Overall, their algorithm achieved a good performance (AUROC of 0.90) [29]. However, the sensitivity of their model was modest (79%) compared with that of our network. Blanes-Vidal et al. adapted a preexisting CNN (AlexNet) and trained it for the detection of colorectal polyps. The sensitivity, specificity and accuracy reported in their paper were 97%, 93% and 96%, respectively. From our perspective, the development of these technologies should aim to support clinical decisions rather than substitute the role of the clinician. Therefore, these systems must remain highly sensitive in order to minimize the risk of missing lesions.

Our network demonstrated a high image processing performance (65 frames/second). To date, no comparable value has been reported for CCE. Nevertheless, this performance exceeds that published for CNNs applied to other CE systems [11,30]. Highly efficient networks may, in the near future, translate into shorter reading times, thus overcoming one of the main drawbacks of CCE. Further well-designed studies are required to assess whether the high image processing capacity observed in experimental settings translates into shorter reading times of CCE exams compared with conventional reading. The combination of enhanced diagnostic accuracy and time efficiency may have a pivotal role in widening the indications for CCE and its acceptance as a valid screening and diagnostic tool.

This study has several limitations. First, it is a retrospective study; therefore, further prospective multicentric studies in a real-life setting are desirable to confirm the clinical value of our results. Second, although we included a large number of patients from two distinct medical centers, the number of extracted images is small. This limited number of extracted images was mainly dictated by the low number of frames showing protruding lesions. To produce a balanced dataset while minimizing the risk of missing lesions, we aimed for a balance between the number of images showing protruding lesions and those showing normal mucosa. This may partially explain the suboptimal sensitivity. We are currently expanding our image pool to increase the robustness of our model, which should help decrease the rate of false-negative CE exams, one of the main endpoints in developing these algorithms. The multicentric nature of our work reinforces the validity of our results. Nevertheless, multicentric studies including larger populations are required to ensure the clinical significance of our findings. Moreover, future studies for the clinical validation of these tools must include a comparison of performance between AI software and conventional colonoscopy, the gold standard technique for the detection and characterization of these lesions.

In conclusion, we developed a highly sensitive and specific CNN-based model for the detection of protruding lesions in CCE images. We believe that the implementation of AI tools in clinical practice will be a crucial step toward wider acceptance of CCE for non-invasive screening and diagnosis of colorectal neoplasia. Future studies should assess the impact of AI algorithms in mitigating the limitations of CCE in a real-life clinical setting, particularly the time required for reviewing each exam, and should evaluate the potential benefits in terms of diagnostic yield.

Author Contributions

M.M.—study design, review of videos, image extraction and labelling, construction and development of the Convolutional Neural Network (CNN), data interpretation. J.A.—study design, organization of patient database, image extraction, organization of the dataset, construction and development of the Convolutional Neural Network (CNN), data interpretation and drafting of the manuscript. T.R.—study design, construction and development of the CNN, bibliographic review, drafting of the manuscript. H.C.—study design, review of videos, image extraction and labelling, data interpretation. P.A.—study design, data interpretation, drafting of the manuscript. J.P.S.F.—study design, construction and development of the CNN, statistical analysis. M.M.S.—study design, revision of scientific content of the manuscript. G.M.—study design, revision of scientific content of the manuscript. All authors have read and agreed to the published version of the manuscript.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki, and approved by the Ethics Committee of São João University Hospital (No. CE 407/2020).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Conflicts of Interest

The authors declare no conflict of interest.

Funding Statement

The authors acknowledge Fundação para a Ciência e Tecnologia (FCT) for supporting the computational costs related to this study through grant CPCA/A0/7363/2020. This entity had no role in study design, data collection, data analysis, preparation of the manuscript or the decision to publish.

Footnotes

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

1. Eliakim R., Fireman Z., Gralnek I.M., Yassin K., Waterman M., Kopelman Y., Lachter J., Koslowsky B., Adler S.N. Evaluation of the PillCam Colon capsule in the detection of colonic pathology: Results of the first multicenter, prospective, comparative study. Endoscopy. 2006;38:963–970. doi: 10.1055/s-2006-944832.

2. Eliakim R., Yassin K., Niv Y., Metzger Y., Lachter J., Gal E., Sapoznikov B., Konikoff F., Leichtmann G., Fireman Z., et al. Prospective multicenter performance evaluation of the second-generation colon capsule compared with colonoscopy. Endoscopy. 2009;41:1026–1031. doi: 10.1055/s-0029-1215360.

3. Niikura R., Yasunaga H., Yamada A., Matsui H., Fushimi K., Hirata Y., Koike K. Factors predicting adverse events associated with therapeutic colonoscopy for colorectal neoplasia: A retrospective nationwide study in Japan. Gastrointest. Endosc. 2016;84:971–982.e6. doi: 10.1016/j.gie.2016.05.013.

4. Spada C., Hassan C., Bellini D., Burling D., Cappello G., Carretero C., Dekker E., Eliakim R., de Haan M., Kaminski M.F., et al. Imaging alternatives to colonoscopy: CT colonography and colon capsule. European Society of Gastrointestinal Endoscopy (ESGE) and European Society of Gastrointestinal and Abdominal Radiology (ESGAR) Guideline—Update 2020. Endoscopy. 2020;52:1127–1141. doi: 10.1055/a-1258-4819.

5. Milluzzo S.M., Bizzotto A., Cesaro P., Spada C. Colon capsule endoscopy and its effectiveness in the diagnosis and management of colorectal neoplastic lesions. Exp. Rev. Anticancer Ther. 2019;19:71–80. doi: 10.1080/14737140.2019.1538798.

6. Vuik F.E.R., Nieuwenburg S.A.V., Moen S., Spada C., Senore C., Hassan C., Pennazio M., Rondonotti E., Pecere S., Kuipers E.J., et al. Colon capsule endoscopy in colorectal cancer screening: A systematic review. Endoscopy. 2021;53:815–824. doi: 10.1055/a-1308-1297.

7. Yasaka K., Akai H., Abe O., Kiryu S. Deep Learning with Convolutional Neural Network for Differentiation of Liver Masses at Dynamic Contrast-enhanced CT: A Preliminary Study. Radiology. 2018;286:887–896. doi: 10.1148/radiol.2017170706.

8. Esteva A., Kuprel B., Novoa R.A., Ko J., Swetter S.M., Blau H.M., Thrun S. Dermatologist-level classification of skin cancer with deep neural networks. Nature. 2017;542:115–118. doi: 10.1038/nature21056.

9. Gargeya R., Leng T. Automated Identification of Diabetic Retinopathy Using Deep Learning. Ophthalmology. 2017;124:962–969. doi: 10.1016/j.ophtha.2017.02.008.

10. Aoki T., Yamada A., Aoyama K., Saito H., Tsuboi A., Nakada A., Niikura R., Fujishiro M., Oka S., Ishihara S., et al. Automatic detection of erosions and ulcerations in wireless capsule endoscopy images based on a deep convolutional neural network. Gastrointest. Endosc. 2019;89:357–363.e2. doi: 10.1016/j.gie.2018.10.027.

11. Aoki T., Yamada A., Kato Y., Saito H., Tsuboi A., Nakada A., Niikura R., Fujishiro M., Oka S., Ishihara S., et al. Automatic detection of blood content in capsule endoscopy images based on a deep convolutional neural network. J. Gastroenterol. Hepatol. 2020;35:1196–1200. doi: 10.1111/jgh.14941.

12. Ding Z., Shi H., Zhang H., Meng L., Fan M., Han C., Zhang K., Ming F., Xie X., Liu H., et al. Gastroenterologist-Level Identification of Small-Bowel Diseases and Normal Variants by Capsule Endoscopy Using a Deep-Learning Model. Gastroenterology. 2019;157:1044–1054.e5. doi: 10.1053/j.gastro.2019.06.025.

13. Tsuboi A., Oka S., Aoyama K., Saito H., Aoki T., Yamada A., Matsuda T., Fujishiro M., Ishihara S., Nakahori M., et al. Artificial intelligence using a convolutional neural network for automatic detection of small-bowel angioectasia in capsule endoscopy images. Dig. Endosc. 2020;32:382–390. doi: 10.1111/den.13507.

14. Repici A., Badalamenti M., Maselli R., Correale L., Radaelli F., Rondonotti E., Ferrara E., Spadaccini M., Alkandari A., Fugazza A., et al. Efficacy of Real-Time Computer-Aided Detection of Colorectal Neoplasia in a Randomized Trial. Gastroenterology. 2020;159:512–520.e7. doi: 10.1053/j.gastro.2020.04.062.

15. Spada C., Hassan C., Galmiche J.P., Neuhaus H., Dumonceau J.M., Adler S., Epstein O., Gay G., Pennazio M., Rex D.K., et al. Colon capsule endoscopy: European Society of Gastrointestinal Endoscopy (ESGE) Guideline. Endoscopy. 2012;44:527–536. doi: 10.1055/s-0031-1291717.

16. Selvaraju R.R., Cogswell M., Das A., Vedantam R., Parikh D., Batra D. Grad-CAM: Visual explanations from deep networks via gradient-based localization; Proceedings of the IEEE International Conference on Computer Vision; Venice, Italy. 22–29 October 2017; pp. 618–626.

17. Pedregosa F., Varoquaux G., Gramfort A., Michel V., Thirion B., Grisel O., Blondel M., Prettenhofer P., Weiss R., Dubourg V., et al. Scikit-learn: Machine Learning in Python. J. Mach. Learn. Res. 2011;12:2825–2830.

18. Bisschops R., East J.E., Hassan C., Hazewinkel Y., Kamiński M.F., Neumann H., Pellisé M., Antonelli G., Bustamante Balen M., Coron E., et al. Advanced imaging for detection and differentiation of colorectal neoplasia: European Society of Gastrointestinal Endoscopy (ESGE) Guideline—Update 2019. Endoscopy. 2019;51:1155–1179. doi: 10.1055/a-1031-7657.

19. Hassan C., Spadaccini M., Iannone A., Maselli R., Jovani M., Chandrasekar V.T., Antonelli G., Yu H., Areia M., Dinis-Ribeiro M., et al. Performance of artificial intelligence in colonoscopy for adenoma and polyp detection: A systematic review and meta-analysis. Gastrointest. Endosc. 2021;93:77–85.e6. doi: 10.1016/j.gie.2020.06.059.

20. Bjørsum-Meyer T., Koulaouzidis A., Baatrup G. Comment on "Artificial intelligence in gastroenterology: A state-of-the-art review". World J. Gastroenterol. 2022;28:1722–1724. doi: 10.3748/wjg.v28.i16.1722.

21. Nakazawa K., Nouda S., Kakimoto K., Kinoshita N., Tanaka Y., Tawa H., Koshiba R., Naka Y., Hirata Y., Ota K., et al. The Differential Diagnosis of Colorectal Polyps Using Colon Capsule Endoscopy. Intern. Med. 2021;60:1805–1812. doi: 10.2169/internalmedicine.6446-20.

22. Yamada K., Nakamura M., Yamamura T., Maeda K., Sawada T., Mizutani Y., Ishikawa E., Ishikawa T., Kakushima N., Furukawa K., et al. Diagnostic yield of colon capsule endoscopy for Crohn's disease lesions in the whole gastrointestinal tract. BMC Gastroenterol. 2021;21:75. doi: 10.1186/s12876-021-01657-0.

23. Spada C., Barbaro F., Andrisani G., Minelli Grazioli L., Hassan C., Costamagna I., Campanale M., Costamagna G. Colon capsule endoscopy: What we know and what we would like to know. World J. Gastroenterol. 2014;20:16948–16955. doi: 10.3748/wjg.v20.i45.16948.

24. Cash B.D., Fleisher M.R., Fern S., Rajan E., Haithcock R., Kastenberg D.M., Pound D., Papageorgiou N.P., Fernández-Urién I., Schmelkin I.J., et al. Multicentre, prospective, randomised study comparing the diagnostic yield of colon capsule endoscopy versus CT colonography in a screening population (the TOPAZ study). Gut. 2020;70:2115–2122. doi: 10.1136/gutjnl-2020-322578.

25. Holleran G., Leen R., O'Morain C., McNamara D. Colon capsule endoscopy as possible filter test for colonoscopy selection in a screening population with positive fecal immunology. Endoscopy. 2014;46:473–478. doi: 10.1055/s-0034-1365402.

26. Groth S., Krause H., Behrendt R., Hill H., Börner M., Bastürk M., Plathner N., Schütte F., Gauger U., Riemann J.F., et al. Capsule colonoscopy increases uptake of colorectal cancer screening. BMC Gastroenterol. 2012;12:80. doi: 10.1186/1471-230X-12-80.

27. Bjoersum-Meyer T., Spada C., Watson A., Eliakim R., Baatrup G., Toth E., Koulaouzidis A. What holds back colon capsule endoscopy from being the main diagnostic test for the large bowel in cancer screening? Gastrointest. Endosc. 2021;95:168–170. doi: 10.1016/j.gie.2021.09.007.

28. Hassan C., Zullo A., Winn S., Morini S. Cost-effectiveness of capsule endoscopy in screening for colorectal cancer. Endoscopy. 2008;40:414–421. doi: 10.1055/s-2007-995565.

29. Yamada A., Niikura R., Otani K., Aoki T., Koike K. Automatic detection of colorectal neoplasia in wireless colon capsule endoscopic images using a deep convolutional neural network. Endoscopy. 2020;53:832–836. doi: 10.1055/a-1266-1066.

30. Saito H., Aoki T., Aoyama K., Kato Y., Tsuboi A., Yamada A., Fujishiro M., Oka S., Ishihara S., Matsuda T., et al. Automatic detection and classification of protruding lesions in wireless capsule endoscopy images based on a deep convolutional neural network. Gastrointest. Endosc. 2020;92:144–151.e1. doi: 10.1016/j.gie.2020.01.054.