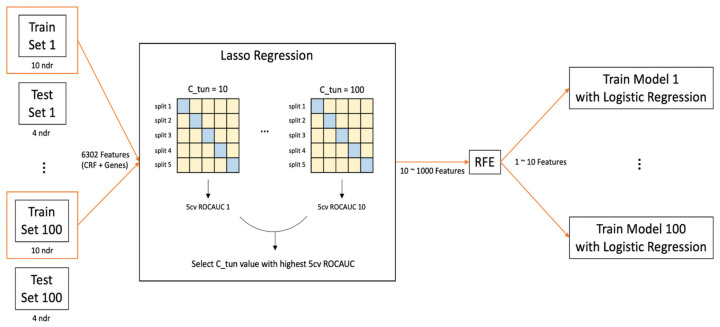

Figure 2.

The overall model training scheme. The dataset was randomly split into training and test sets at an 8:2 ratio, and this random split was repeated 100 times. For each split, the model was trained using Least Absolute Shrinkage and Selection Operator (LASSO) regression followed by recursive feature elimination (RFE). In the LASSO regression, the C parameter was scanned over multiples of 10 from 10 to 100, and the value giving the highest 5-fold cross-validation (5-CV) area under the receiver operating characteristic curve (AUC-ROC) was chosen. With the best C parameter, typically 10 to 1000 features survived. From these features, RFE then selected a fixed number of features, ranging from 1 to 10. Because the data were split at random 100 times, 100 different models were developed for each number of selected features. Model performance was evaluated on the test set using logistic regression on the selected feature(s). In the 5-CV diagram, the training and test folds are shown in light yellow and blue, respectively.
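The scheme described in this caption can be sketched with scikit-learn as follows. This is a minimal illustration, not the authors' code, and it rests on several assumptions the caption does not state: "LASSO regression" is read as L1-penalised logistic regression (where a C parameter and a 5-CV AUC-ROC score naturally arise), the C grid is taken as 10, 20, ..., 100, the splits and folds are stratified, features are not rescaled, and synthetic data from `make_classification` stand in for the real dataset. The names `run_split`, `C_GRID`, `N_SELECT`, `N_REPEATS` and `per_k` are hypothetical.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.feature_selection import RFE
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import roc_auc_score
from sklearn.model_selection import GridSearchCV, StratifiedKFold, train_test_split

C_GRID = list(range(10, 101, 10))  # assumed reading: C = 10, 20, ..., 100
N_SELECT = range(1, 11)            # fixed numbers of features kept by RFE
N_REPEATS = 5                      # the figure uses 100 splits; fewer here for speed


def run_split(X, y, seed):
    """One random 8:2 split; returns {number of features: test AUC-ROC}."""
    X_tr, X_te, y_tr, y_te = train_test_split(
        X, y, test_size=0.2, stratify=y, random_state=seed)

    # LASSO step (assumed L1-penalised logistic regression): pick C by 5-CV AUC-ROC.
    search = GridSearchCV(
        LogisticRegression(penalty="l1", solver="liblinear",
                           max_iter=1000, random_state=0),
        param_grid={"C": C_GRID},
        scoring="roc_auc",
        cv=StratifiedKFold(n_splits=5, shuffle=True, random_state=seed))
    search.fit(X_tr, y_tr)
    # Features with non-zero coefficients at the best C "survive".
    survivors = np.flatnonzero(search.best_estimator_.coef_.ravel())
    if survivors.size == 0:
        return {}

    # RFE step: eliminate one survivor at a time down to a single feature.
    # With step=1, the features ranked <= k are exactly the subset RFE keeps for k.
    rfe = RFE(LogisticRegression(max_iter=1000), n_features_to_select=1, step=1)
    rfe.fit(X_tr[:, survivors], y_tr)

    aucs = {}
    for k in N_SELECT:
        if k > survivors.size:
            break
        cols = survivors[rfe.ranking_ <= k]
        # Evaluation: logistic regression on the k selected features, test-set AUC.
        clf = LogisticRegression(max_iter=1000).fit(X_tr[:, cols], y_tr)
        aucs[k] = roc_auc_score(y_te, clf.predict_proba(X_te[:, cols])[:, 1])
    return aucs


# Synthetic stand-in data; the real dataset is not reproduced here.
X, y = make_classification(n_samples=200, n_features=40, n_informative=5,
                           random_state=0)
per_k = {k: [] for k in N_SELECT}
for seed in range(N_REPEATS):
    for k, auc in run_split(X, y, seed).items():
        per_k[k].append(auc)

for k, aucs in per_k.items():
    if aucs:
        print(f"{k:2d} feature(s): mean test AUC-ROC {np.mean(aucs):.3f} "
              f"over {len(aucs)} splits")
```

Rather than running RFE separately for each feature count, the sketch runs one RFE pass down to a single feature and reads off `ranking_`. With `step=1` and a deterministic estimator, the features ranked at most k are the same subset that `RFE(..., n_features_to_select=k)` would keep, so each split yields one model per feature count, mirroring the caption's "100 models per number of selected features" once `N_REPEATS` is set to 100.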