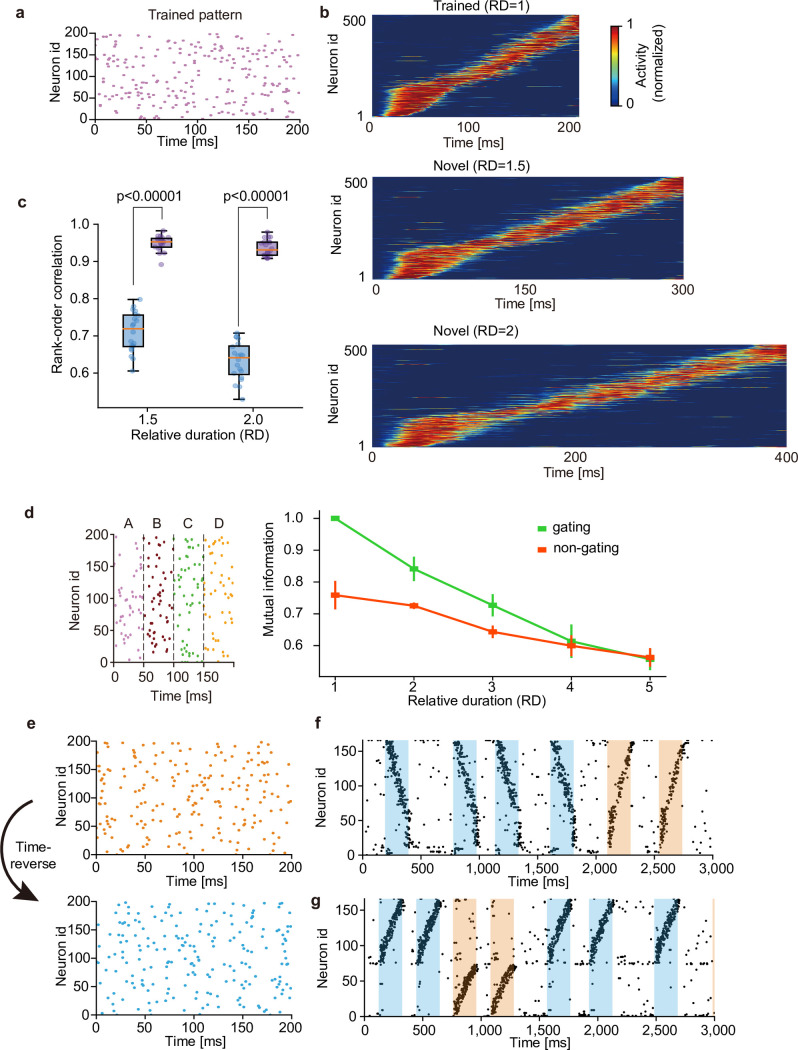

Fig 5. Context-dependent learning of sequence information.

(a) To test responses to time-warped patterns, the network was trained on random spike trains embedding a single pattern. (b) The trained network responded sequentially to the original pattern and to stretched patterns with two untrained lengths (i.e., relative durations (RD) of 1.5 and 2). (c) Similarities of sequential order between the responses to the original and the two untrained patterns were measured before (blue) and after (purple) learning. Independent simulations were performed 20 times, and p-values were calculated by two-sided Welch’s t-test. (d) The input spike pattern used in the task in Fig 3 was considered (left) to quantify the degree of time warping that can be tolerated by our network model. The performance of both gating and non-gating models trained on input patterns with various relative durations is shown. (e) A time-inverted spike pattern (bottom) was generated from an original pattern (top). (f) The network was exposed to the original pattern in (e) during learning, and its responses were tested after learning for both the original and time-inverted patterns. Both patterns activated a single cell assembly. (g) The network was exposed to both the original and time-inverted patterns during learning as well as testing. Two assemblies with different preferred patterns were formed. For visualization purposes, only 160 out of 500 neurons are shown in (f) and (g).
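
As a minimal sketch of the two pattern manipulations named in the caption, the Python snippet below stretches a spike pattern by a relative duration and mirrors it in time. It is illustrative only and not the authors' code: the representation of a pattern as `(neuron id, spike time)` pairs, the helper names `time_warp`, `time_invert` and `firing_order`, and the example spike times are all assumptions made for this illustration.

```python
# Illustrative sketch only: the data layout, function names and spike times
# below are hypothetical and are not taken from the paper.

def time_warp(pattern, relative_duration):
    """Scale every spike time by a constant factor (RD > 1 stretches the pattern)."""
    return [(neuron, t * relative_duration) for neuron, t in pattern]


def time_invert(pattern, duration):
    """Mirror spike times within the pattern window [0, duration]."""
    return [(neuron, duration - t) for neuron, t in pattern]


def firing_order(pattern):
    """Return neuron ids sorted by their spike time."""
    return [neuron for neuron, _ in sorted(pattern, key=lambda spike: spike[1])]


# Made-up pattern: (neuron id, spike time in ms) within a 100 ms window.
original = [(0, 10.0), (1, 25.0), (2, 40.0), (3, 80.0)]
duration = 100.0

stretched = time_warp(original, 1.5)        # relative duration RD = 1.5
inverted = time_invert(original, duration)  # time-inverted pattern

print(firing_order(original))
print(firing_order(stretched))
print(firing_order(inverted))
```

In this toy setting, uniform stretching leaves the order in which neurons fire unchanged, whereas time inversion reverses it, which is the distinction between the warped patterns in (a)-(d) and the inverted patterns in (e)-(g).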