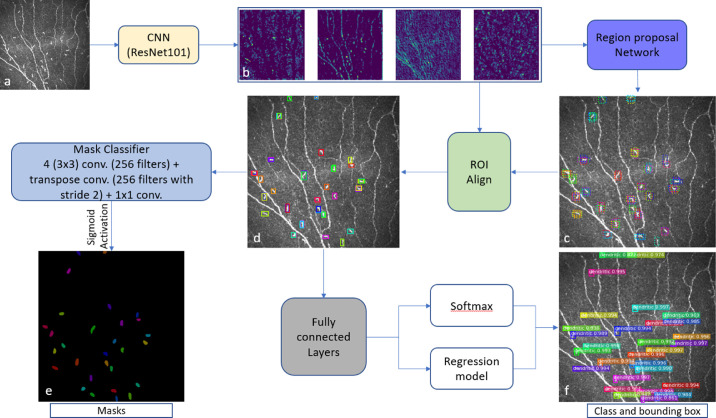

Figure 2.

Mask R-CNN architecture. The pretrained ResNet101 backbone (light yellow box) generates feature maps (b) from the input image (a). From these feature maps, the region proposal network (RPN; purple box; 3 × 3 convolution with 512 filters and same padding) generates multiple regions of interest (ROIs; dotted bounding boxes) with the help of predefined bounding boxes referred to as anchors (c). The green box denotes the ROI Align network, which takes both the bounding boxes proposed by the RPN and the feature maps as inputs and uses this information to find the best-fitting bounding box (d) for each proposed DC. These aligned box maps are fed into fully connected layers (7 × 7 convolution with 1024 filters + 1 × 1 convolution with 1024 filters), denoted by the gray box, which then generate a class and a bounding box for each object using softmax and a regression model, respectively (f). Finally, the aligned box maps are fed into the mask classifier (four 3 × 3 convolutions with 256 filters + a transpose convolution with 256 filters and stride 2 + a 1 × 1 convolution + sigmoid activation), denoted by the light blue box, to generate a binary mask for each object (e).
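To make the layer sizes in the caption concrete, the pure-Python sketch below traces tensor shapes through the RPN, box, and mask heads. It is an illustration, not the authors' code. The 7 × 7 and 14 × 14 ROI Align output sizes, the 2 × 2 transpose-convolution kernel, the three anchors per location, the single feature-map level, and the helper names `conv_out`, `deconv_out`, and `trace_heads` are all assumptions, chosen to mirror common open-source Mask R-CNN implementations.

```python
import math


def conv_out(size, kernel, stride=1, padding="valid"):
    """Spatial size after a square 2-D convolution."""
    if padding == "same":
        # "same" padding keeps ceil(size / stride), as in Keras/TensorFlow
        return math.ceil(size / stride)
    return (size - kernel) // stride + 1


def deconv_out(size, kernel, stride):
    """Spatial size after a transpose convolution with no padding."""
    return (size - 1) * stride + kernel


def trace_heads(feature_hw, num_classes, pool_cls=7, pool_mask=14, anchors_per_loc=3):
    """Trace output shapes (H, W, C) of each head; pool sizes and anchors are assumed."""
    shapes = {}

    # RPN: 3x3 conv with 512 filters and same padding keeps the spatial size
    h = conv_out(feature_hw, 3, padding="same")
    shapes["rpn_conv"] = (h, h, 512)
    # Assumed: a fixed number of anchors at every feature-map location
    shapes["rpn_num_anchors"] = h * h * anchors_per_loc

    # Box head: a 7x7 "valid" conv on a 7x7 aligned map collapses it to 1x1,
    # so it acts like a fully connected layer; then a 1x1 conv with 1024 filters
    s = conv_out(pool_cls, 7)
    s = conv_out(s, 1)
    shapes["box_head"] = (s, s, 1024)
    shapes["class_logits"] = (num_classes,)   # fed to softmax
    shapes["box_deltas"] = (num_classes, 4)   # regression outputs per class

    # Mask head: four 3x3 same-padded convs (256 filters) keep 14x14,
    # a stride-2 transpose conv (assumed 2x2 kernel) doubles it,
    # and a 1x1 conv yields one mask channel per class
    m = pool_mask
    for _ in range(4):
        m = conv_out(m, 3, padding="same")
    m = deconv_out(m, 2, 2)
    m = conv_out(m, 1)
    shapes["mask_logits"] = (m, m, num_classes)  # fed to sigmoid
    return shapes


def softmax(logits):
    """Numerically stable softmax for the class scores."""
    mx = max(logits)
    exps = [math.exp(x - mx) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sigmoid(x):
    """Per-pixel activation for the binary mask."""
    return 1.0 / (1.0 + math.exp(-x))


if __name__ == "__main__":
    # Hypothetical: a 64x64 feature map and 2 classes (background + DC)
    for name, shape in trace_heads(64, num_classes=2).items():
        print(name, shape)
```

With a 64 × 64 feature map (for example, a 1024 × 1024 image at an assumed backbone stride of 16), the 7 × 7 "valid" convolution reduces each aligned map to 1 × 1 × 1024, which is why it can stand in for a fully connected layer, while the stride-2 transpose convolution doubles the 14 × 14 mask features to 28 × 28 before the sigmoid.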