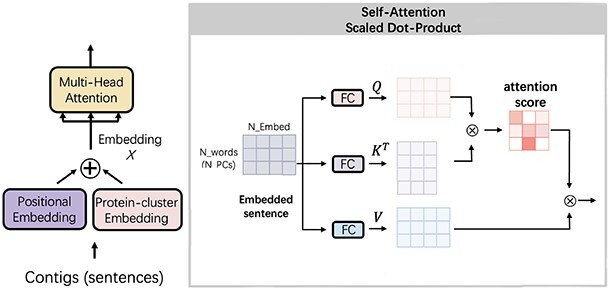

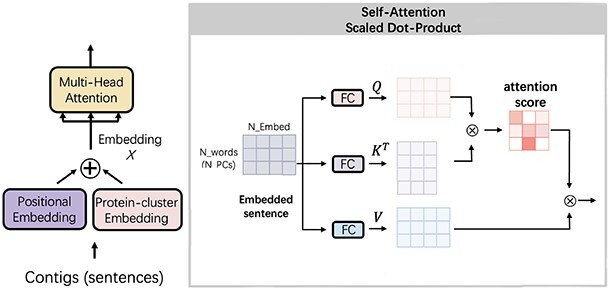

Figure 3.

The self-attention mechanism in the Transformer model. The input to the self-attention layer is the embedded vector, and the output is a set of weighted features that carry protein–protein relationship information.
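As a rough illustration of the input-to-output mapping the caption describes, the following is a minimal sketch of standard single-head scaled dot-product self-attention. It is not the model's actual implementation: the function names, the projection matrices `w_q`, `w_k`, `w_v`, and the toy input are all assumptions made for the example, and multi-head splitting and masking are left out.

```python
import math

def softmax(row):
    # Subtract the max for numerical stability before exponentiating.
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def matmul(a, b):
    # Plain nested-list matrix product: (n x k) @ (k x m) -> (n x m).
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention (illustrative only)."""
    # Project the embedded vectors into queries, keys, and values.
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    # scores[i][j] measures how strongly position i attends to position j.
    k_t = [list(col) for col in zip(*k)]
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(q, k_t)]
    # Each row of weights is a probability distribution over positions.
    weights = [softmax(row) for row in scores]
    # The output is the attention-weighted combination of the value vectors.
    return matmul(weights, v), weights

# Hypothetical toy input: three embedded vectors of dimension 2,
# with identity projections so the arithmetic stays easy to follow.
identity = [[1.0, 0.0], [0.0, 1.0]]
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, weights = self_attention(x, identity, identity, identity)
```

In this sketch, `weights` plays the role of the pairwise relationship scores between positions, and `out` corresponds to the weighted features: each output row is a mixture of the value vectors, with the mixing proportions given by the matching row of `weights`.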
