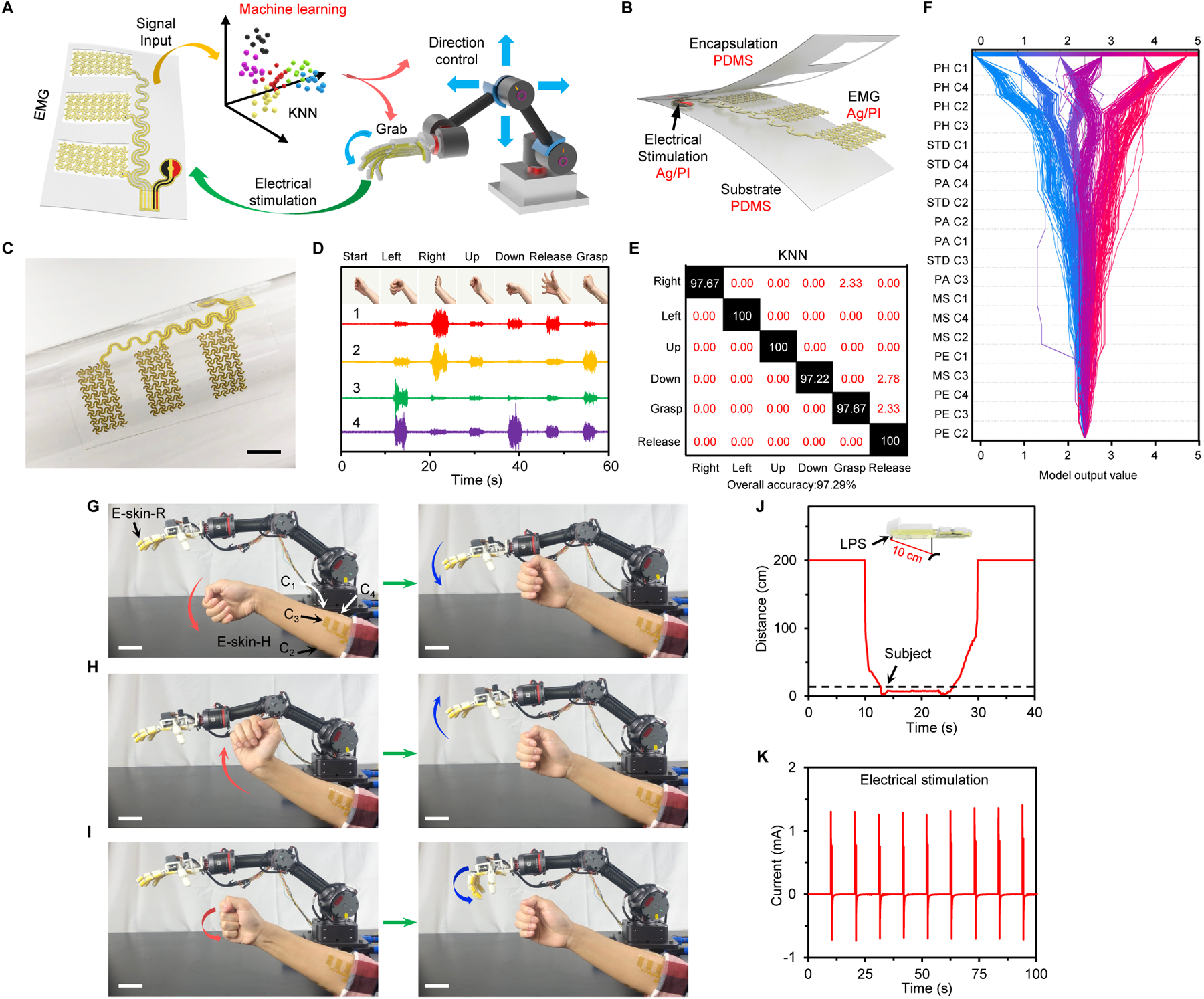

Fig. 3. Evaluation of the e-skin-H for AI-assisted human-machine interaction.

(A) Schematic of machine learning-enabled human gesture recognition and robotic control. (B and C) Schematic (B) and photograph (C) of a PDMS-encapsulated soft e-skin-H with sEMG and electrical stimulation electrodes for closed-loop, human-interactive robotic control. Scale bar, 1 cm. (D) sEMG signals recorded by the four-channel e-skin-H for six human gestures. (E) Confusion matrix for classification of real-time experimental data with a k-nearest neighbors (KNN) model. White text values, percentages of correct predictions; red text values, percentages of incorrect predictions. (F) SHAP decision plot explaining how the KNN model arrives at the final classification of every data point using all five features. Each decision line tracks the feature contributions to one individual classification; each final classification is represented as a serialized integer that maps to a hand movement. Dotted lines represent misclassified points. (G–I) Time-lapse images of AI-assisted, human-interactive robotic control using the M-Bot. Scale bars, 5 cm. (J) Response of the LPS as the robot approaches and leaves an object. (K) Current applied to a participant's arm during feedback stimulation.
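As a rough illustration of the classification step summarized in panel E, the sketch below implements a plain k-nearest-neighbors majority vote and a row-normalized confusion matrix in pure Python. It is not the authors' pipeline: the five-dimensional feature vectors, the three gesture labels, k = 3, and Euclidean distance are all assumptions chosen for illustration, and the helper names `knn_predict` and `confusion_matrix_percent` are hypothetical.

```python
import math
from collections import Counter


def knn_predict(train_X, train_y, x, k=3):
    """Predict the label of x by majority vote among its k nearest
    training points (Euclidean distance; an assumed metric)."""
    neighbours = sorted(
        (math.dist(xi, x), yi) for xi, yi in zip(train_X, train_y)
    )
    # Counter preserves insertion order, so ties in vote count go to the
    # label whose member was encountered first, i.e. the nearest one.
    votes = Counter(label for _, label in neighbours[:k])
    return votes.most_common(1)[0][0]


def confusion_matrix_percent(y_true, y_pred, n_classes):
    """Row-normalized confusion matrix: entry [t][p] is the percentage of
    true-class-t samples predicted as class p."""
    counts = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(y_true, y_pred):
        counts[t][p] += 1
    percent = []
    for row in counts:
        total = sum(row)
        percent.append([100.0 * c / total if total else 0.0 for c in row])
    return percent


# Hypothetical 5-feature vectors for three gestures (labels 0, 1, 2);
# these are made-up values, not data from the study.
train_X = [
    (1.0, 0.1, 0.1, 0.1, 0.2), (0.9, 0.2, 0.1, 0.0, 0.2),
    (0.1, 1.0, 0.2, 0.1, 0.3), (0.2, 0.9, 0.1, 0.2, 0.3),
    (0.1, 0.1, 1.0, 0.9, 0.5), (0.2, 0.0, 0.9, 1.0, 0.4),
]
train_y = [0, 0, 1, 1, 2, 2]
test_X = [
    (0.95, 0.15, 0.1, 0.05, 0.2),
    (0.15, 0.95, 0.15, 0.15, 0.3),
    (0.15, 0.05, 0.95, 0.95, 0.45),
]
test_y = [0, 1, 2]

preds = [knn_predict(train_X, train_y, x, k=3) for x in test_X]
cm = confusion_matrix_percent(test_y, preds, n_classes=3)
print(preds)
for row in cm:
    print(row)
```

In this reading, diagonal entries correspond to the white (correct) percentages in panel E and off-diagonal entries to the red (incorrect) ones; normalizing each row by its true-class count keeps gestures with different sample sizes comparable.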