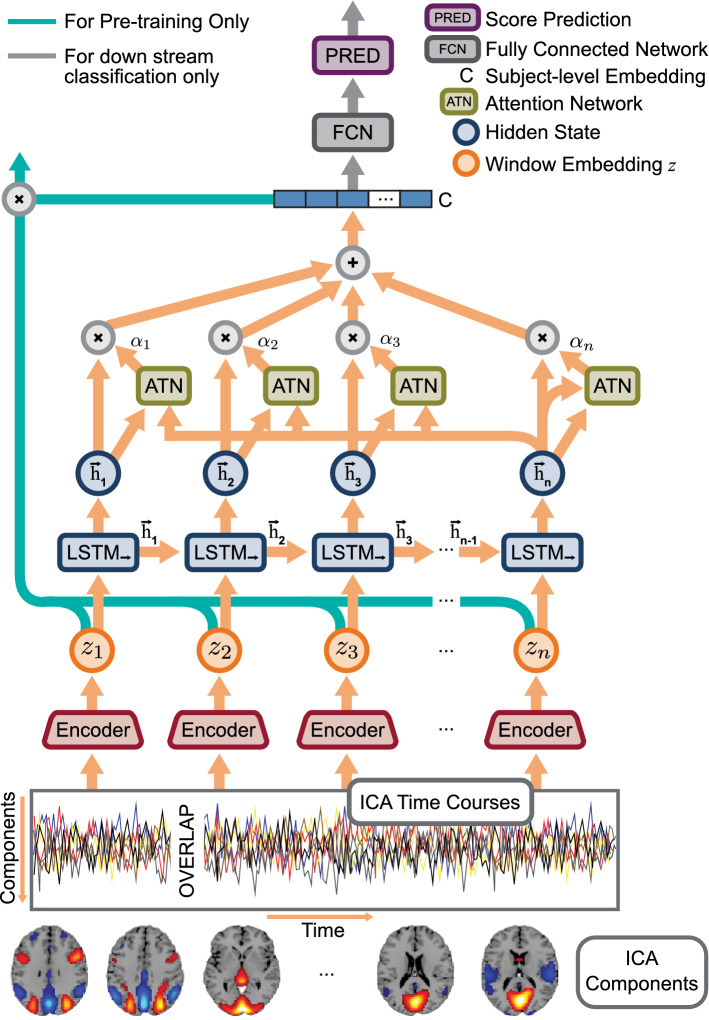

Figure 7.

The complete MILC architecture, an attention-based top-down recurrent network (created in Adobe Illustrator 26.0.3, http://www.adobe.com/products/illustrator.html, and Inkscape 1.1.2, http://inkscape.org/release/inkscape-1.1.2). Specifically, we used an LSTM network with an attention mechanism as a parameter-shared encoder to generate the latent embeddings for the sliding window at all relevant positions. The top LSTM network (marked as LSTM) used these embeddings to obtain the global representation for the entire subject. During pretraining, we aimed to maximize the mutual information between the window embeddings and the global representation. In the downstream classification task, we used the global representation directly as input to a fully connected network for predictions. Based on these predictions, we estimated feature attributions using different interpretability methods. Finally, we evaluated the feature attributions using the RAR method and an SVM model.
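The first two stages of the pipeline in the caption (cutting a recording into sliding windows, then pooling per-step hidden states with attention weights) can be illustrated with a minimal, dependency-free sketch. This is not the authors' implementation: the function names `sliding_windows` and `attention_pool`, the window length, and the stride are hypothetical, and the attention scores are passed in as plain numbers, whereas in the model they would be produced by learned layers on top of the LSTM encoder.

```python
import math


def sliding_windows(series, window, stride):
    """Split a sequence of time points into (possibly overlapping) windows.

    `window` and `stride` are illustrative parameters, not values from the paper.
    """
    last_start = len(series) - window
    return [series[s:s + window] for s in range(0, last_start + 1, stride)]


def attention_pool(hidden_states, scores):
    """Combine hidden-state vectors into one embedding via softmax weights.

    `hidden_states` is a list of equal-length vectors (one per time step);
    `scores` holds one unnormalized attention score per vector. In a trained
    model the scores would be learned; here they are supplied by the caller.
    """
    # Numerically stable softmax over the scores.
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]

    # Weighted sum of the hidden states, one dimension at a time.
    dim = len(hidden_states[0])
    pooled = [
        sum(w * h[d] for w, h in zip(weights, hidden_states))
        for d in range(dim)
    ]
    return pooled, weights


# Toy usage: 10 time points, windows of 4 with stride 2.
windows = sliding_windows(list(range(10)), window=4, stride=2)

# Two 2-dimensional hidden states with equal scores get equal weight.
embedding, attn = attention_pool([[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0])
```

In the full architecture, each window embedding obtained this way would be fed to the top LSTM, whose output serves as the global representation passed to the fully connected classifier.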