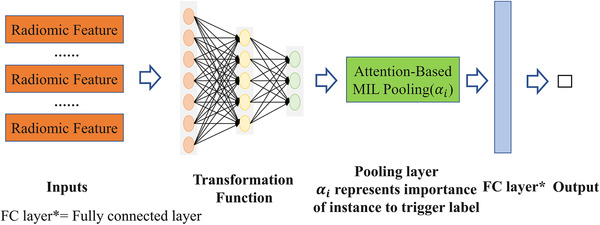

FIGURE 2.

Architecture of the attention-based deep multiple instance learning (MIL) model. Extracted radiomics features are the input to the transformation network, whose outputs are aggregated by attention-based pooling. A final fully connected layer maps the pooled representation to the output probability.
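The data flow in the figure can be illustrated with a minimal NumPy sketch. This is not the authors' implementation. It assumes a common attention-pooling formulation in which each instance embedding h_k receives a weight a_k proportional to exp(wᵀ tanh(V h_k)). The single-layer transformation network, the layer sizes (`hidden_dim`, `attn_dim`), the class name `AttentionMIL` and the random, untrained weights are all hypothetical choices made for illustration. The input bag uses synthetic values in place of real radiomics features.

```python
import numpy as np


def relu(x):
    return np.maximum(x, 0.0)


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


class AttentionMIL:
    """Hypothetical attention-based MIL forward pass with random (untrained) weights."""

    def __init__(self, n_features, hidden_dim=32, attn_dim=16, seed=0):
        rng = np.random.default_rng(seed)
        # Transformation network: assumed to be one dense layer + ReLU
        self.W_t = rng.normal(scale=0.1, size=(n_features, hidden_dim))
        self.b_t = np.zeros(hidden_dim)
        # Attention parameters: V projects embeddings, w scores them
        self.V = rng.normal(scale=0.1, size=(hidden_dim, attn_dim))
        self.w = rng.normal(scale=0.1, size=attn_dim)
        # Final fully connected layer mapping the pooled vector to one logit
        self.W_out = rng.normal(scale=0.1, size=hidden_dim)
        self.b_out = 0.0

    def forward(self, bag):
        # bag: (n_instances, n_features), one feature vector per instance
        h = relu(bag @ self.W_t + self.b_t)        # instance embeddings
        scores = np.tanh(h @ self.V) @ self.w       # one attention score per instance
        scores = scores - scores.max()              # shift for numerical stability
        a = np.exp(scores) / np.exp(scores).sum()   # softmax: weights sum to 1
        z = a @ h                                   # attention-weighted average of embeddings
        prob = sigmoid(z @ self.W_out + self.b_out) # output probability for the bag
        return prob, a


# Usage: a synthetic bag of 5 instances with 100 features each
model = AttentionMIL(n_features=100)
bag = np.random.default_rng(1).normal(size=(5, 100))
prob, weights = model.forward(bag)
print(f"probability={prob:.3f}, attention weights={np.round(weights, 3)}")
```

Because the pooled vector is a weighted sum over instances, the prediction does not depend on the order of the instances in the bag. The attention weights also show which instances contributed most to the output.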