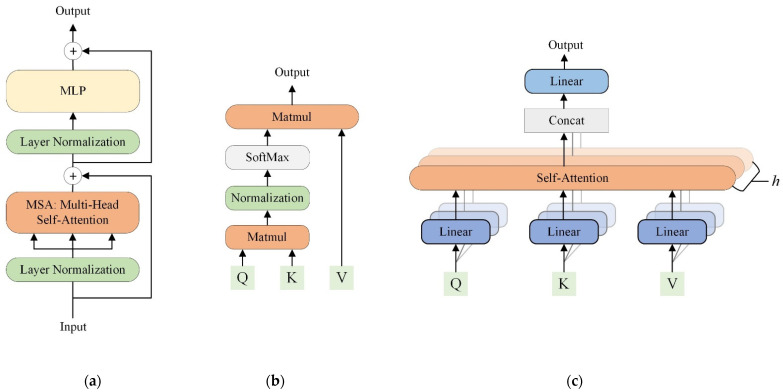

Figure 2.

(a) The transformer block consists of a multi-head self-attention (MSA) module, a multilayer perceptron (MLP), skip connections, and layer normalization. (b) Self-attention (scaled dot-product attention). Matmul: matrix multiplication. (c) Multi-head attention consists of h self-attention heads running in parallel. Concat: concatenation of the heads' feature representations. h: the number of self-attention heads.
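The three panels can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the implementation behind the figure. It assumes a pre-norm ordering (layer normalization applied before the MSA and the MLP, as in ViT), omits learnable layer-norm parameters, dropout, masking and batching, and uses the tanh approximation of GELU in the MLP. All function and parameter names (`transformer_block`, `w_q`, `w1`, and so on) are hypothetical.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the max along the axis for numerical stability
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def scaled_dot_product_attention(q, k, v):
    # Panel (b): Matmul(Q, K^T) -> scale by sqrt(d_k) -> SoftMax -> Matmul with V.
    # Works on (n, d) inputs or stacked (h, n, d) inputs.
    d_k = q.shape[-1]
    scores = q @ k.swapaxes(-1, -2) / np.sqrt(d_k)
    weights = softmax(scores, axis=-1)  # each row sums to 1 over the keys
    return weights @ v

def multi_head_attention(x, w_q, w_k, w_v, w_o, h):
    # Panel (c): project, split into h heads, attend in parallel, Concat, project.
    n, d_model = x.shape
    assert d_model % h == 0, "d_model must be divisible by h"
    d_head = d_model // h

    def split(t):
        # (n, d_model) -> (h, n, d_head)
        return t.reshape(n, h, d_head).transpose(1, 0, 2)

    q, k, v = split(x @ w_q), split(x @ w_k), split(x @ w_v)
    heads = scaled_dot_product_attention(q, k, v)          # (h, n, d_head)
    concat = heads.transpose(1, 0, 2).reshape(n, d_model)  # Concat
    return concat @ w_o

def layer_norm(x, eps=1e-6):
    # Normalize each token's features (no learnable gain/bias in this sketch)
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)

def gelu(x):
    # tanh approximation of GELU (assumed activation)
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x**3)))

def mlp(x, w1, b1, w2, b2):
    # Two linear layers with a nonlinearity in between
    return gelu(x @ w1 + b1) @ w2 + b2

def transformer_block(x, p, h):
    # Panel (a), pre-norm variant: each sub-layer is wrapped in a skip connection
    x = x + multi_head_attention(layer_norm(x), p["w_q"], p["w_k"], p["w_v"], p["w_o"], h)
    x = x + mlp(layer_norm(x), p["w1"], p["b1"], p["w2"], p["b2"])
    return x

# Usage: 4 tokens, model width 8, 2 heads, MLP hidden width 16
rng = np.random.default_rng(0)
n, d_model, h, d_ff = 4, 8, 2, 16
x = rng.standard_normal((n, d_model))
params = {
    "w_q": rng.standard_normal((d_model, d_model)) * 0.1,
    "w_k": rng.standard_normal((d_model, d_model)) * 0.1,
    "w_v": rng.standard_normal((d_model, d_model)) * 0.1,
    "w_o": rng.standard_normal((d_model, d_model)) * 0.1,
    "w1": rng.standard_normal((d_model, d_ff)) * 0.1,
    "b1": np.zeros(d_ff),
    "w2": rng.standard_normal((d_ff, d_model)) * 0.1,
    "b2": np.zeros(d_model),
}
out = transformer_block(x, params, h)
print(out.shape)  # (4, 8)
```

Because of the skip connections, the block preserves the input shape, which is what allows such blocks to be stacked. Splitting the projected features into h slices of width d_model/h keeps the cost of multi-head attention close to that of a single full-width head.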