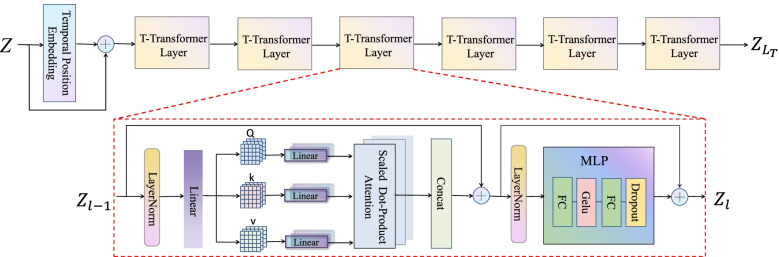

Fig. 2.

Temporal transformer (T-transformer) module. The module combines the input sequence of concatenated human pose vectors Z with the TPE and feeds the resulting encoded features into the T-transformer module, which is composed of 6 identical T-transformer layers. Specifically, each T-transformer layer first applies layer normalization and then computes multi-head attention, where each head performs dot-product attention over its own queries Q, keys K, and values V. Finally, the outputs of all heads are concatenated and passed through an MLP composed of two fully connected (FC) layers.
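The data flow in the caption can be illustrated with a minimal NumPy sketch of one forward pass. It is not the authors' implementation, and several details are assumptions not stated in the caption. The TPE is added element-wise to Z. Each layer uses the common pre-norm residual layout, with a layer norm before attention, another before the MLP, and a residual connection around each. The layer norms have no learnable scale or shift, the MLP uses a GELU activation with a hidden width of twice the feature size, and the weights are random placeholders. All function names (`t_transformer_layer`, `t_transformer`, `init_layer`) and the sizes used below are hypothetical.

```python
import numpy as np

def layer_norm(x, eps=1e-6):
    # Normalize each frame (row) over the feature axis; no learnable affine (assumption).
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def softmax(x, axis=-1):
    # Numerically stable softmax.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def gelu(x):
    # Tanh approximation of GELU (activation choice is an assumption).
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x**3)))

def init_layer(rng, d, hidden, s=0.02):
    # Hypothetical random weights standing in for learned parameters.
    return {
        "Wq": rng.normal(0.0, s, (d, d)), "Wk": rng.normal(0.0, s, (d, d)),
        "Wv": rng.normal(0.0, s, (d, d)), "Wo": rng.normal(0.0, s, (d, d)),
        "W1": rng.normal(0.0, s, (d, hidden)), "b1": np.zeros(hidden),
        "W2": rng.normal(0.0, s, (hidden, d)), "b2": np.zeros(d),
    }

def multi_head_attention(x, p, num_heads):
    f, d = x.shape
    dh = d // num_heads
    # Project to Q, K, V and split into heads: shape (heads, frames, dh).
    q = (x @ p["Wq"]).reshape(f, num_heads, dh).transpose(1, 0, 2)
    k = (x @ p["Wk"]).reshape(f, num_heads, dh).transpose(1, 0, 2)
    v = (x @ p["Wv"]).reshape(f, num_heads, dh).transpose(1, 0, 2)
    # Scaled dot-product attention between all pairs of frames, per head.
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(dh)  # (heads, frames, frames)
    out = softmax(scores, axis=-1) @ v                # (heads, frames, dh)
    # Concatenate the heads back to (frames, d), then apply the output projection.
    out = out.transpose(1, 0, 2).reshape(f, d)
    return out @ p["Wo"]

def t_transformer_layer(x, p, num_heads):
    # Layer norm -> multi-head attention, with a residual connection (assumption).
    x = x + multi_head_attention(layer_norm(x), p, num_heads)
    # Layer norm -> two-FC-layer MLP, with a residual connection (assumption).
    h = gelu(layer_norm(x) @ p["W1"] + p["b1"])
    return x + h @ p["W2"] + p["b2"]

def t_transformer(z, tpe, layers, num_heads):
    # Combine the pose sequence with the TPE by addition (assumption).
    x = z + tpe
    for p in layers:
        x = t_transformer_layer(x, p, num_heads)
    return x

# Usage: 9 frames, 32-dim pose features, 8 heads, 6 identical layers.
rng = np.random.default_rng(0)
frames, d, heads = 9, 32, 8
z = rng.normal(size=(frames, d))
tpe = rng.normal(0.0, 0.02, (frames, d))
layers = [init_layer(rng, d, 2 * d) for _ in range(6)]
y = t_transformer(z, tpe, layers, heads)
print(y.shape)  # (9, 32)
```

Because the layers preserve the (frames, features) shape, the 6 layers can be stacked freely. Without the TPE, the attention would be indifferent to frame order, and the positional encoding is what lets the module exploit temporal ordering.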