Abstract

Artificial intelligence (AI) for prostate magnetic resonance imaging (MRI) is starting to play a clinical role for prostate cancer (PCa) patients. AI-assisted reading is feasible and may streamline the reading workflow. A total of 3,369 multi-vendor prostate MRI cases are available in open datasets, acquired from 2003 to 2021 in Europe or the USA at 3 T (n = 3,018; 89.6%) or 1.5 T (n = 296; 8.8%); 346 cases (10.3%) were scanned with an endorectal coil and 3,023 (89.7%) with phased-array surface coils. Of these, 412 were collected for anatomical segmentation tasks and 3,096 for PCa detection/classification; lesion delineations are available for 2,240 cases, 56 cases have matching histopathologic images, and the PSA level is provided for 2,620 cases. The total size of all open datasets amounts to approximately 253 GB. Of note, the quality of the annotations differs considerably across datasets, and care must be taken when using them (e.g., because of data overlap). Seven grand challenges and commercial applications from eleven vendors are considered here. Only a few small studies have provided prospective validation. More work is needed, in particular validation on large-scale, multi-institutional, well-curated public datasets to test general applicability. Moreover, AI needs to be explored for clinical stages other than detection/characterization (e.g., follow-up, prognosis, interventions, and focal treatment).

Keywords: Artificial intelligence, Deep learning, Image processing (computer-assisted), Multiparametric magnetic resonance imaging, Prostatic neoplasms

Key points

Artificial intelligence shows promise for being applied to prostate cancer magnetic resonance imaging (MRI).

Open datasets for prostate MRI are limited.

Commercial solutions are available but lack adequate validation.

Grand challenges could provide the means for bias-free validation.

Background

Prostate cancer (PCa) is the second most prevalent cancer among men worldwide [1]. Nevertheless, the mortality rate is relatively low, and most patients die with and not of PCa [2]. Timely and accurate diagnosis is therefore of utmost importance to avoid overtreatment of men with indolent, clinically insignificant PCa, and to offer radical curative treatment to men with life-threatening, clinically significant PCa (csPCa) [3]. Present-day guidelines advise the use of multiparametric magnetic resonance imaging (mpMRI) prior to biopsies [3], as it can noninvasively discriminate patients with indolent PCa from those with csPCa, retaining a high sensitivity for csPCa [4–6]. Using version 2 of the Prostate Imaging Reporting and Data System (PI-RADS) [7], radiologists make a semiquantitative assessment of each suspicious lesion observed on mpMRI and assign a corresponding csPCa likelihood score from 1 to 5. Together with clinical variables, such as patient age, prostate-specific antigen (PSA) levels, and family history, PI-RADS scores help clinicians determine whether further investigation (via systematic or targeted biopsies) is needed to make a final diagnosis.

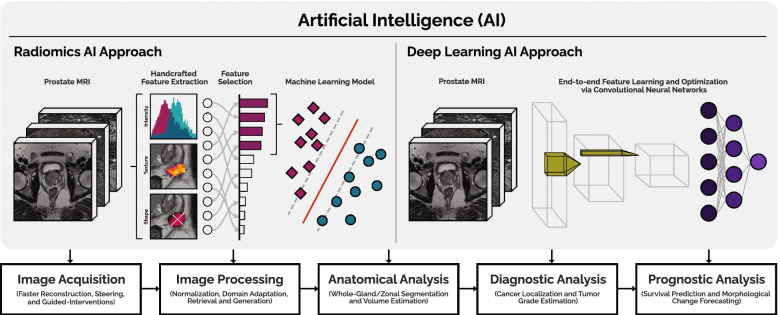

At present, the processing and interpretation of prostate mpMRI data in clinical routine is performed entirely by human experts (radiologists) who, while competent, are time-limited, cost-intensive, and cannot be easily scaled to meet increasing imaging demands [8]. Furthermore, human performance depends on experience and training, leading to significant variability between observers [9–11]. In contrast to purely qualitative interpretation, artificial intelligence (AI) exploits the quantitative nature of mpMRI data. AI can automate and support (parts of) the radiological workflow (Fig. 1), improve diagnostic accuracy, reduce costs, and alleviate the workload of healthcare personnel.

Fig. 1.

Use of artificial intelligence in the radiological workflow of prostate magnetic resonance imaging to automate, improve, and support critical tasks, considering radiomics and deep learning approaches

In recent years, continuous technical developments and increased dataset quantity and quality have pushed AI performance close to that of experienced radiologists [12–14], leading to the emergence of both publicly and commercially available solutions. However, adequate validation via large-scale retrospective multicenter studies or prospective clinical studies is often still lacking. To realize this, the prostate MRI community should invest in curating large-scale, multicenter datasets, develop a unified methodology for standardized performance estimation, reach consensus on the reference outcome standard (beginning from the presence/absence of csPCa), and establish the minimum requirements for potential testing cohorts.

The purpose of this narrative review is to provide an overview of open datasets, commercially/publicly available AI systems, and grand challenges for prostate mpMRI. We focus on methods for segmenting prostate anatomy and for diagnosis and localization of csPCa. Whereas prostate segmentation can facilitate the calculation of PSA density (and also guide treatment planning and future interventions), diagnosis and localization can inform risk stratification and biopsy strategies. As we approach a new phase in AI applications to prostate mpMRI, where the goal is to move towards transparent validation and clinical translation, we specifically report studies that investigated commercially or publicly available AI systems. Furthermore, we summarize publicly available MRI data that can be used to accelerate the development of AI systems and discuss the increasingly important role of grand challenges, which allow for bias-free benchmarking of AI algorithms applied to prostate mpMRI.
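The link between segmentation and PSA density is simple arithmetic: PSA density is the serum PSA level divided by the prostate volume, and a segmentation mask yields that volume as the number of prostate voxels times the volume of a single voxel. The minimal Python sketch below assumes the mask is given as nested lists indexed [slice][row][column] with 1 marking prostate voxels, and that the voxel spacing is known in millimeters; the function names are hypothetical and not taken from any of the tools discussed in this review.

```python
from typing import List, Sequence

Mask = List[List[List[int]]]  # hypothetical layout: [slice][row][column], 1 = prostate voxel


def prostate_volume_ml(mask: Mask, spacing_mm: Sequence[float]) -> float:
    """Volume of a binary prostate mask in mL (1 mL = 1,000 mm^3)."""
    voxel_count = sum(v for sl in mask for row in sl for v in row)
    voxel_mm3 = spacing_mm[0] * spacing_mm[1] * spacing_mm[2]
    return voxel_count * voxel_mm3 / 1000.0


def psa_density(psa_ng_per_ml: float, volume_ml: float) -> float:
    """PSA density: serum PSA (ng/mL) divided by prostate volume (mL)."""
    if volume_ml <= 0:
        raise ValueError("prostate volume must be positive")
    return psa_ng_per_ml / volume_ml


# Synthetic example: 20 slices of 40 x 50 prostate voxels at 0.5 x 0.5 x 3.0 mm.
mask = [[[1] * 50 for _ in range(40)] for _ in range(20)]
volume = prostate_volume_ml(mask, (0.5, 0.5, 3.0))  # 40,000 voxels x 0.75 mm^3 = 30 mL
print(volume, psa_density(6.0, volume))
```

In practice the mask would come from a segmentation model and the spacing from the image header; the point here is only that any error in the segmented volume propagates directly into PSA density.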

Open datasets

AI, especially deep learning, requires large, well-curated datasets to facilitate training and meaningful validation [15]. Furthermore, models require diverse, multicenter, multivendor data to achieve robust performance and generalization. However, most algorithms reported in the literature thus far use relatively small, single-center datasets [16]. The limited number and quality of publicly available datasets for prostate MRI further aggravate this issue.

Table 1 provides an overview of 17 public datasets for prostate MRI, which the authors identified through their collaborative role in this research field and supplemented with additional internet searches (i.e., The Cancer Imaging Archive, Zenodo, XNAT, GitHub, and grand-challenge.org). A total of 3,369 prostate MRI cases (including some overlapping cases) are available, of which 2,238 cases primarily include mpMRI images acquired between 2003 and 2021 in Europe and the United States. All cases are provided as full 3D volumes, except for the QUBIQ21 dataset [17], which provides a single slice per case. A total of 412 cases were collected for anatomical segmentation tasks, whereas the remainder were collected for PCa detection and/or classification. The majority of cases (n = 3,018) were scanned with a 3-T scanner, whereas only 296 cases were scanned with a 1.5-T scanner. Scanner vendors include Siemens (Siemens Healthineers, Erlangen, Germany), Philips (Philips Healthcare, Best, The Netherlands), and GE (General Electric Healthcare Systems, Milwaukee, WI, USA), for 2,571, 446, and 110 cases, respectively. A total of 346 cases were scanned with endorectal coils, whereas the remainder were scanned with phased-array surface coils. Lesion delineations are available for 2,240 cases, and 56 cases have matching histopathologic section images obtained from radical prostatectomy specimens. As Table 1 shows, clinical variables are available for some cases, e.g., a PSA level for 2,620 cases. The scans are available in Digital Imaging and Communications in Medicine (DICOM), ITK MetaImage, or NIfTI format for 1,547, 1,580, and 242 cases, respectively. The total size of all open datasets (images, annotations, and metadata) amounts to approximately 253 GB. In 2021, delineations of PCa lesions and prostatic zones for (parts of) the PROSTATEx dataset [18] were curated by an independent third party and publicly released [19].

Table 1.

Summary of prostate MRI public datasets

| Number | Dataset | Data source | Modalities | Dataset size | Acquisition years | File size | Data type | Field strength | Scanner manufacturer and model | Coil type | Clinical variables | Purpose | Reference |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | PROSTATE-MRI | TNCI | MRI (triplanar T2W, DWI, pre-contrast T1W, DCE) | MRI cases (n = 26), pathology images (n = 26) | 2008–2010 | 3.4 GB | MRI cases (DICOM), pathology images (JPEG) | 3 T | Philips Achieva | Endorectal | Gleason grade (n = 26) | Disease classification | [20] |
| 2 | Prostate Fused-MRI-Pathology | WRUHC, HUP | MRI (triplanar T2W, DWI, pre-contrast T1W, DCE) | MRI cases (n = 28), pathology images (n = 16) | 2009–2011 | 81.2 GB | MRI cases (DICOM), pathology images (TIFF, XML) | 3 T | Siemens Verio | Endorectal | Not reported | Disease classification | [21] |
| 3 | Prostate-3T | RUMC | MRI (axial T2W) | MRI cases (n = 64), SV and NVB segmentations (n = 15) | 2003–2005 | 610 MB | MRI cases (DICOM), SV and NVB segmentations (MHA) | 3 T | Siemens Trio | Pelvic phased-array surface | Not reported | Cancer detection | [22] |
| 4 | I2CVB | Not reported | MRI (axial T2W, DWI, DCE, ADC) | MRI cases (n = 12) | Not reported | 5.6 GB | MRI cases (DICOM) | 1.5 T (n = 7), 3 T (n = 5) | GE (n = 7), Siemens (n = 5) | Endorectal (n = 7), phased-array surface (n = 5) | Not reported | Cancer detection | [23] |
| 5 | TCGA-PRAD | UPMC, WHSL, LHMC | MRI (axial T2W, DWI, pre-contrast T1W, DCE, ADC), CT, PET | MRI cases (n = 10), CT images (n = 4), PET images (n = 3), pathology images (n = 14), genomics data (n = 14) | Not reported | 3.74 GB | MRI cases (DICOM), CT images (DICOM), PET images (DICOM), pathology images (WEB), genomics data (WEB) | 1.5 T | GE Signa HDx | Endorectal | PSA (n = 14), Gleason grade (n = 14), lesion location (n = 14), TNM classification (n = 14) | Disease classification, Cancer detection | [24] |
| 6 | PROSTATE-DIAGNOSIS | BMC | MRI (axial and coronal T2W, DWI, pre-contrast T1W, DCE) | MRI cases (n = 92), SV and NVB segmentations (n = 15), zones and lesions segmentations (n = 5) | 2008–2010 | 5.6 GB | MRI cases (DICOM), SV and NVB segmentations (MHA), zones and lesions segmentations (NRRD) | 1.5 T | Philips Achieva | Endorectal | Full report for: MRI (n = 54), biopsy (n = 43), specimen (n = 25), treatment (n = 52) | Disease classification, Cancer detection | [25] |
| 7 | Prostate-MRI-US-Biopsy | UCCUC | MRI (axial T2W), Ultrasound | MRI cases (n = 842), ultrasound cases (n = 1,151), gland and lesions segmentations (n = 1,150) | 2004–2011 | 78.2 GB | MRI cases (DICOM), ultrasound cases (DICOM), gland and lesions segmentations (STL) | MRI: 3 T (n = 807), 1.5 T (n = 35). Ultrasound: 2–10 MHz | MRI: Siemens Skyra (n = 609), Siemens Vida (n = 60), Siemens Prisma (n = 38), Siemens Verio (n = 36), Siemens Trio (n = 32), Siemens Avanto (n = 31), Siemens Espree (n = 1), GE Discovery MR750w (n = 14), GE Signa HDx (n = 10), GE Optima MR450w (n = 2), Philips Achieva (n = 5), Philips Ingenia (n = 4). Ultrasound: Hitachi Hi-Vision, Noblus C41V | MRI: Transabdominal phased-array surface. Ultrasound: End-fire probe | PI-RADS (n = 840), PSA (n = 1,146), Gleason grade (n = 196), prostate volume (n = 1,150), lesion location (n = 1,150) | Disease classification, Cancer detection | [26] |
| 8 | QIN PROSTATE | BWH | MRI (axial T2W, DWI, pre-contrast T1W, DCE, ADC) | MRI cases (n = 22) | Not reported | 4.4 GB | MRI cases (DICOM) | 3 T | GE Signa HDx | Endorectal | Not reported | Disease classification, Cancer detection | [27] |
| 9 | QIN-PROSTATE-Repeatability | BWH | MRI (axial T2W, DWI, DCE, ADC) | MRI cases (n = 15), zones and lesions segmentations (n = 15) | 2013–2015 | 14.86 GB | MRI cases (DICOM), zone and lesions segmentations (DICOM) | 3 T | GE Signa HDx | Endorectal | Not reported | Repeatability measurements, Disease classification | [28] |
| 10 | SPIE-AAPM-NCI PROSTATEx Challenges | RUMC | MRI (triplanar T2W, DWI, DCE, ADC, PDW) | MRI cases (n = 346), Ktrans images (n = 345), thumbnail images (n = 345) | 2012 | 15.4 GB | MRI cases (DICOM), Ktrans images (MHD), thumbnail images (BMP) | 3 T | Siemens Trio (n = 57), Siemens Skyra (n = 289) | Pelvic phased-array surface | Lesion location (n = 344), significant/insignificant cancer (n = 204), Gleason grade group (n = 99) | Disease classification, Cancer detection | [18, 29] |
| 11 | NCI-ISBI 2013 ASPS Challenge | BMC, RUMC | MRI (axial T2W) | MRI cases (n = 80), zones segmentations (n = 80) | 2003–2010 | 600 MB | MRI cases (DICOM), zones segmentations (NRRD) | 1.5 T (n = 40), 3 T (n = 40) | Philips Achieva (n = 40), Siemens Trio (n = 40) | Endorectal (n = 40), pelvic phased-array surface (n = 40) | Not reported | Zones segmentation | [30, 31] |
| 12 | PROMISE12 Challenge | HUH, BIDMC, UCL, RUMC | MRI (axial T2W) | MRI cases (n = 80), whole gland segmentations (n = 50) | Not reported | 1.2 GB | MRI cases (MHD), gland segmentations (MHD) | 1.5 T (n = 30), 3 T (n = 50) | GE (n = 20), Siemens (n = 60) | Endorectal (n = 41), phased-array surface (n = 39) | Not reported | Whole gland segmentation | [32, 33] |
| 13 | Medical Segmentation Decathlon | RUMC | MRI (axial T2W, ADC) | MRI cases (n = 48), zones segmentations (n = 32) | Not reported | 229 MB | MRI cases (NII.GZ), zones segmentations (NII.GZ) | 3 T | Not reported | Phased-array surface coil | Not reported | Zones segmentation | [34, 35] |
| 14 | QUBIQ21 Challenge | Not reported | MRI (axial T2W) | MRI cases (n = 55), whole and central gland segmentations (n = 55 [327 masks]) | Not reported | 2.04 GB | MRI cases (NII.GZ), whole and central gland segmentations (NII.GZ) | Not reported | Not reported | Endorectal | Not reported | Segmentation uncertainty estimation | [17] |
| 15 | NCIGT-PROSTATE | BMWH | MRI (triplanar T2W) | MRI cases (n = 10), gland segmentations (n = 10) | Not reported | 768 MB | MRI cases (DICOM) | 3 T | GE Signa HDx | Endorectal | Not reported | Whole gland segmentation | [36] |
| 16 | PI-CAI Challenge | RUMC, UMCG, ZGT | MRI (triplanar T2W, DWI, ADC) | MRI cases (n = 1,500), lesions segmentations (n = 1,295) | 2012–2021 | 32.5 GB | MRI cases (MHA), lesions segmentations (NII.GZ) | 1.5 T (n = 82), 3 T (n = 1,418) | Siemens ([Skyra 3 T, TrioTim 3 T, Prisma 3 T, Aera 1.5 T, Avanto 1.5 T, Espree 1.5 T]; n = 1,221), Philips ([Ingenia 3 T, Achieva 1.5 T, Intera 1.5 T]; n = 279) | Phased-array surface coil | Age (n = 1,500), PSA (n = 1,460), PSA density (n = 1,047), Gleason grade (n = 1,001), prostate volume (n = 1,473), histopathology type (n = 1,001) | Disease classification, Cancer detection | [37, 38] |
| 17 | Prostate158 | CUB | MRI (axial T2W, DWI, ADC) | MRI cases (n = 139), zones segmentation (n = 139), lesions segmentations (n = 83) | Not reported | 2.6 GB | MRI cases (NII.GZ), zones and lesions segmentations (NII.GZ) | 3 T | Not reported | Phased-array surface coil | Not reported | Zones segmentation, Cancer detection | [39] |

ADC Apparent diffusion coefficient; BIDMC Beth Israel Deaconess Medical Center, Boston, MA, USA; BMC Boston Medical Center, Boston, MA, USA; BWH Brigham and Women’s Hospital, Boston, MA, USA; CT Computed Tomography; CUB Charité-Universitätsmedizin Berlin, Berlin, Germany; DCE Dynamic contrast-enhanced; DWI Diffusion-weighted imaging; GE General Electric Healthcare Systems, Milwaukee, WI, USA; HUH Haukeland University Hospital, Bergen, Norway; HUP Hospital of the University of Pennsylvania, Philadelphia, PA, USA; LHMC Lahey Hospital Medical Center, Burlington, MA, USA; MRI Magnetic resonance imaging; NVB Neurovascular Bundle; PDW Proton Density-Weighted; PET Positron emission tomography; Philips Philips Healthcare, Best, the Netherlands; PI-RADS Prostate Imaging-Reporting And Data System; PSA Prostate-specific antigen; RUMC Radboud University Medical Center, Nijmegen, the Netherlands; Siemens Siemens Healthineers, Erlangen, Germany; SV Seminal vesicles; T1W T1-weighted; T2W T2-Weighted; TNCI The National Cancer Institute, Bethesda, MD, USA; TNM Tumor Node Metastasis; UCCUC The University of California Clark Urology Center, Los Angeles, CA, USA; UCL University College London, London, UK; UMCG University Medical Center Groningen, Groningen, the Netherlands; UPMC University of Pittsburgh Medical Center, Pittsburgh, PA, USA; WHSL Washington University in St. Louis, Saint Louis, MO, USA; WRUHC Western Reserve University Hospitals, Cleveland, OH, USA; ZGT Ziekenhuis Groep Twente, Twente, the Netherlands.

Although a considerable amount of prostate MRI data appears to be available, the quality of the outcome to be predicted, i.e., the reference standard for the annotations (if any), is debatable. For datasets with annotations of the prostate anatomy, the reference standard is often one or a few human readers, whose annotations depend strongly on their level of experience. Similarly, for the prediction of csPCa, the quality of the annotations differs considerably across datasets: one dataset reports pathology outcomes from MRI fusion biopsies, another uses in-bore MRI biopsies, another uses radical prostatectomy, while for others the reference standard remains unclear. Accuracy can vary strongly across these tissue sampling strategies. Inconsistencies and missing information across imaging data, cohort distribution, and reference standard can also make it difficult to consolidate multiple public datasets into one. At the same time, most public datasets are too small to be used on their own. We conclude that annotations and data characteristics for public datasets are often ill-defined, and we advise potential users to contact the data providers for additional information (e.g., patient distribution, follow-up status) prior to use.

Data overlap is another issue with public datasets: some datasets contain all or part of the cases of other public datasets. For example, the NCI-ISBI 2013 dataset [30] combined 40 cases from the Prostate-3T dataset [22] and 40 cases from the PROSTATE-DIAGNOSIS dataset [25], and the entire PROSTATEx [18] and Prostate-3T [22] datasets are included in the PI-CAI dataset [38]. Combining these datasets may therefore inadvertently lead to an overestimated number of independent cases in scientific AI experiments or product development and, if overlapping cases end up in both training and test sets, to overly optimistic performance estimates.
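Because cases are re-released under new identifiers, overlap is not always visible from case IDs alone. One pragmatic check, sketched below in Python, fingerprints the raw voxel data of every case and reports fingerprints that occur in more than one dataset. This assumes that overlapping cases were redistributed with bit-identical voxel data; resampling, re-encoding, or format conversion (e.g., DICOM to MHA) would defeat an exact hash, in which case metadata or image-similarity matching would be needed instead. The input structure, dataset names, and function names are hypothetical.

```python
import hashlib
from collections import defaultdict
from typing import Dict, List, Tuple

# Hypothetical input: {dataset_name: {case_id: raw voxel bytes}}
Datasets = Dict[str, Dict[str, bytes]]


def find_cross_dataset_overlap(datasets: Datasets) -> List[List[Tuple[str, str]]]:
    """Group (dataset, case_id) pairs whose voxel data are bit-identical across datasets."""
    by_digest: Dict[str, List[Tuple[str, str]]] = defaultdict(list)
    for ds_name, cases in datasets.items():
        for case_id, voxels in cases.items():
            by_digest[hashlib.sha256(voxels).hexdigest()].append((ds_name, case_id))
    # Keep only fingerprints that occur in at least two different datasets.
    return [group for group in by_digest.values() if len({ds for ds, _ in group}) > 1]


def count_unique_cases(datasets: Datasets) -> int:
    """Number of distinct cases after merging, i.e., the effective dataset size."""
    return len({hashlib.sha256(v).hexdigest() for cases in datasets.values() for v in cases.values()})


toy = {
    "dataset_A": {"a_001": b"\x01\x02\x03", "a_002": b"\x04\x05"},
    "dataset_B": {"b_017": b"\x01\x02\x03", "b_018": b"\x09"},
}
print(find_cross_dataset_overlap(toy))  # one group: a_001 and b_017
print(count_unique_cases(toy))          # 3 distinct cases rather than 4
```

Running such a check before splitting merged data into training and test sets helps keep duplicated cases on one side of the split.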

All datasets were confirmed to have been collected with institutional/ethical review board approval, except for I2CVB [23], Prostate158 [39], and QUBIQ21 [17], for which this information was not found. All datasets are anonymized. In an increasingly connected world, however, it is becoming more difficult to anonymize data such that they can never be traced back to the patient. Radiological images are almost always acquired, exchanged, and stored in DICOM format, and the DICOM header contains a wealth of information that could allow the patient to be identified. The DICOM standard defines security concepts for anonymization, and public tools that implement them are available [40]. A simpler solution is to provide images in a non-DICOM format, whose header contains minimal information. The drawback of non-DICOM images, or of very strongly anonymized DICOM images, is that relevant scientific information may be lost. Public prostate MRI data should therefore preferably consist of carefully anonymized DICOM images with as many tags preserved as possible. The Cancer Imaging Archive applies a very strict DICOM anonymization procedure while preserving the most comprehensive set of DICOM tags available for scientific research [41].
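The trade-off between privacy and scientific value can be made concrete with a denylist approach: remove or replace header attributes that identify the patient, and keep the acquisition parameters that matter for AI development. The Python sketch below represents a header as a plain dictionary keyed by DICOM attribute keywords. The keyword list is a small illustrative subset chosen for this example, not the full confidentiality profile defined in the DICOM standard (PS3.15), and real pipelines should rely on validated tools such as the public ones cited in the text [40].

```python
from typing import Any, Dict

# Illustrative subset of identifying attributes (DICOM keywords); NOT the full PS3.15 profile.
# Real de-identification must also handle dates, private tags, and burned-in annotations.
IDENTIFYING_KEYWORDS = {
    "PatientName", "PatientID", "PatientBirthDate", "PatientAddress",
    "OtherPatientIDs", "ReferringPhysicianName", "InstitutionName", "AccessionNumber",
}


def anonymize_header(header: Dict[str, Any], pseudonym: str) -> Dict[str, Any]:
    """Drop identifying attributes and keep everything else (e.g., field strength, echo time)."""
    clean = {k: v for k, v in header.items() if k not in IDENTIFYING_KEYWORDS}
    # Replace patient identifiers with a study-specific pseudonym so series remain linkable.
    clean["PatientID"] = pseudonym
    clean["PatientName"] = pseudonym
    return clean


header = {
    "PatientName": "Doe^John", "PatientID": "123456", "PatientBirthDate": "19500101",
    "InstitutionName": "Example Hospital", "Manufacturer": "SIEMENS",
    "MagneticFieldStrength": 3.0, "EchoTime": 104.0,
}
print(anonymize_header(header, "CASE-0001"))
```

A denylist preserves unknown attributes by default, which retains scientific information but can leak identifiers the list forgets; an allowlist makes the opposite trade-off.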

Patient inclusion criteria were ambiguous for most datasets, which may raise questions about the degree of bias in the selected data. Images were mainly acquired for PCa detection in patients with suspected csPCa and/or for intervention or staging purposes. The PROSTATE-MRI [20] and Prostate Fused-MRI-Pathology [21] datasets included patients in whom biopsy confirmed cancer and who underwent radical prostatectomy. For the QIN-PROSTATE-Repeatability dataset [28], the criteria were the patient’s ability to undergo prostate MRI with an endorectal coil and complete the repeat examination. For the Medical Segmentation Decathlon (MSD) dataset [35], the criterion was the suitability for the development of a semantic segmentation algorithm.

The datasets have been used extensively by researchers for the development of clinical applications, including segmentation of prostate tissue and detection/diagnosis of csPCa [16, 42]. For segmentation applications, the PROMISE12 [32] and MSD [35] datasets were most commonly used for segmentation of the whole prostate gland and of the prostate zones, respectively (e.g., [43, 44]). PROSTATEx [18] is currently the most commonly used dataset for developing AI for the detection of csPCa (e.g., [45–47]).

As of May 6, 2022, the PI-CAI challenge [37] has publicly released 1,500 (of 12,500) cases [38] with a much stronger reference standard than that of the PROSTATEx challenge [29]. Additionally, PI-CAI reserves a hidden testing cohort of 1,000 cases, with histopathology-confirmed positives (Gleason grade group > 1) and histopathology-confirmed (Gleason grade group < 2) or follow-up-confirmed negatives, which will span the complete distribution of patients encountered in clinical routine. Data will be multivendor (3-T scanners from Philips and Siemens) and multicenter (Radboudumc, Ziekenhuis Groep Twente, University Medical Center Groningen, Norwegian University of Science and Technology). Patient age, PSA density, PSA level, and prostate volume will be provided for all cases. Expert-derived lesion delineations are provided for approximately 80% of all cases, and AI-derived lesion delineations (pseudo-labels) are provided for all cases, generated with a state-of-the-art csPCa detection model developed at Radboudumc [48].
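The reference-standard rule for the hidden test cohort can be written as a small labeling function. The Python sketch below assumes that the grade mentioned in the text is the ISUP/Gleason grade group, so that positives have a grade group of 2 or higher. It also assumes that each case record carries an optional histopathology grade group and an optional follow-up flag. These field names are hypothetical, and the official challenge definitions [37] take precedence.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CaseRecord:  # hypothetical fields, for illustration only
    grade_group: Optional[int] = None             # histopathology grade group; 0 = benign (sketch convention)
    negative_on_follow_up: Optional[bool] = None  # True if follow-up confirmed absence of csPCa


def cspca_label(case: CaseRecord) -> Optional[int]:
    """Return 1 for csPCa, 0 for no csPCa, None if no valid reference standard exists."""
    if case.grade_group is not None:
        return 1 if case.grade_group > 1 else 0  # grade group >= 2 counts as clinically significant
    if case.negative_on_follow_up:
        return 0
    return None  # neither histopathology nor confirmatory follow-up: exclude from evaluation
```

Returning `None` rather than defaulting to negative keeps unverified cases out of the test set instead of silently counting them as true negatives.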

An additional source of public images will be the ProCancer-I platform [49]. It was launched in 2020 to address national and international regulations on medical data sharing, as well as the lack of tooling, which have led many institutions not to make their data available [50, 51]. To enable institutions that are willing to share their data to improve, validate, and test state-of-the-art AI tools for prostate MRI diagnosis, this EU-funded project provides a scalable high-performance computing platform that will host the world’s largest collection of anonymized prostate MRI image datasets (> 17,000 cases), based on data donations in compliance with EU legislation (GDPR). To ensure rapid clinical implementation of the developed models, the platform partners will closely monitor performance, accuracy, and reproducibility. Optima [52] is another EU-funded initiative, which aims to overcome the limitations of data sharing while enabling research and clinical partners to leverage a variety of federated and centralized European data for the dynamic development and clinical implementation of AI tools to combat prostate, breast, and lung cancer.

Federated learning is an alternative approach to making data available. It allows robust prostate MRI AI to be trained with representative data from multiple countries and institutions. In contrast to conventional approaches, in a federated learning framework the AI model is trained by iteratively sharing model weights obtained from training on local data. Consequently, the local data need not be shared and never leave the hospitals [53, 54]. Promising frameworks for federated learning include Flower [55], FedML [56], and PySyft [57], which support several operating systems, the use of graphics processing units, and differential privacy. A successful example of federated training of a prostate segmentation algorithm has been reported by Sarma et al. [58].
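The weight-sharing loop described above can be sketched in a few lines of Python. The aggregation step below is federated averaging (FedAvg), in which the server averages the locally trained weights in proportion to each site's number of training cases. This is one common aggregation rule, not necessarily the one used by the frameworks above or by Sarma et al. [58]. Model weights are flattened into plain lists of floats, and local training is abstracted as a hypothetical `local_update` callable that runs inside each hospital.

```python
from typing import Callable, List, Sequence

Weights = List[float]


def federated_average(site_weights: Sequence[Weights], n_cases: Sequence[int]) -> Weights:
    """Average model weights across sites, weighted by each site's number of cases (FedAvg)."""
    total = sum(n_cases)
    n_params = len(site_weights[0])
    return [
        sum(w[i] * n for w, n in zip(site_weights, n_cases)) / total
        for i in range(n_params)
    ]


def federated_round(
    global_weights: Weights,
    local_update: Callable[[int, Weights], Weights],  # hypothetical: trains on one site's data
    n_cases: Sequence[int],
) -> Weights:
    """One round: every site trains from the global weights; only weights leave the site."""
    updated = [local_update(site, list(global_weights)) for site in range(len(n_cases))]
    return federated_average(updated, n_cases)


# Toy example: two hospitals with 100 and 300 cases.
print(federated_average([[1.0, 2.0], [3.0, 4.0]], [100, 300]))  # [2.5, 3.5]
```

Weighting by case count lets larger sites contribute proportionally more, which mirrors pooled training but can under-represent small centers; frameworks typically offer alternative aggregation strategies for that reason.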

Available AI tools for prostate MRI

An inventory of commercial/public prostate MRI AI products/tools provides an overview of the available technology and supported clinical applications. This is relevant both to clinical end users and to scientists exploring knowledge gaps. Such inventories in published reviews, however, quickly become outdated. Of note, a website [59] provides an up-to-date overview of available AI-based prostate MRI software for clinical radiology.

Table 2 provides a comprehensive overview of current commercial products. Eleven vendors offer products that help report and acquire prostate MRI for diagnostics and intervention. The AI claims range from modest automatic segmentation of the prostate to measure its volume, to the calculation of a tumor heatmap, up to automated detection of csPCa [60]. Consequently, products vary in their level of clinical support and in their ability to improve the workflow or reduce reader variability. Only a few vendors are currently able to automatically generate reports that can help streamline the diagnostic workflow. For only one vendor (Siemens Healthineers) [13] has a prototype been shown to increase diagnostic accuracy and reduce variability between readers [60]. Various trials are underway, and other vendors are expected to upgrade their products with similar claims soon. AI for prostate MRI is not unlike many other radiology applications, in that peer-reviewed evidence of effectiveness is mostly lacking [61]. As shown in Table 2, certification levels vary, which implies that validation levels vary as well. A ‘soft’ claim, such as the ability to produce a tumor heatmap without explicit detection performance, can be covered by a class I certification with few validation studies. The ability to predict the presence of csPCa, and the associated claims of workflow improvement, require much stronger evidence and higher validation levels (class II and above).

Table 2.

Overview of commercially available prostate MRI tools that implement AI. The table attempts a comprehensive comparison in terms of highest claim and level of trust based on certification level

| Number | Vendor | Product(s) name | Highest AI claim | FDA | CE |
|---|---|---|---|---|---|
| 1 | Quibim | DWI-IVIM, DCE-PKM, Texture, T2 mapping, QP-Prostate | Quantitative MR possibly reducing machine dependence and automating some report generation | Class II | Class IIa |
| 2 | Quantib | Quantib-Prostate | Automatic tumor detection that can automate some report generation | Class II | Class IIb |
| 3 | JLK | JPC-01K | Heatmap that may help spot tumors | No | Class I |
| 4 | Siemens Healthineers | Prostate MR Syngo.via, AI-Rad companion | Automatic tumor detection that can automate some report generation | Class II | Class IIb |
| 5 | Lucida | Prostate Intelligence | Automatic tumor detection that can automate some report generation | No | Class I |
| 6 | Cortechs.ai | OnQ Prostate | Heatmap that may help spot tumors | Class II | No |
| 7 | Elekta | ABAS | Automated anatomy segmentation | Class II | Not reported |
| 8 | Mirada | DLCExpert | Automated anatomy segmentation | No | Class IIb |
| 9 | MIM | MIM Maestro | Automated anatomy segmentation | Class II | Class III |
| 10 | Philips | MRCAT Prostate + Auto-Contouring | Automated anatomy segmentation | Class II | Not reported |
| 11 | General Electric | PROView DL | Automated anatomy segmentation | Class II | Not reported |

AI Artificial intelligence, CE European conformity, FDA Food and Drug Administration. See [59] for detailed descriptions of features, links to vendor websites, and related information

Non-commercial public AI tools for prostate MRI may reflect the current state of the art. Many tools have been made available for research or non-clinical purposes in the form of publications, code, software plugins, or grand challenge algorithms. A number of these tools have already been presented in other review articles [16, 42, 62, 63]. However, access to the trained models is not always possible, and even when it is, they are usually not easy for end users or researchers to implement. Furthermore, developers who want to benchmark against these models usually must obtain the source code, install libraries, and adapt the model, which can lead to unfair and indirect comparisons. One way to overcome this problem is to use platforms that allow pre-trained models to be used and benchmarked directly.

NVIDIA Clara Imaging [64] is a platform that provides a framework for the development and direct deployment of AI applications for medical imaging. It includes a set of public, pre-trained deep learning models. Currently, the available models appear to focus primarily on segmentation tasks, including nnU-Net [43], a self-configuring method for deep learning-based segmentation that has shown excellent performance on the MSD [34] and PROMISE12 [32] challenges. Another platform is Grand Challenge–Algorithms [65], to which pre-trained models can be uploaded so that developers can directly test the method and compare their models against its performance. The platform currently includes a prostate MRI segmentation model and two csPCa detection models. Furthermore, the Federated Tumor Segmentation (FeTS) Platform [66] provides access to multiple pre-trained models that can be deployed in a federated fashion.

Grand challenges

Grand challenges provide the means to benchmark and validate multiple AI models on a common set of training and testing datasets in a bias-free manner. For prostate MRI, there are a handful of public challenges, which address two clinical outcome categories: prostate anatomy segmentation (NCI-ISBI 2013 [31], PROMISE12 [33], MSD [34], QUBIQ21 [17], Prostate158 [39]) and csPCa detection/diagnosis (PROSTATEx [29], Prostate158 [39], and PI-CAI [37]).

The NCI-ISBI 2013, MSD, and Prostate158 challenges evaluated the performance of AI models for segmentation of the prostatic peripheral zone (PZ) and transition zone (TZ). Meanwhile, the PROMISE12 challenge evaluated segmentation of the whole gland rather than its constituent zones. Segmentation of the whole gland is considered an easier task than segmentation of the prostatic zones (especially the PZ). This is reflected by top Dice similarity coefficients in the literature of about 0.90 for the TZ and 0.75 for the PZ [67]. In the MSD, the best-performing method, nnU-Net [43], achieved similar results, with Dice similarity coefficients of 0.90 and 0.77 for the TZ and PZ, respectively.
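For reference, the Dice similarity coefficient between a predicted segmentation A and a reference segmentation B is DSC = 2|A ∩ B| / (|A| + |B|), ranging from 0 (no overlap) to 1 (perfect overlap). The Python sketch below computes it per zone from flattened label maps. The label convention (1 = PZ, 2 = TZ) and the choice to return 1.0 when both masks are empty are assumptions made for this example, not the conventions of a specific challenge.

```python
from typing import Sequence

PZ, TZ = 1, 2  # hypothetical label convention for zonal label maps


def dice(pred: Sequence[bool], ref: Sequence[bool]) -> float:
    """Dice similarity coefficient 2|A∩B| / (|A| + |B|) for two binary masks."""
    if len(pred) != len(ref):
        raise ValueError("masks must have the same number of voxels")
    intersection = sum(1 for p, r in zip(pred, ref) if p and r)
    size_sum = sum(map(bool, pred)) + sum(map(bool, ref))
    return 1.0 if size_sum == 0 else 2.0 * intersection / size_sum


def zone_dice(pred_labels: Sequence[int], ref_labels: Sequence[int], zone: int) -> float:
    """Dice for one zone, computed from flattened label maps."""
    return dice([v == zone for v in pred_labels], [v == zone for v in ref_labels])


pred = [0, 1, 1, 2, 2, 2, 0, 1]
ref = [0, 1, 2, 2, 2, 2, 0, 1]
print(zone_dice(pred, ref, PZ), zone_dice(pred, ref, TZ))
```

Because the PZ is a thin, curved structure, a few misassigned boundary voxels remove a larger fraction of its overlap than of the bulkier TZ, which is one reason PZ Dice scores tend to be lower.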

PROMISE12 ranked AI-derived segmentations using a score that averages four different similarity and distance metrics computed relative to an expert’s manual annotations. A perfect score is 100, and in their article [33], the challenge organizers explained that scores are normalized such that a second (inexperienced) reader obtains 85. They already indicated that very high scores (> 90) likely fall within the range of inter-reader variability. During the challenge, the best score achieved was 87. However, on the present-day post-challenge leaderboard, ten submissions have scores ranging from 89.5 to 91.9, with the highest score achieved by MSD-Net [68]. At these upper limits of performance, differences between AI algorithms with respect to the PROMISE12 reference standard (a human expert with six years of experience) may not indicate better or worse performance. In particular, with deep learning algorithms performing so well, the challenge now is to define a better reference standard that is more representative of the biological ground truth, which remains an open research question.
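The normalization described above can be illustrated with a linear mapping in which a perfect segmentation scores 100 and a segmentation as good as the second reader's scores 85. The Python sketch below assumes that every metric has first been expressed as an error (0 = perfect; e.g., 1 − Dice, or a boundary distance in mm). It maps each error linearly, floors the result at 0, and averages the per-metric scores. It illustrates the principle only; it is not the official PROMISE12 evaluation code, and the numbers in the example are illustrative.

```python
from typing import Sequence


def metric_score(error: float, second_reader_error: float) -> float:
    """Linear map: error 0 -> 100, error equal to the second reader's -> 85; floored at 0."""
    return max(0.0, 100.0 - 15.0 * (error / second_reader_error))


def overall_score(errors: Sequence[float], second_reader_errors: Sequence[float]) -> float:
    """Average of the per-metric scores."""
    scores = [metric_score(e, r) for e, r in zip(errors, second_reader_errors)]
    return sum(scores) / len(scores)


# Illustrative errors for four metrics. An algorithm with half the second reader's error
# on every metric scores 92.5, i.e., it ranks above the human second reader.
second_reader = [0.2, 8.0, 2.0, 6.0]
algorithm = [0.1, 4.0, 1.0, 3.0]
print(overall_score(second_reader, second_reader))  # 85.0
print(overall_score(algorithm, second_reader))      # 92.5
```

Under such a mapping, any score above 85 means "closer to the reference expert than another human reader", so ranking differences in that range say more about agreement with one annotator than about biological accuracy.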

The QUBIQ21 challenge aimed to quantify uncertainties in biomedical image segmentation. Recent advances in probabilistic deep learning allow for uncertainty estimation across predictions [69], which can pave the way to explainable, trustworthy AI and can inform clinicians about diagnostic uncertainty of AI [70]. QUBIQ21 addresses multiple organs and imaging modalities, including prostate MRI. For prostate MRI, there are two segmentation tasks for 55 T2W cases (one mid-gland slice with six expert annotations per case).
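With several expert annotations per case, the reference standard itself becomes probabilistic: averaging the binary masks gives, for each pixel, the fraction of experts who included it. The Python sketch below builds such a soft label and compares it with a predicted probability map by averaging the Dice coefficient over a range of thresholds. The threshold set (0.1 to 0.9) and the empty-mask convention are assumptions for illustration and may differ from the official QUBIQ21 evaluation.

```python
from typing import List, Sequence


def soft_label(masks: Sequence[Sequence[int]]) -> List[float]:
    """Per-pixel fraction of annotators who marked the pixel (masks are flattened 0/1 lists)."""
    n = len(masks)
    return [sum(pixel_votes) / n for pixel_votes in zip(*masks)]


def _dice(a: Sequence[bool], b: Sequence[bool]) -> float:
    inter = sum(1 for x, y in zip(a, b) if x and y)
    total = sum(a) + sum(b)
    return 1.0 if total == 0 else 2.0 * inter / total  # both empty: treat as agreement


def thresholded_dice(pred_prob: Sequence[float], ref_prob: Sequence[float]) -> float:
    """Mean Dice between binarized prediction and soft reference over thresholds 0.1..0.9."""
    thresholds = [t / 10 for t in range(1, 10)]
    scores = [
        _dice([p > t for p in pred_prob], [r > t for r in ref_prob])
        for t in thresholds
    ]
    return sum(scores) / len(scores)


# Six hypothetical annotations of a 4-pixel slice.
annotations = [[1, 1, 0, 0], [1, 1, 1, 0], [1, 0, 0, 0], [1, 1, 0, 0], [1, 1, 1, 0], [1, 1, 0, 0]]
reference = soft_label(annotations)  # pixel-wise agreement: 6/6, 5/6, 2/6, 0/6
print(reference, thresholded_dice(reference, reference))
```

A model that reproduces the experts' disagreement (a probability near 2/6 where only two of six experts agree) is rewarded across thresholds, whereas a hard 0/1 prediction matches the soft label only at some of them.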

The recently released Prostate158 challenge aims to segment the PZ and TZ of the prostate as well as PCa lesions. It provides 139 cases for training and validation of AI models and uses a hidden test dataset of 19 cases to evaluate model performance.

The PROSTATEx and PI-CAI challenges aim to evaluate the performance of AI models for csPCa detection and classification. Launched in 2014, the PROSTATEx challenge has to date been the only public benchmark for this task. More than 1,765 entries have been submitted, and the highest area under the receiver operating characteristic curve (AUC) currently stands at 0.95. Meanwhile, the PI-CAI challenge provides the largest training (n ≈ 9,000, of which 1,500 cases will be made public), validation (n ≈ 100), and testing (n ≈ 1,000) datasets to date, with a study design and reference standard established together with multidisciplinary radiology, urology, and AI experts in the domain. PI-CAI also includes an international reader study that has so far enrolled 63 radiologists (42 centers, 18 countries; 1–23 years of experience reading prostate MRI, median 9 years) to assess the clinical viability of stand-alone AI relative to radiologists.
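The AUC summarizes how well a model's scores rank csPCa cases above non-csPCa cases: 0.5 corresponds to chance and 1.0 to perfect ranking. A minimal, dependency-free sketch using the equivalent Mann-Whitney formulation follows; the lesion scores and labels are hypothetical, and the quadratic-time loop is for illustration only (in practice a library routine such as scikit-learn's `roc_auc_score` would be used).

```python
def roc_auc(scores, labels) -> float:
    """Area under the ROC curve via the Mann-Whitney statistic: the probability
    that a randomly chosen positive case scores higher than a randomly chosen
    negative case, with ties counted as one half."""
    positives = [s for s, y in zip(scores, labels) if y == 1]
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    if not positives or not negatives:
        raise ValueError("AUC needs at least one positive and one negative case")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


# Hypothetical lesion-level csPCa likelihoods (1 = csPCa, 0 = not csPCa).
lesion_scores = [0.9, 0.8, 0.3, 0.4, 0.2]
lesion_labels = [1, 0, 1, 1, 0]
auc = roc_auc(lesion_scores, lesion_labels)  # 4 of 6 pairs ranked correctly: about 0.667
```

Because the AUC depends only on the ranking of scores, it is insensitive to how well calibrated the predicted likelihoods are.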

Discussion

AI is starting to take on an assistive role in the PCa clinical pathway. The advent of deep learning for medical imaging has made it possible to build stand-alone AI that achieves good to expert-level performance in prostate segmentation and volume estimation and in csPCa detection [14, 15, 45]. Deep learning models are being incorporated into products whose human-interface software aims to improve workflow and reduce variability in diagnostic performance [45, 47, 60, 71]. Moreover, these AI diagnostic models can be used before, during, and after radiation therapy. Segmentation models can be used for organ delineation in the planning phase and for prostate-targeted MRI-guided radiotherapy [72]. Detection models can, for example, be used to monitor the response of the lesion during and after treatment [73]. Similar developments have already been seen in other medical imaging domains such as breast [74] and lung [75] imaging. Prostate MRI data have become available only recently, which explains the rather late development of AI for prostate MRI. Development is further complicated because prostate MRI is intrinsically multiparametric, and the individual sequences differ greatly in appearance.

Other complications include image artifacts [76] and the lack of standardized MR image acquisition, although minimum requirements for PI-RADS reading exist [7]. An important role for AI may therefore also lie in image preprocessing and quality control [77–80]. Finally, prostate MRI lacks a well-defined reference standard, and definitions of cancer significance vary widely. The large-scale data collection and curation driven by AI development will help advance the field. Ultimately, AI can help prostate MRI improve the outlook for men with PCa by reducing unnecessary biopsies and overtreatment, enabling earlier detection with less burden, and increasing survival.

We have attempted to provide an overview of the current state of the art of AI applications for prostate MRI. Unlike other reviews [16, 42, 62], which focus on the AI tools that have been developed, this review focuses on open datasets, commercially and publicly available AI, and grand challenges. However, because this is a rapidly growing field, a limitation of this review is that, like the reviews before it, it will become outdated within a relatively short time. Up-to-date reviews of this field are therefore continually needed.

In conclusion, available prostate MRI AI products are relatively few: only one is validated for assisting in the difficult detection task, and the others address the simpler task of gland volume estimation. AI prediction of other clinical outcomes in the prostate cancer pathway is still maturing or has yet to begin. Much research is still required to realize AI that supports the whole prostate cancer pathway. Public, well-curated datasets are available but are relatively small and vary in the quality of their reference standard. More computational AI challenges are needed to provide independent validation of products and research and to build trust in AI for prostate MRI.

Abbreviations

- AI: Artificial intelligence
- csPCa: Clinically significant prostate cancer
- DICOM: Digital Imaging and Communications in Medicine
- mpMRI: Multiparametric magnetic resonance imaging
- MSD: Medical Segmentation Decathlon
- PCa: Prostate cancer
- PI-RADS: Prostate Imaging Reporting and Data System
- PSA: Prostate-specific antigen
- PZ: Peripheral zone
- TZ: Transitional zone

Authors’ contributions

All authors drafted and reviewed the manuscript. The authors read and approved the final manuscript.

Funding

This work was financed by the Research Council of Norway (Grant Number 295013), the Norwegian Cancer Society and Prostatakreftforeningen (Grant Number 215951), the Liaison Committee between the Central Norway Regional Health Authority and the Norwegian University of Science and Technology (Grant Numbers 90265300 and 90793700), EU H2020 ProCAncer-I (Grant Number 952159), EU H2020 PANCAIM (Grant Number 101016851), and EU IMI2 PIONEER (Grant Number 777492). Open access funding provided by Norwegian University of Science and Technology.

Availability of data and materials

Not applicable.

Declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

Henkjan Huisman declares that he receives research support from Siemens Healthineers; the other authors declare that they have no competing interests.

Footnotes

This article belongs to the thematic series entitled “AI tools for prostate MRI and PET/CT: from data science to clinical value” guest editors: Rossano Girometti (Udine, Italy), Renato Cuocolo (Baronissi, Italy), and Andrey Fedorov (Boston, MA, USA).

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Sung H, Ferlay J, Siegel RL, et al. Global Cancer Statistics 2020: GLOBOCAN Estimates of Incidence and Mortality Worldwide for 36 Cancers in 185 Countries. CA Cancer J Clin. 2021;71:209–249. doi: 10.3322/caac.21660.

- 2. Welch HG, Albertsen PC. Reconsidering prostate cancer mortality - the future of PSA screening. N Engl J Med. 2020;382:1557–1563. doi: 10.1056/NEJMms1914228.

- 3. Mottet N, Bellmunt J, Bolla M, et al. EAU-ESTRO-SIOG Guidelines on Prostate Cancer. Part 1: Screening, Diagnosis, and Local Treatment with Curative Intent. Eur Urol. 2017;71:618–629. doi: 10.1016/j.eururo.2016.08.003.

- 4. Rouviere O, Puech P, Renard-Penna R, et al. Use of prostate systematic and targeted biopsy on the basis of multiparametric MRI in biopsy-naive patients (MRI-FIRST): a prospective, multicentre, paired diagnostic study. Lancet Oncol. 2019;20:100–109. doi: 10.1016/S1470-2045(18)30569-2.

- 5. Kasivisvanathan V, Rannikko AS, Borghi M, et al. MRI-targeted or standard biopsy for prostate-cancer diagnosis. N Engl J Med. 2018;378:1767–1777. doi: 10.1056/NEJMoa1801993.

- 6. Eklund M, Jaderling F, Discacciati A, et al. MRI-targeted or standard biopsy in prostate cancer screening. N Engl J Med. 2021;385:908–920. doi: 10.1056/NEJMoa2100852.

- 7. Weinreb JC, Barentsz JO, Choyke PL, et al. PI-RADS prostate imaging - reporting and data system: 2015, Version 2. Eur Urol. 2016;69:16–40. doi: 10.1016/j.eururo.2015.08.052.

- 8. Litjens G, Debats O, Barentsz J, Karssemeijer N, Huisman H. Computer-aided detection of prostate cancer in MRI. IEEE Trans Med Imaging. 2014;33:1083–1092. doi: 10.1109/TMI.2014.2303821.

- 9. Rosenkrantz AB, Ayoola A, Hoffman D, et al. The learning curve in prostate MRI interpretation: self-directed learning versus continual reader feedback. AJR Am J Roentgenol. 2017;208:W92–W100. doi: 10.2214/AJR.16.16876.

- 10. Gatti M, Faletti R, Calleris G, et al. Prostate cancer detection with biparametric magnetic resonance imaging (bpMRI) by readers with different experience: performance and comparison with multiparametric (mpMRI). Abdom Radiol (NY). 2019;44:1883–1893. doi: 10.1007/s00261-019-01934-3.

- 11. Greer MD, Shih JH, Lay N, et al. Interreader variability of prostate imaging reporting and data system version 2 in detecting and assessing prostate cancer lesions at prostate MRI. AJR Am J Roentgenol. 2019;212:1197–1204. doi: 10.2214/AJR.18.20536.

- 12. Cao RM, Bajgiran AM, Mirak SA, et al. Joint prostate cancer detection and Gleason score prediction in mp-MRI via FocalNet. IEEE Trans Med Imaging. 2019;38:2496–2506. doi: 10.1109/TMI.2019.2901928.

- 13. Winkel DJ, Wetterauer C, Matthias MO, et al. Autonomous detection and classification of PI-RADS lesions in an MRI screening population incorporating multicenter-labeled deep learning and biparametric imaging: proof of concept. Diagnostics (Basel). 2020;10:951. doi: 10.3390/diagnostics10110951.

- 14. Saha A, Hosseinzadeh M, Huisman H. End-to-end prostate cancer detection in bpMRI via 3D CNNs: effects of attention mechanisms, clinical priori and decoupled false positive reduction. Med Image Anal. 2021;73:102155. doi: 10.1016/j.media.2021.102155.

- 15. Hosseinzadeh M, Saha A, Brand P, Slootweg I, de Rooij M, Huisman H. Deep learning-assisted prostate cancer detection on bi-parametric MRI: minimum training data size requirements and effect of prior knowledge. Eur Radiol. 2022;32:2224–2234. doi: 10.1007/s00330-021-08320-y.

- 16. Li HY, Lee CH, Chia D, Lin ZP, Huang WM, Tan CH. Machine learning in prostate MRI for prostate cancer: current status and future opportunities. Diagnostics (Basel). 2022;12:289. doi: 10.3390/diagnostics12020289.

- 17. Quantification of Uncertainties in Biomedical Image Quantification Challenge. Grand Challenge; 2021.

- 18. Litjens G, Debats O, Barentsz J, Karssemeijer N, Huisman H. PROSTATEx challenge data. The Cancer Imaging Archive; 2017. Available via https://wiki.cancerimagingarchive.net/pages/viewpage.action?pageId=23691656. Accessed 30 May 2022. doi: 10.7937/K9TCIA.2017.MURS5CL.

- 19. Cuocolo R, Stanzione A, Castaldo A, De Lucia DR, Imbriaco M. PROSTATEx masks. GitHub; 2021. Available via https://github.com/rcuocolo/PROSTATEx_masks. Accessed 30 May 2022.

- 20. Choyke P, Turkbey B, Pinto P, Merino M, Wood B. Data From PROSTATE-MRI. The Cancer Imaging Archive; 2016. Available via https://wiki.cancerimagingarchive.net/display/Public/PROSTATE-MRI. Accessed 30 May 2022. doi: 10.7937/K9/TCIA.2016.6046GUDv.

- 21. Madabhushi A, Feldman M. Fused radiology-pathology prostate dataset. The Cancer Imaging Archive; 2016. Available via https://wiki.cancerimagingarchive.net/display/Public/Prostate+Fused-MRI-Pathology. Accessed 30 May 2022. doi: 10.7937/K9/TCIA.2016.TLPMR1AM.

- 22. Litjens G, Futterer J, Huisman H. Data From Prostate-3T. The Cancer Imaging Archive; 2015. Available via https://wiki.cancerimagingarchive.net/display/Public/Prostate-3T. Accessed 30 May 2022. doi: 10.7937/K9/TCIA.2015.QJTV5IL5.

- 23. Lemaitre G, Marti R, Meriaudeau F. Original multi-parametric MRI images of prostate. Zenodo; 2016.

- 24. Zuley ML, Jarosz R, Drake BF, et al. Radiology data from the Cancer Genome Atlas Prostate Adenocarcinoma [TCGA-PRAD] collection. The Cancer Imaging Archive; 2016.

- 25. Bloch BN, Jain A, Jaffe CC. Data From PROSTATE-DIAGNOSIS. The Cancer Imaging Archive; 2015.

- 26. Natarajan S, Priester A, Margolis D, Huang J, Marks L. Prostate MRI and ultrasound with pathology and coordinates of tracked biopsy (Prostate-MRI-US-Biopsy). The Cancer Imaging Archive; 2020.

- 27. Fedorov A, Tempany C, Mulkern R, Fennessy F. Data From QIN PROSTATE. The Cancer Imaging Archive; 2016.

- 28. Fedorov A, Schwier M, Clunie D, et al. Data From QIN-PROSTATE-Repeatability. The Cancer Imaging Archive; 2018.

- 29. Armato SG 3rd, Huisman H, Drukker K, et al. PROSTATEx Challenges for computerized classification of prostate lesions from multiparametric magnetic resonance images. J Med Imaging (Bellingham). 2018;5:044501. doi: 10.1117/1.JMI.5.4.044501.

- 30. Bloch N, Madabhushi A, Huisman H, et al. NCI-ISBI 2013 challenge: automated segmentation of prostate structures. The Cancer Imaging Archive; 2015.

- 31. NCI-ISBI 2013 Challenge - Automated Segmentation of Prostate Structures. The Cancer Imaging Archive; 2015. Available via https://wiki.cancerimagingarchive.net/display/Public/NCI-ISBI+2013+Challenge+-+Automated+Segmentation+of+Prostate+Structures. Accessed 30 May 2022.

- 32. PROMISE12 Grand Challenge. Grand Challenge; 2012.

- 33. Litjens G, Toth R, van de Ven W, et al. Evaluation of prostate segmentation algorithms for MRI: the PROMISE12 challenge. Med Image Anal. 2014;18:359–373. doi: 10.1016/j.media.2013.12.002.

- 34. Antonelli M, Reinke A, Bakas S, et al. The Medical Segmentation Decathlon. arXiv:2106.05735; 2021. doi: 10.48550/ARXIV.2106.05735.

- 35. Simpson AL, Antonelli M, Bakas S, et al. A large annotated medical image dataset for the development and evaluation of segmentation algorithms. The Medical Segmentation Decathlon; 2019.

- 36. Jolesz F. NCIGT_PROSTATE. XNAT; 2009.

- 37. The PI-CAI Challenge. Grand Challenge; 2020.

- 38. Saha A, Twilt JJ, Bosma JS, et al. Artificial Intelligence and Radiologists at Prostate Cancer Detection in MRI: The PI-CAI Challenge. Zenodo; 2022.

- 39. Prostate158 Challenge. Grand Challenge; 2022. Available via https://prostate158.grand-challenge.org/data. Accessed 2 June 2022. doi: 10.5281/zenodo.6481141.

- 40. Aryanto KY, Oudkerk M, van Ooijen PM. Free DICOM de-identification tools in clinical research: functioning and safety of patient privacy. Eur Radiol. 2015;25:3685–3695. doi: 10.1007/s00330-015-3794-0.

- 41. Kirby J, Smith K. Submission and De-identification Overview. The Cancer Imaging Archive; 2022.

- 42. Twilt JJ, van Leeuwen KG, Huisman HJ, Futterer JJ, de Rooij M. Artificial Intelligence Based Algorithms for Prostate Cancer Classification and Detection on Magnetic Resonance Imaging: A Narrative Review. Diagnostics (Basel). 2021;11:959. doi: 10.3390/diagnostics11060959.

- 43. Isensee F, Jaeger PF, Kohl SAA, Petersen J, Maier-Hein KH. nnU-Net: a self-configuring method for deep learning-based biomedical image segmentation. Nat Methods. 2021;18:203–211. doi: 10.1038/s41592-020-01008-z.

- 44. Calisto MB, Lai-Yuen SK. EMONAS-Net: Efficient multiobjective neural architecture search using surrogate-assisted evolutionary algorithm for 3D medical image segmentation. Artif Intell Med. 2021;119:102154. doi: 10.1016/j.artmed.2021.102154.

- 45. Mehralivand S, Yang D, Harmon SA, et al. A cascaded deep learning-based artificial intelligence algorithm for automated lesion detection and classification on biparametric prostate magnetic resonance imaging. Acad Radiol. 2021;28:S1076-6332(21)00377-9. doi: 10.1016/j.acra.2021.08.019.

- 46. Pellicer-Valero OJ, Marenco Jimenez JL, Gonzalez-Perez V, et al. Deep learning for fully automatic detection, segmentation, and Gleason grade estimation of prostate cancer in multiparametric magnetic resonance images. Sci Rep. 2022;12:2975. doi: 10.1038/s41598-022-06730-6.

- 47. Vente C, Vos P, Hosseinzadeh M, Pluim J, Veta M. Deep learning regression for prostate cancer detection and grading in bi-parametric MRI. IEEE Trans Biomed Eng. 2021;68:374–383. doi: 10.1109/TBME.2020.2993528.

- 48. Bosma JS, Saha A, Hosseinzadeh M, Slootweg I, de Rooij M, Huisman H. Annotation-efficient cancer detection with report-guided lesion annotation for deep learning-based prostate cancer detection in bpMRI. arXiv:2112.05151; 2021. doi: 10.48550/arXiv.2112.05151.

- 49. ProCAncer-I: An AI Platform integrating imaging data and models, supporting precision care through prostate cancer’s continuum. Available via https://www.procancer-i.eu. Accessed 30 May 2022.

- 50. Haas S, Wohlgemuth S, Echizen I, Sonehara N, Muller G. Aspects of privacy for electronic health records. Int J Med Inform. 2011;80:e26–e31. doi: 10.1016/j.ijmedinf.2010.10.001.

- 51. Phillips M. International data-sharing norms: from the OECD to the General Data Protection Regulation (GDPR). Hum Genet. 2018;137:575–582. doi: 10.1007/s00439-018-1919-7.

- 52. OPTIMA: Tackling Cancer through Real World Data and Artificial Intelligence. Available via https://www.optima-oncology.eu. Accessed 30 May 2022.

- 53. McMahan HB, Moore E, Ramage D, Hampson S, Agüera y Arcas B. Communication-efficient learning of deep networks from decentralized data. arXiv:1602.05629; 2016. doi: 10.48550/arXiv.1602.05629.

- 54. Kairouz P, McMahan HB, Avent B, et al. Advances and open problems in federated learning. Found Trends Machine Learn. 2021;14:1–210. doi: 10.1561/2200000083.

- 55. Flower: A friendly federated learning framework. Available via https://flower.dev. Accessed 30 May 2022.

- 56. FedML: The Federated Learning/Analytics and Edge AI Platform. Available via https://fedml.ai. Accessed 30 May 2022.

- 57. Syft+Grid: Code for computing on data you do not own and cannot see. GitHub. Available via https://github.com/OpenMined/PySyft. Accessed 30 May 2022.

- 58. Sarma KV, Harmon S, Sanford T, et al. Federated learning improves site performance in multicenter deep learning without data sharing. J Am Med Inform Assoc. 2021;28:1259–1264. doi: 10.1093/jamia/ocaa341.

- 59. AI for Radiology: an implementation guide. Grand Challenge. Available via https://grand-challenge.org/aiforradiology/?subspeciality=All&modality=All&ce_under=All&ce_%20class=All&fda_class=All&sort_by=ce+certification&search=prostate. Accessed 30 May 2022.

- 60. Winkel DJ, Tong A, Lou B, et al. A novel deep learning based computer-aided diagnosis system improves the accuracy and efficiency of radiologists in reading biparametric magnetic resonance images of the prostate: results of a multireader, multicase study. Investig Radiol. 2021;56:605–613. doi: 10.1097/RLI.0000000000000780.

- 61. van Leeuwen KG, Schalekamp S, Rutten MJCM, van Ginneken B, de Rooij M. Artificial intelligence in radiology: 100 commercially available products and their scientific evidence. Eur Radiol. 2021;31:3797–3804. doi: 10.1007/s00330-021-07892-z.

- 62. Castillo TJMC, Arif M, Niessen WJ, Schoots IG, Veenland JF. Automated classification of significant prostate cancer on MRI: a systematic review on the performance of machine learning applications. Cancers (Basel). 2020;12:1606. doi: 10.3390/cancers12061606.

- 63. Tataru OS, Vartolomei MD, Rassweiler JJ, et al. Artificial intelligence and machine learning in prostate cancer patient management-current trends and future perspectives. Diagnostics (Basel). 2021;11:354. doi: 10.3390/diagnostics11020354.

- 64. NVIDIA Clara Imaging. NVIDIA. Available via https://developer.nvidia.com/clara-medical-imaging. Accessed 30 May 2022.

- 65. Grand Challenge Algorithms. Grand Challenge. Available via https://grand-challenge.org/algorithms. Accessed 30 May 2022.

- 66. FeTS: Federated Tumor Segmentation. GitHub. Available via https://fets-ai.github.io/Front-End. Accessed 30 May 2022.

- 67. Montagne S, Hamzaoui D, Allera A, et al. Challenge of prostate MRI segmentation on T2-weighted images: inter-observer variability and impact of prostate morphology. Insights Imaging. 2021;12:71. doi: 10.1186/s13244-021-01010-9.

- 68. Zheng B, Liu Y, Zhu Y, et al. MSD-Net: multi-scale discriminative network for COVID-19 lung infection segmentation on CT. IEEE Access. 2020;8:185786–185795. doi: 10.1109/ACCESS.2020.3027738.

- 69. McCrindle B, Zukotynski K, Doyle TE, Noseworthy MD. A Radiology-focused Review of Predictive Uncertainty for AI Interpretability in Computer-assisted Segmentation. Radiol Artif Intell. 2021;3:e210031. doi: 10.1148/ryai.2021210031.

- 70. Hu S, Worrall D, Knegt S, Veeling B, Huisman H, Welling M. Supervised uncertainty quantification for segmentation with multiple annotations. arXiv:1907.01949; 2019. doi: 10.48550/arXiv.1907.01949.

- 71. Schelb P, Wang X, Radtke JP, et al. Simulated clinical deployment of fully automatic deep learning for clinical prostate MRI assessment. Eur Radiol. 2021;31:302–313. doi: 10.1007/s00330-020-07086-z.

- 72. Salembier C, Villeirs G, De Bari B, et al. ESTRO ACROP consensus guideline on CT- and MRI-based target volume delineation for primary radiation therapy of localized prostate cancer. Radiother Oncol. 2018;127:49–61. doi: 10.1016/j.radonc.2018.01.014.

- 73. Wang YF, Tadimalla S, Hayden AJ, Holloway L, Haworth A. Artificial intelligence and imaging biomarkers for prostate radiation therapy during and after treatment. J Med Imaging Radiat Oncol. 2021;65:612–626. doi: 10.1111/1754-9485.13242.

- 74. Salim M, Wahlin E, Dembrower K, et al. External evaluation of 3 commercial artificial intelligence algorithms for independent assessment of screening mammograms. JAMA Oncol. 2020;6:1581–1588. doi: 10.1001/jamaoncol.2020.3321.

- 75. Venkadesh KV, Setio AAA, Schreuder A, et al. Deep learning for malignancy risk estimation of pulmonary nodules detected at low-dose screening CT. Radiology. 2021;300:438–447. doi: 10.1148/radiol.2021204433.

- 76. Hoeks CMA, Barentsz JO, Hambrock T, et al. Prostate cancer: multiparametric MR imaging for detection, localization, and staging. Radiology. 2011;261:46–66. doi: 10.1148/radiol.11091822.

- 77. Giganti F, Allen C, Emberton M, Moore CM, Kasivisvanathan V, PRECISION Study Group. Prostate imaging quality (PI-QUAL): a new quality control scoring system for multiparametric magnetic resonance imaging of the prostate from the PRECISION trial. Eur Urol Oncol. 2020;3:615–619. doi: 10.1016/j.euo.2020.06.007.

- 78. Sorland KI, Sunoqrot MRS, Sandsmark E, et al. Pseudo-T2 mapping for normalization of T2-weighted prostate MRI. Magn Reson Mater Phy. 2022. doi: 10.1007/s10334-022-01003-9.

- 79. Sunoqrot MRS, Nketiah GA, Selnaes KM, Bathen TF, Elschot M. Automated reference tissue normalization of T2-weighted MR images of the prostate using object recognition. Magn Reson Mater Phy. 2021;34:309–321. doi: 10.1007/s10334-020-00871-3.

- 80. Sunoqrot MRS, Selnaes KM, Sandsmark E, et al. A quality control system for automated prostate segmentation on T2-weighted MRI. Diagnostics (Basel). 2020;10:714. doi: 10.3390/diagnostics10090714.
