Figure 3:

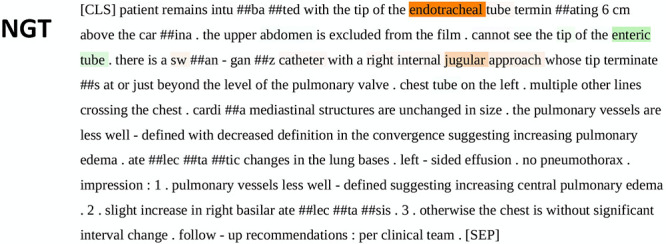

Example of “sequence classification explainer” from PubMedBERT. This figure demonstrates the process of “tokenization,” an automatic process that occurs after unprocessed text is introduced to the model. Words represented by the pretrained model’s “vocabulary” are maintained in their entirety, while those not in the model vocabulary are broken down into fragments that do exist in the vocabulary. These fragments are annotated with preceding “##.” Though the fragments do not hold real meaning, the model learns what combinations of the fragments mean through training in context of the text despite absence of these terms in the model’s vocabulary. Words are highlighted and color-coded per their positive (green), neutral (white), or negative (red) impact on the given task. The resulting saliency map provides insight and context for the model’s probability output. Highlighted annotation of certain words indicates that the model provided attention over a certain threshold to those words before providing an output. BERT = bidirectional encoder representations from transformers, NGT = enterogastric tube.