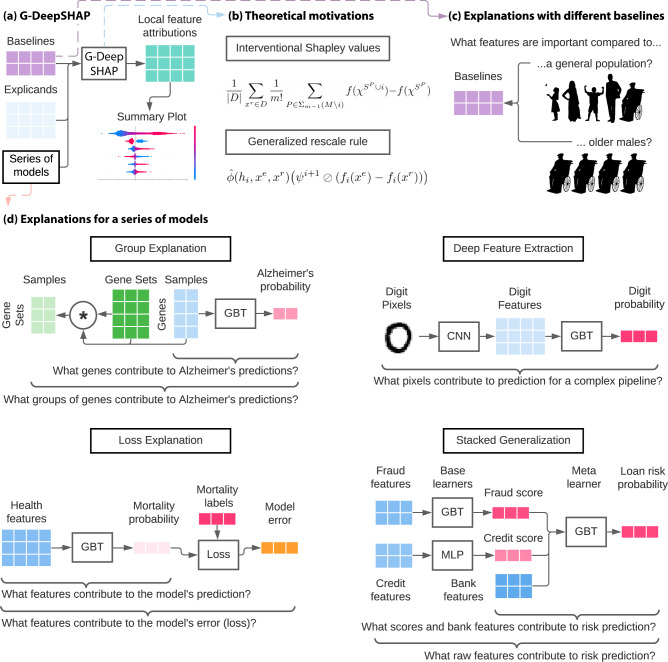

Fig. 1. G-DeepSHAP estimates Shapley value feature attributions to explain a series of models using a baseline distribution.

a Local feature attributions with G-DeepSHAP require explicands (samples being explained), a baseline distribution (samples being compared to), and a model that is composed of a series of models. The attributions can be visualized to understand model behavior (Supplementary Methods Section 1.3). b Theoretical motivation behind G-DeepSHAP (Methods sections "The Shapley value" and "A generalized rescale rule to explain a series of models"). c The baseline distribution is an important but often overlooked parameter that changes the scientific question implicit in the local feature attributions we obtain. d Explaining a series of models enables us to explain groups of features, model loss, and complex pipelines of models (deep feature extraction and stacked generalization). Experimental setups are described in Supplementary Methods Section 1.2.
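Panels a and c say an attribution depends on three inputs: the explicand, the baseline distribution, and the model. A brute-force sketch can make that dependence concrete. It assumes that features outside a coalition take their baseline values, and that an attribution against a baseline distribution is the average of single-baseline attributions. It enumerates every coalition exactly, so it only scales to a handful of features. It is not G-DeepSHAP's generalized rescale rule, and the function names are hypothetical.

```python
import itertools
import math

import numpy as np


def shapley_single_baseline(f, x, b):
    """Exact Shapley values of model f at explicand x against ONE baseline b.

    Assumption (illustrative): features not in coalition S keep their
    baseline values from b; features in S take their values from x.
    """
    d = len(x)
    phi = np.zeros(d)
    for i in range(d):
        others = [j for j in range(d) if j != i]
        for size in range(d):
            # Shapley weight |S|! (d - |S| - 1)! / d!
            w = math.factorial(size) * math.factorial(d - size - 1) / math.factorial(d)
            for S in itertools.combinations(others, size):
                z_without = np.array(b, dtype=float)
                for j in S:
                    z_without[j] = x[j]
                z_with = z_without.copy()
                z_with[i] = x[i]
                # Marginal contribution of feature i to coalition S
                phi[i] += w * (f(z_with) - f(z_without))
    return phi


def shapley_baseline_distribution(f, x, baselines):
    """Average the single-baseline attributions over a baseline distribution."""
    return np.mean([shapley_single_baseline(f, x, b) for b in baselines], axis=0)


# Toy model (hypothetical): a linear term plus an interaction.
def f(z):
    return 2 * z[0] + z[1] * z[2]


x = np.array([1.0, 2.0, 3.0])
baselines = np.array([[0.0, 0.0, 0.0], [1.0, 1.0, 1.0]])

phi = shapley_baseline_distribution(f, x, baselines)
# phi is [1.0, 2.5, 3.0]; it sums to f(x) - mean f(baselines) = 8 - 1.5 = 6.5
print(phi)
```

Changing `baselines` changes `phi` even though the model and the explicand are fixed, which is the point of panel c. Against the all-zeros baseline alone, the same explicand gets `[2.0, 3.0, 3.0]`. Against the all-ones baseline alone, it gets `[0.0, 2.0, 3.0]`. In both cases the attributions sum to `f(x)` minus the model's output at that baseline.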