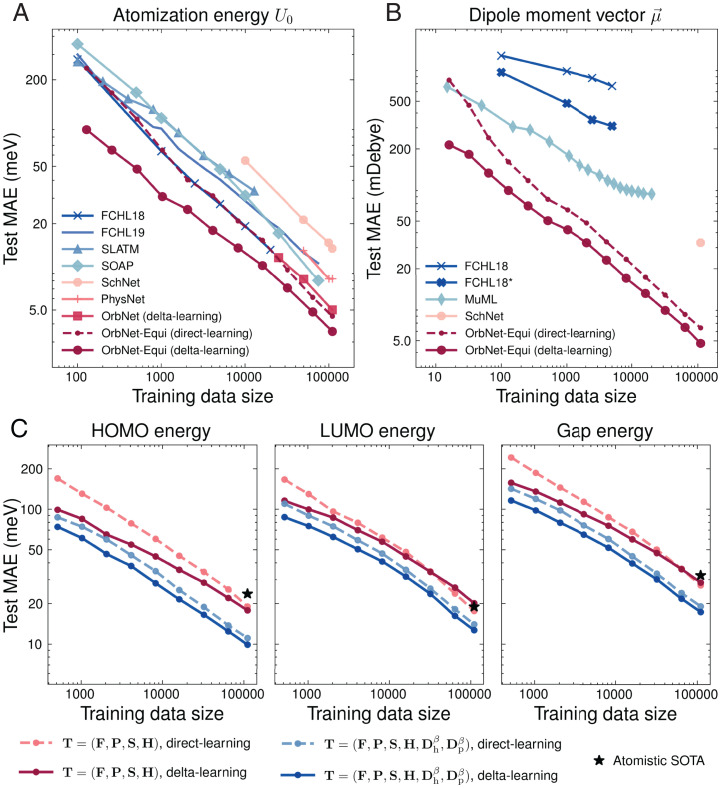

Fig. 3.

Model performance on the QM9 dataset. (A and B) Test MAEs of OrbNet-Equi are shown as functions of the number of training samples, along with previously reported results from task-specific ML methods [FCHL18 (49), FCHL19 (51), Spectrum of London and Axilrod-Teller-Muto (ATM) potential (98), Smooth Overlap of Atomic Positions (50), FCHL18* (51), MuML (52)] and deep learning–based methods [SchNet (13), PhysNet (14), OrbNet (26)] for the targets (A) electronic energy U0 and (B) molecular dipole moment vector. Results for OrbNet-Equi models trained with direct learning and delta learning are shown as dashed and solid lines, respectively. (C) Incorporating energy-weighted density matrices improves data efficiency when learning frontier orbital properties. The HOMO, LUMO, and HOMO–LUMO gap energy test MAEs of OrbNet-Equi are shown as functions of the number of training samples. For models with the default feature set (red curves), the reduction in test MAE of delta-learning models relative to direct-learning models gradually diminishes as the training data size grows. The LUMO and gap energy MAE curves cross over at around 32,000 to 64,000 training samples; thereafter, direct-learning models outperform delta-learning models. In contrast, when the energy-weighted density matrix features are supplied (blue curves), the test MAE curves of the direct-learning and delta-learning models remain separated by a gap as the training data size is varied. The black stars indicate the lowest test MAEs achieved by atomistic ML methods [SphereNet (17)] trained with 110,000 samples.
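The direct-learning versus delta-learning comparison behind these curves can be illustrated with a minimal sketch. It is not the OrbNet-Equi model. It assumes synthetic data, a linear least-squares regressor as a stand-in learner, and a hypothetical cheap `baseline` value available for every sample. All function and variable names are illustrative. Direct learning fits the target itself; delta learning fits only the residual between the target and the baseline, then adds the baseline back at prediction time.

```python
import numpy as np

def mae(y_true, y_pred):
    """Mean absolute error between two 1-D arrays."""
    return float(np.mean(np.abs(np.asarray(y_true) - np.asarray(y_pred))))

def fit_linear(X, y):
    """Least-squares linear fit with a bias term (stand-in for a real model)."""
    A = np.hstack([X, np.ones((X.shape[0], 1))])
    w, *_ = np.linalg.lstsq(A, y, rcond=None)
    return w

def predict_linear(w, X):
    A = np.hstack([X, np.ones((X.shape[0], 1))])
    return A @ w

def learning_curve(X_tr, y_tr, base_tr, X_te, y_te, base_te, sizes):
    """Test MAE of direct and delta learning for each training-set size."""
    rows = []
    for n in sizes:
        # Direct learning: regress the target itself.
        w_direct = fit_linear(X_tr[:n], y_tr[:n])
        direct_mae = mae(y_te, predict_linear(w_direct, X_te))
        # Delta learning: regress (target - baseline), add the baseline back.
        w_delta = fit_linear(X_tr[:n], y_tr[:n] - base_tr[:n])
        delta_mae = mae(y_te, base_te + predict_linear(w_delta, X_te))
        rows.append((n, direct_mae, delta_mae))
    return rows

# Synthetic data (assumption): the baseline is a nonlinear function that the
# linear learner cannot represent; the target differs from it by a small
# linear correction plus noise.
rng = np.random.default_rng(0)
X = rng.normal(size=(1200, 8))
baseline = np.sum(X**2, axis=1)
target = baseline + 0.1 * (X @ rng.normal(size=8)) + 0.01 * rng.normal(size=1200)

X_tr, X_te = X[:1000], X[1000:]
y_tr, y_te = target[:1000], target[1000:]
b_tr, b_te = baseline[:1000], baseline[1000:]

rows = learning_curve(X_tr, y_tr, b_tr, X_te, y_te, b_te, sizes=[50, 200, 1000])
for n, direct_mae, delta_mae in rows:
    print(f"n={n:5d}  direct MAE={direct_mae:.4f}  delta MAE={delta_mae:.4f}")
```

In this toy setup the residual is easy to learn, so the delta-learning error stays below the direct-learning error at every training size. Whether that holds for a real model depends on how well the learner can represent the target on its own, which is the effect the cross-over in panel C describes.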