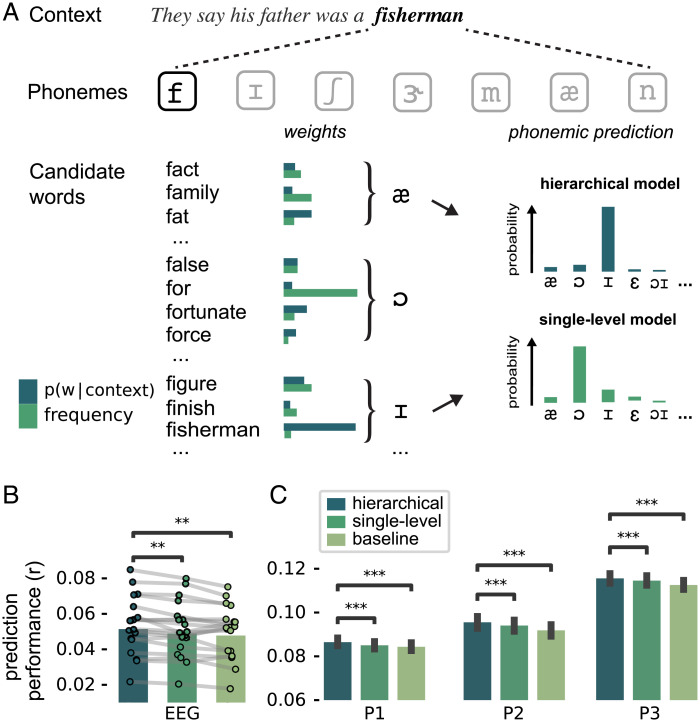

Fig. 6.

Evidence for hierarchical inference during phoneme prediction. (A) Two models of phoneme prediction during incremental word recognition. Phonemic predictions were computed by grouping candidate words by their identifying next phoneme and weighting each candidate word by its prior probability. This weight (or prior) could be based either on a word’s overall probability of occurrence (i.e., frequency) or on its conditional probability in that context (from GPT-2). Critically, in the frequency-based model, phoneme predictions are based on a single level: short sequences of within-word phonemes (hundreds of milliseconds long) plus a fixed prior. By contrast, in the contextual model, predictions are based not just on short sequences of phonemes but also on a contextual prior that is itself based on long sequences of prior words (up to minutes long), rendering the model hierarchical (see Materials and Methods). (B and C) Model comparison results in EEG (B) and all MEG participants (C). EEG: dots with connecting lines represent individual participants (averaged over all channels). MEG: bars represent the median across runs; error bars represent bootstrapped absolute deviance (averaged over language network sources). Significance levels correspond to P < 0.01 (**) or P < 0.001 (***) in a two-tailed paired t test or Wilcoxon signed-rank test.
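The phoneme prediction step described in (A) can be illustrated with a minimal sketch. It is not the authors' implementation: the function name `next_phoneme_distribution`, the toy lexicon, and both sets of prior values are hypothetical, and the "contextual" numbers are made-up stand-ins for the conditional probabilities a language model such as GPT-2 would supply. The sketch keeps only the candidate words consistent with the phonemes heard so far, groups them by their next phoneme, and sums each group's prior probability before normalizing.

```python
from collections import defaultdict


def next_phoneme_distribution(lexicon, heard, prior):
    """Predict the next phoneme from the candidate words still compatible
    with the phonemes heard so far, each weighted by its prior probability.

    lexicon: dict mapping word -> tuple of phonemes (hypothetical toy data)
    heard:   tuple of phonemes heard so far within the current word
    prior:   dict mapping word -> prior probability (frequency or contextual)
    """
    n = len(heard)
    mass = defaultdict(float)
    for word, phonemes in lexicon.items():
        # Keep candidates that match the heard prefix and still have a next phoneme.
        if len(phonemes) > n and tuple(phonemes[:n]) == tuple(heard):
            # Group candidates by next phoneme and accumulate their prior weight.
            mass[phonemes[n]] += prior.get(word, 0.0)
    total = sum(mass.values())
    if total == 0:
        return {}
    # Normalize so the predictions form a probability distribution.
    return {phoneme: m / total for phoneme, m in mass.items()}


# Hypothetical toy lexicon (ARPAbet-like symbols) and made-up priors.
lexicon = {
    "cat": ("K", "AE", "T"),
    "cap": ("K", "AE", "P"),
    "can": ("K", "AE", "N"),
    "dog": ("D", "AO", "G"),
}
frequency_prior = {"cat": 0.3, "cap": 0.1, "can": 0.4, "dog": 0.2}
# Stand-in for contextual probabilities, e.g. after "the mouse was chased by the".
contextual_prior = {"cat": 0.7, "cap": 0.05, "can": 0.05, "dog": 0.2}

heard = ("K", "AE")
freq_pred = next_phoneme_distribution(lexicon, heard, frequency_prior)
ctx_pred = next_phoneme_distribution(lexicon, heard, contextual_prior)
```

With the same phoneme input, the two priors yield different predictions: under the frequency prior the most likely next phoneme is "N", whereas under the illustrative contextual prior it is "T". This is the sense in which only the contextual model lets information from the preceding words shape phoneme-level predictions.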