Abstract

Background

Platform trials can evaluate the efficacy of several experimental treatments compared to a control. The number of experimental treatments is not fixed, as arms may be added or removed as the trial progresses. Platform trials are more efficient than independent parallel group trials because of using shared control groups. However, for a treatment entering the trial at a later time point, the control group is divided into concurrent controls, consisting of patients randomised to control when that treatment arm is in the platform, and non-concurrent controls, patients randomised before. Using non-concurrent controls in addition to concurrent controls can improve the trial’s efficiency by increasing power and reducing the required sample size, but can introduce bias due to time trends.

Methods

We focus on a platform trial with two treatment arms and a common control arm. Assuming that the second treatment arm is added at a later time, we assess the robustness of recently proposed model-based approaches to adjust for time trends when utilizing non-concurrent controls. In particular, we consider approaches where time trends are modeled either as linear in time or as a step function, with steps at time points where treatments enter or leave the platform trial. For trials with continuous or binary outcomes, we investigate the type 1 error rate and power of testing the efficacy of the newly added arm, as well as the bias and root mean squared error of treatment effect estimates under a range of scenarios. In addition to scenarios where time trends are equal across arms, we investigate settings with different time trends or time trends that are not additive in the scale of the model.

Results

A step function model, fitted on data from all treatment arms, gives increased power while controlling the type 1 error, as long as the time trends are equal for the different arms and additive on the model scale. This holds even if the shape of the time trend deviates from a step function when patients are allocated to arms by block randomisation. However, if time trends differ between arms or are not additive to treatment effects in the scale of the model, the type 1 error rate may be inflated.

Conclusions

The efficiency gained by using step function models to incorporate non-concurrent controls can outweigh potential risks of biases, especially in settings with small sample sizes. Such biases may arise if the model assumptions of equality and additivity of time trends are not satisfied. However, the specifics of the trial, scientific plausibility of different time trends, and robustness of results should be carefully considered.

Supplementary Information

The online version contains supplementary material available at (10.1186/s12874-022-01683-w).

Keywords: Adding arms, Non-concurrent controls, Platform trials

Background

Platform trials are multi-arm trials that allow new experimental treatment arms to enter and leave the trial over time [1, 2]. Such trials can improve the statistical efficiency compared to separate trials because of the flexible features such as stopping treatments early for futility or efficacy, adding new treatments to be tested during the course of the trial, and sharing control groups [3]. One controversy in the analysis of such trials is the use of non-concurrent controls in treatment versus control comparisons. For an experimental treatment that enters the platform trial at a later time point, we denote control patients who were recruited before the experimental treatment entered the platform as non-concurrent controls and patients who are recruited to the control when the experimental treatment is part of the platform as concurrent controls for the specific treatment arm. Unadjusted treatment-control comparisons that pool concurrent with non-concurrent controls can be biased if there are time trends in the control data, e.g., due to a change in standard of care, change in the patient population, or other external changes such as seasonal effects or a pandemic [4–8].

As two hypothetical trials where non-concurrent controls could be incorporated in the analysis, consider the settings of platform trials in depression and non-alcoholic steatohepatitis with a continuous or binary outcome, respectively.

Platform trial for Major Depressive Disorder. Major Depressive Disorder (MDD) is a common psychiatric disorder and is a leading cause of burden of disease and years lost due to disability worldwide [9]. While safe and effective pharmacological treatments for depression are available, they only achieve sufficient symptom reductions in about half of the patients [10]. Importantly, the major drug classes in this area were discovered and developed decades ago and there have been very few successful attempts to develop novel drugs since. More recently, however, a number of candidate drugs with different putative mechanisms of action have been evaluated in pre-clinical and early stage clinical studies. Thus, platform trials that allow for assessing multiple medications simultaneously have the potential to substantially speed up drug development in this area [11]. Blinding is particularly critical in this indication because of the types of outcomes used (e.g. interview-based ratings and patient-reported outcomes), expectation bias and typically large placebo responses in MDD trials. Consider a platform trial in MDD where experimental treatment arms are compared with a common control. The primary endpoint is the change in a continuous clinical rating scale, such as the Montgomery-Åsberg Depression Rating Scale (MADRS), from baseline to six weeks post randomization. To reduce the sample size and the number of patients assigned to the control group, the use of non-concurrent controls could be considered. However, time trends in the placebo response cannot be excluded in this setting. A specific source of such time trends can be expectation bias, which may vary, depending on the allocation probability to control and the number and type of treatments currently in the platform. Especially, if the allocation probability to control is low and treatments that are perceived as very promising enter the platform, the expectation bias may increase. Therefore, adjusting for potential time trends would be essential to obtain robust results.

Platform trial for Non-Alcoholic Steatohepatitis. NonAlcoholic Steatohepatitis (NASH) is currently an area of high unmet medical need with no approved therapies in Europe and the United States [12]. To facilitate and accelerate the identification of the most effective and promising novel treatment options for participants with NASH, multiple potential novel therapies and combinations thereof can be tested in platform trials. As example consider a Phase 2b platform trial where the primary efficacy endpoint is a binary endpoint indicating whether the patient responded or not to treatment at 48 weeks. As the determination of response requires paired biopsies at baseline and at the end of the study, there is a high patient burden and limiting the sample size is important, especially from a patients perspective. Therefore, the use of non-concurrent controls can be considered in the analyses to reduce the number of patients required in the clinical trial. However, also in this setting time trends can occur, e.g., due to changes in the patient population or standard of care, but also due to variability in the assessment of the endpoint [13].

Lee and Wason [14] investigated linear regression models to estimate the treatment effect of interest in trials with continuous data that include a factor corresponding to time to adjust for potential time trends. They demonstrated in a simulation study that using regression models that adjust for time trends by means of a step-wise function leads to unbiased tests, even if the true time trend is linear rather than step-wise. This holds if the linear regression model is fitted to data of the tested experimental treatment group and the control group as well as to the data of all other trial arms. However, only if the model is fit based on data of all trial arms, the inclusion of non-concurrent controls improves the power compared to an analysis using concurrent controls only.

In this article, we derive the conditions under which model-based time trend adjustments control the type 1 error rate and increase the statistical power for treatment-control comparisons. In particular, we consider the simple setting of a two-period platform trial that starts with a single treatment and a control to which another treatment arm is added in the second period.

We consider the regression model fitted with data from all arms and modelling a common step-wise time trend. For this model we show that treatment effect estimates are unbiased if the time trends in all arms are equal and additive in the model scale. This holds, regardless of the specific functional form of the time trends. For the associated hypothesis tests, we show that for binary endpoints and logistic regression models they asymptotically control the type 1 error rate under the above assumptions. For continuous data analysed with the standard linear model, type 1 error control holds asymptotically, if block randomisation is used. We show that, under the above conditions, the model-based analysis incorporating non-concurrent controls increases the power of the trial as well as the precision of treatment effect estimates compared to analyses based on concurrent controls only.

We also investigate the properties of methods when time trends differ across treatment arms or are not additive on the model scale. We show through simulations that heterogeneous time trends across groups can lead to an inflation of the type 1 error rate and biased treatment effect estimates. Furthermore, for binary data and logistic regression models, where the validity of the testing and estimation procedures relies on the assumption of equal time trends on the log odds scale of the regression model, we demonstrate that equal time trends in other scales do not guarantee type 1 error control. In addition, we consider alternative regression models, as models allowing for different step-wise time trends between treatments, or regression models fitted with data of the tested treatment arm only. However, they only marginally increase the power of hypothesis tests (only due to larger degrees of freedom for linear models).

Objectives and requirements for confirmatory clinical trials (mainly phase III) and early phase trials are different. This extends to early and late phase platform trials. In spite of these differences, however, the issues we address in this paper are all related to the handling of potential time trends in the accumulating data. We chose to illustrate them using a framework of type 1 error control, but also investigate the bias in treatment effect estimates. These considerations are of different importance in different phases of drug development, but should not be ignored in any phase.

Methods

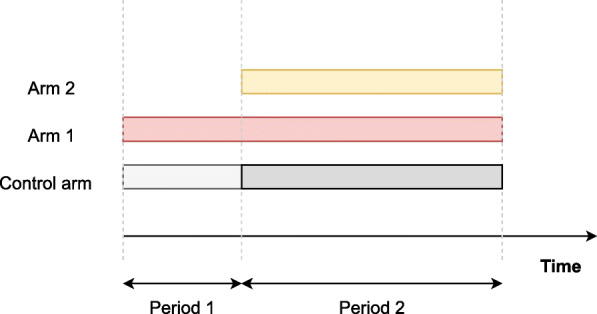

To capture the principles of the use of non-concurrent controls, it is sufficient to analyze a simplified model. We therefore consider a randomised, parallel group platform trial, initially comparing a single experimental treatment (k=1) to a control (k=0), as in [14]. After N1 patients have been recruited, a new experimental treatment arm (k=2) is added to the trial with the intention of comparing this new added arm 2 with the common control. Thus the trial is divided into two periods (s=1,2) before and after the addition of the new arm. See Fig. 1 for an illustration of the design. Furthermore, let N2 denote the total sample size in period 2 and N=N1+N2 the overall sample size.

Fig. 1.

Platform trial scheme. Example platform trial with initially a treatment group (arm 1) and a control group in period 1; and with a new treatment (arm 2) starting in period 2. Light grey represents non-concurrent controls with respect to the new treatment, dark grey represents concurrent controls

We focus on the hypothesis tests and treatment effect estimates comparing treatment 2 to control. We assess the impact of time trends on the validity of inference when incorporating non-concurrent controls according to different adjustment methods. We estimate and test the treatment effect of treatment 2 compared to control based on regression models where the time trend is modelled as a step function. We consider the regression model investigated in [14] for continuous data, fitted to data from all treatment arms and the control. In addition, we also investigate a model including an interaction between treatment and time period to allow for a different time trend in treatment arm 1 compared to the other groups. Furthermore, we investigate the extension to binary data. Let Yj denote the response (binary or continuous) for patient j, where j=1,...,N is the patient index corresponding to the order in which patients have been enrolled into the trial, and let kj be the treatment patient j received (kj=0,1,2).

Model-based time trend adjustments

To estimate the treatment effect of treatment 2 against the control, we fit a regression model, adjusting for time by a step function, based on data from all treatment arms

| 1 |

where η0 is the response in the control group in the first period, θk is the effect of treatment k compared to control, and ν denotes a step-wise time effect between periods 1 and 2. For continuous data, g(·) is the identity function and we fit a standard linear model as specified in (1) and perform the corresponding t-test to test the one-sided null hypothesis H02:θ2≤0 (assuming that larger values correspond to better outcomes). For binary data, g(·) is the logit link function and H02 is tested based on a logistic regression. Also note that the step-wise function, I(j>N1), amounts to a stratified model with time periods as strata and a common treatment effect across strata.

Model (1) fits a common time trend across all treatment arms. To relax this assumption, we consider an additional model that includes an interaction effect, allowing for a different effect of time in treatment arm 1 compared to control and treatment 2. The resulting model is given by

| 2 |

Because a common time trend in the control and treatment 2 is modelled, also in this model the treatment effect of treatment 2 is given by θ2. Also note that in the above models the effect of time on the response is assumed to be additive for continuous data, and multiplicative on the odds ratio scale for binary data.

Tests based on concurrent controls only and based on naively pooling controls

For comparative purposes, we additionally consider the pooled test that does not account for time trends and the test based on concurrent controls only comparing treatment 2 to control using a t-test for continuous and a logistic regression (with factor treatment) for binary data. We apply these tests using data of treatment 2 and pooling concurrent and non-concurrent controls (pooled analysis) or using concurrent control data only (separate analysis).

Results

In this section, we report results on the properties of the model based estimation and testing procedures based on model (1). In “Estimation under model-based period-wise adjustments” section, we rewrite the treatment effect estimator for treatment 2 of the linear model as a weighted sum of period-wise per-group means. Based on this representation, we derive the reduction in variance of this estimator compared to the estimator based on concurrent controls only.

In “Analytical results on the properties of estimators and hypothesis tests” section, we discuss further properties of the model-based estimators and tests under the assumption that the time trends are equal across treatment groups and additive on the model scale. We show that the model based treatment effect estimator is unbiased, even if the shape of the time effect is mis-specified and deviates from the step function. In addition, we give conditions on the randomisation or testing procedure, that guarantee control of the type 1 error rate of the model based hypothesis test.

Finally in “Evaluation of methods through simulations” section, we illustrate the procedures in a simulation study and quantify the potential inflation of type 1 error in the model based hypothesis tests if time trends are not equal across treatment groups or not additive on the model scale.

Testing and estimation in model-based approaches

Estimation under model-based period-wise adjustments

To illustrate how the models (1) and (2) estimate the treatment effect of treatment 2 compared to the control when adjusting for potential time trends, we write the regression model estimators as a weighted sum of the period-wise per-group estimates [14, 15]. Let nk,s and be the sample sizes and sample means of the observations per arm and period (k=0,1,2 and s=1,2). Thus, in our setting, nk,s≥1 except for n2,1=0, and . The estimate of θ2 is given by

with weights wk,s defined below. The derivation of the weights can be found in the supplementary material (Section A).

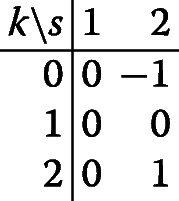

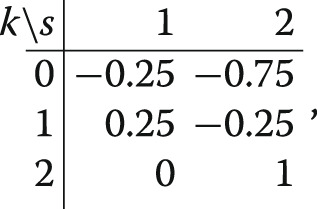

First note that for model (2) based on all data and including an interaction effect, the matrix wk,s is given by

so that , and therefore the non-concurrent controls do not contribute to the treatment effect estimator. Hence, we do not consider this model further in this section.

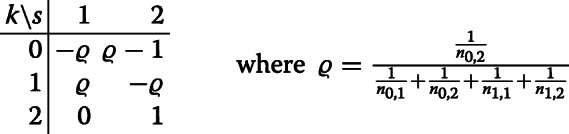

For model (1), based on data from all treatment arms and adjusting for time by a step function, the matrix of weights wk,s is given by

and thus

Thus, the treatment effect is estimated by the difference in the mean of treatment group 2 and a model-based estimate of the control response in period 2, given by

| 3 |

Note that this estimate is a weighted average of the mean control response in period 2 and the mean control response in period 1, adjusted by the time trend estimated from treatment 1. The weight of the non-concurrent controls is given by ϱ. The relative reduction in variance of this estimate compared to the estimator based on concurrent controls only is given by

Thus, reduction in variance is increasing in the number of non-concurrent control patients, in the number of concurrent patients on arm 1, and in the number of non-concurrent patients on arm 1. In particular, keeping the number of concurrent controls fixed and assuming that n0,1→∞,n1,1→∞, and n1,2→∞, we have ϱ→1. On the other hand, increasing the number of concurrent control patients but keeping the other sample sizes fixed we have ϱ→0 as n0,2→∞, and thus asymptotically no reduction takes place.

Consider now a specific example, with equal randomisation for arms 0 and 1 in each period, that is, n0,1=n1,1 and n0,2=n1,2, but possibly different for arm 2, so that n2,2≥1 is arbitrary. Then, the reduction in variance is and thus proportional to the proportion of non-concurrent controls over the total number of controls. Especially, the reduction is delimited above by 1/2 (as n0,1→∞). For instance, in the example n0,1=n0,2=n1,1=n1,2=n2,2/2 considered by [14] the weights and the treatment effect estimator are given by

and

The model based estimate of the period 2 control response has 25% lower variance than the estimator based on concurrent controls only. An example of how weights are defined in the case of equal randomisation also for arm 2, that is, n0,2=n1,2=n2,2, can be found in the supplementary material (Section B).

So what we are actually estimating when we use models in (1) and (2) is a weighted average of the treatment effect of treatment arm 2 over the control in period 2 and the treatment-time interactions between periods.

Analytical results on the properties of estimators and hypothesis tests

We derive the properties of tests and estimators of the linear and logistic regression model (1) under the assumption that time trends are equal across groups and additive on the model scale such that the data are generated according to the model

| 4 |

where Yj,g(),η0 and refer to the continuous or binary response, the link function (identity and logit functions for continuous or binary responses, respectively), the control response and treatment effects, respectively, as in (1) and (2). For continuous data, we furthermore assume that the error terms in the responses are identically and independently normally distributed with zero-mean and equal variances. The term f(·) represents the time trend function and tj is the calendar time when patient j is enrolled in the trial. Note that when f(·) is a step function with a step at the end of period 1, then model (1) is correct. Otherwise, (1) is a misspecified model because the functional form of the time trend is not correctly modelled.

For continuous data, the model estimate is an unbiased estimate for θ2, even under mis-specification of the time trend pattern, i.e., if f(·) is not a step function (see Section C in the supplementary material for a proof). Moreover, as shown in “Estimation under model-based period-wise adjustments” section, the model-based estimate based on all data reduces the variance of the treatment effect estimator as compared to separate analysis, and therefore leads to a gain in power. But, by inspection of (3) it is clear that if the time trend in treatment 1 differs from the trend in the control, the estimate will be biased.

As regards testing, for continuous endpoints and given the functional form of the time trend pattern is correctly specified, we first notice that the weight ϱ in (3) is chosen such that variance of is minimised. However, if the time trend pattern is mis-specified and simple randomisation or random allocation is used, the residual variances of the fitted model (1) will depend on the specific shape of the time trend in the data generating model (4). Especially, the residual variance may not be constant over time such that the overall variances in the two periods may differ. As the linear model assumes homoscedaticity, this may lead to an underestimation of the standard error of and therefore to a type 1 error rate inflation of the corresponding test. See Section F.1 in the supplementary material for an example, where the type 1 error rate is inflated.

In order to obtain unbiased testing procedures for trials with continuous endpoints, we propose two approaches. The first consists of choosing a randomisation procedure that controls the variability over time. We can achieve this by stratifying for calendar time in the randomisation process by means of block randomisation. Then, since patients randomised in the same block are enrolled approximately at the same calendar time, asymptotically, the time trend does not introduce additional variation in the estimate. Block randomisation is an equivalent of stratified randomisation, where one stratifies for an important risk factor. In this case, block randomisation coincides with stratifying randomisation by time intervals. However, it is well known that stratified randomisation followed by an analysis that does not adjust for the stratification factor, leads to an overestimation of the variance [16]. Thus, the resulting tests will be conservative. If the time trends are very strong, this may result in overly conservative tests. An alternative approach to this one is to allow for different variances between periods and estimate them separately.

On the other hand, when using binary endpoints, the variance estimators are consistent even under mis-specification of the trend pattern, f(·). So, in this case, the treatment effect estimators are asymptotically unbiased and so are the hypothesis tests.

Evaluation of methods through simulations

We conducted a simulation study to quantify the gains in efficiency and potential biases of the considered methods under a wide range of scenarios. We investigated settings where model assumptions are met, as well as settings with a mis-specified model where the time trend does not have a step-wise shape or the time trends differ between treatment arms. Especially, we investigated the performance of the aforementioned analysis approaches with respect to the type 1 error rate and statistical power for the test of H02:θ2=0, as well as the bias and mean squared error of the estimator of the effect of treatment 2, θ2.

Design

We simulated data of a platform trial as described in Section 2, considering the data generating model (4). We consider that patient index corresponds to the order in which patients are enrolled and that at each unit of time one and only one patient enters in the trial, so that, tj=j for j=1,...,N. We assume equal sample sizes per arm and allocations to arms 1:1 and 2:1:1 in periods 1 and 2, respectively. Furthermore, patients were assigned to arms following block randomisation, as proposed in “Analytical results on the properties of estimators and hypothesis tests” section. In the first period, we used a block size of 4, while, in the second, a block size of 12. We consider three patterns for the time trend function:

a linear time trend ;

a step-wise time trend ;

and an inverse-U time trend: ;

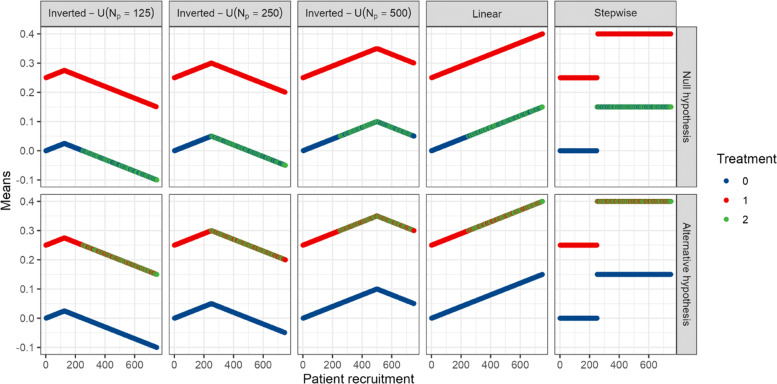

where quantifies the strength of the time trend in arm kj and I(·) the indicator function. Note that if takes different values depending on the treatment arm, then we have different time trends between arms. For the inverse-U time trend, Np denotes the point at which the trend turns from positive to negative. We consider three different values for this point, it can be either when half of the patients in period 1 have been recruited (Np=N1/2), after the patients were recruited in period 1 and before the start of the second period (Np=N1), or when half of the patients in period 2 have been recruited (Np=N1+N2/2). In the main manuscript, we show the results for the scenarios with Np=N1+N2/2. The results for Np=N1/2,N1 can be found in the supplementary material. Note that negative correspond to a U-shaped (instead of inverse-U shaped) trend. Figure 2 illustrates the changes of means according to these patterns. In the supplementary material we also show that considering random instead of fixed entry times tj does not noticeably change the results.

Fig. 2.

Means for continuous endpoints with respect to patient enrolment to the trial in the presence of equal time trends across arms, depending on the time trends’ pattern (linear, step-wise and inverted-U time trends) and for λ0=λ1=λ2=0.15

For treatment 1 and the control group the sample size per group was set to 125 in each period. The sample size for treatment 2 in period 2 is 250 (such that its sample size matches the overall sample size of treatment 1). For continuous endpoints, we assumed normally distributed residuals with equal variances σ2=1 across treatment arms, zero mean for control arm (η0=0), simulated trials under the null hypothesis, θ2=0, as well as the alternative hypothesis θ2=0.25. In both cases, we considered an effect of θ1=0.25 for treatment 1. For these effect sizes, the pooled t-test has 80% power at 2.5% one-sided significance level. For binary endpoints, we considered control response rates of 0.7, non-effect of treatment 2 under the null hypothesis θ2=0 (in terms of the odds ratio OR2=1) or a positive effect under the alternative hypothesis (OR2=1.8), and scenarios where treatment 1 has either a positive or a negative effect (i.e., θ1>0 and θ1<0, respectively). With the aforementioned configuration for these scenarios, the power to detect an odds ratio OR2=1.8 is 80% assuming a probability p0=0.7 at 2.5% significance level using the pooled z-test. Finally, we simulated the three time trends patterns mentioned before assuming equal time trends across all treatment groups (λk=λ, for k=0,1,2), and equal time trends between treatment 2 and control arms and different from treatment 1 arm (λ0=λ2≠λ1). We simulated 100,000 replicates for each scenario to obtain estimates of the type 1 error, power and bias of the treatment effect.

Simulation results

In settings where the time trends are equal across treatment arms (i.e., scenarios with λk=λ for k=0,1,2), all regression models that fit time trends as a step function control the type 1 error rate for the test of H02 and lead to unbiased effect estimates in the examples we investigated. This holds, if the true time trend is a step function as well as if it is linear or U-inverted. For the model without interaction term (1) this confirms the theoretical considerations in the above section as well as the simulation results of [14]. Furthermore, the test based on (1) leads to an improved power compared to the separate analysis using only concurrent controls (see Fig. S1 in the supplementary material). Similarly, for binary data we note that in settings where the time trends are equal across arms and given by (4), the tests and estimators of the corresponding logistic regression model control the error rate and we see an improved power compared to the separate analysis using only concurrent controls. (Figure S7 in the supplementary material.).

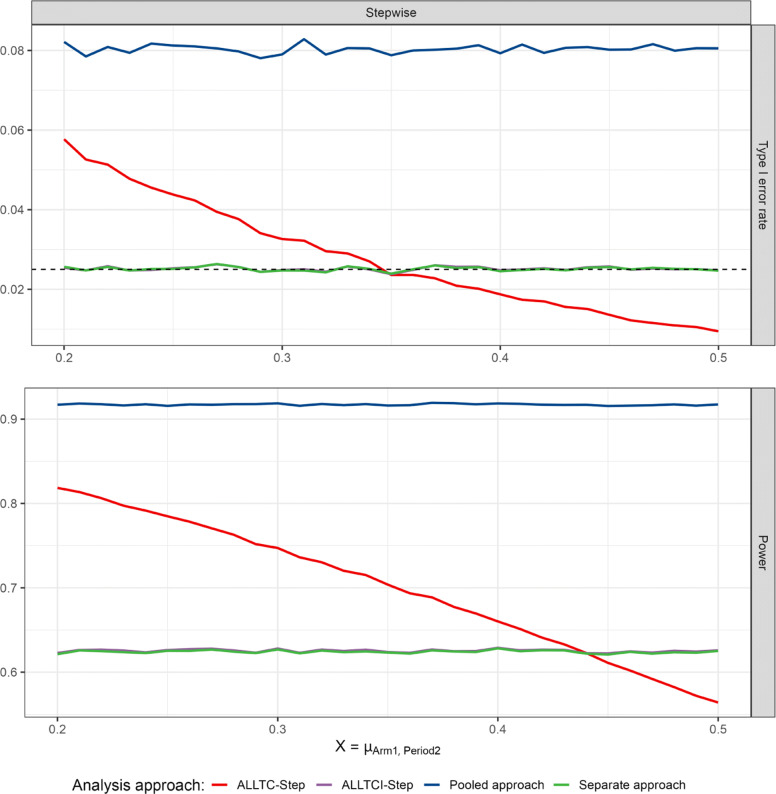

In contrast, if the time trend in treatment 1 differs from the trend in the control arm, control of the type 1 error is no longer guaranteed. For example, suppose a setting as in Table 1 where the mean outcome in the control arm increases by 0.1 from period 1 to period 2. Then, the type 1 error rate for the test of H02 depends on the increase in the mean outcome of treatment 1. Let us consider different values of the mean outcome of arm 1 in period 2 as in Fig. 3. If the increase in the mean outcome of treatment arm 1 from period 1 to period 2 is lower than in the control, the type 1 error rate may be substantially inflated, reaching type 1 error rates larger than 0.05%. If it is larger than 0.1 the procedure becomes strictly conservative, obtaining type 1 error rates below 0.01, and the power is adversely affected (see Fig. 3). Indeed, the treatment effect estimates will be positively or negatively biased depending on the time trend in treatment 1. Furthermore, if instead of step-wise time trends, the time trends’ patterns are linear or inversed-U, the same behaviour is observed, as is shown in Fig. 4 when varying the strength of the time trend in arm 1 and fix the time trends of control and arm 2.

Table 1.

Example for continuous endpoints. Mean responses for continuous endpoints according to the arm and the period in the presence of step-wise trends for scenarios presented in “Design” section and for λ0=λ2=0.1. Here X represents the mean response of arm 1 in period 2, which we vary in Fig. 3

| Period 1 | Period 2 | |||||

|---|---|---|---|---|---|---|

| Control | Arm 1 | Arm 2 | Control | Arm 1 | Arm 2 | |

| H0 | 0 | 0.25 | – | 0.1 | X | 0.1 |

| H1 | 0 | 0.25 | – | 0.1 | X | 0.35 |

Fig. 3.

Type 1 error rate and power of rejecting H02 for continuous endpoints in the presence of step-wise trends with respect to the mean in the treatment arm 1 in the second period and according to the model used (see “Methods” section) for scenarios presented in “Design” section and described in Table 1. ALLTC-step refers to models using all treatment data and control and adjusting for time by a step function (see (1)), ALLTCI to models using all treatment data and control with interaction between time and treatment arm and adjusting for time by a step function (see (2)), and pooled and separate approaches refer to t-tests comparing treatment 2 to control using concurrent and non-concurrent control data, and concurrent control data only, respectively. Note that lines for ALLTCI-Step and the separate approach are overlapping

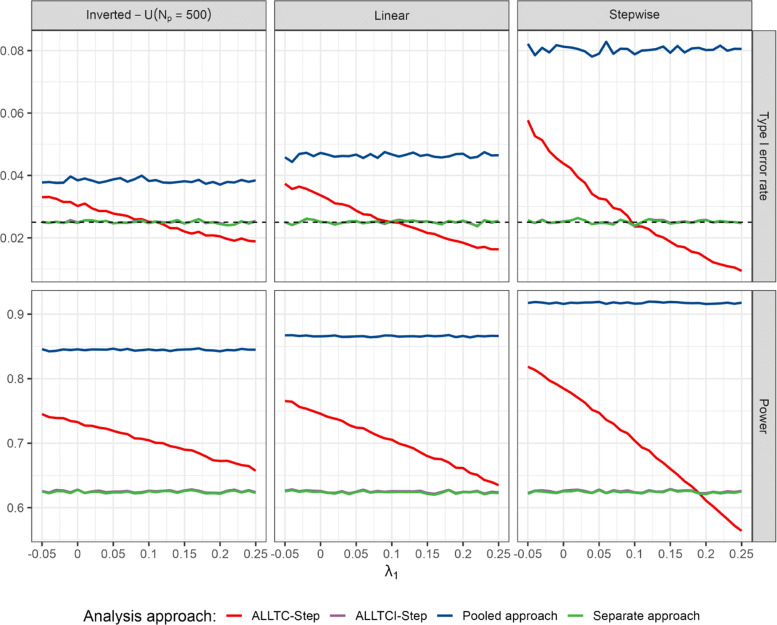

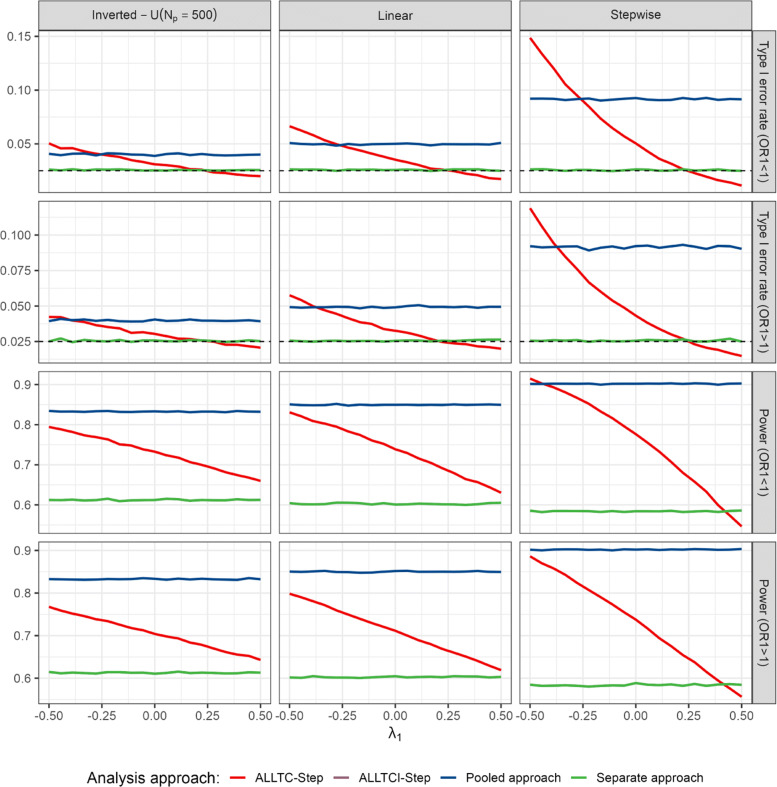

Fig. 4.

Type 1 error rate and power of rejecting H02 for continuous endpoints in the presence of linear, step-wise and inverted-U time trends (for λ0=λ2=0.1) with respect to the strength of the time trend in treatment arm 1 (λ1) according to the model used (see “Methods” section) for scenarios presented in “Design” section. ALLTC-step refers to models using all treatment data and control and adjusting for time by a step function (see (1)), ALLTCI refers to models using all treatment data and control with interaction between time and treatment arm and adjusting for time by a step function (see (2)), and pooled and separate approaches refer to t-tests comparing treatment 2 to control using concurrent and non-concurrent control data, and concurrent control data only, respectively. Lines for ALLTCI-Step and the separate approach are overlapping

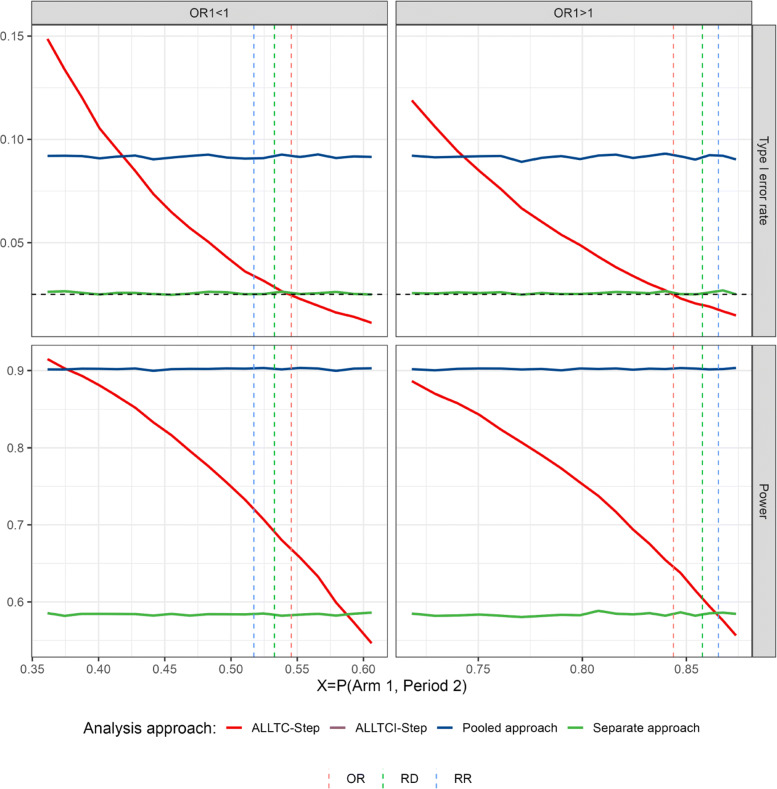

A similar result can be found for binary data when considering a fixed increment in the response rate from period 1 to period 2 of the control arm and varying the response rate of the treatment arm 1 assuming to have a beneficial effect (see Table 2). If the time trend in treatment 1 is smaller than in the control arm on a multiplicative odds ratio scale the type 1 error rate may be inflated. If the time trend is larger, the test becomes strictly conservative (and loses power) (see second column in Fig. 5). Note that this implies that - given for treatment 1 the alternative holds - when time trends are equal between control and treatment 1 on a risk ratio or risk difference scale (vertical lines in blue and green colors in Fig. 5, respectively), the type 1 error of the test for treatment 2 is not maintained.

Table 2.

Example for binary endpoints. Responses rates for binary endpoints according to the arm and the period in the presence of step-wise trends for scenarios presented in “Design” section with λ0=λ2=0.25. Here X represents the observed response rate of arm 1 in period 2, which we vary in Fig. 5. Arm 1 is assumed to have a beneficial effect in period 1, corresponding to OR1>1 (OR1=1.8)

| Period 1 | Period 2 | |||||

|---|---|---|---|---|---|---|

| Control | Arm 1 | Arm 2 | Control | Arm 1 | Arm 2 | |

| H0 | 0.7 | 0.81 | – | 0.75 | X | 0.75 |

| H1 | 0.7 | 0.81 | – | 0.75 | X | 0.84 |

Fig. 5.

Type 1 error rate and power of rejecting H02 for binary endpoints in the presence of step-wise trends (for λ0=λ2 and varying λ1) with respect to the response rate in the treatment arm 1 in the second period and according to the model used (see “Methods” section) for scenarios presented in “Design” section and described in Tables 2 and 3. Vertical lines refer to those situations when time trends are equal across arms on odds ratio (OR), risk difference (RD), and relative risk (RR) scales. ALLTC-step refers to models using all treatment data and control and adjusting for time by a step function (see (1)), ALLTCI refers to models using all treatment data and control with interaction between time and treatment arm and adjusting for time by a step function (see (2)), and pooled and separate approaches refer to logistic regression models without adjusting for time comparing treatment 2 to control using concurrent and non-concurrent control data, and concurrent control data only, respectively. Lines for ALLTCI-Step and the separate approach are overlapping

If we consider the same situation as above, but instead of having a beneficial effect in treatment 1, this shows a negative effect as compared to the control (see Table 3), the same behaviour is observed but inflation is much higher, even reaching levels above 0.10 (see first column in Fig. 5). Moreover, similar results are observed when trends are linear or inversed-U rather than piece-wise as shown in Fig. 6. Note, however, that since in our simulations the strength of the trend (λ1) takes the same value in all three patterns, it has a greater impact on the piece-wise trend, since changes are more gradual in the two cases with continuous time trends.

Table 3.

Example for binary endpoints. Responses rates for binary endpoints according to the arm and the period in the presence of step-wise trends for scenarios presented in “Design” section with λ0=λ2=0.25. Here X represents the observed response rate of arm 1 in period 2, which we vary in Fig. 5. Arm 1 is assumed to have a negative effect in period 1, corresponding to OR1<1 (OR1=0.4)

| Period 1 | Period 2 | |||||

|---|---|---|---|---|---|---|

| Control | Arm 1 | Arm 2 | Control | Arm 1 | Arm 2 | |

| H0 | 0.7 | 0.48 | – | 0.75 | X | 0.75 |

| H1 | 0.7 | 0.48 | – | 0.75 | X | 0.84 |

Fig. 6.

Type 1 error rate and power of rejecting H02 for binary endpoints in the presence of linear, step-wise and inverted-U time trends (for λ0=λ2) with respect to the strength of the time trend in treatment arm 1 (λ1) and according to the model used (see “Methods” section) for scenarios presented in “Design” section. ALLTC-step refers to models using all treatment data and control and adjusting for time by a step function (see (1)), ALLTCI refers to models using all treatment data and control with interaction between time and treatment arm and adjusting for time by a step function (see (2)), and pooled and separate approaches refer to logistic regression models without adjusting for time comparing treatment 2 to control using concurrent and non-concurrent control data, and concurrent control data only, respectively. Lines for ALLTCI-Step and the separate approach are overlapping

An option to address the type 1 error inflation is to apply the extended model (2) including an interaction term between period and treatment 1. Indeed, as shown in Figs. 4 and 6, for the above example the extended model controls the type 1 error rate, both for continuous as well as binary data. However, applying this model does not lead to a relevant improvement in power compared to the analysis based on concurrent data only. Indeed for both, the linear and the logistic regression models, data of non-concurrent controls do not contribute to the treatment effect estimate. For the linear model, however, they contribute to the variance estimate.

Note that in this simulation study we focused on how time trends affect the operating characteristics when using model-based adjustments. However, also other deviations from the model assumptions not considered in the simulation study (as, e.g., heteroscedasticity) can affect the type 1 error rate. Especially, for unequal sample sizes in the control and treatment groups and unequal variances between groups or periods, the pooled variance estimator of the linear model is biased. Depending on the scenario, this can lead to an increased type 1 error rate but also strictly conservative testing procedures. To account for heterogeneous variances, a model allowing for different variances across periods and treatment groups can be fitted.

Additional simulation results for trials with three periods

Above we considered platform trials starting with a single treatment and a control, where a second treatment arm enters later but ends at the same time as treatment arm 1. Consequently, the factor period in the regression model for this platform trial has only two levels.

In addition, we performed a simulation study for trials where recruitment to arm 1 ends before the end of the platform trial. Then, the trial is divided into three time periods, where the first and last only involve one treatment and the control but in the second participants are randomised to both treatment arms and the control (see Fig. S16 in the supplementary material). Overall, we find that the properties described for the two period trial design also apply to the scenarios with three periods considered in the simulations. In particular, under equal time trends, the type 1 error is controlled when modeling time trends with step functions based on data from all arms using a variant of model (1) where period has three levels. If the time trend in treatment 1 differs from that in treatment arm 2 and the control, the type 1 error rate may be inflated. However, in the considered examples the type 1 error rate inflation is less pronounced than in the two-period design because the overlap between arms 1 and 2 is smaller. As a consequence, the non-concurrent controls have less weight in the test statistics computed from the step function model. However, this implies that the power gain compared to an analysis based on concurrent controls only is smaller than in the design with two periods (see the supplementary material for further details).

Further modeling approaches

In previous sections, we modeled time trends with step functions, based on data from all arms. In this section, we introduce some alternative methods and investigate their properties. For the simulation results for these methods see the supplementary material (see Figs. S4 and S12).

If rather than using data from all treatment arms as in (1) and (2), one wants to compare the efficacy of treatment 2 against control using data from the control arm in both periods and data of treatment 2 (which is collected in the second period, only), a regression model fitted by the observations {Yj,j∈{1,…,N}|kj=0,2} can be used. This regression model is given by

| 5 |

where the period effect is fitted in the model in a step-wise way as before.

As noted by Lee and Wason [14], linear models that only use data of treatment 2 and control arms (as in (5)) maintain the type 1 error rate and lead to unbiased estimators of the treatment effect. This holds, as long as the variance of the residuals is the same in the two periods. Furthermore, type 1 error rate control is maintained, even if there is a different time trend in treatment arm 1. However, there is no relevant gain in power as compared to the separate analysis. This holds, because the non-concurrent control data do not contribute to the treatment effect estimate. For the linear model, however, the non-concurrent control data contribute to the variance estimator. Though, only for very small sample sizes, this has an impact on the power. Therefore, the incorporation of the non-concurrent control by means of this approach does not increase the trial’s efficiency.

Instead of assuming a step-wise effect of time over periods, models that linearly adjust for time can be used. Here we assume that patients arrive at a uniform pace, and patient index is proportional to time. Versions of the regression models (1), (2) and (5) with linear trends for time are obtained by replacing the step function term in the models above by a linear effect function, and are as follows:

| 6 |

| 7 |

| 8 |

Similarly to what was previously noted, models incorporating data from all treatment arms ((1) and (6)) control the type 1 error rate and can substantially improve the power under the assumption of equal time trends in all treatment arms in the scale of the model. However, these models in (6) additionally rely on the correct specification of the time trend in the model. If correctly specified, that is, when time trends are linear, such models achieve higher power as compared to those modeling time as a step function (as in (1)) while controlling the type 1 error rate. But, error inflation occurs when the time trend is non-linear. Furthermore, when time trends differ between arms, the inflation is even higher than when time is modeled as a step function. In the same way, models (7) and (8) also yield biased results and type 1 error inflation when the parameterisation of the time in the model is incorrectly specified.

Discussion

We investigated frequentist, model-based approaches to adjust for time trends in platform trials utilizing nonconcurrent controls. We examined conditions under which the model-based approaches can successfully adjust for time trends for the simple case of a two-period trial with two experimental treatments and a shared control. The model-based approach including data from all arms and fitting a common time trend as a step function, controls the type 1 error rate and improves the statistical power in all considered scenarios where the time trend is equal across arms and additive in the model scale. This holds asymptotically, even if the time trend is not a step function but has a different shape and if block randomisation is used. Suppose, in contrast, random allocation is used, and the time trends are not step functions. In that case, an inflation of the type 1 error in the linear model can arise because the variance of the residuals may differ across periods (but only a single variance term is estimated by the model). A simple fix for this is to fit a model that allows different residual variances per period. On the other hand, type 1 error control is lost for the above step function models if the time trends are different between arms or not additive in the scale of the model. The amount of potential type 1 error rate inflation depends on the size of the differences between the time trends across arms. If the differences are sufficiently small, the type 1 error rate is not substantially affected (see Fig. 3).

Furthermore, we considered models, modelling time trends as linear functions or as step functions with interaction terms for treatment arm and period. In addition, we investigated a step function model fitted on the control group data and data of treatment arm 2 only. We show that these models do not lead to a noticeable gain in power or are less robust with respect to the control of the type 1 error rate.

For the assessment of estimators in clinical trials, the estimand framework [17] has become an important tool. While the estimand, defined as the target of estimation, is derived from the trial objective and is therefore not directly affected by the use of non-concurrent controls, the properties of estimators depend on if and how non-concurrent controls are employed in the estimation of treatment effects [18]. Time trends can affect estimators through several aspects of the data generating process in a clinical trial, such as the study population, the measurement of endpoints, or the impact of intercurrent events.

Also, in the hypothetical platform trials in MDD and NASH, considered in the introduction, time trends can occur. The model-based analysis can fully adjust for time trends only if the assumption of homogeneity and additivity of the time trends holds. While one can empirically assess these assumptions based on the observed data, such assessments may have limited sensitivity if trials are not powered for this objective. On the other hand, experience from previous trials and subject matter knowledge may provide justification for the assumption of equal time trends. Heterogeneous time trends across treatment arms may occur in settings where several treatments show an effect compared to control, but the external factors causing the time trend do not equally affect the efficacy of all considered treatments. Examples are vaccine trials, where different variants of the targeted virus are predominant over time and vaccine efficacy depends on the variant. Another example is shifts in the population in settings, where treatment efficacy is not homogeneous across the population. In particular, patients recruited early in a trial may already be known by the investigator(s), while newly diagnosed patients may enter later on. In addition, time trends may not be additive in the scale considered in the model, e.g., if there are ceiling effects in the outcome scale (as it happens, for instance, in ADAS-COG in Alzheimer’s disease), if the treatment effect is not additive to the placebo effect [19] (as it may occur in the example of MDD), or, for binary outcomes, when the time trends are equal on different scales (as the risk ratio instead of the odds ratio scale). However, even if there is a risk of bias, the use of non-concurrent controls can lead to more precise estimates in terms of mean squared error because of a bias-variance trade-off. Especially, in settings with small sample sizes, the reduction in variability may outweigh the potential bias introduced. The extent of time treatment interactions in actual clinical trials will depend on the specific context and it would be important to analyse recent platform trials in this respect to better understand the plausibility of different scenarios. The scenarios we considered in the simulation study used thresholds for type 1 and type 2 error control that are frequently used in traditional confirmatory trials. In early phase platform trials different choices would be made reflecting the different trade-offs involved. Furthermore, the trial may not be designed with error rate control as a major focus.

While we considered only a simple scenario, these models can directly be extended to trials with more treatments and periods. In this case, a period defines the time span where the set of treatments in the platform trial does not change. Also in this setting, the assumption of equal time trends that are additive on the model scale is needed to obtain unbiased estimators and conservative testing procedures. If time trends differ, the amount of bias and type 1 error rate inflation will depend on the weight the treatment arms with deviating time trends have in the final test statistics. In addition, if there are different deviating time trends across arms their impact may cancel out, depending on the specific scenario.

In this paper, we focus on trials with no interim analysis, where the sample size per arm is fixed. However, platform trials often allow for interim analyses with the possibility to stop an arm early, either due to futility or due to an early rejection of the null hypothesis. In trials with such interim analyses, the factor period, defined by the time periods between an entry or exit of an arm, will depend on the interim results. This introduces additional complexity as it affects the joint distribution of the independent factor period and the dependent variable in the model and can lead to biased treatment effect estimates and an increased type 1 error rate.

We delimited our investigations to analyses based on linear and logistic regression models. To extend the approach to other types of data (e.g., time to event endpoints, counts and clustered data), the respective appropriate regression models, including time as a step function, could be applied. In addition, to address confounding with measured baseline variables, the regression models can be extended to adjust for other covariates.

Another extension are models that model time trends with more general smooth functions such as splines. Furthermore, non-parametric Bayesian approaches have been proposed to estimate the effect of time, smoothing estimates of time trends by borrowing information between periods [20]. However, also these methods correctly adjust for time trends only if they are equal in all arms.

A further approach that has been proposed to adjust for time trends are randomisation tests. For two-armed trials with covariate and response adaptive randomisation, for example, they have been shown to robustly control the type 1 error rate in the presence of time trends [21]. These tests can be extended to multi-armed trials [22] using conditional randomisation tests, where for each treatment-control comparison, the re-randomisation is limited to the respective treatment and control. However, this conditional re-randomisation is deterministic for the non-concurrent controls in platform trials. Therefore, including non-concurrent controls does not improve the power of the conditional randomisation test compared to a conditional randomisation test based on concurrent controls only. Consequently, this approach would not be beneficial in increasing the efficiency of platform trials by incorporating non-concurrent controls.

Non-concurrent controls can be considered as the ideal case of historical data, since they are borrowed from the immediate past, randomised, and are part of the same trial infrastructure [23]. It is an open question whether the large array of dynamic modeling methods proposed to incorporate historical data [24–28] can be adapted to incorporate non-concurrent controls in platform trials.

Conclusions

Model-based analyses that make use of data from all treatment arms including non-concurrent controls are a powerful and fairly robust analysis strategy. If time trends in the different arms have equal additive effects on outcome on the model scale, a model that adjusts for time as step function based on data from all arms controls the type 1 error rate while increasing the power compared to an analysis with concurrent controls only. For binary data and logistic regression models, type 1 error control is maintained, even if the functional form of the common time trend deviates from the step function. For continuous data, this holds if block randomisation is applied or a model allowing for different variances across periods is chosen. However, if time trends differ between arms or are not additive to treatment effects in the scale of the model, the type 1 error control may be lost and treatment effect estimates can be biased.

If non-concurrent controls are included in the analysis of platform trials, we recommend the use of step function models to adjust for potential time trends. Only in trials where the environment can be considered “stable" for the duration of the trial and there is no time trend in the outcomes (e.g., for short trials with objective endpoints and homogeneous patient populations and in diseases with a long-established standard of care and no recent changes in treatment options), adjustment for time trends may not be necessary, and, as in this scenario the distribution of concurrent and non-concurrent controls are identical, we can simply pool concurrent and non-concurrent controls. In any case, if non-concurrent data are utilised as the primary analysis, also the analysis using only concurrent control data should be presented as sensitivity analysis.

Whether to use non-concurrent controls or not should be a case by case decision based on the expected bias-variance trade-off and subject-matter considerations such as the duration of the trial and potential changes in the standard of care during the trial. Especially in settings with small sample sizes, the efficiency gained by using step function models to incorporate non-concurrent controls can outweigh potential biases. To assess the bias-variance trade-off, the specifics of the trial, scientific plausibility of heterogeneous time trends across treatments, and robustness of results should be carefully considered.

Supplementary Information

Additional file 1 Supplementary document with technical derivations, proofs, and additional simulation study results.

Acknowledgements

The authors thank the reviewers for their constructive comments and suggestions on the manuscript.

Abbreviations

Authors’ contributions

MBR and MP conceived and designed the research and jointly contributed to drafting the article. EG, MBR, MP, PJ and KV drafted the methodological parts. MBR and PK wrote the code for the simulations and PK performed the simulations and prepared figures and tables. All authors contributed to the interpretation of findings, critically reviewed and edited the manuscript. All authors critically appraised the final manuscript. The authors read and approved the final manuscript.

Funding

EU-PEARL (EU Patient-cEntric clinicAl tRial pLatforms) project has received funding from the Innovative Medicines Initiative (IMI) 2 Joint Undertaking (JU) under grant agreement No 853966. This Joint Undertaking receives support from the European Union’s Horizon 2020 research and innovation programme and EFPIA andChildren’s Tumor Foundation, Global Alliance for TB Drug Development non-profit organisation, Spring works Therapeutics Inc. This publication reflects the authors’ views. Neither IMI nor the European Union, EFPIA, or any Associated Partners are responsible for any use that may be made of the information contained herein.

Availability of data and materials

R code are available on GitHub through the repository: https://github.com/MartaBofillRoig/NCC_timetrends. This repository contains the main code and R functions to reproduce the results of the simulation study. The datasets generated and/or analysed are also available in the repository.

Declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

Carl-Fredrik Burman is an employee and shareholder of AstraZeneca. Dominic Magirr declares a competing interest as an employee of Novartis Pharma AG. Peter Mesenbrink is an employee of Novartis Pharmaceuticals Corporation and owns both restricted and unrestricted shares of stock in Novartis. Peter Jacko and Kert Viele are employees of Berry Consultants, a consulting company specializing in the design of Bayesian and adaptive clinical trials. The rest of the authors declare that they have no competing interests regarding the content of this article.

Footnotes

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Change history

5/8/2024

Author name has been corrected FROM First name: "Marta Bofill" Last name: "Roig" TO First name: Marta Last name: "Bofill Roig. The correct reference should be "Bofill Roig et al" instead of "Roig et al".

References

- 1.Woodcock J, LaVange LM. Master Protocols to Study Multiple Therapies, Multiple Diseases, or Both, N Engl J Med. 2017;377(1):62–70. doi: 10.1056/NEJMra1510062. [DOI] [PubMed] [Google Scholar]

- 2.Meyer EL, Mesenbrink P, Dunger-Baldauf C, Fülle HJ, Glimm E, Li Y, Posch M, König F. The Evolution of Master Protocol Clinical Trial Designs: A Systematic Literature Review. Clin Ther. 2020;42(7):1330–60. doi: 10.1016/j.clinthera.2020.05.010. [DOI] [PubMed] [Google Scholar]

- 3.Saville BR, Berry SM. Efficiencies of platform clinical trials: A vision of the future. Clin Trials. 2016;13(3):358–66. doi: 10.1177/1740774515626362. [DOI] [PubMed] [Google Scholar]

- 4.Dodd LE, Freidlin B, Korn EL. Platform Trials — Beware the Noncomparable Control Group. N Engl J Med. 2021;384(16):1572–73. doi: 10.1056/NEJMc2102446. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Lee KM, Brown LC, Jaki T, Stallard N, Wason J. Statistical consideration when adding new arms to ongoing clinical trials: the potentials and the caveats. Trials. 2021;22(1):203. doi: 10.1186/s13063-021-05150-7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Collignon O, Burman C, Posch M, Schiel A. Collaborative Platform Trials to Fight COVID-19: Methodological and Regulatory Considerations for a Better Societal Outcome. Clin Pharmacol Ther. 2021;110(2):311–20. doi: 10.1002/cpt.2183. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.International Council For Harmonisation of Technical Requirements For Pharmaceuticals For Human Use (ICH). E9 Statistical principles for clinical trials. 1998. https://www.ema.europa.eu/en/documents/scientific-guideline/ich-e-9-statistical-principles-clinicaltrials-step-5_en.pdf.

- 8.International Council For Harmonisation of Technical Requirements For Pharmaceuticals For Human Use (ICH). E 10 Choice of Control Group in Clinical Trials. 2000. https://www.ema.europa.eu/en/documents/scientific-guideline/ich-e-10-choice-controlgroup-clinical-trials-step-5_en.pdf.

- 9.Otte C, Gold SM, Penninx BW, Pariante CM, Etkin A, Fava M, Mohr DC, Schatzberg AF. Major depressive disorder. Nat Rev Dis Prim. 2016;2(1):1–20. doi: 10.1038/nrdp.2016.65. [DOI] [PubMed] [Google Scholar]

- 10.Sforzini L, Worrell C, Kose M, Anderson IM, Aouizerate B, Arolt V, Bauer M, Baune BT, Blier P, Cleare AJ, et al. A delphi-method-based consensus guideline for definition of treatment-resistant depression for clinical trials. Mol Psychiatry. 2022;27(3):1286–99. doi: 10.1038/s41380-021-01381-x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Gold SM, Bofill Roig M, Miranda JJ, Pariante C, Posch M, Otte C. Platform trials and the future of evaluating therapeutic behavioural interventions. Nat Rev Psychol. 2022;1(1):7–8. doi: 10.1038/s44159-021-00012-0. [DOI] [Google Scholar]

- 12.Fraile JM, Palliyil S, Barelle C, Porter AJ, Kovaleva M. Non-alcoholic steatohepatitis (nash)–a review of a crowded clinical landscape, driven by a complex disease. Drug Des Dev Ther. 2021;15:3997. doi: 10.2147/DDDT.S315724. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Rowe IA, Parker R. The placebo response in randomized trials in nonalcoholic steatohepatitis simply explained. Clin Gastroenterol Hepatol. 2022;20(3):564–72. doi: 10.1016/j.cgh.2021.05.059. [DOI] [PubMed] [Google Scholar]

- 14.Lee KM, Wason J. Including non-concurrent control patients in the analysis of platform trials: is it worth it? BMC Med Res Methodol. 2020;20(1):1–12. doi: 10.1186/s12874-020-01043-6. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Elm JJ, Palesch YY, Koch GG, Hinson V, Ravina B, Zhao W. Flexible analytical methods for adding a treatment arm mid-study to an ongoing clinical trial. J Biopharm Stat. 2012;22(4):758–72. doi: 10.1080/10543406.2010.528103. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Matts JP, Lachin JM. Properties of permuted-block randomization in clinical trials. Control Clin Trials. 1988;9(4):327–44. doi: 10.1016/0197-2456(88)90047-5. [DOI] [PubMed] [Google Scholar]

- 17.International Council For Harmonisation of Technical Requirements For Pharmaceuticals For Human Use (ICH). ICH E9(R1) addendum on estimands and sensitivity analysis in clinical trials to the guideline on statistical principles for clinical trials. 2019. https://database.ich.org/sites/default/files/E9-R1_Step4_Guideline_2019_1203.pdf.

- 18.Collignon O, Schiel A, Burman C-F, Rufibach K, Posch M, Bretz F. Estimands and complex innovative designs. Clin Pharmacol Ther. 2022. [DOI] [PMC free article] [PubMed]

- 19.Boehm K, Berger B, Weger U, Heusser P. Does the model of additive effect in placebo research still hold true? a narrative review. JRSM Open. 2017;8(3):2054270416681434. doi: 10.1177/2054270416681434. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Saville BR, Berry DA, Berry NS, Viele K, Berry SM. The bayesian time machine: Accounting for temporal drift in multi-arm platform trials. Clin Trials. 2022. (In press). [DOI] [PubMed]

- 21.Simon R, Simon NR. Using randomization tests to preserve type I error with response adaptive and covariate adaptive randomization. Stat Probab Lett. 2011;81(7):767–72. doi: 10.1016/j.spl.2010.12.018. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Wang Y, Rosenberger WF, Uschner D. Randomization tests for multiarmed randomized clinical trials. Stat Med. 2020;39(4):494–509. doi: 10.1002/sim.8418. [DOI] [PubMed] [Google Scholar]

- 23.Burger HU, Gerlinger C, Harbron C, Koch A, Posch M, Rochon J, Schiel A. The use of external controls: To what extent can it currently be recommended? Pharm Stat. 2021;20(6):1002–16. doi: 10.1002/pst.2120. [DOI] [PubMed] [Google Scholar]

- 24.Viele K, Berry S, Neuenschwander B, Amzal B, Chen F, Enas N, Hobbs B, Ibrahim JG, Kinnersley N, Lindborg S, Micallef S, Roychoudhury S, Thompson L. Use of historical control data for assessing treatment effects in clinical trials, Pharm Stat. 2014;13(1):41–54. doi: 10.1002/pst.1589. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Ghadessi M, Tang R, Zhou J, Liu R, Wang C, Toyoizumi K, Mei C, Zhang L, Deng CQ, Beckman RA. A roadmap to using historical controls in clinical trials – by Drug Information Association Adaptive Design Scientific Working Group (DIA-ADSWG) Orphanet J Rare Dis. 2020;15(1):69. doi: 10.1186/s13023-020-1332-x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Hall KT, Vase L, Tobias DK, Dashti HT, Vollert J, Kaptchuk TJ, Cook NR. Historical Controls in Randomized Clinical Trials: Opportunities and Challenges. Clin Pharmacol Ther. 2021;109(2):343–51. doi: 10.1002/cpt.1970. [DOI] [PubMed] [Google Scholar]

- 27.Schmidli H, Gsteiger S, Roychoudhury S, Hagan AO, Spiegelhalter D, Neuenschwander B. Robust Meta-Analytic-Predictive Priors in Clinical Trials with Historical Control Information. Biometrics. 2014:1023–32. 10.1111/biom.12242. [DOI] [PubMed]

- 28.Schmidli H, Häring DA, Thomas M, Cassidy A, Weber S, Bretz F. Beyond Randomized Clinical Trials: Use of External Controls. Clin Pharmacol Ther. 2020;107(4):806–16. doi: 10.1002/cpt.1723. [DOI] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Supplementary Materials

Additional file 1 Supplementary document with technical derivations, proofs, and additional simulation study results.

Data Availability Statement

R code are available on GitHub through the repository: https://github.com/MartaBofillRoig/NCC_timetrends. This repository contains the main code and R functions to reproduce the results of the simulation study. The datasets generated and/or analysed are also available in the repository.