Abstract

There is ongoing debate as to whether emotion perception is determined by facial expressions or context (i.e., non-facial cues). The present investigation examined the independent and interactive effects of six emotions (anger, disgust, fear, joy, sadness, neutral) conveyed by combinations of facial expressions, bodily postures, and background scenes in a fully crossed design. Participants viewed each face-posture-scene (FPS) combination for 5 s and were then asked to categorize the emotion depicted in the image. Four key findings emerged from the analyses: (1) For fully incongruent FPS combinations, participants categorized images using the face in 61% of instances and the posture and scene in 18% and 11% of instances, respectively; (2) postures (with neutral scenes) and scenes (with neutral postures) exerted differential influences on emotion categorizations when combined with incongruent facial expressions; (3) contextual asymmetries were observed for some incongruent face-posture pairings and their inverse (e.g., anger-fear vs. fear-anger), but not for face-scene pairings; (4) finally, scenes exhibited a boosting effect of posture when combined with a congruent posture and attenuated the effect of posture when combined with a congruent face. Overall, these findings highlight independent and interactional roles of posture and scene in emotion face perception. Theoretical implications for the study of emotions in context are discussed.

Supplementary Information

The online version contains supplementary material available at 10.1007/s42761-021-00061-x.

Keywords: Emotion categorization, Facial expressions, Body postures, Background scenes, Emotion perception

Perceiving and appreciating emotional communication is a foundational aspect of interpersonal interactions. Research has traditionally focused on facial expressions as the primary cue for emotion categorization (see Ekman, 1993; Izard, 1994). However, a growing body of research indicates that pairing facial expressions with incongruent/conflicting non-facial cues can alter perceivers’ categorizations of emotions matching the face (hereafter referred to as face categorizations). These changes in emotion categorization have been found using a variety of distinct cues (Hassin et al., 2013), including body posture (e.g., Meeren et al., 2005; de Gelder, 2006; see also Mehrabian, 1969; Ekman & Friesen, 1967), voice (e.g., de Gelder & Vroomen, 2000; de Gelder et al., 1999; Ethofer et al., 2006), background scene (e.g., Barrett & Kensinger, 2010; Ngo & Isaacowitz, 2015; Righart & de Gelder, 2008), situational information (e.g., Carroll & Russell, 1996; Hess et al., 2020), culture of the perceiver (e.g., Masuda et al., 2008), and even the psychological construction of language (Lindquist & Gendron, 2013). Thus, non-facial cues can influence emotion perception, challenging the long-held notion that the most important cue for emotion perception is the face.

One might conclude from this collection of studies that the face is less important than non-facial cues when perceiving emotion. Indeed, some have proposed that facial expressions are “inherently ambiguous” and thus depend on particular combinations of non-face information for emotion categorization (Hassin et al., 2013). For example, the emotion seeds hypothesis predicts that combinations of face cues and non-face cues that feature two incongruent, yet perceptually similar, emotions are more likely to result in a categorical change than two perceptually dissimilar emotions (Aviezer et al., 2017). However, research supporting this theory has largely been limited to the influence of body postures or body postures with congruent background scenes on facial expressions (see Aviezer et al., 2008), making it difficult to identify the unique influence of non-face modalities of emotion. To address some of these issues, the current study examined the independent and interactive effects of emotion faces, postures, and scenes on emotion categorization.

Examining Postures and Scenes as Non-facial Emotion Cues

A handful of studies have examined the roles of postures and scenes on emotion perception. Van den Stock and colleagues examined shared and unique neurological underpinnings of perceiving emotion postures and scenes (Van den Stock et al., 2014) and how emotion postures influenced perception of facial and vocal expressions of emotion (Van den Stock et al., 2007). Reschke et al. (2018) also examined the additive effects of emotion scenes combined with postures featuring disgust facial expressions. Findings indicated that while the presence of an anger posture was sufficient to elicit a categorical change (i.e., participants judged the disgust face as angry when no background scene was provided), other emotions (sadness and fear) necessitated that the posture be paired with a congruent scene (e.g., a sadness posture embedded in a funeral scene) to shift categorization of the emotion. This suggests that combinations of non-facial elements differentially impact emotion perception.

Though informative, prior research has fallen short of investigating emotion perception when multiple competing, incongruent non-facial cues are present (e.g., a fear face on an angry posture in a sad scene). Furthermore, previous research has shown that some face-posture combinations of two distinct emotions exhibit an asymmetrical effect (Mondloch et al., 2013), meaning that while one emotion impairs the identification of the other emotion, the reverse relation is not present. For example, Mondloch et al. (2013) reported that sadness postures reduced face categorizations of angry faces, but angry postures did not reduce face categorizations of sadness faces. To date, research has not examined potential asymmetrical effects of emotion scenes in addition to emotion postures. Such work would further elucidate the debate over proposed mechanisms for contextualized emotion perception (e.g., Lecker et al., 2020; Mondloch et al., 2013).

The unique and combinatorial effect of emotion scenes also merits further examination. Previous studies have typically included a single non-facial cue (e.g., a fearful posture; a fear scene; Mondloch et al., 2013; Van den Stock et al., 2014) or multiple congruent non-facial cues on a single facial expression (e.g., a sadness posture with a casket featuring a disgust face; Aviezer et al., 2008; Reschke et al., 2018). Prior research has found that pairing an emotion scene with a congruent emotion posture can boost categorical changes compared to a posture with no background scene (Reschke et al., 2018). However, these findings were limited because only disgust faces were used and emotion scenes matching emotion faces were not included as a comparison group. Given the ongoing debate regarding the nature of contextualized emotion perception (e.g., Aviezer et al., 2017; Mondloch et al., 2013), investigating how emotion categorization is differentially influenced by background scenes would contribute to our understanding of the interactional nature of emotion categorization.

The Present Investigation

This investigation assessed adult emotion categorization of combinations of facial expressions, body postures, and emotion scenes. Discrete emotions of anger, disgust, fear, joy, and sadness, as well as neutral, were included for each element (face, posture, scene). Elements were fully crossed to examine all possible combinations of emotion cues. The analyses were guided by four research questions:

Are fully incongruent combinations (e.g., a sad face on a fear posture in a disgust scene) categorized according to the face, posture, or scene?

Do emotion postures and emotion scenes similarly influence emotion categorizations when combined with incongruent facial expressions?

Are the influences of emotion postures and scenes on emotion faces symmetrical across inverted pairings (e.g., anger face with disgust posture/scene vs. disgust face with anger posture/scene)?

Does scene congruency boost or reduce the effects of emotion faces and emotion postures on emotion categorizations?

Method

Pre-Registration

The procedures and planned analyses were pre-registered on the Open Science Framework prior to data collection (see https://osf.io/vqjpc/?view_only=c2c7d08cac9549ba9f4d5b6cdd0c5112).

Participants

Our pre-registered power analysis using the effect sizes from a pilot study indicated that at least 72 participants would be necessary to detect a small effect (effect size = 0.005) with power of 0.95 and an alpha level of 0.01 (see analyses for Aim 2 below). To ensure proper counterbalancing of the stimuli, we recruited 80 undergraduate students (43 female; Mage = 20.58 years, SD = 2.08 years, range = 18–31 years) from a large university in the USA. One additional participant was excluded from the final sample due to equipment failure. Participants were given course credit as compensation.

Stimuli

All stimulus components (facial expressions, postures, scenes) were taken from highly rated exemplars in previously validated stimulus sets so that each component corresponded with the intended emotion, thus ensuring source clarity (see details below; Ekman et al., 1972). A concern with stimuli used in previous research is that participants may become increasingly familiar with the face identities with repeated exposure, which could facilitate their ability to directly compare stimuli across trials (Burton, 2013). For instance, Lecker et al. (2020) used 6 face identities to display 4 distinct emotions across 96 trials, resulting in each participant viewing each face identity 16 times. For the current study, we used 48 distinct face identities displaying one emotion each, with face identity distributed randomly amongst stimulus blocks (see below), thus minimizing comparison effects relative to previous research using fully crossed designs.

Facial Expressions

Six facial expressions (anger, disgust, fear, joy, sadness, neutral) conveyed by 48 actors representing 4 races (Asian, Black, Hispanic, White; 2 males and 2 females for each race) were adopted from the NimStim and RADIATE sets of facial expressions (Conley et al., 2018; Tottenham et al., 2009). All images were originally validated as expressing the intended emotion (percent agreement: females = 74–100%; males = 73–100%; see Supplementary Table 1). A sample emotion face is presented in Fig. 1a.

Fig. 1.

Sample stimuli pieces that were individually validated as follows: (a) fear face, (b) joy posture, and (c) disgust scene. These components were edited together to create composite image (d). All facial expressions, postures, and scenes were combined using Adobe Photoshop to create 17,280 distinct face-posture-scene combinations (2 genders × 4 races × 6 facial expressions × 6 postures × 2 posture exemplars × 6 scenes × 5 scene exemplars)

Body Postures

Twenty-four postures (half female, half male) featuring the body from shoulders to feet and conveying two distinct versions of each of the six emotions (anger, disgust, fear, joy, sadness, neutral) were selected from a validated set of stimuli (Lopez et al., 2017). Each discrete emotion posture was originally rated as depicting the intended emotion (percent agreement: females = 65–96%; males = 73–100%; see Supplementary Table 2). The stimuli also included four neutral postures (2 female, 2 male) based on female and male prototypes (Reschke et al., 2018) that were validated using dimensional ratings on a 9-point scale (5.00 being neutral; Russell et al., 1989) and were confirmed as expressing neutral valence and arousal (female Mvalence, 4.94; female Marousal, 5.00; male Mvalence, 5.00; male Marousal, 4.73). A sample emotion body posture is presented in Fig. 1b.

Scenes

Scene images depicted a discrete emotion (anger, disgust, fear, joy, neutral, sadness) and included 5 exemplars for each emotion to reduce participants’ repeated exposure to each image. First, all scene images were validated with a separate sample of 26 undergraduate students (18 female; Mage = 21.15 years, SD = 4.76). Participants were asked to “select the emotion that best describes how someone in this context would feel” from five options in the following order: joy, sadness, fear, anger, disgust. All discrete emotion scenes were rated as depicting the intended emotion (percent agreement: 76–100%; see Supplementary Table 3). Next, all scene images were rated by a different sample of 21 undergraduate students (12 female; Mage = 19.05 years, SD = 1.28) using scales for valence and arousal (Russell et al., 1989). The neutral scenes were rated as neutral in both valence and arousal (Mvalence = 5.39, SD = 0.66; Marousal = 4.61, SD = 1.29). A sample emotion scene is presented in Fig. 1c.

Face-Posture-Scene Composites

All facial expressions, postures, and scenes were combined using Adobe Photoshop to create 17,280 distinct face-posture-scene combinations (2 genders × 4 races × 6 facial expressions × 6 postures × 2 posture exemplars × 6 scenes × 5 scene exemplars). In accordance with previous calls for realistic looking stimuli (Civile & Obhi, 2015), necks were included in each composite. A sample face-posture-scene composite image is presented in Fig. 1d.

Design

We pre-registered our intent to collapse across gender, race, posture exemplar, and scene exemplar, reducing the number of conditions to 216 (fully crossed face × posture × scene design). The 17,280 stimuli were divided equally into 80 blocks of 216 images, each block containing an image from all 216 conditions. Participants were randomly assigned to view 1 block of images.
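The stimulus counts in the design follow directly from the factor levels, which can be checked with a short sketch. The allocation function below is an assumption for illustration only: the text states that the stimuli were divided equally into blocks with one image per condition, but does not describe the exact assignment procedure, and the names `FACTORS` and `build_blocks` are hypothetical.

```python
from itertools import product
from math import prod
import random

# Factor levels as listed in the description of the composites.
FACTORS = {
    "gender": 2,
    "race": 4,
    "face": 6,
    "posture": 6,
    "posture_exemplar": 2,
    "scene": 6,
    "scene_exemplar": 5,
}
# Factors that define a condition after collapsing across the others.
CONDITION_FACTORS = ("face", "posture", "scene")

n_stimuli = prod(FACTORS.values())
n_conditions = prod(FACTORS[f] for f in CONDITION_FACTORS)
n_blocks = n_stimuli // n_conditions


def build_blocks(seed=0):
    """Hypothetical allocation: every block receives one image per condition."""
    rng = random.Random(seed)
    collapsed = [f for f in FACTORS if f not in CONDITION_FACTORS]
    # Each condition exists in one variant per combination of collapsed factors.
    variants = list(product(*(range(FACTORS[f]) for f in collapsed)))
    blocks = [[] for _ in range(n_blocks)]
    for condition in product(*(range(FACTORS[f]) for f in CONDITION_FACTORS)):
        shuffled = variants[:]
        rng.shuffle(shuffled)
        # One variant of this condition goes to each block.
        for block, variant in zip(blocks, shuffled):
            block.append((condition, variant))
    return blocks
```

The number of variants per condition (2 × 4 × 2 × 5 = 80) equals the number of blocks, which is why each block can contain exactly one image from every condition.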

Procedure

All procedures took place in a campus computer lab. Each participant sat at a desktop computer with a 20″ monitor and completed all measures using E-Prime 3.0 testing software. Testing stations were separated by partitions to prevent participants from seeing another participant’s screen. Participants first completed the consent form and demographics questionnaire and received detailed instructions about the study. For the testing portion, stimuli were displayed in a random order and a fixation cross appeared before each image in the center of the screen for 500 ms. Each image appeared on the monitor for 5 s and then disappeared to reveal the following prompt, “Please select the emotion that best describes what this person is feeling.” Below the prompt, the following options were provided vertically in a set order: Anger, Disgust, Fear, Joy, Sadness. Participants indicated their categorization using a keyboard and could take as much time as needed to respond. After participants completed half of a block, there was a 5-min break followed by a reiteration of the instructions. Each session lasted approximately 40 min.
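The trial structure described above can be summarized as a simple schedule. This is a sketch in plain Python rather than E-Prime, with hypothetical names (`build_schedule`, `fixed_duration_s`); it only accounts for the fixed-duration events stated in the text, since responses were self-paced.

```python
import random

FIXATION_S = 0.5     # fixation cross shown for 500 ms
IMAGE_S = 5.0        # each image shown for 5 s
BREAK_S = 5 * 60     # 5-min break after half of the block
N_TRIALS = 216       # one image per condition in a block


def build_schedule(n_trials=N_TRIALS, seed=None):
    """Return events in presentation order: shuffled trials with a mid-block break."""
    order = list(range(n_trials))
    random.Random(seed).shuffle(order)
    half = n_trials // 2
    events = [("trial", i) for i in order[:half]]
    events.append(("break", None))
    events += [("trial", i) for i in order[half:]]
    return events


def fixed_duration_s(events):
    """Sum the durations that do not depend on the participant's response time."""
    total = 0.0
    for kind, _ in events:
        total += BREAK_S if kind == "break" else FIXATION_S + IMAGE_S
    return total
```

Under these assumptions the fixed events sum to 216 × 5.5 s + 300 s = 1,488 s, or about 25 min. The rest of the approximately 40-min session would presumably be taken up by consent, instructions, and self-paced responses.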

Analytic Strategy

As noted previously, our analyses were guided by 4 aims. First, we compared the saliency of each cue when pitted directly against the other cues by examining a subset of the face-posture-scene (FPS) combinations in which all emotion cues were incongruent (e.g., an angry face on a disgust posture in a fear scene). We anticipated that participants’ face categorizations would overall exceed posture categorizations and scene categorizations. Second, we tested whether reductions in face categorizations were systematic across cues by testing the independent effects of postures (with neutral scenes) and scenes (with neutral postures) on face categorizations, as well as how emotion facial expressions influenced categorizations matching the posture (with neutral scene) or scene (with neutral posture). These analyses were largely exploratory; thus, we did not make formal predictions. Third, we tested for asymmetries in contextual influence (e.g., whether an angry posture/scene influenced categorization of a disgust face as much as a disgust posture/scene influenced categorization of an angry face). We did not make specific predictions given the exploratory nature of this question (though see Mondloch et al., 2013). Finally, we examined the effect of scene congruency (whether the scene matched the face, the scene matched the posture, or the scene was neutral) on face categorizations and posture categorizations. We expected congruent scenes to boost the effect of emotion postures (see Reschke et al., 2018). However, we did not make predictions regarding scenes congruent with facial expressions given the exploratory nature of the question. The analyses for Aims 2 and 4 used generalized linear mixed effects models specified with a compound symmetry covariance matrix. Restricted maximum likelihood (REML) and the Satterthwaite approximation for degrees of freedom were used in each model (see Wilcox, 1987). All factors tested were fixed effects. A full confusion matrix is presented in Supplementary Table 4.

We conducted a set of preliminary analyses to examine participants’ categorizations of each emotion cue embedded in neutral versions of the other cues (e.g., an anger face on a neutral posture in a neutral scene; a neutral face on an anger posture in a neutral scene; a neutral face on a neutral posture in an anger scene) to address potential pre-existing differences in contextualized source clarity among each emotion cue (face, posture, scene). Results indicated that participants rated facial expressions significantly more accurately (M = 0.85, SE = 0.021) than postures (M = 0.48, SE = 0.021) and scenes (M = 0.32, SE = 0.021), F(2, 1106) = 189.43, p < 0.001. Thus, we included participants’ ratings of each facial emotion cue (with neutral postures and scenes), posture emotion cue (with neutral faces and scenes), or scene emotion cue (with neutral faces and postures) as covariates in all models examining emotion categorizations to control for individual differences in contextualized recognition accuracy for each cue at the participant level. By including these covariates, we ensure that any mean differences observed are not due to pre-existing differences in contextualized recognition accuracy for each cue.

Results

Aim 1: Categorizations of Fully Incongruent Combinations—Face, Posture, or Scene?

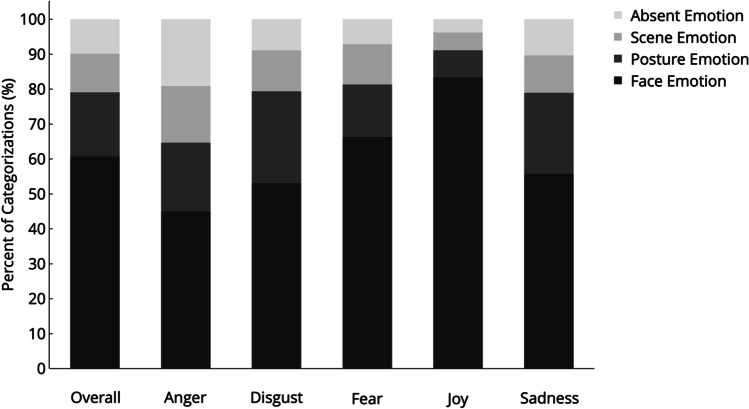

We first examined FPS combinations in which all face, posture, and scene emotion cues were incongruent, thus pitting the cues directly against one another. This allowed us to compare the percentage of face categorizations, posture categorizations, and scene categorizations. When collapsed across all emotions, 60.73% of emotion categorizations matched the emotion of the face, whereas 18.38% matched the posture, 11.08% matched the scene, and 9.81% did not match any cue (referred to hereafter as absent categorizations). The categorizations were not equally distributed, goodness-of-fit χ²(3) = 3305.01, p < 0.001, and all categorization types differed significantly from one another (ps < 0.001) except for scene categorizations and absent categorizations (p = 0.054; see Fig. 2). Parallel patterns emerged even when controlling for individual differences in face, posture, and scene categorizations when each cue was embedded in neutral versions of other cues (see Supplementary Fig. 1).
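The four categorization types used here (face, posture, scene, absent) can be expressed as a simple labeling rule. The following is a minimal sketch, not the authors' analysis code; the function names and the toy trials are hypothetical and are not data from the study.

```python
from collections import Counter

LABELS = ("face", "posture", "scene", "absent")


def classify_response(face, posture, scene, response):
    """Label a response by the cue it matches on a fully incongruent trial."""
    if len({face, posture, scene}) != 3:
        raise ValueError("all three cues must differ on a fully incongruent trial")
    if response == face:
        return "face"
    if response == posture:
        return "posture"
    if response == scene:
        return "scene"
    # The chosen emotion was not conveyed by any cue in the image.
    return "absent"


def categorization_shares(trials):
    """Proportion of responses of each type over (face, posture, scene, response) tuples."""
    counts = Counter(classify_response(*trial) for trial in trials)
    n = sum(counts.values())
    return {label: counts[label] / n for label in LABELS}


# Toy trials for illustration only (NOT data from the study).
toy_trials = [
    ("anger", "fear", "sadness", "anger"),
    ("anger", "fear", "sadness", "fear"),
    ("joy", "disgust", "fear", "joy"),
    ("sadness", "anger", "joy", "disgust"),
]
shares = categorization_shares(toy_trials)
```

Because the three cues always differ on these trials, each response falls into exactly one of the four types, so the shares sum to 1.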

Fig. 2.

Percent of emotion categorizations corresponding to the face, posture, and scene for fully incongruent FPS combinations, separated by face emotion. “Absent Emotion” refers to categorizations of an emotion not present in the constructed stimulus (e.g., categorizing a combination of angry-face, fear-posture, and sadness-scene as ‘disgust’)

Similar patterns emerged across each emotion. Specifically, emotion categorizations were not equally distributed for anger, disgust, fear, joy, or sadness faces (ps < 0.001). Likewise, face categorizations were significantly greater than posture, scene, and absent categorizations for each discrete emotion (ps < 0.001). Additionally, posture categorizations were significantly greater than scene categorizations and absent categorizations for disgust, fear, joy, and sadness (ps ≤ 0.039). However, for anger the posture categorizations did not differ significantly from scene categorizations (p = 0.076) nor absent categorizations (p = 0.756). Lastly, scene categorizations were significantly greater than absent categorizations for disgust and fear (ps ≤ 0.047), but not for anger, joy, or sadness (ps ≥ 0.143).
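The tests of whether categorizations were equally distributed are chi-square goodness-of-fit tests. A minimal sketch of the statistic is given below, assuming equal expected frequencies across the four categorization types; the function name is hypothetical, the counts in the example are made up for illustration, and the p-value lookup is omitted.

```python
def chi_square_gof(observed, expected=None):
    """Return (chi-square statistic, degrees of freedom) for observed counts.

    If no expected counts are given, an equal split across categories is
    assumed, which corresponds to the null hypothesis of equal distribution.
    """
    k = len(observed)
    if expected is None:
        expected = [sum(observed) / k] * k
    statistic = sum((o - e) ** 2 / e for o, e in zip(observed, expected))
    return statistic, k - 1


# Made-up counts for the four types (face, posture, scene, absent).
statistic, df = chi_square_gof([20, 10, 10, 0])
```

With four categorization types the test has 3 degrees of freedom, matching the χ²(3) reported for the overall analysis.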

In summary, face categorizations exceeded posture categorizations and scene categorizations overall and when analyzed separately by face.

Aim 2: Are Categorizations Systematic?

Previous research has shown that emotion faces paired with incongruent non-facial cues result in systematic changes in face and non-face categorizations (see Lecker et al., 2020). The following analyses first examined whether categorizations matching the face were uniquely influenced by incongruent postures or scenes (face vs. non-face). We next analyzed how categorizations matching the non-facial cues were influenced by incongruent facial expressions (non-face vs. face).

Face vs. Non-face

This set of analyses tested whether categorizations matching the face varied systematically when combined with incongruent postures or scenes (e.g., does the pattern of non-facial influence on emotion faces differ between postures and scenes?). A 5 (face emotion) × 5 (non-face emotion) × 2 (non-face cue: posture, scene) model predicting face categorizations examined the interactive effects of face emotion and non-face emotion by cue type (postures with neutral scenes vs. scenes with neutral postures). Participants’ categorizations of each facial expression of emotion when combined with a neutral posture and neutral scene (e.g., angry face on a neutral posture in a neutral scene) were mean-centered (see Schneider et al., 2015) and included as covariates to control for differences in contextualized recognition accuracy of each face emotion at the participant level. Of primary interest was examining the main effect of non-face cue and the interactions of non-face cue with face emotion and non-face emotion. Additionally, exploring the 3-way interaction allowed us to test potential differences in the patterns of the face emotion × non-face emotion interactions for postures and scenes (e.g., did an anger posture influence a disgust face differently than an anger scene influenced a disgust face?).
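Mean-centering the baseline covariates can be sketched as follows. This assumes centering on the sample mean across participants, which is one common reading of the procedure; the text does not specify further details, and the values shown are hypothetical.

```python
from statistics import fmean


def mean_center(values):
    """Subtract the sample mean so that the centered covariate averages zero."""
    mean = fmean(values)
    return [v - mean for v in values]


# Hypothetical baseline accuracies (e.g., proportion of anger faces on a
# neutral posture in a neutral scene categorized as anger) for four participants.
baseline_accuracy = [1.0, 0.75, 0.5, 0.75]
centered = mean_center(baseline_accuracy)
```

With a centered covariate, the remaining effects in the model are evaluated at the average level of baseline recognition accuracy rather than at an accuracy of zero.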

There were significant main effects of face emotion, F(4, 3857) = 37.80, p < 0.001, effect size = 0.04, and non-face emotion, F(4, 3820) = 13.31, p < 0.001, effect size = 0.01, as well as a significant interaction of face × non-face emotion, F(16, 3820) = 20.78, p < 0.001, effect size = 0.08. Of particular interest was a significant main effect of non-face cue, F(1, 3820) = 115.50, p < 0.001, effect size = 0.03, as well as significant interactions of face × non-face cue, F(4, 3820) = 16.61, p < 0.001, effect size = 0.02, and non-face emotion × non-face cue, F(4, 3820) = 6.94, p < 0.001, effect size = 0.01, which indicated that postures and scenes exerted differing levels of influence on face categorizations as a function of face emotion and non-face emotion. Lastly, a significant 3-way face × non-face emotion × non-face cue interaction, F(16, 3820) = 12.09, p < 0.001, effect size = 0.05, indicated that face × non-face emotion patterns differed between postures and scenes.

Further examination of the significant effect of non-face cue revealed that postures with a neutral scene exhibited a stronger reduction in face categorizations (M = 0.70) than scenes with a neutral posture (M = 0.83; p < 0.001). This indicated that postures overall were more likely to reduce face categorizations than scenes. Comparisons within the significant face × non-face cue and non-face emotion × non-face cue interactions demonstrated that this pattern held for all facial expressions (ps < 0.045), except fear (p = 0.20), and all context emotions (ps < 0.001), except sadness (p = 0.24).

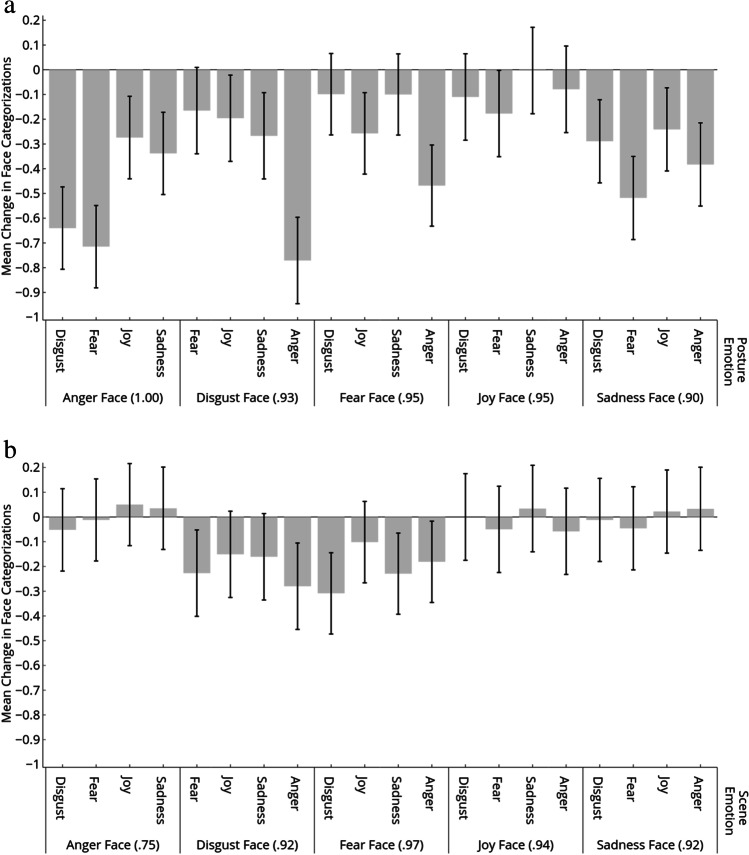

Bonferroni-corrected comparisons (Alpha = 0.005) of the 3-way interaction broke down face categorizations between non-face emotions within each facial expression separately for postures and scenes (see below). Each set of comparisons first examined differences between congruent combinations (e.g., anger face on anger posture/scene) compared to incongruent combinations (e.g., an anger face on disgust posture/scene), and then explored differences between all incongruent combinations within each face emotion (e.g., an anger face on disgust posture/scene vs. fear posture/scene vs. joy posture/scene vs. sadness posture/scene). Mean reductions in incongruent compared to congruent pairs of face categorizations are displayed in Fig. 3. Separate narrative descriptions of all pairwise comparisons for each non-face cue are below.
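The corrected threshold of 0.005 is consistent with a Bonferroni correction over the 10 pairwise comparisons among five emotions at a family-wise alpha of 0.05. That decomposition is an assumption made here for illustration; the text reports only the corrected value. A short sketch:

```python
from itertools import combinations

EMOTIONS = ("anger", "disgust", "fear", "joy", "sadness")
FAMILYWISE_ALPHA = 0.05  # assumed family-wise level; not stated in the text

# All unordered pairs of non-face emotions within one facial expression.
pairs = list(combinations(EMOTIONS, 2))

# Bonferroni: divide the family-wise alpha by the number of comparisons.
per_test_alpha = FAMILYWISE_ALPHA / len(pairs)

# Matching confidence level for interval estimates (99.5% under this assumption).
ci_level = 1 - per_test_alpha


def is_significant(p, alpha=per_test_alpha):
    """A comparison counts as significant only below the corrected threshold."""
    return p < alpha
```

Under this threshold, a comparison with p = 0.0044 counts as significant, whereas comparisons with p = 0.0051 or p = 0.008 do not, even though all three would pass an uncorrected 0.05 criterion.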

Fig. 3.

Estimated marginal means of change in face categorizations by non-face emotion separated by (a) posture (neutral scene) and (b) scene (neutral posture). Means above ‘0’ indicate that the addition of the non-face cue increased categorization of the face, whereas means below ‘0’ indicate that non-face cue decreased categorization of the face. The proportion agreement for congruent pairings of faces and non-face cues (e.g., Fig. 3a: anger face on an anger posture; Fig. 3b: anger face with an anger scene) are contained in parentheses. Error bars represent 99.5% confidence intervals (CIs). CIs that do not contain ‘0’ signify that the non-face emotion paired with the facial expression (e.g., anger face on disgust posture) resulted in a significant change in face categorizations compared to the congruent version (e.g., anger face on anger posture) at the p < .005 level. Incongruent emotions that have CIs that do not contain the mean of a neighboring non-face emotion indicate significantly different means at the p < .005 level. For example, anger face on a disgust posture resulted in a significant change in face categorizations compared to the congruent pairing of M = -.64, p < .005, which was significantly larger than the mean change of an anger face on a sadness posture of M = -.33, p < .005

Face vs. Posture (Neutral Scene)

These comparisons examined differences in face categorizations between congruent and incongruent combinations of faces and postures when the scene was neutral (see Fig. 3a).

Anger Faces

Each non-anger posture resulted in a significant reduction in face categorizations compared to an anger posture (ps < 0.0005). Disgust and fear postures resulted in significantly larger reductions in face categorizations compared to sadness and joy (ps < 0.0005). Reductions did not differ significantly between disgust and fear (p = 0.20) or between sadness and joy (p = 0.28).

Disgust Faces

Anger, joy, and sadness postures resulted in significant reductions in face categorizations compared to a disgust posture (ps < 0.0005), whereas fear did not differ significantly (p = 0.008). Anger postures produced significantly greater reductions in face categorizations than fear, joy, and sadness postures (ps < 0.0005). Reductions in face categorizations did not differ significantly between fear, joy, and sadness postures (ps ≥ 0.101).

Fear Faces

Anger and joy postures resulted in significant reductions in face categorizations compared to a fear posture (ps < 0.0005), whereas disgust and sadness postures did not differ significantly from fear postures (ps ≥ 0.087). Anger postures produced significantly stronger reductions in face categorizations than fear, joy, and sadness postures (ps < 0.0005). Face categorizations did not differ significantly between disgust, joy, and sadness postures (ps ≥ 0.006).

Joy Faces

Fear postures produced a significant reduction in face categorizations compared to joy postures (p = 0.0044), whereas anger, disgust, and sadness postures did not differ from joy postures (ps ≥ 0.078). There were no significant differences in face categorization reduction between anger, disgust, fear, and sadness postures (ps ≥ 0.0051).

Sadness Faces

Each non-sadness posture resulted in significantly lower face categorizations compared to a sadness posture (ps < 0.0005). Fear postures produced significantly larger reductions in face categorizations than disgust and joy postures (ps < 0.0005). Face categorizations did not differ significantly between anger and fear postures (p = 0.024) or anger, disgust, and joy postures (ps = 0.017).

Face vs. Scene (Neutral Posture)

These comparisons examined differences in face categorizations between congruent and incongruent combinations of faces and scenes when the posture was neutral (see Fig. 3b).

Anger Faces

There were no significant differences in face categorizations between disgust, fear, joy, and sadness scenes and anger scenes (ps ≥ 0.384), nor were there any significant differences in reductions between the non-anger scenes (ps ≥ 0.086).

Disgust Faces

Anger and fear scenes resulted in significant reductions in face categorizations compared to disgust scenes (ps < 0.0003), whereas joy and sadness scenes did not differ from disgust scenes (ps ≥ 0.0097). Reductions in face categorizations did not differ significantly between anger, fear, joy, and sadness scenes (ps ≥ 0.037).

Fear Faces

Anger, disgust, and sadness scenes resulted in significant reductions in face categorizations compared to fear scenes (ps < 0.002), whereas joy scenes did not differ from fear scenes (p = 0.083). Disgust scenes exhibited a significantly larger reduction in face categorizations than joy scenes (p < 0.0005). All other comparisons of non-fear scenes were not statistically significant (ps ≥ 0.029).

Joy Faces

There were no significant differences in face categorizations between anger, disgust, fear, and sadness scenes compared to joy scenes (ps ≥ 0.354), nor were there any significant differences between the non-joy scenes (ps ≥ 0.141).

Sadness Faces

There were no significant differences in face categorizations between anger, disgust, fear, and joy scenes compared to sadness scenes (ps ≥ 0.438), nor were there any significant differences between non-sadness scenes (ps ≥ 0.182).

Interim Summary

Taken together, these results demonstrate that postures (with neutral scenes) overall exert a stronger reduction in face categorizations than scenes (with neutral postures). Additionally, most face-posture combinations resulted in significant reductions in face categorizations whereas reductions for scenes were only observed for disgust and fear faces. Moreover, the influence of posture was stronger for some face-posture combinations than others, whereas scenes (with the exception of a fear face in a disgust scene) did not differ from one another in influence.

Non-face vs. Face

This set of analyses examined whether emotion categorizations matching the posture or the scene differed by face emotion. A 5 (non-face emotion) × 5 (face emotion) × 2 (non-face cue: posture, scene) model predicting non-face categorizations examined the interactive effects of non-face emotion and face emotion by cue type (emotion postures with neutral scenes vs. emotion scenes with neutral postures). Participants’ categorizations matching each posture or scene when combined with a neutral face (e.g., neutral face on an anger posture in a neutral scene; neutral face on a neutral posture in an anger scene) were mean-centered and included as covariates to control for differences in contextualized recognition accuracy of posture and scene emotions at the participant level. As with the analyses of face categorizations, of primary interest was testing the main effect of non-face cue and the interactions of non-face cue with non-face emotion and face emotion. Additionally, analysis of the 3-way interaction allowed us to test differences in the patterns of non-face emotion × face emotion interactions between postures and scenes (e.g., does an anger face influence a disgust posture vs. a disgust scene similarly?).

There were significant main effects of non-face emotion, F(4, 3890) = 21.63, p < 0.001, effect size = 0.02, and face emotion, F(4, 3820) = 10.40, p < 0.001, effect size = 0.01, as well as a significant interaction of non-face emotion × face emotion, F(16, 3820) = 217.42, p < 0.001, effect size = 0.48. Additionally, and of particular interest, there was a significant main effect of non-face cue type, F(1, 3896) = 91.23, p < 0.001, effect size = 0.02, as well as significant interactions of non-face emotion × non-face cue type, F(4, 3892) = 20.65, p < 0.001, effect size = 0.02, and face emotion × non-face cue type, F(4, 3820) = 7.05, p < 0.001, effect size = 0.01, which indicated that postures and scenes exerted differing levels of influence on non-face emotion categorizations and that this differed by non-face emotion and face emotion. Lastly, a significant 3-way non-face emotion × face emotion × non-face cue type interaction, F(16, 3820) = 4.24, p < 0.001, effect size = 0.02, indicated that the non-face emotion × face emotion patterns differed between postures and scenes.

Further examination of the significant main effect of non-face cue revealed that faces produced significantly greater reductions in categorizations matching the scene (M = 0.24) compared to categorizations matching the posture (M = 0.35; p < 0.001). Comparisons within the significant non-face emotion × non-face cue interaction and face emotion × non-face cue interaction indicated that this pattern held for all non-face emotions (ps ≤ 0.0006), except joy (p = 0.27) and sadness (p = 0.87), and all face emotions (ps ≤ 0.033), except fear (p = 0.39).

Bonferroni-corrected comparisons (alpha = 0.005) between face emotions within each non-face emotion were conducted separately for postures and scenes, first comparing the congruent combination to each incongruent combination, and second comparing all incongruent combinations with one another. Mean reductions in categorizations matching the non-face emotion for incongruent compared to congruent pairs are displayed in Fig. 4. Separate narrative descriptions of all pairwise comparisons for each non-face cue are featured below.
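One way to arrive at the corrected threshold is to count the comparisons made within a single non-face emotion. The sketch below assumes a familywise alpha of 0.05, which the text does not state explicitly; the variable names are illustrative.

```python
from math import comb

FAMILYWISE_ALPHA = 0.05  # assumed; only the corrected threshold is reported
n_face_emotions = 5      # anger, disgust, fear, joy, sadness

# Within one non-face emotion: the congruent face vs. each incongruent face,
# plus every pair among the incongruent faces.
congruent_vs_incongruent = n_face_emotions - 1
among_incongruent = comb(n_face_emotions - 1, 2)
n_comparisons = congruent_vs_incongruent + among_incongruent

corrected_alpha = FAMILYWISE_ALPHA / n_comparisons
ci_level = 1 - corrected_alpha  # matching confidence level for error bars

print(n_comparisons, corrected_alpha, ci_level)
```

Under this assumption the count is ten comparisons per non-face emotion, which yields the 0.005 threshold used here and corresponds to the 99.5% confidence intervals shown in Fig. 4.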

Fig. 4.

Estimated marginal means of change in categorizations matching the non-face emotion separated by (a) posture (neutral scene) and (b) scene (neutral posture). Means above ‘0’ indicate that the addition of the face cue increased categorization of the posture (Fig. 4a) or scene (Fig. 4b), whereas means below ‘0’ indicate that the face cue decreased categorization of the posture or scene. The proportion agreement for congruent pairings of faces and non-face cues (e.g., Fig. 4a: anger posture with an anger face; Fig. 4b: anger scene with an anger face) is shown in parentheses. Error bars represent 99.5% confidence intervals (CIs). CIs that do not contain ‘0’ signify that the face emotion paired with the non-face emotion (e.g., disgust face on an anger posture) resulted in a significant change in categorizations matching the non-face emotion compared to the congruent version (e.g., anger face on anger posture) at the p < .005 level. Incongruent emotions whose CIs do not contain the mean of a neighboring non-face emotion have significantly different means at the p < .005 level. For example, a joy face on an anger posture resulted in a significant change in categorizations matching the posture compared to the congruent pairing of M = -.86, p < .005, which was significantly larger than the mean change of a disgust face on an anger posture of M = -.35, p < .005.

Posture vs. Face (Neutral Scene)

These comparisons examined differences in categorizations matching the posture as a function of face emotion when the scene was neutral (see Fig. 4a).

Anger Postures

Disgust, fear, joy, and sadness faces each resulted in significant reductions in posture categorizations compared to angry faces (ps < 0.0005). Joy faces resulted in significantly greater reductions in posture categorizations compared to disgust and sadness faces (ps ≤ 0.00051), but not fear faces (p = 0.018). Fear and sadness faces each produced significantly greater reductions in posture categorizations than disgust faces (ps < 0.0005), though fear and sadness did not differ from one another (p = 0.27).

Disgust Postures

Anger, fear, joy, and sadness faces each resulted in significant reductions in posture categorizations compared to disgust faces (ps < 0.0005). Joy faces resulted in significantly greater reductions in posture categorizations compared to anger faces (p < 0.0005) but did not differ significantly from fear or sadness faces (ps ≥ 0.00054). Fear and sadness faces each produced significantly greater reductions in posture categorizations than anger faces (ps < 0.0005) but did not differ from each other (p = 0.30).

Fear Postures

Anger, disgust, joy, and sadness faces each resulted in significant reductions in posture categorizations compared to fear faces (ps < 0.0005). Anger, disgust, and joy faces each produced significantly greater reductions in posture categorizations than sadness faces (ps < 0.0005) but did not differ from one another (ps ≥ 0.25).

Joy Postures

Anger, disgust, fear, and sadness faces each resulted in significant reductions in posture categorizations compared to joy faces (ps < 0.0005) but did not differ from one another (ps ≥ 0.148).

Sadness Postures

Anger, disgust, fear, and joy faces each resulted in significant reductions in posture categorizations compared to sadness faces (ps < 0.0005). Joy faces resulted in significantly greater reductions in posture categorizations compared to disgust and fear faces (ps ≤ 0.0048). Anger faces also differed significantly from disgust faces (p < 0.0005) but did not differ from joy faces (p = 0.59) or fear faces (p = 0.022). Fear faces did not differ from disgust faces (p = 0.098).

Scene vs. Face (Neutral Posture)

These comparisons examined differences in categorizations matching the scene as a function of face emotion when the posture was neutral (see Fig. 4b).

Anger Scenes

Disgust, fear, joy, and sadness faces each resulted in significant reductions in scene categorizations compared to angry faces (ps < 0.0005). Sadness faces produced significantly greater reductions in scene categorizations in comparison to disgust faces (p = 0.0015) but did not differ from fear or joy faces (ps ≥ 0.32). Disgust, fear, and joy faces did not differ from one another (ps ≥ 0.017).

Disgust Scenes

Anger, fear, joy, and sadness faces each resulted in significant reductions in scene categorizations compared to disgust faces (ps < 0.0005). Joy and sadness faces each produced significantly greater scene categorizations than anger and fear faces (ps < 0.0005) but did not differ significantly from one another (ps ≥ 0.41). Anger and fear faces did not differ significantly from one another (p = 0.28).

Fear Scenes

Anger, disgust, joy, and sadness faces each resulted in significant reductions in scene categorizations compared to fear faces (ps < 0.0005) but did not differ significantly from one another (ps ≥ 0.037).

Joy Scenes

Anger, disgust, fear, and sadness faces each resulted in significant reductions in scene categorizations compared to joy faces (ps < 0.0005) but did not differ from one another (ps ≥ 0.41).

Sadness Scenes

Anger, disgust, fear, and joy faces each resulted in significant reductions in scene categorizations compared to sadness faces (ps < 0.0005). Joy faces produced a significantly greater reduction in scene categorizations than fear faces (p = 0.00037) but did not differ from anger or disgust faces (ps ≥ 0.030). Anger, disgust, and fear faces did not differ significantly (ps ≥ 0.015).

Interim Summary

These results collectively demonstrate that faces exerted a stronger reduction for scene categorizations than posture categorizations. Additionally, all face-posture and face-scene combinations resulted in significant reductions in posture and scene categorizations. However, faces produced differential effects for anger, disgust, fear, and sadness postures, whereas faces only produced differential effects for anger, disgust, and sadness scenes.

Aim 3: Asymmetry Analyses

We examined asymmetrical effects by first calculating, for each participant, difference scores between fully congruent pairings and pairings with incongruent contexts, separately for face-posture pairings (with neutral scenes) and face-scene pairings (with neutral postures) (e.g., anger faces on anger scenes – anger faces on disgust scenes = anger face-disgust scene difference score). Larger difference scores represent a greater net change in face categorizations as a result of the incongruent context. Next, we compared difference scores separately for postures and scenes for every emotion pairing and its inverse (e.g., anger-disgust vs. disgust-anger) to identify asymmetrical effects. Thus, this novel approach calculated difference scores at the participant level.

Bonferroni-corrected paired t-tests (alpha level = 0.005) examined differences in contextual influence between face-context pairs separately for postures and scenes. For postures, asymmetries in contextual influences were observed for pairings of anger-fear vs. fear-anger, anger-joy vs. joy-anger, sadness-fear vs. fear-sadness, and joy-sadness vs. sadness-joy (ps < 0.005; see Fig. 5). However, there were no significant differences in mean reduction of face categorizations for any face-scene pairings (ps ≥ 0.0097).
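The asymmetry procedure described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' analysis code: the data are hypothetical values for four participants, the function names are ours, and only the paired t statistic and its degrees of freedom are computed (a p value would additionally require the t distribution).

```python
from math import sqrt
from statistics import mean, stdev

# Hypothetical per-participant proportions of face categorizations (illustrative only).
# Keys are (face emotion, posture emotion); the scene is neutral throughout.
face_cat = {
    ("anger", "anger"): [1.0, 0.9, 1.0, 0.8],
    ("anger", "fear"): [0.3, 0.2, 0.4, 0.1],
    ("fear", "fear"): [0.9, 1.0, 0.8, 0.9],
    ("fear", "anger"): [0.5, 0.4, 0.6, 0.3],
}


def difference_scores(face, context):
    """Congruent minus incongruent face categorizations, one score per participant."""
    congruent = face_cat[(face, face)]
    incongruent = face_cat[(face, context)]
    return [c - i for c, i in zip(congruent, incongruent)]


def paired_t(x, y):
    """Paired-samples t statistic and degrees of freedom for two matched lists."""
    diffs = [a - b for a, b in zip(x, y)]
    t_value = mean(diffs) / (stdev(diffs) / sqrt(len(diffs)))
    return t_value, len(diffs) - 1


anger_fear = difference_scores("anger", "fear")  # anger face on fear posture
fear_anger = difference_scores("fear", "anger")  # fear face on anger posture
t_stat, df = paired_t(anger_fear, fear_anger)
print(f"t({df}) = {t_stat:.2f}")
```

Because both difference scores come from the same participants, the pairing and its inverse are compared with a paired test rather than an independent-samples test. With five emotions there are ten such pairings per cue type, which would be consistent with the 0.005 threshold if a familywise alpha of 0.05 is assumed.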

Fig. 5.

Mean change in face categorizations for incongruent face-posture pairings with neutral scenes. The first letter in the pair indicates the facial expression and the second letter indicates the posture (A = Anger, D = Disgust, F = Fear, J = Joy, S = Sadness). Scores indicate the mean change in face categorizations from the congruent face-posture pairing (e.g., Anger-Anger) to the incongruent face-posture pairing (e.g., Anger-Disgust). For example, the contextual influence score of the Anger-Disgust (AD) pairing indicates a net change of -.66 in face categorizations compared to the congruent pairing (Anger-Anger). Bonferroni-corrected paired t-tests indicate instances in which one incongruent pairing (e.g., Anger-Fear, M = .72, SE = .06) significantly differed in its change in face categorization from the inverse incongruent pairing (e.g., Fear-Anger, M = .47, SE = .06), p < .001. ‘*’ p < .005, ‘**’ p < .001, ‘***’ p < .0005. Error bars represent ± 1 SE.

In summary, several asymmetries of contextual influence were observed for incongruent face-posture pairings, but none were observed for face-scene pairings.

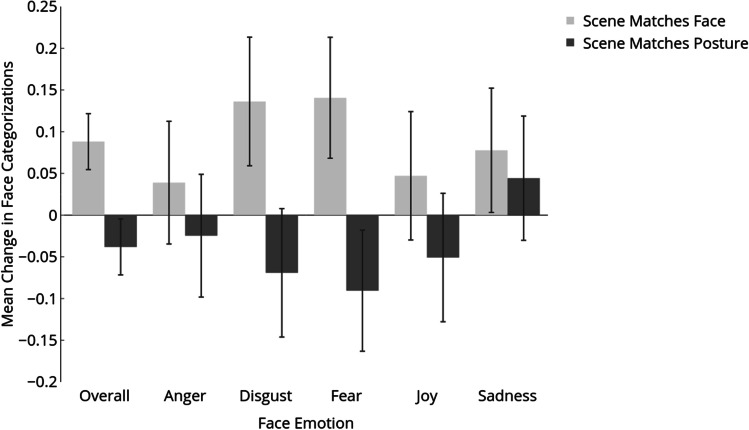

Aim 4: Scene Congruency

This set of analyses examined whether emotion categorizations of the face or the posture in incongruent face-posture pairings was boosted or reduced when combined with scenes congruent with either the face or posture. Analyses were conducted separately for face categorizations and posture categorizations.

Face Categorizations

These analyses examined the effect of scene congruency on face categorizations for incongruent face-posture combinations (e.g., whether a disgust face paired with an anger posture is more or less likely to be categorized as disgust when accompanied by a disgust scene, anger scene, or neutral scene). A 5 (face emotion) × 5 (posture emotion) × 3 (scene congruency: scene matches face, scene is neutral, scene matches posture) model with the constraint that the posture did not match the facial expression examined the interactive effect of scene on face categorizations. Participants’ categorizations of each of the facial expressions of emotion embedded in neutral contexts (e.g., angry face on a neutral posture in a neutral scene) were included as a covariate to control for individual differences in contextualized recognition accuracy between facial expressions at the participant level.

There were significant main effects of face emotion, F(4, 4628) = 116.09, p < 0.001, ηp² = 0.09, and posture emotion, F(4, 4659) = 82.64, p < 0.001, ηp² = 0.07, as well as a significant interaction of face emotion × posture emotion, F(11, 4600) = 31.91, p < 0.001, ηp² = 0.07. Unique to the present analysis, there was a significant main effect of scene congruency, F(2, 4600) = 37.55, p < 0.001, ηp² = 0.02, as well as a significant interaction of face emotion × scene congruency, F(8, 4600) = 3.88, p < 0.001, ηp² = 0.01. The interaction of posture emotion × scene congruency and three-way interaction of face emotion × posture emotion × scene congruency were not significant (ps ≥ 0.184). Follow-up comparisons of the significant main effect of scene congruency examined the boosting or reducing effect of scenes matching the face and scenes matching the posture as compared to neutral scenes. Pairwise comparisons of the significant face emotion × scene congruency interaction examined these differences for each facial expression. The alpha level was Bonferroni-adjusted (alpha = 0.025) to minimize Type I error.

Scenes congruent with the face emotion significantly boosted face categorizations in comparison to neutral scenes (p < 0.0025), whereas scenes congruent with a competing posture emotion significantly reduced face categorizations compared to neutral scenes (p = 0.011; see Fig. 6). The boosting pattern of scenes congruent with the face was characteristic of disgust, fear, and sadness faces (ps ≤ 0.019), but not anger and joy faces (ps ≥ 0.17; see Fig. 6). The reduction pattern of scenes congruent with posture was only characteristic of fear faces (p = 0.005), but not anger, disgust, joy, or sadness faces (ps ≥ 0.045; see Fig. 6).

Fig. 6.

Mean change in face categorizations of faces with scenes matching either the face or posture compared to neutral scenes. For example, images featuring a fear face and a matching fear scene resulted in a significant increase in face categorizations of .14 compared to a fear face in a neutral scene, whereas fear faces featuring scenes matching a competing posture resulted in a significant decrease in face categorizations of -.09 compared to a fear face in a neutral scene. Error bars represent 97.5% Confidence Intervals (CIs). CIs that do not contain ‘0’ indicate a significant change in face categorizations compared to neutral scenes at the p < .025 level.

Posture Categorizations

We next examined the additive effect of scene congruency on categorizations matching the posture for incongruent face-posture combinations (e.g., whether a disgust face paired with an anger posture is more or less likely to be categorized as anger when accompanied by an anger scene, a disgust scene, or a neutral scene). A 5 (posture emotion) × 5 (face emotion) × 3 (scene congruency: scene matches posture, scene is neutral, scene matches face) model with the constraint that the posture did not match the facial expression examined the interactive effect of scene on posture categorizations. Participants’ categorizations of each of the emotion postures embedded in a neutral scene and a neutral face (e.g., neutral face on an angry posture in a neutral scene) were included as a covariate to control for differences in contextualized recognition accuracy between emotion postures at the participant level.

There were significant main effects of posture emotion, F(4, 4657) = 101.36, p < 0.001, ηp² = 0.08, and face emotion, F(4, 4601) = 76.75, p < 0.001, ηp² = 0.06, as well as a significant interaction of posture emotion × face emotion, F(11, 4601) = 35.23, p < 0.001, ηp² = 0.08. Unique to the present analysis, there was a significant main effect of scene congruency, F(2, 4601) = 33.12, p < 0.001, ηp² = 0.01, as well as a significant face emotion × scene congruency interaction, F(8, 4601) = 2.39, p = 0.014, ηp² = 0.004. The interaction of posture emotion × scene congruency and three-way interaction of posture emotion × face emotion × scene congruency were not significant (ps ≥ 0.216). Subsequent pairwise comparisons further examined the significant effect of scene congruency and face emotion × scene congruency interaction, comparing each scene congruent with the posture or face with a neutral scene. The alpha level was Bonferroni-adjusted (alpha = 0.025) to minimize Type I error.

Scenes congruent with the emotion posture significantly increased posture categorizations compared to neutral scenes (p < 0.0025), and scenes congruent with the face significantly reduced posture categorizations (p < 0.0025). The boosting pattern of scenes congruent with the posture emotion was characteristic of disgust and fear faces (ps ≤ 0.004), but not anger, joy, or sadness faces (ps ≥ 0.036). Conversely, reductions in posture categorizations from scenes congruent with the face emotion were characteristic of disgust and fear faces (ps ≤ 0.012), but not anger, joy, or sadness faces (ps ≥ 0.099; see Fig. 7).

Fig. 7.

Mean change in posture categorizations with scenes matching the face or posture compared to neutral scenes. For example, scenes congruent with postures resulted in a significant increase in posture categorizations of .065 compared to a neutral scene, and a scene congruent with the face resulted in a significant decrease in posture categorizations of -.052 compared to a neutral scene. Error bars represent 97.5% CIs. CIs that do not contain ‘0’ indicate a significant change in posture categorizations compared to neutral scenes at the p < .025 level.

In summary, scenes overall boosted a posture’s diminishing effect on face categorizations when congruent with the posture and incongruent with the face, resulting in a corresponding increase in categorizations matching the posture. When congruent with the accompanying face yet incongruent with the posture, scenes had the opposite effect; namely, they increased face categorizations and decreased posture categorizations.

Discussion

The present study teased apart the unique and combinatorial effects of emotion faces, postures, and scenes using a fully crossed design. Below we survey the general pattern of findings and provide considerations for future empirical and theoretical work.

Face (Pre)Dominance

Overall, participants’ categorizations matching the face for fully incongruent FPS combinations significantly exceeded categorizations matching the posture or scene, a pattern that was confirmed even when controlling for individual differences in contextualized recognition accuracy. These findings support our prediction that face categorizations would generally exceed posture categorizations and scene categorizations. Indeed, categorizations matching the posture or scene varied significantly as a function of face emotion and only two face emotion × posture emotion combinations resulted in majority-posture categorizations: anger-disgust and disgust-anger. In contrast to recent theorizing (e.g., Hassin et al., 2013), these results suggest that, overall, participants categorized incongruent face-posture-scene combinations more often according to the face emotion than the posture or scene emotion.

At least three explanations may account for the proclivity for face categorizations. First, the inclusion of a positive emotion (joy) combined with negative emotion cues may have inflated face categorizations due to the difficulty of producing cross-valence categorical changes in emotion perception (Aviezer et al., 2012a; Israelashvili et al., 2019; Gendron et al., 2014). However, face categorizations remained significantly greater than posture and scene categorizations even when omitting joy cues (ps < 0.001; see Supplementary Fig. 2). Second, the large number of face identities used in our study may have decreased direct comparison of faces across trials, thereby deflating the influence of non-facial cues (see Burton, 2013). Lastly, it is possible that not explicitly directing participants to the face decreased the influence of non-face cues, a paradox that has been shown in recent work (see Lecker et al., 2020). However, pilot data from our lab manipulating question type (“categorize the face” vs. “categorize the emotion”) indicated no such differences, making this explanation unlikely.

Postures Produce Categorical Changes in Face Categorizations

Despite producing only a few categorical changes, postures nevertheless exhibited a powerful influence on emotion categorizations. Namely, postures resulted in significant reductions in face categorizations for the majority of face-posture combinations in the study. Interestingly, the only pattern of categorical changes that conformed to the emotion seeds hypothesis (see Aviezer et al., 2008) was for postures combined with disgust faces. These findings coupled with previous research suggest that the emotion seeds hypothesis most consistently describes the independent effects of incongruent postures (alone or with congruent scenes) combined with disgust faces, but likely not with other facial expressions of emotion (see Aviezer et al., 2008, 2012b; Lecker et al., 2020). Such inconsistencies may be due to differences in study design (e.g., one face emotion vs. multiple face emotions), stimuli validation (e.g., FACS-coded facial expressions vs. naturally posed expressions), or potential methodological artifacts (e.g., variability in face identity; Burton, 2013). Future research is needed to address these discrepancies.

Several asymmetrical effects were also observed for emotion postures. Some were novel to the current study (anger-joy vs. joy-anger; joy-sadness vs. sadness-joy) and some confirmed prior research (sadness-fear vs. fear-sadness; Aviezer et al., 2012b). Additionally, some asymmetries found in previous research were not replicated in the present study (e.g., anger-fear vs. fear-anger; Aviezer et al., 2012b; anger-sadness vs. sadness-anger; Mondloch et al., 2013; disgust-fear vs. fear-disgust; Lecker et al., 2020). These results demonstrate the need to further investigate the robustness of asymmetrical categorical changes, including the potential of stimulus-specific effects, and how such changes can be explained by differences in study design (see Lecker et al., 2020) and analytical approach.

Unique Effects of Scenes

Scenes as a non-face cue interacted with faces distinctly from postures in several ways. First, the pattern of categorical change for emotion scenes appeared to be driven by face incongruency rather than perceptual similarity. Specifically, incongruent negative emotion scenes (with neutral postures) produced significant reductions in face categorizations when combined with disgust or fear faces, but these reductions did not differ significantly between scenes. Additionally, there were no asymmetrical effects observed for emotion scenes, suggesting that asymmetries in contextual influence are produced by postures, not scenes. Scenes also demonstrated an enhancing effect when paired with matching faces or postures. Scenes matching the face emotion boosted face categorizations and reduced posture categorizations, whereas scenes matching the posture boosted posture categorizations and reduced face categorizations. These findings expand previous research on additive effects of emotion scene (Reschke et al., 2018) to four other discrete emotions and also demonstrate that scenes congruent with the face attenuate the influence of an incongruent posture. This study adds to a growing body of research expanding our knowledge of the supporting, interactional role scenes play in contextualized emotion perception.

Additional Considerations

This investigation points to several considerations for future research. First, although the stimuli were well-validated, use of less caricatured expressions may more accurately reflect everyday emotion categorization (Carroll & Russell, 1997). Moreover, the fully crossed design may have introduced combinations lacking ecological validity (see Matsumoto & Hwang, 2010; though see Hassin et al., 2013 for a compelling argument against this notion). Future work examining naturally occurring mismatches between face, posture, and scene emotion cues is needed (see Abramson et al., 2017, for an example of naturally occurring mismatches between faces and postures). Second, the use of a set list of discrete emotion choices likely influenced participants’ categorizations of the images (DiGirolamo & Russell, 2017; Russell, 1993). An open-ended “other” option or the use of free-labeling could provide further granularity with which the images can be perceived (Lindquist & Gendron, 2013). Third, it is possible that participants assumed they should use only one source of emotional information (e.g., the face) while ignoring others, or that our choice of labels may have biased participants towards certain cues over others (see Lindquist & Gendron, 2013, for an excellent review on the influence of labels on emotion categorization). Future work examining the stability of and individual differences in cue utilization in emotion perception is needed. Fourth, the current study revealed much lower recognition rates for the contextualized stimuli (e.g., neutral face, sad posture, neutral scene) than for isolated cues (e.g., sad posture, no face, no scene) in previous validation studies. Additional research is needed to determine whether such differences were an artifact of the prompt of our study (i.e., directing participants to the person) or indicative of interactional effects of emotions in context (i.e., participants look to the face more than other cues when categorizing full-bodied emotions in a background scene). Finally, cultural aspects of the observer and the perceived character must be considered. Prior research has documented cross-cultural differences in perception of contextual elements (e.g., Masuda & Nisbett, 2001), interpretation of emotion-related cues (e.g., Masuda et al., 2008; Matsumoto et al., 2012), and normative expressivity of emotion (see Matsumoto et al., 2005). Examining differences in emotion perception as a function of the cultural orientation of the emoter and the observer, as well as how culturally specific socialization practices foster such differences, represents a fascinating line of future research.

Reconceptualizing Emotion Perception

These findings underscore the complexity of the decades-old debate on the importance (or lack thereof) of face and non-face cues in emotion categorization (see Ekman & Friesen, 1967; Mehrabian, 1969; Hassin et al., 2013). Although our results revealed an overall tendency to use the face to categorize emotion, face predominance is not synonymous with resistance to context, nor does contextual influence denote inherent facial ambiguity. Significant reductions in face categorizations were observed across multiple combinations of faces and postures (and not scenes), including several instances where the posture exerted greater influence on emotion categorization than the face. Moreover, scenes, while never independently overpowering face categorizations, played an interactive role in emotion categorizations, largely enhancing or attenuating the effect of postures. Whether non-facial cues within the body (e.g., posture) play a more significant role in producing categorical changes in face categorizations than cues outside the body (e.g., scenes) is a subject worthy of additional research (e.g., Chen & Whitney, 2019).

Recent emotion perception research has emphasized a need to go beyond the face. We favor a more radical shift: moving beyond the search for the “most important” cue. Such an anti-reductionist view is not new (see Lazarus, 1991). Regrettably, paradigms of emotion traditionally seek to parse out or accentuate particular elements at the expense of others. It is imperative that researchers not fall into the trap of seeking the most meaningful element, nor assume that the cues presented are necessarily utilized, or even perceived, by the observer. The multi-faceted, interactional nature of emotion perception necessitates that investigations embrace the nuance and subjectivity of the phenomena of interest.

Acknowledgements

The authors would like to thank Ryan McLean for assisting with the stimuli creation and McKay Morgan for helpful insight into an early version of the manuscript.

Additional Information

Data Availability

The data for these studies are available on OSF: https://osf.io/vqjpc/?view_only=c2c7d08cac9549ba9f4d5b6cdd0c5112.

Ethical Approval

The study was conducted following APA ethical standards and with approval of the Institutional Review Board (IRB) at Brigham Young University (Approval Number X18379, Protocol Title: “Perceiving Emotions in Context”).

Conflicts of Interest

The authors declare that there is no conflict of interest.

Informed Consent

Informed consent was obtained from all research participants.

References

- Abramson, L., Marom, I., Petranker, R., & Aviezer, H. (2017). Is fear in your head? A comparison of instructed and real-life expressions of emotion in the face and body. Emotion, 17(3), 557–565. 10.1037/emo0000252 [DOI] [PubMed]

- Aviezer H, Ensenberg N, Hassin RR. The inherently contextualized nature of facial emotion perception. Current Opinion in Psychology. 2017;17:47–54. doi: 10.1016/j.copsyc.2017.06.006. [DOI] [PubMed] [Google Scholar]

- Aviezer H, Hassin RR, Ryan J, Grady C, Susskind J, Anderson A, …Bentin, S. Angry, disgusted, or afraid? Studies on the malleability of emotion perception. Psychological Science. 2008;19:724–732. doi: 10.1111/j.1467-9280.2008.02148.x. [DOI] [PubMed] [Google Scholar]

- Aviezer H, Trope Y, Todorov A. Body cues, not facial expressions, discriminate between intense positive and negative emotions. Science. 2012;338:1225–1229. doi: 10.1126/science.1224313. [DOI] [PubMed] [Google Scholar]

- Aviezer H, Trope Y, Todorov A. Holistic person processing: Faces with bodies tell the whole story. Journal of Personality and Social Psychology. 2012;103:20–37. doi: 10.1037/a0027411. [DOI] [PubMed] [Google Scholar]

- Barrett LF, Kensinger EA. Context is routinely encoded during emotion perception. Psychological Science. 2010;21:595–599. doi: 10.1177/0956797610363547. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Burton A. Why has research in face recognition progressed so slowly? The importance of variability. The Quarterly Journal of Experimental Psychology. 2013;66:1467–1485. doi: 10.1080/17470218.2013.800125. [DOI] [PubMed] [Google Scholar]

- Carroll JM, Russell JA. Do facial expressions signal specific emotions? Judging emotion from the face in context. Journal of Personality and Social Psychology. 1996;70:205. doi: 10.1037/0022-3514.70.2.205. [DOI] [PubMed] [Google Scholar]

- Carroll JM, Russell JA. Facial expressions in Hollywood’s portrayal of emotion. Journal of Personality and Social Psychology. 1997;72:164–176. doi: 10.1037/0022-3514.72.1.164. [DOI] [PubMed] [Google Scholar]

- Chen Z, Whitney D. Tracking the affective state of unseen persons. Proceedings of the National Academy of Sciences. 2019;116(15):7559–7564. doi: 10.1073/pnas.1812250116. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Civile C, Obhi SS. Towards a mechanistic understanding of the effects of body posture on facial emotion categorization. The American Journal of Psychology. 2015;128:367–377. doi: 10.5406/amerjpsyc.128.3.0367. [DOI] [PubMed] [Google Scholar]

- Conley MI, Dellarco DV, Rubien-Thomas E, Cohen AO, Cervera A, Tottenham N, Casey BJ. The racially diverse affective expression (RADIATE) face stimulus set. Psychiatry Research. 2018;270:1059–1067. doi: 10.1016/j.psychres.2018.04.066. [DOI] [PMC free article] [PubMed] [Google Scholar]

- de Gelder B. Towards the neurobiology of emotional body language. Nature Reviews. Neuroscience. 2006;7:242–249. doi: 10.1038/nrn1872. [DOI] [PubMed] [Google Scholar]

- de Gelder B, Böcker KB, Tuomainen J, Hensen M, Vroomen J. The combined perception of emotion from voice and face: Early interaction revealed by human electric brain responses. Neuroscience Letters. 1999;260:133–136. doi: 10.1016/S0304-3940(98)00963-X. [DOI] [PubMed] [Google Scholar]

- de Gelder B, Vroomen J. The perception of emotions by ear and by eye. Cognition & Emotion. 2000;14:289–311. doi: 10.1080/026999300378824. [DOI] [Google Scholar]

- DiGirolamo MA, Russell JA. The emotion seen in a face can be a methodological artifact: The process of elimination hypothesis. Emotion. 2017;17:538–546. doi: 10.1037/emo0000247. [DOI] [PubMed] [Google Scholar]

- Ekman P. Facial expression and emotion. American Psychologist. 1993;48:384–392. doi: 10.1037/0003-066x.48.4.384. [DOI] [PubMed] [Google Scholar]

- Ekman P, Friesen WV. Head and body cues in the judgment of emotion: A reformulation. Perceptual and Motor Skills. 1967;24(3):711–724. doi: 10.2466/pms.1967.24.3.711. [DOI] [PubMed] [Google Scholar]

- Ekman P, Friesen W, Ellsworth P. The face and emotion. Pergamon; 1972. [Google Scholar]

- Ethofer, T., Anders, S., Erb, M., Droll, C., Royen, L., Saur, R., ... & Wildgruber, D. (2006). Impact of voice on emotional judgment of faces: An event‐related fMRI study. Human Brain Mapping, 27, 707-714. 10.1002/hbm.20212 [DOI] [PMC free article] [PubMed]

- Gendron M, Roberson D, van der Vyver JM, Barrett LF. Cultural relativity in perceiving emotion from vocalizations. Psychological Science. 2014;25(4):911–920.

- Hassin RR, Aviezer H, Bentin S. Inherently ambiguous: Facial expressions of emotions, in context. Emotion Review. 2013;5:60–65. doi: 10.1177/1754073912451331.

- Hess U, Dietrich J, Kafetsios K, Elkabetz S, Hareli S. The bidirectional influence of emotion expressions and context: Emotion expressions, situational information and real-world knowledge combine to inform observers’ judgments of both the emotion expressions and the situation. Cognition and Emotion. 2020;34(3):539–552. doi: 10.1080/02699931.2019.1651252.

- Israelashvili J, Hassin RR, Aviezer H. When emotions run high: A critical role for context in the unfolding of dynamic, real-life facial affect. Emotion. 2019;19(3):558–562. doi: 10.1037/emo0000441.

- Izard CE. Innate and universal facial expressions: Evidence from developmental and cross-cultural research. Psychological Bulletin. 1994;115:288–299. doi: 10.1037/0033-2909.115.2.288.

- Kret ME, de Gelder B. Social context influences recognition of bodily expressions. Experimental Brain Research. 2010;203:169–180. doi: 10.1007/s00221-010-2220-8.

- Lazarus RS. Emotion and adaptation. Oxford University Press; 1991.

- Lecker M, Dotsch R, Bijlstra G, Aviezer H. Bidirectional contextual influence between faces and bodies in emotion perception. Emotion. 2020;20(7):1154–1164. doi: 10.1037/emo0000619.

- Lindquist KA, Gendron M. What’s in a word? Language constructs emotion perception. Emotion Review. 2013;5:66–71. doi: 10.1177/1754073912451351.

- Lopez LD, Reschke PJ, Knothe JM, Walle EA. Postural communication of emotion: Perception of distinct poses of five discrete emotions. Frontiers in Psychology. 2017;8:710. doi: 10.3389/fpsyg.2017.00710.

- Masuda T, Ellsworth PC, Mesquita B, Leu J, Tanida S, Van de Veerdonk E. Placing the face in context: Cultural differences in the perception of facial emotion. Journal of Personality and Social Psychology. 2008;94:365–381. doi: 10.1037/0022-3514.94.3.365.

- Masuda T, Nisbett RE. Attending holistically versus analytically: Comparing the context sensitivity of Japanese and Americans. Journal of Personality and Social Psychology. 2001;81:922–934. doi: 10.1037/0022-3514.81.5.922.

- Matsumoto D, Yoo SH, Hirayama S, Petrova G. Development and validation of a measure of display rule knowledge: The display rule assessment inventory. Emotion. 2005;5:23–40. doi: 10.1037/1528-3542.5.1.23.

- Matsumoto D, Hwang H. Judging faces in context. Social and Personality Psychology Compass. 2010;4:393–402. doi: 10.1111/j.1751-9004.2010.00271.x.

- Matsumoto D, Hwang HS, Yamada H. Cultural differences in the relative contributions of face and context to judgments of emotions. Journal of Cross-Cultural Psychology. 2012;43:198–218. doi: 10.1177/0022022110387426.

- Meeren HK, van Heijnsbergen CC, de Gelder B. Rapid perceptual integration of facial expression and emotional body language. Proceedings of the National Academy of Sciences of the United States of America. 2005;102:16518–16523. doi: 10.1073/pnas.0507650102.

- Mehrabian A. Significance of posture and position in the communication of attitude and status relationships. Psychological Bulletin. 1969;71(5):359–372. doi: 10.1037/h0027349.

- Mondloch CJ, Nelson NL, Horner M. Asymmetries of influence: Differential effects of body postures on perceptions of emotional facial expressions. PLoS ONE. 2013;8:e73605. doi: 10.1371/journal.pone.0073605.

- Ngo N, Isaacowitz DM. Use of context in emotion perception: The role of top-down control, cue type, and perceiver’s age. Emotion. 2015;15:292–302. doi: 10.1037/emo0000062.

- Reschke PJ, Knothe JM, Lopez LD, Walle EA. Putting “context” in context: The effects of body posture and emotion scene on adult categorizations of disgust facial expressions. Emotion. 2018;18:153–158. doi: 10.1037/emo0000350.

- Righart R, de Gelder B. Recognition of facial expressions is influenced by emotional scene gist. Cognitive, Affective, & Behavioral Neuroscience. 2008;8:264–272. doi: 10.3758/CABN.8.3.264.

- Russell JA. Forced-choice response format in the study of facial expression. Motivation and Emotion. 1993;17:41–51. doi: 10.1007/BF00995206.

- Russell JA, Weiss A, Mendelsohn GA. Affect grid: A single-item scale of pleasure and arousal. Journal of Personality and Social Psychology. 1989;57:493–502. doi: 10.1037/0022-3514.57.3.493.

- Schneider BA, Avivi-Reich M, Mozuraitis M. A cautionary note on the use of the Analysis of Covariance (ANCOVA) in classification designs with and without within-subject factors. Frontiers in Psychology. 2015;6:474. doi: 10.3389/fpsyg.2015.00474.

- Tottenham N, Tanaka JW, Leon AC, McCarry T, Nurse M, Hare TA, … Nelson C. The NimStim set of facial expressions: Judgments from untrained research participants. Psychiatry Research. 2009;168:242–249. doi: 10.1016/j.psychres.2008.05.006.

- Van den Stock JV, Righart R, de Gelder B. Body expressions influence recognition of emotions in the face and voice. Emotion. 2007;7:487–494. doi: 10.1037/1528-3542.7.3.487.

- Van den Stock JV, Vandenbulcke M, Sinke C, de Gelder B. Affective scenes influence fear perception of individual body expressions. Human Brain Mapping. 2014;35:492–502. doi: 10.1002/hbm.22195.

- Wilcox RR. New designs in analysis of variance. Annual Review of Psychology. 1987;38:29–60. doi: 10.1146/annurev.ps.38.020187.000333.

Data Availability Statement

The data for these studies are available on OSF: https://osf.io/vqjpc/?view_only=c2c7d08cac9549ba9f4d5b6cdd0c5112.