Abstract

Enhancing cognitive function through mentally challenging exercises (“brain training”) or non-invasive brain stimulation (NIBS) is an enticing yet controversial prospect. Although use of these methods is increasing rapidly, their effectiveness remains questionable. Notably, cognitive enhancement studies have typically failed to consider participants’ expectations. However, high expectations could easily make brain-training approaches appear more effective than they actually are. We addressed this major gap in the literature by assessing the perceived effectiveness of brain training and NIBS in a series of surveys. Our results suggest that people are optimistic about the possibilities of cognitive enhancement, particularly through brain training. Moreover, reading a brief message implying high or low effectiveness of such methods can raise or lower expectations, respectively, suggesting that perceptions of brain training are malleable – at least in the short term. Measuring expectations in brain training and NIBS is important for determining whether these cognitive enhancement methods are truly effective.

Keywords: Brain training, Cognitive enhancement, Demand characteristics, Expectation, Intervention design, Non-invasive brain stimulation, Placebo effect

Introduction

Cognitive enhancement is a tantalizing prospect. Techniques such as “brain training” (i.e., mentally challenging computer exercises (Rabipour & Raz, 2012)) and non-invasive brain stimulation (NIBS; i.e., electrical stimulation through the scalp using Transcranial Magnetic Stimulation, Direct Current Stimulation, or related techniques (Miniussi, Harris, & Ruzzoli, 2013)) have recently emerged to improve or maintain cognitive functioning. These methods are exciting to many researchers and clinicians, and are gaining popularity among consumers: Market research suggests that digital health applications, consisting largely of brain training games, are worth over $1 billion in the United States and will grow rapidly (SharpBrains, 2014). Brain stimulation has similarly inspired a booming industry and a growing do-it-yourself (DIY) user community (“Hacking your brain,” 2015) despite concerns over safety and regulation (Carter & Forte, 2016; Farah, 2015; Jwa, 2015).

Determining whether these products actually work is challenging (Moreau, Kirk, & Waldie, 2016; Simons et al., 2016). Crucially, psychological factors – including a person’s motivations for pursuing cognitive enhancement, prior experience with such products, expectations of outcomes, and subjective assessment of performance – can influence the apparent effectiveness of cognitive enhancement via biased sampling, placebo effects, or Hawthorne-like effects (Gross, 2017; Schambra, Bikson, Wager, DosSantos, & DaSilva, 2014; Schwarz, Pfister, & Buchel, 2016). The few studies that have broached these problems suggest they can be substantial (Boot, Simons, Stothart, & Stutts, 2013; Foroughi, Monfort, Paczynski, McKnight, & Greenwood, 2016).

Given the potential influence of expectations on brain training and stimulation trials, understanding how people perceive these cognitive enhancement methods is crucial. Previously, we found that people generally believe brain training to be effective (Rabipour & Davidson, 2015; see Torous, Staples, Fenstermacher, Dean, & Keshavan, 2016 for similar findings). Interestingly, older adults (OA) appeared to be more optimistic than young adults (YA). Here we sought to replicate and extend our previous work by assessing perceptions of brain training and NIBS. We further sought to determine whether information implying high or low program effectiveness could measurably change people’s expectations compared to their prior beliefs. Finally, we also examined whether certain individual characteristics might be associated with expectations. Because older adults are prominent targets of the cognitive enhancement industries (e.g., Lumos Labs Inc., 2017; Nintendo DS, 2017; Posit Science Inc., 2017), we paid particular attention to age.

Materials and Methods

Experimental Design

Study 1: Expectations of Brain Training

We surveyed people about their expectations of brain training in two separate studies, using the Expectation Assessment Scale (Rabipour & Davidson, 2015; Rabipour, Davidson, & Kristjansson, In preparation). On a scale from 1-7 (1 = lowest expectation; 4 = no expectation/neutral; 7 = highest expectation), we asked responders to rate how successful they expected “computerized cognitive training” or “non-invasive brain stimulation” to be at improving various cognitive domains. This survey reflects the degree to which people might be influenced by readily available and highly propagated information about brain training, at least in the short term.

Study 1a

We surveyed 110 people at baseline (53 women, one participant excluded due to incomplete responses; Table 1A). We recruited young adults from the student subject pool at Florida State University and older adults from the community in Tallahassee, Florida. Whereas the YA were recruited only to complete the survey in exchange for course credit, the OA participated in the context of a brain training intervention (Souders et al., 2017) and received monetary compensation (100 USD).

Table 1.

| | Group | Age | Years of Education | n Women |
|---|---|---|---|---|
| A) | YA (n=50) | 19.58 (±1.23) | 13.60 (±0.90) | 28 |
| | OA (n=59) | 72.35 (±5.20) | 16.47 (±1.50) | 25 |
| B) | YA (n=110) | 26.99 (±1.23) | 15.39 (±2.19) | 65 |
| | MA (n=54) | 45.63 (±7.14) | 15.70 (±1.88) | 35 |
| | OA (n=93) | 68.22 (±5.90) | 15.15 (±1.98) | 70 |

Study 1b

We surveyed an additional 263 people (175 women, six participants dropped due to incomplete responses; Table 1B) about their expectations, first at baseline and then again after reading two messages in counterbalanced order: (i) a message implying that brain training is highly effective (High Expectation condition) and (ii) another implying that brain training is ineffective (Low Expectation condition; for greater detail, see Rabipour & Davidson, 2015). Asking participants to respond to the same questions under different conditions enables an examination of the extent to which expectation ratings may change, at least in the short term, based on information received. As a buffer between the High and Low Expectation conditions, we also asked participants to rate their expectations after reading a third “neutral” message with no implication of effectiveness, directly after responding to the first expectation condition they received. Response patterns under this neutral condition were consistent with those of the preceding condition received. We therefore did not include responses from the neutral condition in our analyses.
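As an illustration only, the block order described above (baseline, first expectation message, neutral buffer, second expectation message) can be sketched in Python. The function names, the seeded shuffle, and the equal split between the two orders are assumptions made for this sketch; the text does not specify how participants were assigned to an order.

```python
import random


def block_sequence(high_first):
    """Survey blocks for one participant, in the order they are presented."""
    first, second = ("high", "low") if high_first else ("low", "high")
    # The neutral message acts as a buffer between the two expectation messages.
    return ["baseline", first, "neutral", second]


def assign_orders(participant_ids, seed=0):
    """Hypothetical counterbalancing: shuffle, then give half of the sample each order."""
    rng = random.Random(seed)
    ids = list(participant_ids)
    rng.shuffle(ids)
    half = len(ids) // 2
    return {pid: block_sequence(i < half) for i, pid in enumerate(ids)}


orders = assign_orders(range(10))
print(orders[0])
```

Only the baseline, High, and Low blocks would enter the analysis; the neutral block is kept in the sequence because it is part of what participants experienced.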

Participants were recruited from undergraduate psychology courses at the University of Ottawa through ads and flyers, and via web-based recruitment, including Amazon Mechanical Turk and Qualtrics.

Study 2: Expectations of Non-Invasive Brain Stimulation

We surveyed 516 people about their expectations of NIBS (272 women, 88 participants did not report age or sex; Table 2) using a modified version of the survey in Study 1. We defined NIBS as “a form of brain stimulation that delivers weak currents through the surface of the scalp over a brain region, aiming to improve cognitive or motor function.” We recruited young and middle-aged adults using the same methods as in Study 1b. We excluded the concentration domain from our analyses due to a low number of responses.

Table 2.

Participant demographics for Study 2

| Group | Age | Years of Education | n Women |
|---|---|---|---|
| YA (n=300) | 23.19 (±5.39) | 14.11 (±4.72) | 190 |
| MA (n=50) | 45.28 (±6.70) | 15.10 (±2.48) | 31 |
| OA (n=78) | 66.58 (±5.34) | 14.64 (±2.50) | 51 |

Statistical Analysis

Our analyses included chi-square tests, t-tests, and repeated-measures analysis of variance (ANOVA), comparing responses for each expectation condition (baseline, high, low) and cognitive domain (general cognitive function, memory, concentration, distractibility, reasoning ability, multitasking ability, performance in everyday activities), across age (YA, MA, OA) and the order in which the expectation conditions were delivered. We applied Greenhouse-Geisser corrections for sphericity where applicable. We report the results of multiple t-tests with alpha levels adjusted using the Holm-Bonferroni approach, to account for unequal sample sizes. Following ANOVA, we computed post-hoc analyses with unadjusted alpha levels.
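For readers unfamiliar with the Holm-Bonferroni approach, the step-down procedure can be sketched as follows. This is a minimal Python illustration of the standard procedure, not the analysis code used in the study; the function name and the example p-values are hypothetical.

```python
def holm_bonferroni(p_values, alpha=0.05):
    """Return a list of booleans: True where the null hypothesis is rejected."""
    m = len(p_values)
    # Visit the p-values from smallest to largest, remembering original positions.
    order = sorted(range(m), key=lambda i: p_values[i])
    reject = [False] * m
    for rank, idx in enumerate(order):
        # The k-th smallest p-value (rank = k - 1) is tested against alpha / (m - k + 1).
        if p_values[idx] <= alpha / (m - rank):
            reject[idx] = True
        else:
            break  # once one test fails, all larger p-values are retained
    return reject


# Hypothetical p-values for four comparisons, not values from the study.
print(holm_bonferroni([0.001, 0.04, 0.03, 0.005]))
```

With four comparisons the thresholds are 0.0125, 0.0167, 0.025, and 0.05, so a p-value of 0.03 in third position is not significant even though it is below the unadjusted 0.05 level.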

Results

Study 1: Expectations of Brain Training

Study 1a: People are Optimistic About Brain Training at Baseline

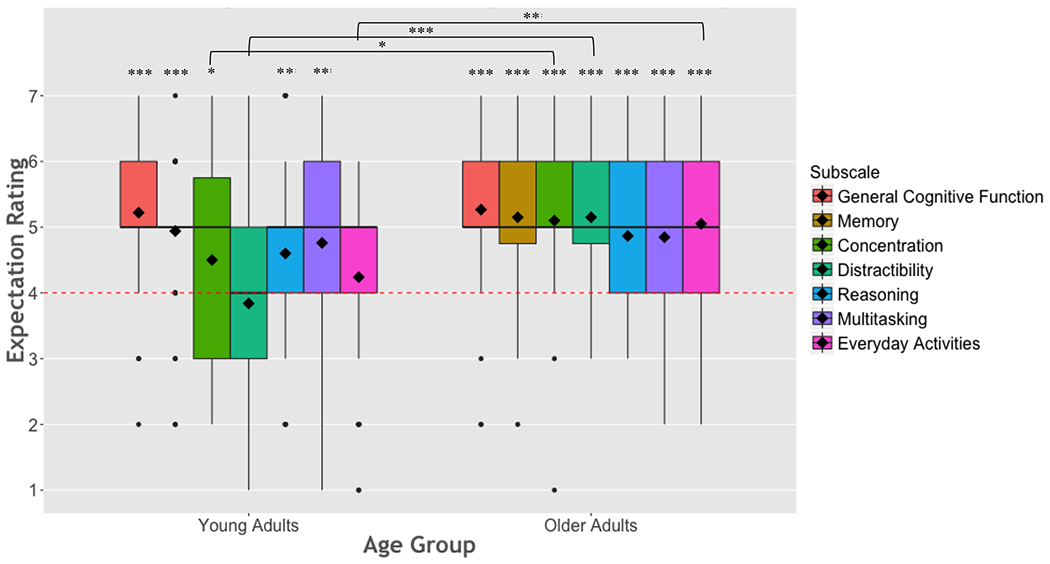

Given the possibility of OA having higher expectations than YA (Rabipour & Davidson, 2015) we analyzed age groups separately. Overall, both age groups were optimistic (Fig. 1). Whereas OA rated their expectations significantly higher than neutral for all cognitive domains (t58≥6.028, p<0.0001, Cohen’s d≥1.58), YA were optimistic (i.e., ratings higher than neutral) about general cognitive function (t49=9.984, p<0.0001, Cohen’s d=2.85, CI=0.97-1.47), memory (t49=6.95, p<0.0001, Cohen’s d=1.99, CI=0.67-1.21), concentration (t49=2.519, p=0.015, Cohen’s d=0.72, CI=0.10-0.90), reasoning ability (t49=3.096, p=0.003, Cohen’s d=0.88, CI=0.21-0.99), and multitasking (t49=3.569, p=0.001, Cohen’s d=1.02, CI=0.33-1.19), but not for distractibility and performance in everyday activities.
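The comparison against the neutral point can be sketched as a one-sample t-test on the 1-7 ratings. The sketch below is an illustration under stated assumptions: the ratings are hypothetical, and the conversion d = 2t/√df is an assumption. The reported effect sizes are numerically consistent with that conversion (for example, t49=9.984 gives d≈2.85), but the text does not state which formula was used.

```python
import math
from statistics import mean, stdev

NEUTRAL = 4  # neutral point of the 1-7 expectation scale


def one_sample_t(ratings, mu=NEUTRAL):
    """Return (t, df) for a one-sample t-test of the mean rating against mu."""
    n = len(ratings)
    standard_error = stdev(ratings) / math.sqrt(n)
    return (mean(ratings) - mu) / standard_error, n - 1


def d_from_t(t, df):
    """Convert t to Cohen's d via d = 2t / sqrt(df) (assumed conversion)."""
    return 2 * t / math.sqrt(df)


# Hypothetical ratings, not data from the study.
ratings = [5, 5, 6, 4, 5, 6, 5, 4]
t, df = one_sample_t(ratings)
print(f"t({df}) = {t:.3f}, d = {d_from_t(t, df):.2f}")
```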

Fig. 1.

Expectation ratings in young and older adults at baseline. Whereas ratings generally varied between 4 and 5 in young adults, the distribution of responses surrounded 5 / “somewhat successful” in older adults. Dashed lines are drawn across the neutral point (rating of 4) on the survey scale. Bold lines represent the group medians; diamonds represent the group means. *p < .05. **p < .01. ***p < .001.

Repeated measures analysis of variance (ANOVA) directly comparing age groups (YA versus OA) across the seven cognitive domains revealed a significant main effect of age (F1,107=8.414, ηp2=0.073, p=0.005), cognitive domain (F5.15,550.85=9.467, ηp2=0.081, p<0.0001), and interaction (F5.15,550.85=8.019, ηp2=0.070, p<0.0001). Further investigation of these interactions revealed that, compared to YA, OA reported significantly higher expectations of improvement in distractibility (t86.02=5.601, p<0.0001, Cohen’s d=1.08, CI=0.847-1.778) and performance in everyday activities (t107=3.45, p=0.001, Cohen’s d=0.66, CI=0.345-1.277) following cognitive training.
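For readers who wish to check the reported effect sizes, partial eta squared can be recovered from an F statistic and its degrees of freedom using a standard identity. The worked value below uses the main effect of age reported above; that the effect size was originally computed this way is an assumption.

```latex
\eta_p^2 = \frac{F \cdot df_{1}}{F \cdot df_{1} + df_{2}}
\qquad\Rightarrow\qquad
\eta_p^2 = \frac{8.414 \times 1}{8.414 \times 1 + 107} \approx 0.073
```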

Notably, at least 92% of participants reported being confident in their expectations of each cognitive domain at baseline. Age differences in reported confidence were not significant after correcting for multiple comparisons. Similarly, we did not find significant differences in the baseline ratings of YA, MA, or OA based on reported confidence.

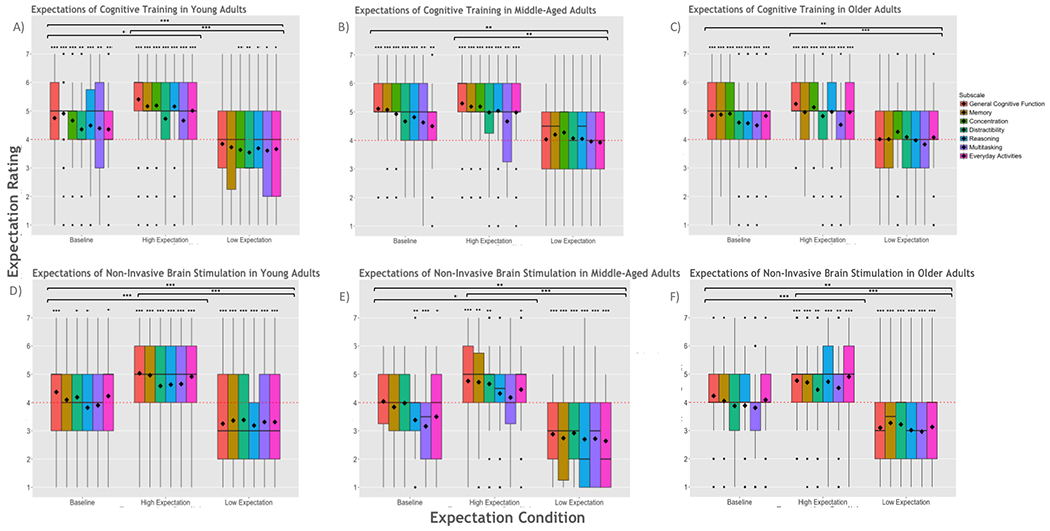

Study 1b: Expectations of Brain Training Are Malleable

Expectations were similarly optimistic at baseline for all cognitive domains in YA (t109≥2.685, p≤0.008, Cohen’s d≥0.51; Fig. 2A), MA (t53≥2.709, p≤0.009, Cohen’s d≥0.74; Fig. 2B), and OA (t92≥3.964, p<0.0001, Cohen’s d≥0.83; Fig. 2C) who completed the questionnaire under the three different conditions. Ratings were significantly above neutral under the High Expectation condition for all cognitive domains in YA (t109≥5.03, p<0.0001, Cohen’s d≥0.96), MA (t53≥3.37, p≤0.001, Cohen’s d≥0.93), and OA (t93≥4.448, p<0.0001, Cohen’s d≥0.92). Under the Low Expectation condition, ratings were significantly below neutral for concentration (M=3.64, SD=1.42, t109=2.688, p=0.008, Cohen’s d=0.51, CI=0.10-0.63) and distractibility (M=3.55, SD=1.43, t109=3.331, p=0.001, Cohen’s d=0.64, CI=0.18-0.73) in YA. In MA and OA, ratings under the Low Expectation condition were not significantly different from neutral.

Fig. 2.

Expectations of computerized cognitive training and non-invasive brain stimulation across conditions in (A,D) young (B,E) middle-aged and (C,F) older adults. Expectation ratings consistently increase relative to baseline in the High Expectation condition and decrease relative to baseline in the Low Expectation condition. Dashed lines are drawn across the neutral point (rating of 4). Bold lines represent the group medians; diamonds represent the group means. *p < .05. **p < .01. ***p < .001.

The majority of participants were confident in their expectations for each cognitive domain at baseline (≥86%), and under the High (≥86%) and Low (≥83%) Expectation conditions. Under the Low Expectation condition, a greater percentage of OA (86/93=92%) reported confidence in their expectations for general cognitive function compared to YA (84/110=76%, X2=9.607, p=0.002) and MA (42/54=78%, X2=6.555, p=0.01). Age differences in reported confidence were not significant at baseline or under the High Expectation condition after correcting for multiple comparisons.
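The group comparisons of confidence above can be checked from the reported counts. The following Python sketch computes a Pearson chi-square for a 2×2 table without continuity correction; the choice of an uncorrected test is an assumption, made because it reproduces the reported statistics. The function names are illustrative.

```python
import math


def pearson_chi2_2x2(a, b, c, d):
    """Pearson chi-square (no continuity correction) for the table [[a, b], [c, d]]."""
    n = a + b + c + d
    return n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))


def chi2_p_value_df1(chi2):
    """Upper-tail p-value of a chi-square statistic with 1 degree of freedom."""
    return math.erfc(math.sqrt(chi2 / 2))


# OA: 86 of 93 confident; YA: 84 of 110 confident (counts from the text).
chi2 = pearson_chi2_2x2(86, 93 - 86, 84, 110 - 84)
print(round(chi2, 3), round(chi2_p_value_df1(chi2), 3))
```

Run on the OA versus YA counts, this yields 9.607 with p≈0.002, matching the values reported above; the OA versus MA counts (86/93 and 42/54) give 6.555.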

Repeated measures ANOVA comparing age groups and the order in which participants read the expectation messages across the seven cognitive domains, under the three expectation conditions revealed a significant 3-way interaction between age, order, and condition (F3.54,444.64=4.062, ηp2=0.31, p=0.005). Further investigation of this effect by age revealed, in YA, a main effect of condition (F1.65,178=85.724, ηp2=0.443, p<0.0001), cognitive domain (F4.76,513.74=5.95, ηp2=0.052, p<0.0001), and an interaction between domain and order (F4.76,513.74=4.948, ηp2=0.044, p<0.0001), condition and order (F1.65,178=4.628, ηp2=0.041, p=0.016), as well as condition and domain (F9.08,980.72=3.255, ηp2=0.029, p=0.001; Fig. 2A).

Specifically, ratings of YA significantly increased relative to baseline for all cognitive domains under the High Expectation condition (t109≥ 2.348, p≤0.021, Cohen’s d≥0.22) and decreased relative to baseline under the Low Expectation condition (t109≥ 4.79, p<0.0001, Cohen’s d≥0.47). Moreover, YA who first received the High Expectation condition rated higher expectations of general cognitive function (M1=5.65±0.84, M2=5.05±0.34, t108=2.52, p=0.015, Cohen’s d=0.93, CI=0.122-1.078) after reading the High Expectation message, and lower expectations of multitasking function after reading the Low Expectation message (M1=3.36±1.42, M2=4.08±1.85, t108=2.267, p=0.025, Cohen’s d=0.44, CI=0.09-1.346) compared to those who first received the Low Expectation condition, respectively.

In MA (Fig. 2B) and OA (Fig. 2C), responses showed a main effect of condition (MA: F1.65,85.82=23.117, ηp2=0.308, p<0.0001; OA: F1.93,175.95=40.604, ηp2=0.309, p<0.0001), cognitive domain (MA: F4.07,211.45=4.001, ηp2=0.071, p=0.004; OA: F4.61,419.46=9.501, ηp2=0.095, p<0.0001), and an interaction between condition and order (MA: F1.65, 85.82=3.78, ηp2=0.068, p=0.034; OA: F1.93,175.95=9.528, ηp2=0.095, p<0.0001). Responses of MA also demonstrated an interaction between condition and domain (F8.53,443.39=2.15, ηp2=0.04, p=0.027). Relative to baseline, MA expectation ratings significantly increased under the High Expectation condition for performance in everyday activities (Mbaseline=4.5±1.36, MHigh=4.98± 1.34, t53=2.854, p=0.006, Cohen’s d=0.39, CI=0.143-0.82), and decreased significantly under the Low Expectation condition for all cognitive domains (t53≥ 3.287, p≤0.002, Cohen’s d≥0.46). MA who received the High Expectation condition first rated higher expectations of general cognitive function after reading the High Expectation message (M1=5.67±0.92, M2=4.93±1.49, t52=2.196, p=0.033, Cohen’s d=0.60, CI=0.064-1.418).

In OA, ratings significantly increased relative to baseline under the High Expectation condition for general cognitive function (Mbaseline=4.85±1.22, MHigh=5.25±1.04, t92=2.907, p=0.005, Cohen’s d=0.31, CI=0.126-0.67), and decreased significantly under the Low Expectation condition for all cognitive domains (t92≥ 3.101, p≤0.003, Cohen’s d≥0.49). OA who first received the High Expectation condition rated higher expectations for general cognitive function, memory, concentration, reasoning ability, multitasking ability, and performance in everyday activities (t91≥ 2.22, p≤0.029, Cohen’s d≥0.46) after reading the High Expectation message, and higher expectations for all cognitive domains after reading the Low Expectation message (t91≥3.023, p≤0.003, Cohen’s d≥0.63).

We found significant differences based on order in baseline ratings of distractibility and multitasking in YA, and of reasoning ability in OA. Because baseline ratings were collected before participants read any expectation message, these differences likely reflect chance rather than our experimental manipulation.

We further probed the degree to which participants believed the expectation messages were convincing, and whether the messages were persuasive enough to change their initial expectations for the general cognitive function domain (Table 3). The majority of participants (72%) believed the High Expectation condition was convincing, with 58% reporting that the message was persuasive enough to change their initial perceptions. Similarly, 62% of participants believed the Low Expectation condition was convincing, with 56% reporting that the message was persuasive enough to change their initial perceptions. However, the information in the Low Expectation condition was less compelling to OA, who were significantly less swayed by this pessimistic message (41/93=44%) than YA (71/110=65%; X2=8.529, p=0.003). Ratings of convincingness were significantly higher under the High compared to the Low Expectation condition (F1,251=7.498, ηp2=0.029, p=0.007), and significantly interacted with the order in which participants received the High and Low Expectation conditions (F1,251=5.763, ηp2=0.022, p=0.017). Specifically, participants who first received the High Expectation condition were more convinced by the High Expectation message, compared to participants who first received the Low Expectation condition (M1=5.22±1.23, M2=4.61±1.60, t190.12=3.33, p=0.001, Cohen’s d=0.43, CI=0.25-0.975).

Table 3.

Percentage of participants who reported that the High and Low Expectation messages were convincing and persuasive enough to change their initial perceptions, respectively, based on the order in which they received each condition

| Group | High first, High message: Convincing | High first, High message: Persuasive | High first, Low message: Convincing | High first, Low message: Persuasive | Low first, High message: Convincing | Low first, High message: Persuasive | Low first, Low message: Convincing | Low first, Low message: Persuasive |
|---|---|---|---|---|---|---|---|---|
| YA | 83 | 64 | 68 | 68 | 52 | 53 | 63 | 58 |
| MA | 85 | 59 | 74 | 59 | 52 | 52 | 59 | 63 |
| OA | 82 | 57 | 53 | 41 | 57 | 57 | 52 | 48 |

With regard to intervention schedule, participants largely believed cognitive training at higher frequency with lower session duration (i.e., 30 minutes, three times per week) to be most effective (65%) and convenient (59%).

Individual Differences in Expectations of Brain Training at Baseline

Based on our previous study (Rabipour & Davidson, 2015), we examined individual characteristics hypothesized to correlate with expectations of cognitive training at baseline. Nearly all participants (90%) reported having at least some exposure to cognitive training, either through media advertisements (31%), general knowledge of different programs or practices (59%), or personal experience (18%). Participants with at least some knowledge of different programs or practices reported higher expectations of general cognitive function (M1=5.05±1.10, M2=4.57±1.30, t201.82=3.13, p=0.002, Cohen’s d=0.25, CI=0.178-0.785), memory (M1=5.12±1.01, M2=4.64±1.17, t253=3.460, p=0.001, Cohen’s d=0.44, CI=0.205-0.745), concentration (M1=4.99±1.12, M2=4.51±1.20, t253=3.269, p=0.001, Cohen’s d=0.41, CI=0.19-0.765), and reasoning ability (M1=4.78±1.24, M2=4.28±1.34, t253=3.081, p=0.002, Cohen’s d=0.39, CI=0.065-0.776), compared to those with no knowledge, respectively. Baseline ratings did not significantly differ based on exposure to media advertisements or personal experience.

Finally, participants with confidence in their ratings at baseline reported higher expectations of memory (M1=5.00±1.08, M2=4.32±1.22, t242=3.059, p=0.002, Cohen’s d=0.59, CI=0.240-1.108), concentration (M1=4.92±1.14, M2=4.03±1.21, t242=4.127, p<0.0001, Cohen’s d=0.76, CI=0.465-1.314), and reasoning ability (M1=4.66±1.29, M2=3.83±1.34, t242=2.980, p=0.003, Cohen’s d=0.63, CI=0.282-1.379). Baseline ratings did not significantly differ based on sex, country of residence, country in which participants grew up, education level, computer exposure, programming experience, medications taken, or concern over declining mental status.

Study 2: People are Uncertain About the Effects of Non-Invasive Brain Stimulation

Participants were uncertain about the effectiveness of non-invasive brain stimulation at baseline, with ratings near the neutral point. After Holm-Bonferroni corrections for multiple comparisons, baseline ratings in YA were significantly above the neutral point only for general cognitive function (M=4.37±1.33, t303=4.844, p<0.0001, Cohen’s d=0.56, CI=0.22-0.52), but no different from neutral for memory, distractibility, reasoning ability, multitasking ability, and performance in everyday activities (Fig. 2D). In MA, baseline ratings were, surprisingly, significantly below the neutral point for multitasking ability (M=3.16±1.45, t49=4.102, p<0.0001, Cohen’s d=1.17, CI=0.43-1.25) and reasoning ability (M=3.38±1.38, t49=3.169, p=0.003, Cohen’s d=0.91, CI=0.23-1.01), and no different from neutral for general cognitive function, memory, distractibility, and performance in everyday activities after correcting for multiple comparisons (Fig. 2E). Baseline ratings in OA did not significantly differ from neutral in any of the cognitive domains (Fig. 2F). Under the High Expectation condition, ratings were significantly above neutral for all cognitive domains in YA (t301≥7.000, p<0.0001, Cohen’s d≥0.81) and OA (t77≥2.827, p≤0.006, Cohen’s d≥0.64), and for general cognitive function (M=4.76±1.41, t49=3.817, p<0.0001, Cohen’s d=0.91, CI=0.36-1.16), memory (M=4.72±1.40, t49=3.636, p=0.001, Cohen’s d=1.04, CI=0.32-1.12), and distractibility (M=4.66±1.30, t49=3.581, p=0.001, Cohen’s d=1.02, CI=0.29-1.03) in MA. Ratings were significantly below neutral for all cognitive domains under the Low Expectation condition in YA (t302≥6.382, p<0.0001, Cohen’s d≥0.73), MA (t49≥.301, p<0.0001, Cohen’s d≥0.09), and OA (t77≥4.052, p<0.0001, Cohen’s d≥0.92).

Despite providing a wide range of expectation ratings across domains, the majority of YA (≥74%), MA (≥70%), and OA (≥83%) reported being confident in their baseline ratings for each cognitive domain. Similarly, the majority of participants reported confidence in their ratings for all cognitive domains under the High (YA: ≥79%, MA: ≥80%, OA: ≥89%) and Low Expectation conditions (YA: ≥84%, MA: ≥86%, OA: ≥91%).

Repeated measures ANOVA comparing age groups and the order in which participants read the expectation messages across six cognitive domains and under the three expectation conditions revealed a significant main effect of expectation condition (F1.63,687.46=198.218, ηp2=0.32, p<0.0001) and of cognitive domain (F4.43,1870.59=12.774, ηp2=0.029, p<0.0001), and significant interactions between condition and domain (F9.22, 3889.79=4.665, ηp2=0.011, p<0.0001), as well as age and domain (F8.87,1870.59=2.128, ηp2=0.01, p=0.025). Further investigation of these interactions revealed a significant increase in expectation ratings for all cognitive domains, relative to baseline, under the High Expectation condition (YA: t301≥4.457, p<0.0001, Cohen’s d≥0.26; MA: t49≥3.385, p≤0.001, Cohen’s d≥0.48; OA: t77≥3.848, p<0.0001, Cohen’s d≥0.44) and a significant decrease in ratings, relative to baseline, under the Low Expectation condition (YA: t302≥6.198, p<0.0001, Cohen’s d≥0.36; MA: t49≥2.344, p≤0.023, Cohen’s d≥0.33; OA: t77≥3.5, p≤0.001, Cohen’s d≥0.40).

Individual Differences in Expectations of Non-Invasive Brain Stimulation at Baseline

In contrast to brain training, fewer than half of participants had general knowledge of NIBS (42%) or familiarity with media reports about it (23%), and only 8% of participants had any prior experience with NIBS. Participants who reported having at least some prior knowledge of NIBS were significantly more optimistic in their expectations of memory at baseline (M1=4.27±1.47, M2=3.88±1.28, t326.14=2.755, p=0.006, Cohen’s d=0.28, CI=0.11-0.657), compared to those who reported no prior knowledge of NIBS, respectively. Baseline ratings did not significantly differ based on factors such as sex, country of residence, country in which participants grew up, education level, computer exposure, computer programming, experience with brain stimulation, or concern over declining mental status.

Discussion

Psychological factors such as expectancy may influence the outcomes of cognitive enhancement (e.g., through placebo effects). Nevertheless, trials of brain training and NIBS methods have rarely included measures or appropriate controls to account for participants’ expectations (Simons et al., 2016). Here we found that people are relatively optimistic about brain training but uncertain about NIBS outcomes, when initially asked (i.e., in the baseline condition). Researchers comparing such training to other types of interventions should explicitly address this distinction when collecting and interpreting data: For example, participants may simply show greater effects of brain training than NIBS in a head-to-head comparison because of differences in expectations. Optimism about brain training may have resulted from aggressive marketing and positive media coverage (CognitiveTrainingData.org, 2014; Farah, 2015; Koroshetz, 2015; Rabipour & Davidson, 2015; Simons et al., 2016; The Stanford Center on Longevity, 2014; Walsh, 2013). As advertising and public awareness of NIBS increase, we may witness an increase in expectations of those techniques as well.

The reported expectations of participants rose or fell in the short term, in response to information implying high or low intervention effectiveness, respectively. This finding implies, on the one hand, that scientists should consider potential sources of inadvertent bias or priming of participants with recruitment information, consent forms, and other study documents (Foroughi et al., 2016). Random assignment and double blinding can reduce the potency of such confounding factors (Rabipour, Miller, Taler, Messier, & Davidson, 2017). On the other hand, researchers may wish to consider deliberately manipulating expectations of brain training and NIBS with a “balanced-placebo” design (as has been done with drugs (Sahakian & Morein-Zamir, 2007)) to tease apart treatment and expectation effects (Boot et al., 2013).

Individual factors such as age and prior exposure to an intervention may also influence expectations of outcomes. Although the age differences observed in Study 1a may result from differential recruitment of YA and OA, our findings replicate our original study and are therefore unlikely to result from a recruitment confound (Rabipour & Davidson, 2015). Notably, we found that OA report higher optimism towards cognitive enhancement under certain circumstances, and remain confident in their initial beliefs regardless of information provided – even when presented with evidence urging skepticism regarding outcomes. We further observed a hint of interaction between age and short-term expectation-setting: OA who first received positive information about cognitive enhancement remained more optimistic about outcomes, even under the Low Expectation condition, compared to YA. Moreover, while the majority of participants believed that the information received under both the High and Low Expectation conditions was convincing, fewer OA were convinced by the pessimistic message compared to YA. Together, these findings suggest that initial exposure to positive information may inflate expectations or reduce skepticism towards cognitive enhancement in certain populations.

Understanding participants’ expectations of outcomes is necessary to determine whether cognitive enhancement approaches such as brain training and NIBS are truly effective. In addition to placebo effects, these results have implications for adoption and adherence to cognitive training interventions, particularly for older adults. In order for an intervention to be successful, the intervention should be effective, but participants must also be willing and able to engage with the intervention for an extended period of time. The perceived benefit of an intervention can influence both of these, including people’s willingness to begin an intervention and adhere to the program until completion. Poor intervention adherence remains an issue in therapeutic settings, as well as in the context of brain training. For example, Boot et al. (2013) found that adherence to a digital game-based intervention was very low. Moreover, expectations of the benefits of this specific intervention were low compared to a more explicit brain-training program that was adhered to much better, and which participants believed would help their everyday functioning. Our research represents a first step towards understanding the link between such perceptions and the way people respond to cognitive interventions, and predicting whether or not participants might adhere to such programs.

Measuring expectations before, during, and after a cognitive enhancement intervention may help explain intervention effects (or lack thereof). We found that people generally believe in the efficacy of brain training, but that there are also individual differences in belief. Our study suggests caution in interpreting any existing evaluations of brain training and NIBS that have not taken expectations into account (e.g., CognitiveTrainingData.org, 2014; Federal Trade Commission, 2015; Steenbergen et al., 2016; The Stanford Center on Longevity, 2014; Underwood, 2016). Our survey offers a simple, face-valid, and internally consistent way to do so (Rabipour et al., In preparation).

Acknowledgements

We thank Thomas Vitale for help with data collection as well as members of the Neuropsychology Laboratory at the University of Ottawa and Boot Lab at Florida State University. We also acknowledge the Natural Sciences and Engineering Research Council of Canada, the Fonds de Recherche Québec-Santé, the Ontario Graduate Scholarships, and the National Institute on Aging-National Institutes of Health (NIA 2P01AG017211-16A1, Project CREATE IV-Center for Research and Education on Aging and Technology Enhancement) for their support of this work.

References

- Boot WR, Simons DJ, Stothart C, & Stutts C (2013). The Pervasive Problem With Placebos in Psychology: Why Active Control Groups Are Not Sufficient to Rule Out Placebo Effects. Perspectives on Psychological Science, 8(4), 445–454. doi: 10.1177/1745691613491271

- Carter O, & Forte J (2016). Medical risks: Regulate devices for brain stimulation. Nature, 533(7602), 179. doi: 10.1038/533179d

- CognitiveTrainingData.org. (2014). Cognitive Training Data: An Open Letter, from http://www.cognitivetrainingdata.org/

- Farah MJ (2015). The unknowns of cognitive enhancement. Science, 350(6259), 379–380. doi: 10.1126/science.5893

- Federal Trade Commission. (2015). Makers of Jungle Rangers Computer Game for Kids Settle FTC Charges that They Deceived Consumers with Baseless “Brain Training” Claims.

- Foroughi CK, Monfort SS, Paczynski M, McKnight PE, & Greenwood PM (2016). Placebo effects in cognitive training. Proc Natl Acad Sci U S A, 113(27), 7470–7474. doi: 10.1073/pnas.1601243113 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gross L (2017). Putting placebos to the test. PLoS Biol, 15(2), e2001998. doi: 10.1371/journal.pbio.2001998

- Hacking your brain. (2015). The Economist.

- Jwa A (2015). Early adopters of the magical thinking cap: a study on do-it-yourself (DIY) transcranial direct current stimulation (tDCS) user community. Journal of Law and the Biosciences, 2(2), 292–335. doi: 10.1093/jlb/lsv017

- Koroshetz WJ (2015). Noninvasive Brain Stimulation: Applications and Implications: National Institute of Neurological Disorders and Stroke.

- Lumos Labs Inc. (2017). Lumosity, from http://www.lumosity.com

- Miniussi C, Harris JA, & Ruzzoli M (2013). Modelling non-invasive brain stimulation in cognitive neuroscience. Neuroscience and Biobehavioral Reviews, 37(8), 1702–1712. doi: 10.1016/j.neubiorev.2013.06.014

- Moreau D, Kirk IJ, & Waldie KE (2016). Seven Pervasive Statistical Flaws in Cognitive Training Interventions. Front Hum Neurosci, 10, 153. doi: 10.3389/fnhum.2016.00153

- Nintendo DS. (2017). Brain Age: Concentration Training, from http://brainage.nintendo.com

- Posit Science Inc. (2017). brainHQ: Brain Training That Works, from http://www.brainhq.com/

- Rabipour S, & Davidson PSR (2015). Do you believe in brain training? A questionnaire about expectations of computerised cognitive training. Behavioural Brain Research, 295, 64–70. doi: 10.1016/j.bbr.2015.01.002

- Rabipour S, Davidson PSR, & Kristjansson E (In preparation). Measuring Expectations of Cognitive Enhancement: Item Response Analysis of the Expectation Assessment Scale.

- Rabipour S, Miller D, Taler V, Messier C, & Davidson PSR (2017). Physical and Cognitive Exercise in Aging. In Handbook of Gerontology Research Methods: Understanding Successful Aging. Routledge.

- Rabipour S, & Raz A (2012). Training the brain: Fact and fad in cognitive and behavioral remediation. Brain and Cognition, 79(2), 159–179. doi: 10.1016/j.bandc.2012.02.006

- Sahakian B, & Morein-Zamir S (2007). Professor’s little helper. Nature, 450(7173), 1157–1159. doi: 10.1038/4501157a

- Schambra HM, Bikson M, Wager TD, DosSantos MF, & DaSilva AF (2014). It’s All in Your Head: Reinforcing the Placebo Response With tDCS. Brain Stimulation, 7(4), 623–624. doi: 10.1016/J.Brs.2014.04.002

- Schwarz KA, Pfister R, & Buchel C (2016). Rethinking Explicit Expectations: Connecting Placebos, Social Cognition, and Contextual Perception. Trends in Cognitive Sciences, 20(6), 469–480. doi: 10.1016/j.tics.2016.04.001

- SharpBrains. (2014). Brain Training and Cognitive Assessment Market Surpassed $1.3 Billion In 2013. Retrieved from http://sharpbrains.com/blog/2014/01/23/brain-training-and-cognitive-assessment-market-surpassed-1-3-billion-in-2013/

- Simons DJ, Boot WR, Charness N, Gathercole SE, Chabris CF, Hambrick DZ, & Stine-Morrow EAL (2016). Do “Brain-Training” Programs Work? Psychological Science in the Public Interest, 17(3), 103–186. doi: 10.1177/1529100616661983

- Souders DJ, Boot WR, Blocker K, Vitale T, Roque NA, & Charness N (2017). Evidence for Narrow Transfer after Short-Term Cognitive Training in Older Adults. Frontiers in Aging Neuroscience, 9. doi: 10.3389/fnagi.2017.00041

- Steenbergen L, Sellaro R, Hommel B, Lindenberger U, Kuhn S, & Colzato LS (2016). “Unfocus” on foc.us: commercial tDCS headset impairs working memory. Experimental Brain Research, 234(3), 637–643. doi: 10.1007/s00221-015-4391-9

- The Stanford Center on Longevity. (2014). A Consensus on the Brain Training Industry from the Scientific Community.

- Torous J, Staples P, Fenstermacher E, Dean J, & Keshavan M (2016). Barriers, Benefits, and Beliefs of Brain Training Smartphone Apps: An Internet Survey of Younger US Consumers. Front Hum Neurosci, 10, 180. doi: 10.3389/fnhum.2016.00180

- Underwood E (2016). Cadaver study casts doubts on how zapping brain may boost mood, relieve pain. Science. doi: 10.1126/science.aaf9938

- Walsh VQ (2013). Ethics and Social Risks in Brain Stimulation. Brain Stimulation, 6(5), 715–717. doi: 10.1016/J.Brs.2013.08.001