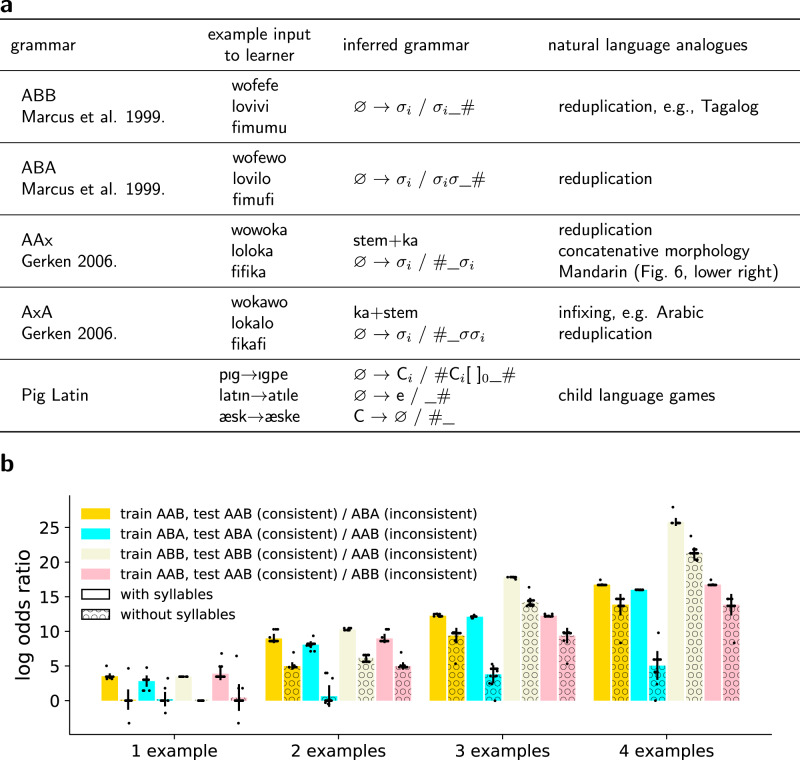

Fig. 6. Modeling artificial grammar learning.

a Children can few-shot learn many qualitatively different grammars, as studied under controlled conditions in artificial grammar learning (AGL) experiments. Our model learns these as well. Grammar names ABB/ABA/AAx/AxA refer to syllable structure: A and B are variable syllables, and x is a constant syllable. For example, ABB words have three syllables, the last two of which are identical. NB: Actual reduplication is subtler than syllable-copying (ref. 20). b The model learns to discriminate between different artificial grammars by training on examples of one grammar (e.g., AAB) and then testing either on unseen words drawn from the same grammar (consistent condition, e.g., new words following the AAB pattern) or on unseen words from a different grammar (inconsistent condition, e.g., new words following the ABA pattern), following the paradigm of ref. 39. We plot the log-odds ratio of the consistent versus inconsistent condition (see “Methods”) over n = 15 random independent (in)consistent word pairs. Bars show the mean log-odds ratio over these 15 samples, which are shown individually as black points; error bars show the standard deviation. We contrast models using program spaces with and without syllabic representations; syllabic representations were not used for the textbook problems. Syllabic representation proves important for few-shot learning, but a model without syllables can still discriminate successfully given enough examples by learning rules that copy individual phonemes. See Supplementary Fig. 4 for more examples. Source data are provided as a Source data file.
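As a reading aid for the caption, the following minimal Python sketch shows how words can be generated from a pattern name such as ABB or AxA, and how per-pair log-odds ratios can be summarized as a mean with a standard deviation. It is an illustration under stated assumptions, not the authors' code: the function names, the syllable inventory, and the example log probabilities are hypothetical, the log-odds ratio is assumed to be the difference between the log probabilities of the consistent and inconsistent test words, and the sample standard deviation is assumed because the caption does not say which estimator was used.

```python
import random
import statistics


def make_word(pattern, syllables, constant="di", rng=random):
    """Build a word (list of syllables) from a pattern such as 'ABB' or 'AxA'.

    Uppercase letters are variable syllables; repeated letters reuse the same
    syllable and distinct letters get distinct syllables. Any other character
    (e.g. 'x') maps to the fixed constant syllable.
    """
    letters = sorted({c for c in pattern if c.isupper()})
    chosen = rng.sample(syllables, len(letters))
    binding = dict(zip(letters, chosen))
    return [binding.get(c, constant) for c in pattern]


def log_odds(logp_consistent, logp_inconsistent):
    """ASSUMPTION: log-odds ratio = log P(consistent) - log P(inconsistent)."""
    return logp_consistent - logp_inconsistent


def summarize(pairs):
    """Mean and sample standard deviation of log-odds ratios over word pairs."""
    ratios = [log_odds(c, i) for c, i in pairs]
    return statistics.mean(ratios), statistics.stdev(ratios)


if __name__ == "__main__":
    inventory = ["wo", "fe", "li", "ga"]  # hypothetical syllable inventory
    print(make_word("ABB", inventory))    # last two syllables are identical
    print(make_word("AxA", inventory))    # constant syllable in the middle

    # Hypothetical (log P(consistent), log P(inconsistent)) values per pair.
    pairs = [(-1.0, -3.0), (-2.0, -2.0), (-1.0, -4.0)]
    print(summarize(pairs))
```

A positive mean indicates that, on average, the model assigns higher probability to words from the training grammar than to words from the other grammar.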