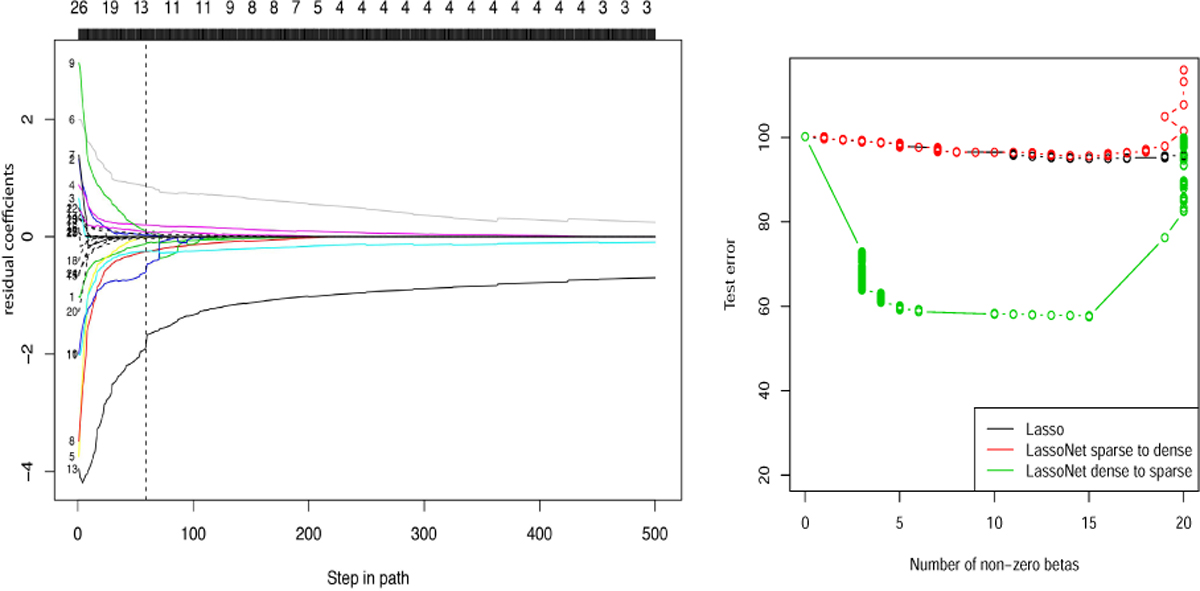

Figure 4.

Left: The path of residual coefficients for the Boston Housing dataset. We augmented the Boston Housing dataset, which has p = 13 features, with 13 additional Gaussian noise features (corresponding to the broken lines). The number of features selected by LassoNet is indicated along the top. LassoNet achieves its minimum test error (at the vertical broken line) with 13 predictors. Upon inspection of the resulting model, 12 of these 13 selected features are true predictors, confirming the model's ability to perform controlled feature selection. Right: Comparison of two initialization strategies. The test errors for Lasso and LassoNet are shown for the sparse-to-dense (red) and dense-to-sparse (green) strategies. The dense-to-sparse strategy achieves lower test error, highlighting the importance of a dense initialization for efficiently exploring the optimization landscape.
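The following is a minimal sketch of the two ideas in this caption under stated assumptions, not the LassoNet procedure itself. It (a) pads a design matrix of 13 "true" features with 13 pure Gaussian-noise features and (b) traces a dense-to-sparse path: it starts from an unpenalized least-squares fit and increases the penalty geometrically, warm-starting each fit from the previous solution. Several substitutions are deliberate. The data are synthetic rather than Boston Housing, the model is a plain linear Lasso solved by cyclic coordinate descent rather than LassoNet, a held-out validation split stands in for the test set, and the helper names (`lasso_cd`, `dense_to_sparse_path`), coefficients, and noise level are hypothetical. Because the Lasso objective is convex, its path does not depend on the initialization once each fit converges. The initialization effect in the right panel concerns LassoNet's nonconvex objective.

```python
import numpy as np


def soft_threshold(z, t):
    """Proximal operator of t*|.|: shrink z toward zero by t."""
    return np.sign(z) * np.maximum(np.abs(z) - t, 0.0)


def lasso_cd(X, y, lam, beta, n_sweeps=100, tol=1e-12):
    """Cyclic coordinate descent for (1/2n)||y - X b||^2 + lam * ||b||_1,
    warm-started from `beta` (hypothetical helper, plain Lasso only)."""
    n, p = X.shape
    beta = beta.copy()
    col_sq = (X ** 2).sum(axis=0) / n          # per-feature curvature
    resid = y - X @ beta
    for _ in range(n_sweeps):
        max_change = 0.0
        for j in range(p):
            # Correlation of feature j with the partial residual (feature j added back)
            rho = X[:, j] @ resid / n + col_sq[j] * beta[j]
            new = soft_threshold(rho, lam) / col_sq[j]
            resid -= X[:, j] * (new - beta[j])
            max_change = max(max_change, abs(new - beta[j]))
            beta[j] = new
        if max_change < tol:                    # converged for this lambda
            break
    return beta


def dense_to_sparse_path(X, y, n_lambdas=30, ratio=1e-3):
    """Start from a dense least-squares fit and increase lambda geometrically,
    warm-starting each fit from the previous one."""
    n, p = X.shape
    lam_max = np.max(np.abs(X.T @ y)) / n       # smallest lambda with an all-zero solution
    lambdas = lam_max * np.geomspace(ratio, 1.0, n_lambdas)   # increasing: dense -> sparse
    beta = np.linalg.lstsq(X, y, rcond=None)[0]  # dense (unpenalized) initialization
    path = []
    for lam in lambdas:
        beta = lasso_cd(X, y, lam, beta)
        path.append((lam, beta.copy()))
    return path


# Synthetic stand-in for the augmentation experiment (assumed sizes and coefficients).
rng = np.random.default_rng(0)
n, p_true, p_noise = 200, 13, 13
X_true = rng.standard_normal((n, p_true))
beta_true = np.linspace(0.5, 2.0, p_true)
y = X_true @ beta_true + 0.5 * rng.standard_normal(n)
X = np.hstack([X_true, rng.standard_normal((n, p_noise))])  # last 13 columns are noise

train, val = slice(0, 150), slice(150, n)
path = dense_to_sparse_path(X[train], y[train])

# Pick the lambda with the lowest held-out error, then check which features survived.
val_mse = [np.mean((y[val] - X[val] @ b) ** 2) for _, b in path]
best_lam, best_beta = path[int(np.argmin(val_mse))]
selected = np.flatnonzero(best_beta)
n_true = int(np.sum(selected < p_true))
print(f"lambda={best_lam:.4f}: {selected.size} features selected, {n_true} of them true")
```

Counting how many selected columns fall among the first `p_true` indices mirrors the caption's check of how many chosen features are true predictors. The noise columns are what make that check informative.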