Abstract

We present a machine learning model for the analysis of randomly generated discrete signals, modeled as the points of an inhomogeneous, compound Poisson point process. Like the wavelet scattering transform introduced by Mallat, our construction is naturally invariant to translations and reflections, but it decouples the roles of scale and frequency, replacing wavelets with Gabor-type measurements. We show that, with suitable nonlinearities, our measurements distinguish Poisson point processes from common self-similar processes, and separate different types of Poisson point processes.

Keywords: Scattering transform, Poisson point process, convolutional neural network

1. INTRODUCTION

Convolutional neural networks (CNNs) have obtained impressive results for a number of learning tasks in which the underlying signal data can be modelled as a stochastic process, including texture discrimination [1], texture synthesis [2, 3], time-series analysis [4], and wireless networks [5]. In many scenarios, it is natural to model the signal data as the points of a (potentially complex) spatial point process. Furthermore, there are numerous other fields, including stochastic geometry [6], forestry [7], geoscience [8] and genetics [9], in which spatial point processes are used to model the underlying generating process of certain phenomena (e.g., earthquakes). This motivates us to consider the capacity of CNNs to capture the statistical properties of such processes.

The wavelet scattering transform [10] is a model for CNNs, which consists of an alternating cascade of linear wavelet transforms and complex modulus nonlinearities. It has provable stability and invariance properties and has been used to achieve near state-of-the-art results in fields such as audio signal processing [11], computer vision [12], and quantum chemistry [13]. In this paper, we examine a generalized scattering transform that utilizes a broader class of filters (which includes wavelets). We primarily focus on filters with small support, similar to those used in most CNNs.

Expected wavelet scattering moments for stochastic processes with stationary increments were introduced in [14], where it is shown that such moments capture important statistical information of one-dimensional Poisson processes, fractional Brownian motion, α-stable Lévy processes, and a number of other stochastic processes. In this paper, we extend the notion of scattering moments to our generalized architecture, and generalize many of the results from [14]. However, the main contributions contained here consist of new results for more general spatial point processes, including inhomogeneous Poisson point processes, which are not stationary and do not have stationary increments. The collection of expected scattering moments is a non-parametric model for these processes, which we show captures important summary statistics.

In Section 2 we will define our expected scattering moments. Then, in Sections 3 and 4 we will analyze these moments for certain generalized Poisson point processes and self-similar processes. We will present numerical examples in Section 5, and provide a short conclusion in Section 6.

2. EXPECTED SCATTERING MOMENTS

Let ψ be a compactly supported mother wavelet with dilations ψj(t) = 2−jψ(2−jt) for j ∈ ℤ, and let X(t), t ∈ ℝ, be a stochastic process with stationary increments. The first-order wavelet scattering moments are defined in [14] as SX(j) = E[|X * ψj(t)|], where the expectation does not depend on t since X(t) has stationary increments and ψj is a wavelet, which implies X * ψj(t) is stationary. Much of the analysis in [14] relies on the fact that these moments can be rewritten as SX(j) = E[|ψ̄j * dX(t)|], where ψ̄j(t) = ∫_{−∞}^{t} ψj(u) du. This motivates us to define scattering moments as the integration of a filter against a random signed measure Y(dt).

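To that end, let w be a continuous window function with support contained in [0, 1]d. Denote by ws(t) = w(t/s) the dilation of w, and set gγ(t) to be the Gabor-type filter with scale s > 0 and central frequency ξ ∈ ℝd,

$$g_\gamma(t) = w_s(t)\, e^{i \xi \cdot t}, \qquad t \in \mathbb{R}^d, \quad \gamma = (s, \xi). \tag{1}$$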

Note that with an appropriately chosen window function w, (1) includes dyadic wavelet families in the case that s = 2j and |ξ| = C/s. However, it also includes many other filters, such as Gabor filters used in the windowed Fourier transform.
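To make the filter family concrete, the following is a minimal one-dimensional sketch of a Gabor-type filter of the form gγ(t) = w(t/s) e^{iξt}. The names `bump_window` and `gabor_filter` are hypothetical, and the particular bump used here is an assumption chosen only because it is smooth and supported in [0, 1]; it is not claimed to be the window used in the experiments.

```python
import cmath
import math


def bump_window(u: float) -> float:
    """Hypothetical smooth window supported in [0, 1] (an illustrative choice of w)."""
    if u <= 0.0 or u >= 1.0:
        return 0.0
    x = 2.0 * u - 1.0  # map (0, 1) onto (-1, 1)
    return math.exp(-1.0 / (1.0 - x * x))


def gabor_filter(t: float, s: float, xi: float) -> complex:
    """Evaluate g_gamma(t) = w(t / s) * exp(i * xi * t) for gamma = (s, xi), with d = 1."""
    return bump_window(t / s) * cmath.exp(1j * xi * t)


# The filter vanishes outside [0, s], so shrinking s shrinks its support.
inside = gabor_filter(0.5, s=1.0, xi=3.0)
outside = gabor_filter(1.5, s=1.0, xi=3.0)
```

Keeping s and ξ as independent arguments mirrors the decoupling of scale and frequency described above; a dyadic wavelet-like family would instead tie them together through |ξ| = C/s.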

Let Y(dt) be a random signed measure and assume that Y is T-periodic for some T > 0 in the sense that for any Borel set B we have Y(B) = Y(B + Tei), for all 1 ⩽ i ⩽ d (where {ei}i⩽d is the standard orthonormal basis for ℝd). For f ∈ L2(ℝd), set f * Y(t) = ∫ f(t − u) Y(du). We define the first-order and second-order expected scattering moments, 1 ⩽ p, p′ < ∞, at location t as

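$$S_{\gamma,p}Y(t) := \mathbb{E}\left[\,\left|g_\gamma * Y(t)\right|^{p}\,\right], \tag{2}$$

$$S_{\gamma,p,\gamma',p'}Y(t) := \mathbb{E}\left[\,\left|\,\left|g_\gamma * Y\right|^{p} * g_{\gamma'}(t)\right|^{p'}\,\right]. \tag{3}$$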

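Note Y(dt) is not assumed to be stationary, which is why these moments depend on t. Since Y(dt) is periodic, we may also define time-invariant scattering coefficients by

$$SY(\gamma,p) := \frac{1}{T^d}\int_{[0,T]^d} S_{\gamma,p}Y(t)\,dt, \qquad SY(\gamma,p,\gamma',p') := \frac{1}{T^d}\int_{[0,T]^d} S_{\gamma,p,\gamma',p'}Y(t)\,dt.$$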

In the following sections, we analyze these moments for arbitrary frequencies ξ and small scales s, thus allowing the filters gγ to serve as a model for the learned filters in CNNs. In particular, we will analyze the asymptotic behavior of the scattering moments as s decreases to zero.

3. SCATTERING MOMENTS OF GENERALIZED POISSON PROCESSES

In this section, we let Y(dt) be an inhomogeneous, compound spatial Poisson point process. Such processes generalize ordinary Poisson point processes by incorporating variable charges (heights) at the points of the process and a nonuniform intensity for the locations of the points. They thus provide a flexible family of point processes that can be used to model many different phenomena. In this section, we provide a review of such processes and analyze their first and second-order scattering moments.

Let λ(t) be a continuous, periodic function on ℝd with

$$0 < \lambda_{\min} := \inf_{t} \lambda(t) \leq \|\lambda\|_\infty < \infty, \tag{4}$$

and define its first and second order moments by

A random measure is called an inhomogeneous Poisson point process with intensity function λ(t) if for any Borel set ,

and, in addition, N(B) is independent of N(B′) for all B′ that do not intersect B. Now let be a sequence of i.i.d. random variables independent of N. An inhomogeneous, compound Poisson point process Y(dt) is given by

| (5) |

For a further overview of these processes, we refer the reader to Section 6.4 of [15].

3.1. First-order Scattering Asymptotics

Computing the convolution of gγ with Y(dt) gives

$$(g_\gamma * Y)(t) = \int_{\mathbb{R}^d} g_\gamma(t - u)\, Y(du) = \sum_{j=1}^{\infty} A_j\, g_\gamma(t - t_j),$$

which can be interpreted as a waveform gγ emitting from each location tj. Invariant scattering moments aggregate the random interference patterns in |gγ * Y|. The results below show that the expectation of these interference patterns encodes important statistical information related to the point process.
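Because w is supported in [0, 1]d, only points tj in the cube [t − s, t]d contribute to the convolution at t. A minimal one-dimensional sketch, evaluating Σj Aj g(t − tj) for a finite list of points, is given below. The function name, the hat window, and the sample points and charges are all hypothetical choices made for illustration.

```python
import cmath
from typing import Callable, Sequence


def convolve_point_measure(
    t: float,
    points: Sequence[float],
    charges: Sequence[float],
    g: Callable[[float], complex],
) -> complex:
    """Return sum_j A_j * g(t - t_j), i.e. g integrated against Y = sum_j A_j delta_{t_j}."""
    return sum((a * g(t - tj) for tj, a in zip(points, charges)), 0j)


# Hypothetical filter: a hat window on [0, s] modulated at frequency xi.
s, xi = 0.5, 3.0


def g(u: float) -> complex:
    window = max(0.0, 1.0 - abs(2.0 * u / s - 1.0))  # continuous, supported in [0, s]
    return window * cmath.exp(1j * xi * u)


points = [0.10, 0.35, 2.00]   # illustrative locations t_j
charges = [1.0, -1.0, 2.0]    # illustrative charges A_j
value = convolve_point_measure(0.40, points, charges, g)
```

Only the first two points lie in [t − s, t] = [−0.1, 0.4], so the third point contributes nothing; the two remaining waveforms interfere through their relative phases.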

For notational convenience, we let

denote the expected number of points of N in the support of gγ(t – ·). By conditioning on N ([t − s, t]d), the number of points in the support of gγ, and using the fact that

one may obtain the following theorem.¹

Theorem 1.

Let , and λ(t) be a periodic continuous intensity function satisfying (4). Then for every , every γ = (s, ξ) such that sd∥λ∥∞ < 1, and every m ⩾ 1,

| (6) |

where the error term ε(m, s, ξ, t) satisfies

| (7) |

and V1, V2, . . . is an i.i.d. sequence of random variables, independent of the Aj, taking values in the unit cube [0, 1]d and with density for ν ∈ [0, 1]d.

If we set m = 1, and let s → 0, then one may use the fact that a small cube [t − s, t]d has at most one point of N with overwhelming probability to obtain the following result.

Theorem 2.

Let Y(dt) satisfy the same assumptions as in Theorem 1. Let γk = (sk, ξk) be a sequence of scale and frequency pairs such that limk→∞ sk = 0. Then

| (8) |

for all t, and consequently

| (9) |

This theorem shows that for small scales the scattering moments Sγ,pY(t) encode the intensity function λ(t), up to factors depending upon the summary statistics of the charges and the window w. Thus even a one-layer location-dependent scattering network yields considerable information regarding the underlying data generation process.
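A small Monte Carlo experiment can illustrate this behavior. The sketch below works under several assumptions that are ours: d = 1, a homogeneous non-compound process (Aj = 1, constant intensity `lam`), a hat window whose p-th power integrates to 1/(p + 1), and a reading of the small-scale limit as Sγ,pY(t)/s ≈ λ E[|A1|^p] ∫|w|^p. It uses the fact that, for a homogeneous process, the number of points in [t − s, t] is Poisson with mean λs and the rescaled offsets (t − tj)/s are i.i.d. uniform on [0, 1]. All function names are hypothetical.

```python
import cmath
import math
import random


def hat_window(u: float) -> float:
    """Hypothetical continuous window on [0, 1]; its p-th power integrates to 1 / (p + 1)."""
    return max(0.0, 1.0 - abs(2.0 * u - 1.0))


def sample_poisson(mean: float, rng: random.Random) -> int:
    """Knuth's multiplication method; adequate for the small means used here."""
    threshold = math.exp(-mean)
    count, product = 0, rng.random()
    while product > threshold:
        count += 1
        product *= rng.random()
    return count


def first_order_moment(lam: float, s: float, xi: float, p: float,
                       n_trials: int, seed: int = 0) -> float:
    """Monte Carlo estimate of E|g_gamma * Y(t)|^p for a homogeneous process with A_j = 1."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_trials):
        n_points = sample_poisson(lam * s, rng)  # points falling in [t - s, t]
        acc = 0j
        for _ in range(n_points):
            v = rng.random()  # (t - t_j) / s, uniform on [0, 1] in the homogeneous case
            acc += hat_window(v) * cmath.exp(1j * xi * s * v)
        total += abs(acc) ** p
    return total / n_trials


lam, s, xi = 1.0, 0.05, 3.0
ratio_p1 = first_order_moment(lam, s, xi, p=1, n_trials=200_000) / s
ratio_p2 = first_order_moment(lam, s, xi, p=2, n_trials=200_000) / s
```

Under these assumptions, `ratio_p1` and `ratio_p2` should sit near λ/2 and λ/3 respectively, with a bias of order λs coming from cubes that contain more than one point.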

In the case of ordinary (non-compound) homogeneous Poisson processes, Theorem 2 recovers the constant intensity. For general λ(t) and invariant scattering moments, the role of higher-order moments of λ(t) is highlighted by considering higher-order expansions (e.g., m > 1) in (6). The next theorem considers second-order expansions and illustrates their dependence on the second moment of λ(t).

Theorem 3.

Let Y satisfy the same assumptions as in Theorem 1. If (γk)k⩾1 = (sk, ξk)k⩾1, is a sequence such that limk→∞ sk = 0 and , then

| (10) |

where U1, U2 are independent uniform random variables on [0, 1]d; and (Vk)k⩾1 is a sequence of random variables independent of the Aj taking values in the unit cube with respective densities, for ν ∈ [0, 1]d.

We note that the scale normalization on the left hand side of (10) is s−2d, compared to a normalization of s−d in Theorem 2. Thus, intuitively, (10) is capturing information at moderately small scales that are larger than the scales considered in Theorem 2. Unlike Theorem 2, which gives a way to compute m1(λ), Theorem 3 does not allow one to compute m2(λ) since it would require knowledge of in addition to the distribution from which the charges are drawn. However, Theorem 3 does show that at moderately small scales the invariant scattering coefficients depend nontrivially on the second moment of λ(t). Therefore, they can be used to distinguish between, for example, an inhomogeneous Poisson point process with intensity function λ(t) and a homogeneous Poisson point process with constant intensity.

3.2. Second-Order Scattering Moments of Generalized Poisson Processes

Our next result shows that second-order scattering moments encode higher-order moment information about the charges Aj.

Theorem 4.

Let Y(dt) satisfy the same assumptions as in Theorem 1. Let γk = (sk, ξk) and be sequences of scale-frequency pairs with for some c > 0 and . Let 1 ⩽ p, p′ < ∞ and q = pp′. Assume , and let . Then,

| (11) |

| (12) |

Theorem 2 shows first-order scattering moments with p = 1 are not able to distinguish between different types of Poisson point processes at very small scales if the charges have the same first moment. However, Theorem 4 shows second-order scattering moments encode higher-moment information about the charges, and thus are better able to distinguish them (when used in combination with the first-order coefficients). In Sec. 4, we will see first-order invariant scattering moments can distinguish Poisson point processes from self-similar processes if p = 1, but may fail to do so for larger values of p.

4. COMPARISON TO SELF-SIMILAR PROCESSES

We will show first-order invariant scattering moments can distinguish between Poisson point processes and certain self-similar processes, such as α-stable processes, 1 < α ⩽ 2, or fractional Brownian motion (fBM). These results generalize those in [14] both by considering more general filters and general pth scattering moments.

For a stochastic process X(t), , we consider the convolution of the filter gγ with the noise dX defined by , and define (in a slight abuse of notation) the first-order scattering moments at time t by . In the case where X(t) is a compound, inhomogeneous Poisson (counting) process, Y = dX will be a compound Poisson random measure and these scattering moments will coincide with those defined in (2).

The following theorem analyzes the small-scale first-order scattering moments when X is either an α-stable process, or an fBM. It shows the small-scale asymptotics of the corresponding scattering moments are guaranteed to differ from those of a Poisson point process when p = 1. We also note that both α-stable processes and fBM have stationary increments and thus Sγ,pX(t) = SX(γ, p) for all t.

Theorem 5.

Let 1 ⩽ p < ∞, and let γk = (sk, ξk) be a sequence of scale-frequency pairs with limk→∞ sk = 0 and . Then, if X(t) is a symmetric α-stable process, p < α ⩽ 2, we have

Similarly, if X(t) is an fBM with Hurst parameter H ∈ (0, 1) and w has bounded variation on [0, 1], then

This theorem shows that first-order invariant scattering moments distinguish inhomogeneous, compound Poisson processes from both α-stable processes and fractional Brownian motion except in the cases where p = α or p = 1/H. In particular, these measurements distinguish Brownian motion from a Poisson point process except in the case where p = 2.
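A short worked comparison may make the exceptional cases concrete. It assumes d = 1 and that the normalizations in Theorem 5 are s_k^{p/α} for the α-stable process and s_k^{pH} for fBM, which is our reading of the exceptional cases p = α and p = 1/H named above, while the Poisson moments scale like s_k as in Theorem 2:

$$
SY(\gamma_k, p) \asymp s_k, \qquad
SX_{\alpha\text{-stable}}(\gamma_k, p) \asymp s_k^{\,p/\alpha}, \qquad
SX_{\mathrm{fBM}}(\gamma_k, p) \asymp s_k^{\,pH}, \qquad k \to \infty .
$$

For Brownian motion (α = 2, H = 1/2) and p = 1, the exponents are 1 for the Poisson process and 1/2 for Brownian motion, so the decay rates differ. For p = 2 both exponents equal 1, and the decay rate alone no longer separates the two processes.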

5. NUMERICAL ILLUSTRATIONS

We carry out several experiments to numerically validate the previously stated results. In all of our experiments, we hold the frequency ξ constant while letting s decrease to zero.

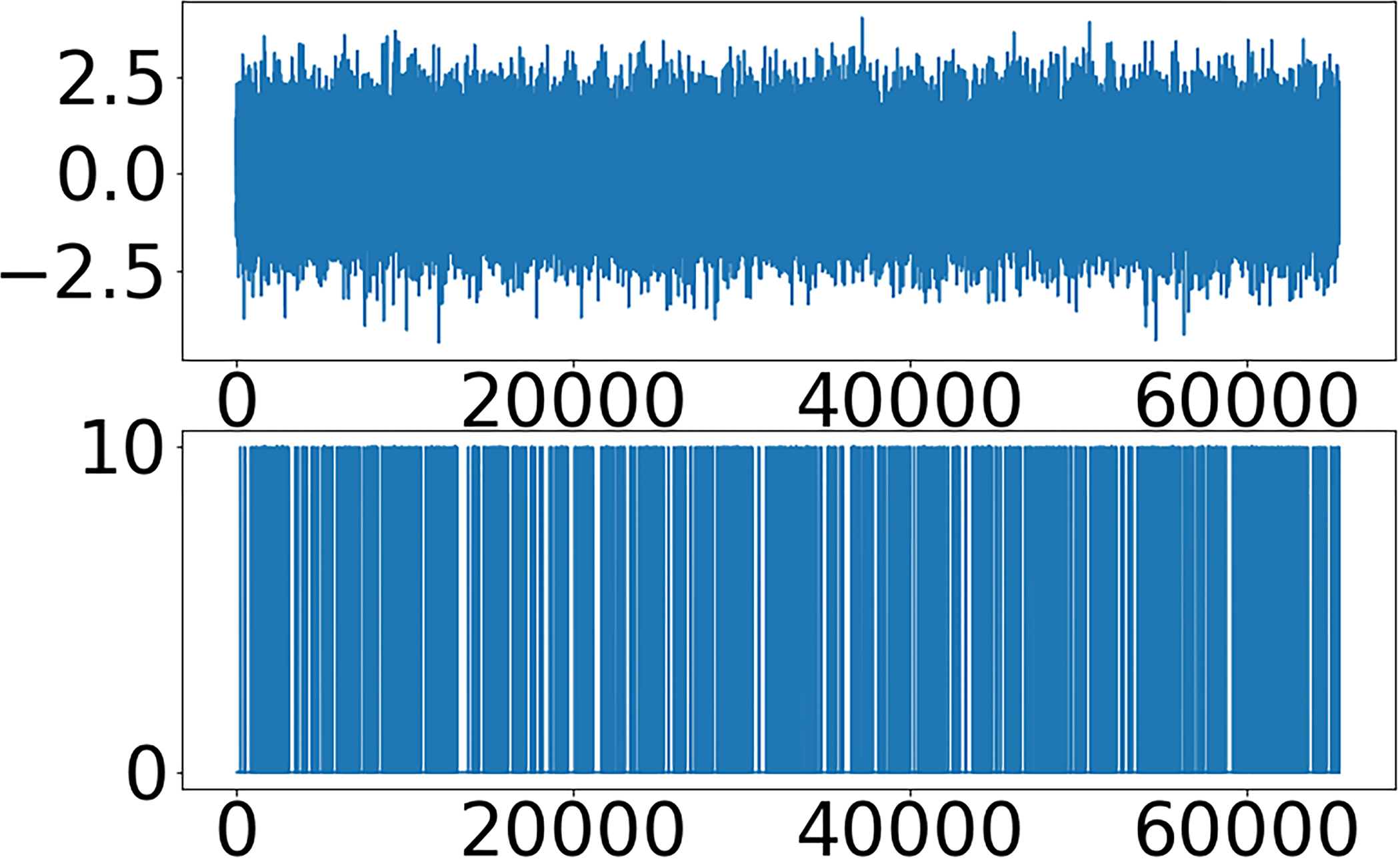

Compound Poisson point processes with the same intensities:

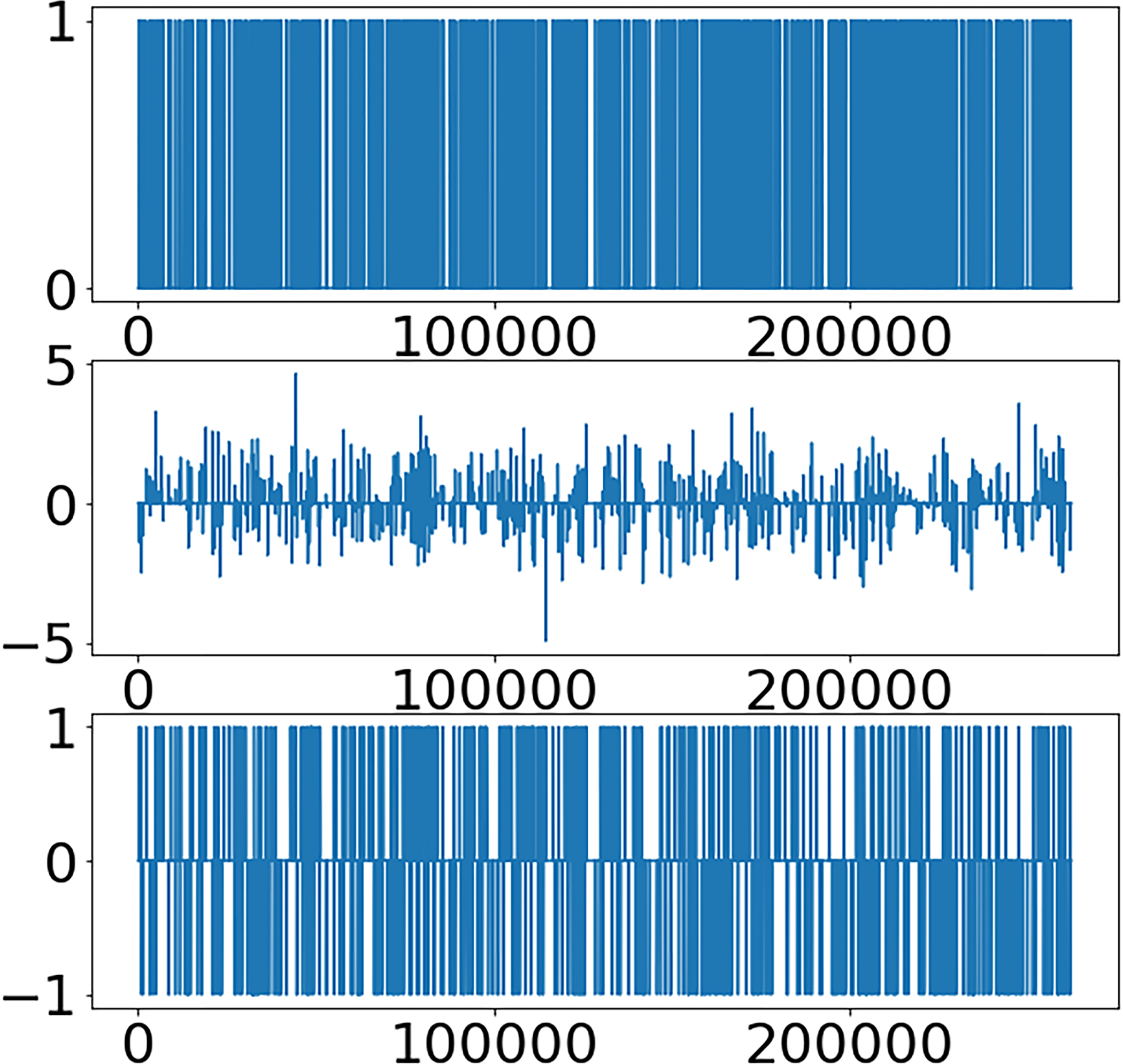

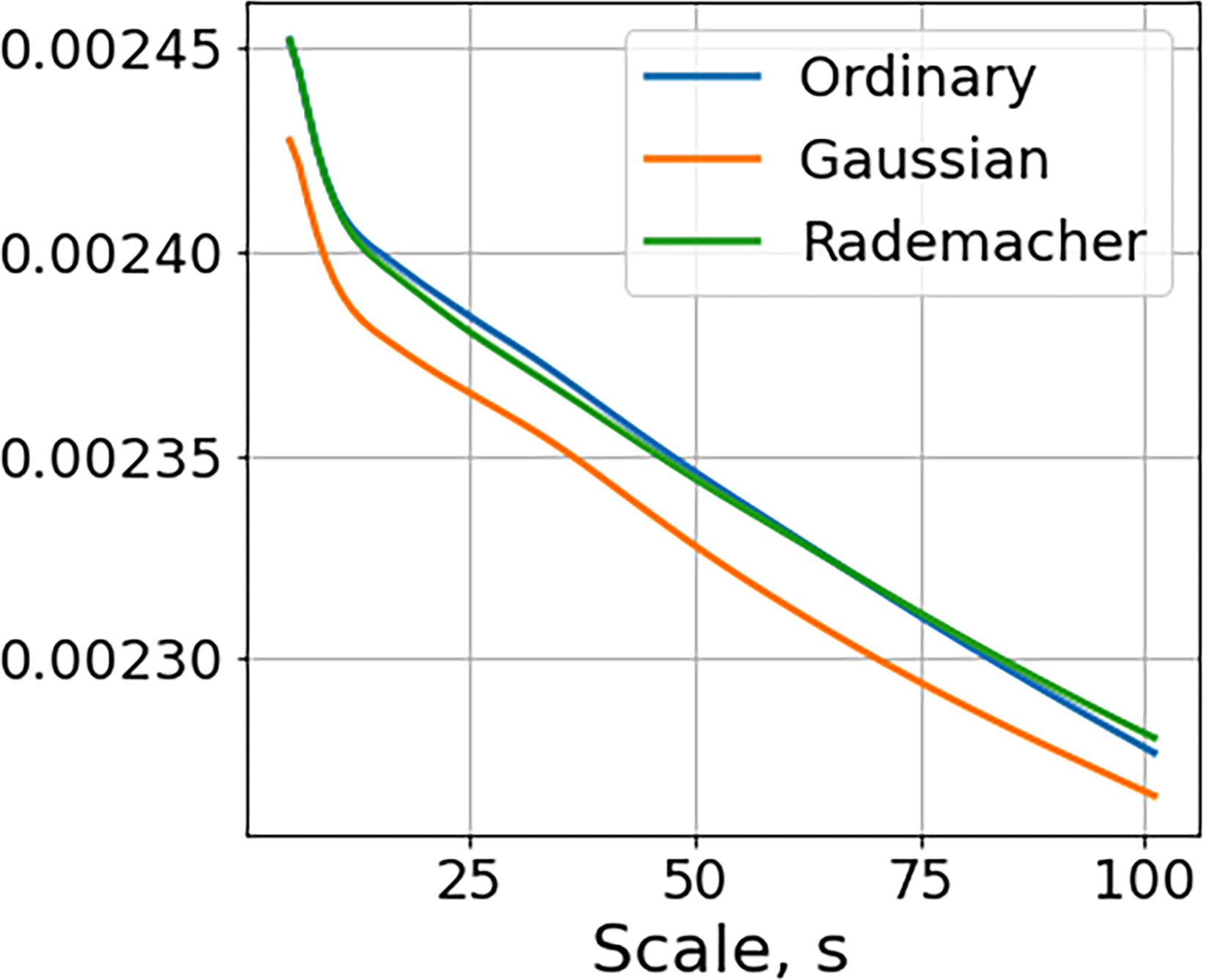

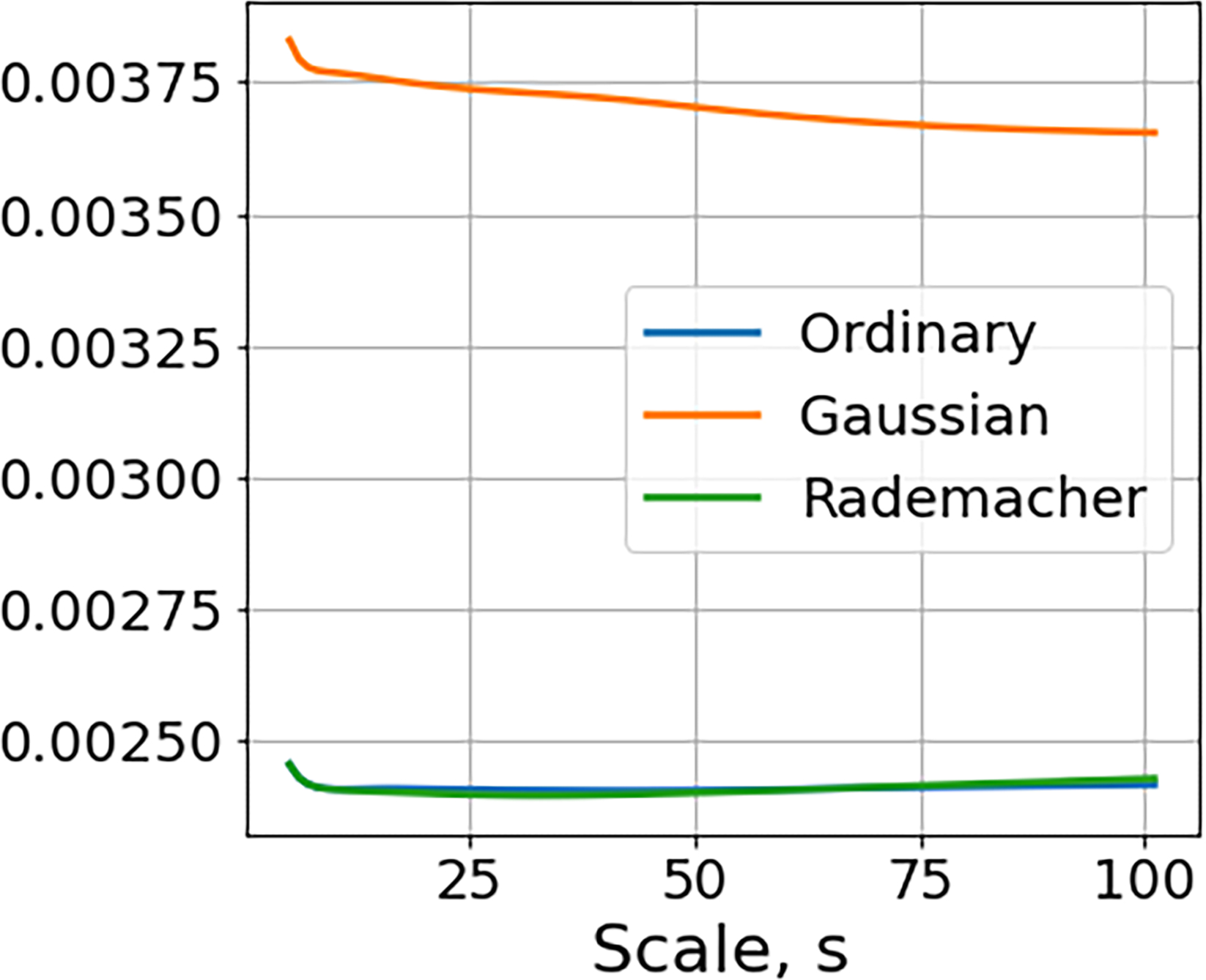

We generated three homogeneous compound Poisson point processes, all with intensity λ(t) ≡ λ0 = 0.01, where the charges A1,j, A2,j, and A3,j are chosen so that A1,j = 1 uniformly, , and A3,j are Rademacher random variables. The charges of the three signals have the same first moment and different second moment with and . As predicted by Theorem 2, Figure 1 shows first-order scattering moments will not be able to distinguish between the three processes with p = 1, but will distinguish the process with Gaussian charges from the other two when p = 2.
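The moment matching behind this experiment can be checked numerically. The sketch below is illustrative only: it assumes the Gaussian charges are centered with variance π/2 (our assumption, chosen because a centered Gaussian with standard deviation σ has E|A| = σ√(2/π), which equals 1 for that variance), and it interprets "first moment" as the first absolute moment E|A|. The function and dictionary names are hypothetical.

```python
import math
import random


def absolute_moments(sampler, n_samples: int = 200_000, seed: int = 0):
    """Monte Carlo estimates of (E|A|, E|A|^2) for charges drawn by `sampler`."""
    rng = random.Random(seed)
    first = second = 0.0
    for _ in range(n_samples):
        a = sampler(rng)
        first += abs(a)
        second += a * a
    return first / n_samples, second / n_samples


# Assumed Gaussian scale: sigma = sqrt(pi / 2) gives E|A| = sigma * sqrt(2 / pi) = 1.
SIGMA = math.sqrt(math.pi / 2.0)

samplers = {
    "constant": lambda rng: 1.0,
    "gaussian": lambda rng: rng.gauss(0.0, SIGMA),
    "rademacher": lambda rng: rng.choice((-1.0, 1.0)),
}
moments = {name: absolute_moments(draw) for name, draw in samplers.items()}
```

With this choice all three charge laws share E|A| = 1, while only the Gaussian law has a second moment different from 1, which is consistent with the p = 1 curves coinciding and the p = 2 curves separating.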

Fig. 1.

First-order invariant scattering moments of homogeneous compound Poisson point processes with the same intensity λ0 and different Ai. Left: Realizations of the process with arrival rates given by Top: Ai = 1 Middle: Ai are normal random variables Bottom: Ai are Rademacher random variables. Middle: Plots of normalized first-order scattering moments with p = 1. Right: Plots of normalized first-order scattering moments with p = 2.

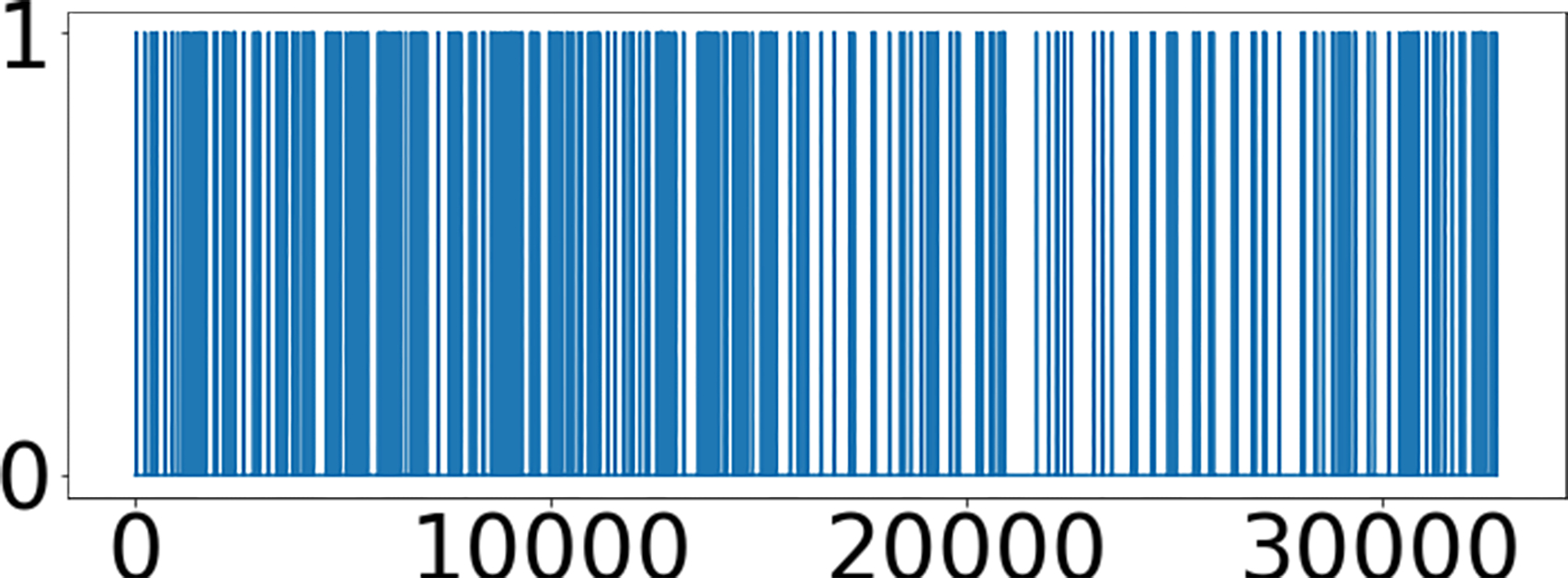

Inhomogeneous, non-compound Poisson point processes:

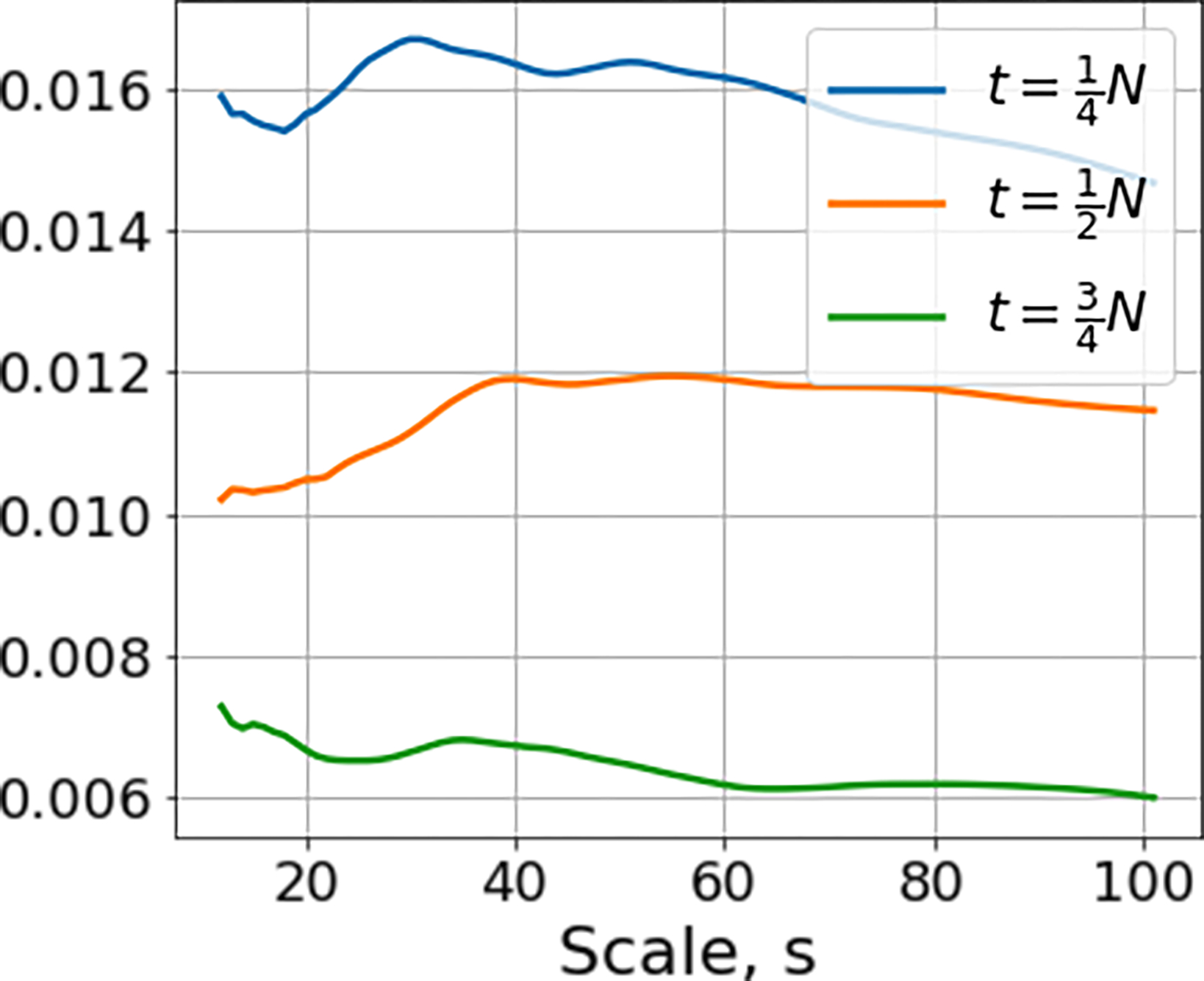

We also consider an inhomogeneous, non-compound Poisson point process with intensity function (where we estimate Sγ,pY(t) by averaging over 1000 realizations). Figure 2 plots the scattering moments for the inhomogeneous process at different times, and shows they align with the true intensity function.

Fig. 2.

First-order scattering moments for inhomogeneous Poisson point processes. Left: Sample realization with . Right: Time-dependent scattering moments at , ,. Note that the scattering coefficients at times t1, t2, t3 converge to λ(t1) = 0.015, λ(t2) = 0.01, λ(t3) = 0.005.

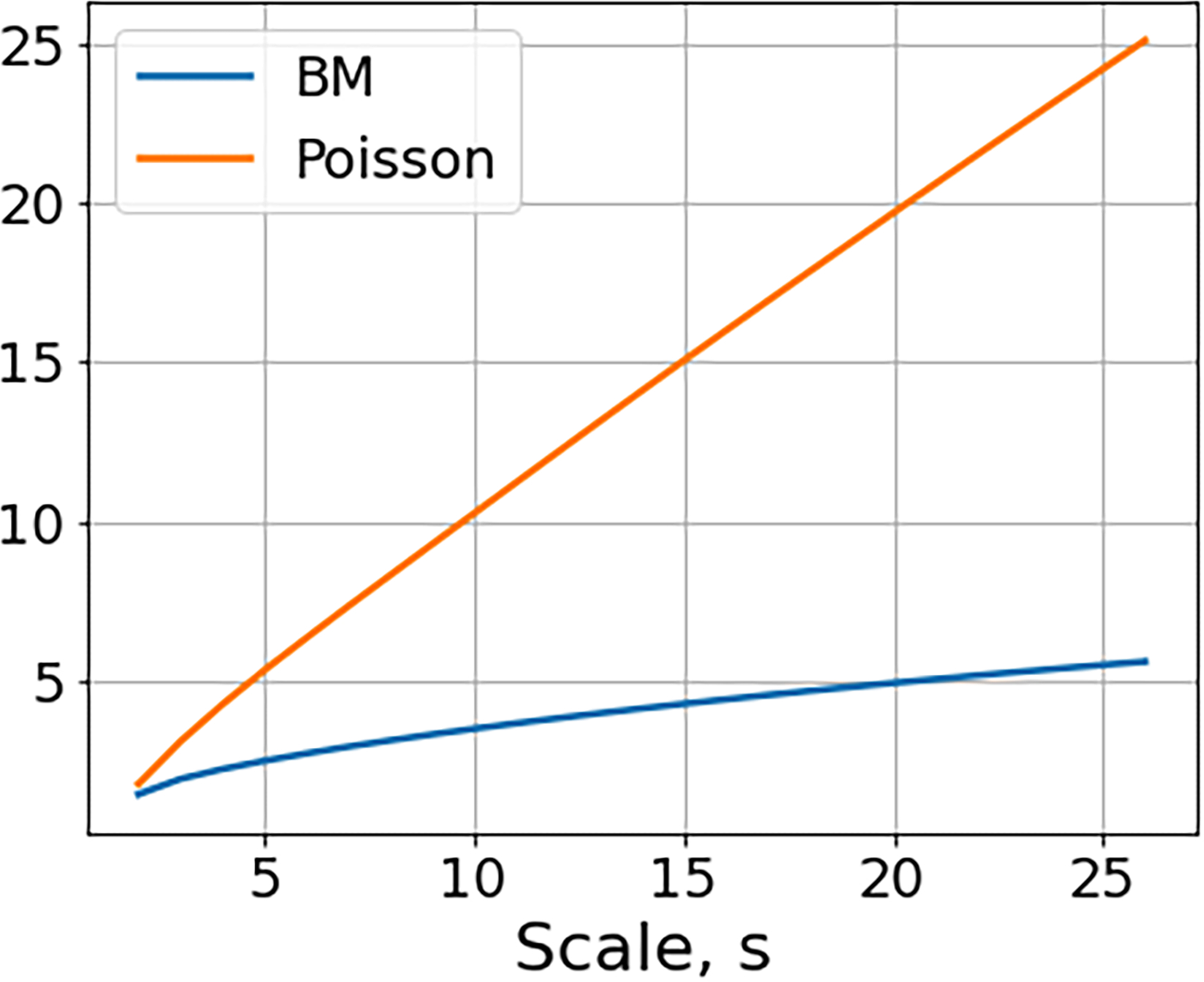

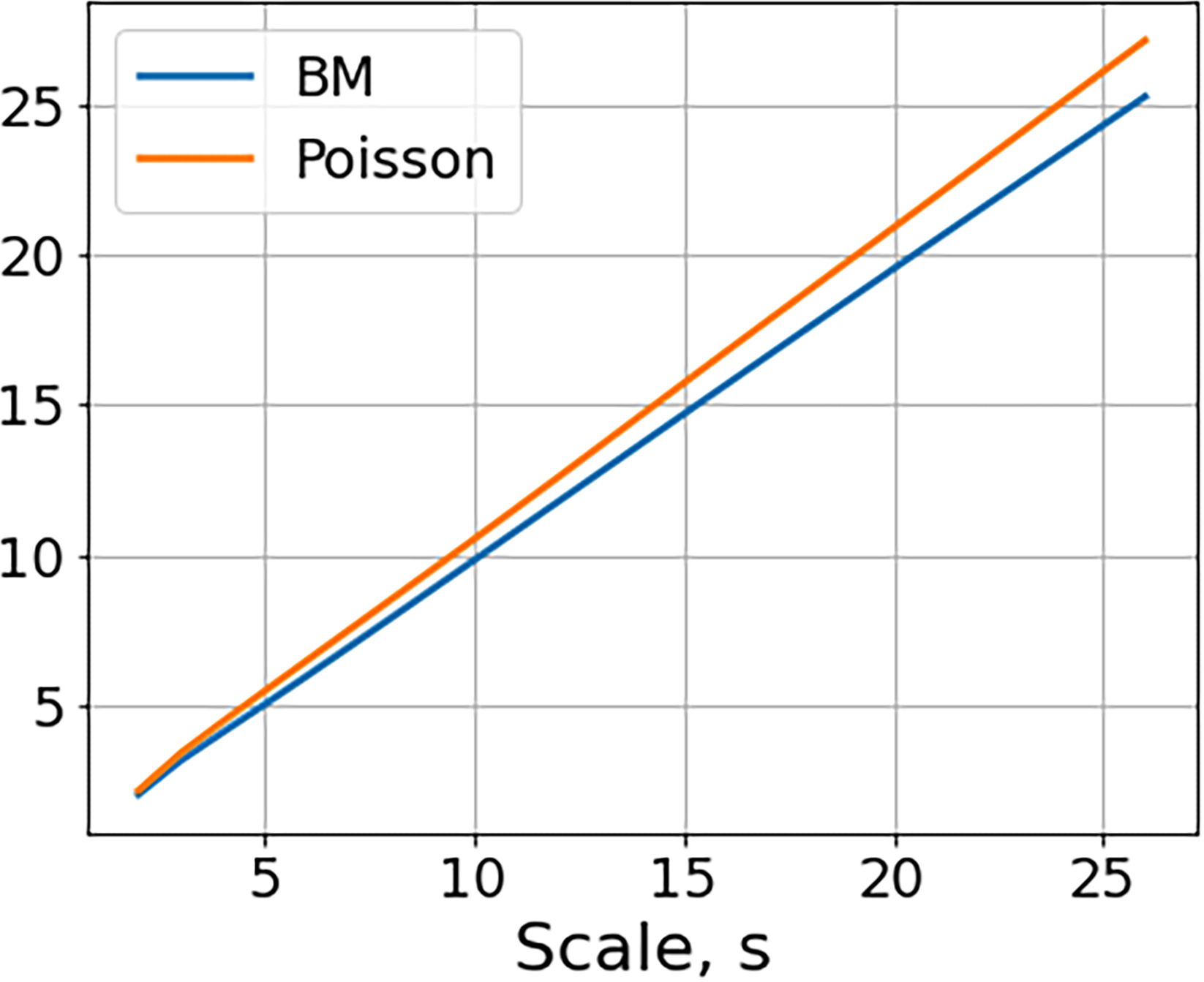

Poisson point process and self-similar process:

We consider a Brownian motion compared to a Poisson point process with intensity λ = 0.01 and charges . Figure 3 shows the convergence rate of the first-order scattering moments can distinguish these processes when p = 1 but not when p = 2.

Fig. 3.

First-order invariant scattering moments for standard Brownian motion and Poisson point process. Left: Sample realizations Top: Brownian motion. Bottom: Ordinary Poisson point process. Middle: Normalized scattering moments and for Poisson and BM with p = 1. Right: The same but with p = 2.

6. CONCLUSION

We have constructed Gabor-filter scattering transforms for random measures on ℝd. Our work is closely related to [14] but considers more general classes of filters and point processes (although we note that [14] provides a more detailed analysis of self-similar processes). In future work, it would be interesting to explore the use of these measurements for tasks such as synthesizing new signals.

A. PROOF OF THEOREM 1

To prove Theorem 1 we will need the following lemma.

Lemma 1.

Let Z be a Poisson random variable with parameter λ. Then for all , , 0 < λ < 1, we have

Proof. For 0 < λ < 1 and , e−λλk ⩽ 1. Therefore,

The proof of Theorem 1. Recalling the definitions of Y(dt) and Sγ,pY(t), and setting Ns(t) = N([t − s, t]d), we see

where are the points N(t) in [t − s, t]d. Conditioned on the event that Ns(t) = k, the locations of the k points on [t − s, t]d are distributed as i.i.d. random variables Z1, . . . , Zk taking values in [t − s, t]d with density

Therefore, the random variables

take values in the unit cube [0, 1]d and have density

Note that in the special case that N is homogeneous, i.e. λ(t) ≡ λ0 is constant, the Vi are uniform random variables on [0, 1]d.

Therefore, computing the conditional expectation, we have for k ⩾ 1

| (13) |

| (14) |

where (14) follows from (i) the independence of the random variables Aj and Vj; (ii) the fact that for any sequence of i.i.d. random variables Z1, Z2, . . .,

and (iii) the fact that

Therefore, since ,

where

By (14) and Lemma 1, if s is small enough so that Λs(t) ⩽ sd∥λ∥∞ < 1, then:

B. PROOF OF THEOREM 2

Proof. Let (sk, ξk) be a sequence of scale and frequency pairs such that limk→∞ sk = 0. Applying Theorem 1 with m = 1, we obtain:

where we write V1,k = V1 to emphasize the fact that the density of V1,k is:

Using the error bound (7), we see that:

Furthermore, since , we observe that:

and by the continuity of λ(t),

| (15) |

Finally, by the continuity of λ(t), we see that

| (16) |

Therefore, by the bounded convergence theorem,

That completes the proof of (8).

To prove (9), we assume that λ(t) is periodic with period T along each coordinate and again use Theorem 1 with m = 1 to observe,

By (7), the second integral converges to zero as k → ∞. Therefore,

by the continuity of λ(t) and the bounded convergence theorem.

C. PROOF OF THEOREM 3

Proof. We apply Theorem 1 with m = 2 and obtain:

| (17) |

| (18) |

| (19) |

where Vi,k, i = 1,2, are random variables taking values on the unit cube [0, 1]d with densities,

Dividing both sides in (18) by and subtracting yields:

| (20) |

| (21) |

Using the error bound (7),

| (22) |

at a rate independent of t. Recalling (16) from the proof of Theorem 2, we use the fact that and the bounded convergence theorem to conclude,

| (23) |

| (24) |

where Ui, i = 1, 2, are uniform random variables on the unit cube and . Similarly,

| (25) |

Lastly, recalling that sk → 0 as k → ∞ and using (15) from the proof of Theorem 2, we see

| (26) |

| (27) |

Now we integrate both sides of (21) over [0, T]d and divide by Td. Taking the limit as k → ∞, on the left hand side we get:

where we used the definition of the invariant scattering moments and (25). On the right hand side of (21), we use (25), (27) and the dominated convergence theorem to see that the first term is:

Using (15), (23), and the bounded convergence theorem, the second term of (21) is:

where

Finally, the third term of (21) goes to zero using the bounded convergence theorem and (22). Putting together the left and right hand sides of (21) with these calculations finishes the proof.

D. PROOF OF THEOREM 4

Proof. As in the proof of Theorem 1, let Ns(t) = N ([t − s, t]d) denote the number of points in the cube [t − s, t]d. Then since the support of w is contained in [0, 1]d,

where are the points of N in [t − sk, t]d. Therefore, in the event that ,

and so, partitioning the space of possible outcomes based on , we obtain:

where

Using the above, we can write the second order convolution term as:

The following lemma implies that decays rapidly in at a rate independent of t.

Lemma 2.

There exists δ > 0, independent of t, such that if sk < δ,

Once we have proved Lemma 2, equation (11) will follow once we show,

| (28) |

Let us prove (28) first and postpone the proof of Lemma 2. We will use the fact that the support of is contained in . Let , , , and let be the points of N in the cube . We have that , and conditioned on the event that Nk(t) = n, the locations of the points t1, . . . , tn are distributed as i.i.d. random variables Z1(t), . . . , Zn(t) taking values in with density . Therefore the i.i.d. random variables defined by take values in and have density

Now, we condition on Nk(t) to see that

| (29) |

| (30) |

| (31) |

| (32) |

The following lemma will be used to estimate the scaling of the term in (31).

Lemma 3.

For all ,

| (33) |

Furthermore, there exists δ > 0, independent of t, such that if sk < δ then

| (34) |

Proof. Making a change of variables in both u and v, and recalling the assumption that , we observe that

| (35) |

| (36) |

The continuity of λ(t) implies that

Furthermore, the assumption 0 < λmin ⩽ ∥λ∥∞ < ∞ implies

| (37) |

Therefore, (33) follows from the dominated convergence theorem and by the observation that the inner integral of (36) is zero unless v ∈ [0, 1 + c]d. Equation (34) follows from inserting (37) into (36) and sending k to infinity.

Since

the independence of and A1, the continuity of λ(t), and Lemma 3 imply that taking k → ∞ in (31) yields:

The following lemma shows that (32) is (and converges at a rate independent of t), and therefore completes the proof of (11) subject to proving Lemma 2.

Lemma 4.

For all there exists δ > 0, independent of t, such that if sk < δ, then

Proof. For any sequence of i.i.d. random variables, Z1, Z2, . . . , it holds that

Therefore, by Lemma 1, Lemma 3, and the fact that the and Ai are i.i.d. and independent of each other, we see that if sk < δ, where δ is as in (34),

where the last inequality uses the fact that .

We will now complete the proof of the theorem by proving Lemma 2.

Proof. [Lemma 2] Since

we see that

First turning our attention to the second term, we note that

| (38) |

since for all . Therefore, conditioning on Nk(t), if sk < δ,

by Lemma 4. Now, turning our attention to the first term, note that

Therefore, by the same logic as in (38)

So again conditioning on Nk (t), and applying Lemma 4, we see that if sk < δ

This completes the proof of (11). Line (12) follows from integrating with respect to t, observing that the error bounds in Lemmas 2 and 3 are independent of t, and applying the bounded convergence theorem.

E. PROOF OF THEOREM 5

In order to prove Theorem 5, we will need the following lemma, which shows that the scaling relationship of a self-similar process X(t) induces a similar relationship on stochastic integrals against dX(t).

Lemma 5.

Let X be a stochastic process that satisfies the scaling relation

| (39) |

for some β > 0 (where =d denotes equality in distribution). Then for any measurable function ,

Proof. Let X be a stochastic process satisfying (39), and let be a sequence of partitions of [0, 1] such that

Then, by the scaling relation (39),

We will now use Lemma 5 to prove Theorem 5.

Proof. We first consider the case where X is an α-stable process, p < α ⩽ 2. Since X has stationary increments, its scattering coefficients do not depend on t and it suffices to analyze

where the second equality uses the fact the distribution of X does not change if it is run in reverse, i.e.

It is well known that X(t) satisfies (39) for β = 1/α. Therefore, by Lemma 5

So,

The proof will be complete as soon as we show that

By the triangle inequality,

Since 1 ⩽ p < α, we may choose p′ strictly greater than 1 such that p ⩽ p′ < α, and note that by Jensen’s inequality

and since X(t) is a p′-integrable martingale, the boundedness of martingale transforms (see [16] and also [17]) implies

which converges to zero by the continuity of w on [0, 1] and the assumption that skξk converges to L.

Similarly, in the case where X is a fractional Brownian motion with Hurst parameter H, we again need to show

However, fractional Brownian motion is not a semi-martingale so we cannot apply Burkholder’s theorem as we did in the α-stable case. Instead, we use the Young-Loève estimate [18], which states that if x(u) is any (deterministic) function with bounded variation, and y(u) is any function which is α-Hölder continuous, 0 < α < 1, then

is well-defined as the limit of Riemann sums and

where ∥·∥BV and ∥·∥α are the bounded variation and α-Hölder seminorms respectively. For all k, the function satisfies, hk(0) = 0 and

One can check that the fact that skξk converges to L implies that fk converges to zero in both L∞ and in the bounded variation seminorm, and therefore that ∥hk∥BV converges to zero.

It is well-known that fractional Brownian motion with Hurst parameter H admits a continuous modification which is α-Hölder continuous for any α < H. Therefore,

Lastly, one can use the Garsia-Rodemich-Rumsey inequality [19], to show that

for all 1 < p < ∞. For details we refer the reader to the survey article [20]. Therefore,

as desired.

Remark 1.

The assumption that w has bounded variation was used to justify that the stochastic integral against fractional Brownian motion is well defined as the limit of Riemann sums, because of its Hölder continuity and the above-mentioned result of [18]. This allowed us to avoid the technical complexities of defining such an integral using either the Malliavin calculus or the Wick product.

F. DETAILS OF NUMERICAL EXPERIMENTS

F.1. Definition of Filters

For all the numerical experiments, we take the window function w to be the smooth bump function

Therefore for γ = (s, ξ), our filters are given by

F.2. Frequencies

In all of our experiments, we hold the frequency, ξ, which we sample uniformly at random from (0, 2π), constant while allowing the scale to decrease to zero.

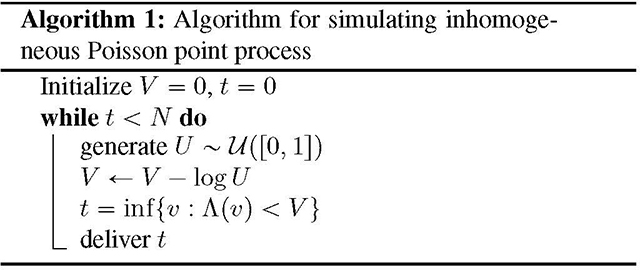

F.3. Simulation of Poisson point process

We use the standard method to generate a realization of a Poisson point process. For a Poisson point process with intensity λ, the time interval between two neighboring jumps follows an exponential distribution:

Therefore, taking the inverse cumulative distribution function, we sample the time interval between two neighboring jumps through:

where Uj are i.i.d. uniform random variables on [0, 1], and assign the charge Aj to the jump at location tj.
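The homogeneous case can be sketched in a few lines. The code below is an illustration under our own assumptions: it draws inter-arrival times by inverse-CDF sampling of an exponential law, written here as −ln(1 − U)/λ (the exact form used in the experiments is not reproduced in this text), and the function name, time horizon, and Rademacher charge sampler are hypothetical.

```python
import math
import random
from typing import Callable, List, Tuple


def simulate_compound_poisson(
    lam: float,
    horizon: float,
    charge_sampler: Callable[[random.Random], float],
    seed: int = 0,
) -> Tuple[List[float], List[float]]:
    """Return jump locations t_j in (0, horizon] and their charges A_j."""
    rng = random.Random(seed)
    points: List[float] = []
    charges: List[float] = []
    t = 0.0
    while True:
        u = rng.random()                   # uniform on [0, 1)
        t += -math.log(1.0 - u) / lam      # inverse CDF of Exp(lam); 1 - u lies in (0, 1]
        if t > horizon:
            break
        points.append(t)
        charges.append(charge_sampler(rng))
    return points, charges


# Usage: intensity 0.01 with Rademacher charges on a hypothetical horizon.
points, charges = simulate_compound_poisson(
    lam=0.01, horizon=100_000.0, charge_sampler=lambda rng: rng.choice((-1.0, 1.0))
)
```

Using 1 − U rather than U inside the logarithm avoids evaluating log(0), since `random.Random.random()` can return 0.0 but never 1.0.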

For an inhomogeneous Poisson process with intensity function λ(t), we simulate the time intervals using a well-known algorithm. We first define the cumulated intensity:

then generate the locations of the jumps tj by Algorithm 1.
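Since Algorithm 1 is not reproduced in this text, the following is only a sketch of one standard approach that uses the cumulated intensity, namely the inversion (time-change) method: generate the arrivals of a unit-rate Poisson process and map them back through the inverse of the cumulated intensity Λ. Whether this coincides with Algorithm 1 is an assumption. The sinusoidal intensity is likewise a hypothetical example, chosen so that its values range between 0.005 and 0.015 as in Figure 2, and all function names are ours.

```python
import math
import random
from typing import Callable, List


def invert_increasing(f: Callable[[float], float], target: float,
                      lo: float, hi: float, tol: float = 1e-9) -> float:
    """Solve f(x) = target on [lo, hi] by bisection, assuming f is nondecreasing."""
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if f(mid) < target:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def simulate_inhomogeneous_poisson(cumulative_intensity: Callable[[float], float],
                                   horizon: float, seed: int = 0) -> List[float]:
    """Jump locations on [0, horizon] obtained as Lambda^{-1} of unit-rate arrivals."""
    rng = random.Random(seed)
    total = cumulative_intensity(horizon)
    points: List[float] = []
    level = 0.0
    while True:
        level += -math.log(1.0 - rng.random())  # unit-rate exponential gap
        if level > total:
            break
        points.append(invert_increasing(cumulative_intensity, level, 0.0, horizon))
    return points


# Hypothetical intensity lambda(t) = lam0 * (1 + 0.5 * sin(2 * pi * t / period)).
lam0, period = 0.01, 1000.0


def cumulative(t: float) -> float:
    """Closed-form integral of the hypothetical intensity from 0 to t."""
    omega = 2.0 * math.pi / period
    return lam0 * (t + 0.5 * (1.0 - math.cos(omega * t)) / omega)


jumps = simulate_inhomogeneous_poisson(cumulative, horizon=10_000.0)
```

Bisection is used only to keep the sketch free of dependencies; when Λ has a closed-form inverse, it can be applied directly.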

Acknowledgments

M.H. acknowledges support from NSF DMS #1845856 (partially supporting J.H.), NIH NIGMS #R01GM135929, and DOE #DE-SC0021152.

Footnotes

¹ A proof of Theorem 1, as well as the proofs of other theorems stated in this paper, can be found in the appendix.

7. REFERENCES

- [1]. Laurent Sifre and Stéphane Mallat, “Rotation, scaling and deformation invariant scattering for texture discrimination,” in The IEEE Conference on Computer Vision and Pattern Recognition (CVPR), June 2013.

- [2]. Leon Gatys, Alexander S. Ecker, and Matthias Bethge, “Texture synthesis using convolutional neural networks,” in Advances in Neural Information Processing Systems 28, 2015, pp. 262–270.

- [3]. Joseph Antognini, Matt Hoffman, and Ron J. Weiss, “Synthesizing diverse, high-quality audio textures,” arXiv:1806.08002, 2018.

- [4]. Mikolaj Binkowski, Gautier Marti, and Philippe Donnat, “Autoregressive convolutional neural networks for asynchronous time series,” in Proceedings of the 35th International Conference on Machine Learning, Jennifer Dy and Andreas Krause, Eds., Stockholmsmässan, Stockholm, Sweden, 10–15 Jul 2018, vol. 80 of Proceedings of Machine Learning Research, pp. 580–589, PMLR.

- [5]. Antoine Brochard, Bartłomiej Błaszczyszyn, Stéphane Mallat, and Sixin Zhang, “Statistical learning of geometric characteristics of wireless networks,” arXiv:1812.08265, 2018.

- [6]. Martin Haenggi, Jeffrey G. Andrews, François Baccelli, Olivier Dousse, and Massimo Franceschetti, “Stochastic geometry and random graphs for the analysis and design of wireless networks,” IEEE Journal on Selected Areas in Communications, vol. 27, no. 7, pp. 1029–1046, 2009.

- [7]. Astrid Genet, Pavel Grabarnik, Olga Sekretenko, and David Pothier, “Incorporating the mechanisms underlying inter-tree competition into a random point process model to improve spatial tree pattern analysis in forestry,” Ecological Modelling, vol. 288, pp. 143–154, 09 2014.

- [8]. Frederic Paik Schoenberg, “A note on the consistent estimation of spatial-temporal point process parameters,” Statistica Sinica, 2016.

- [9]. V. Fromion, E. Leoncini, and P. Robert, “Stochastic gene expression in cells: A point process approach,” SIAM Journal on Applied Mathematics, vol. 73, no. 1, pp. 195–211, 2013.

- [10]. Stéphane Mallat, “Group invariant scattering,” Communications on Pure and Applied Mathematics, vol. 65, no. 10, pp. 1331–1398, October 2012.

- [11]. Joakim Andén and Stéphane Mallat, “Deep scattering spectrum,” IEEE Transactions on Signal Processing, vol. 62, no. 16, pp. 4114–4128, August 2014.

- [12]. Edouard Oyallon and Stéphane Mallat, “Deep roto-translation scattering for object classification,” in Proceedings in IEEE CVPR 2015 conference, 2015, arXiv:1412.8659.

- [13]. Xavier Brumwell, Paul Sinz, Kwang Jin Kim, Yue Qi, and Matthew Hirn, “Steerable wavelet scattering for 3D atomic systems with application to Li-Si energy prediction,” in NeurIPS Workshop on Machine Learning for Molecules and Materials, 2018.

- [14]. Joan Bruna, Stéphane Mallat, Emmanuel Bacry, and Jean-Francois Muzy, “Intermittent process analysis with scattering moments,” Annals of Statistics, vol. 43, no. 1, pp. 323–351, 2015.

- [15]. D. J. Daley and D. Vere-Jones, An introduction to the theory of point processes. Vol. I, Probability and its Applications (New York). Springer-Verlag, New York, second edition, 2003, Elementary theory and methods.

- [16]. Donald L. Burkholder, “Sharp inequalities for martingales and stochastic integrals,” in Colloque Paul Lévy sur les processus stochastiques, number 157–158 in Astérisque, pp. 75–94. Société mathématique de France, 1988.

- [17]. Rodrigo Bañuelos and Gang Wang, “Sharp inequalities for martingales with applications to the Beurling-Ahlfors and Riesz transforms,” Duke Math. J., vol. 80, no. 3, pp. 575–600, 12 1995.

- [18]. L. C. Young, “An inequality of the Hölder type, connected with Stieltjes integration,” Acta Math., vol. 67, pp. 251–282, 1936.

- [19]. A. M. Garsia, E. Rodemich, and H. Rumsey Jr., “A real variable lemma and the continuity of paths of some Gaussian processes,” Indiana University Mathematics Journal, vol. 20, no. 6, pp. 565–578, 1970.

- [20]. G. Shevchenko, “Fractional Brownian motion in a nutshell,” in International Journal of Modern Physics Conference Series, Jan. 2015, vol. 36 of International Journal of Modern Physics Conference Series, p. 1560002.