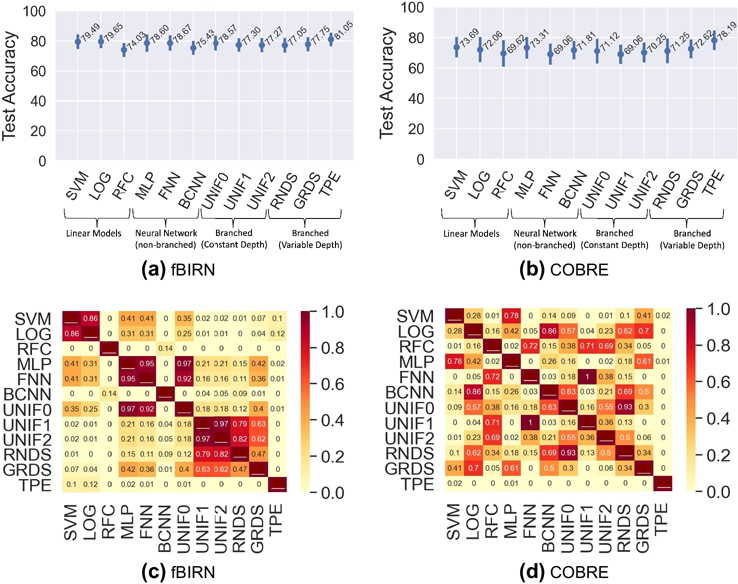

Figure 6.

Mean validation accuracy with error bars over 50 repetitions of the TPE-optimized final architecture, compared with baseline methods, for the (a) fBIRN and (b) COBRE datasets; panels (c) and (d) show the p-values from two-sample t-tests on the mean test accuracy across the 50 repetitions. The baselines comprise simple machine learning models (SVM, LOG, RFC), non-branched neural network architectures (MLP, FNN, BCNN), branched neural network architectures without flexibility (UNIF0, UNIF1, UNIF2), and branched neural network architectures with non-uniform branch depth (GRDS, RNDS). See Figure 3 and subsection 2.6 for a visualization and detailed explanation of these methods. The architecture obtained from the repeated optimizations with the TPE procedure is labeled TPE in the plots. For both datasets, the accuracy of the TPE-optimized architecture is significantly higher than that of the uniformly branched architectures (UNIF0, UNIF1), indicating the need for flexible architectures. Moreover, the accuracy with TPE is slightly higher than that of the other baseline methods, and the optimized model offers scope for interpretability: certain subdomain interactions (SDIs) with higher complexity require deeper architectures, while others require shallower ones.
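As an illustration of the kind of comparison summarized in panels (c) and (d), the following is a minimal sketch of a two-sample t-test between the per-repetition accuracies of two methods. It assumes the pooled-variance (equal-variance) form of the test, which the caption does not specify, and it uses synthetic placeholder accuracies rather than values from the study. The names `two_sample_t_test`, `t_pdf`, `acc_method_a`, and `acc_method_b` are hypothetical.

```python
import math
import random
import statistics


def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    log_norm = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
                - 0.5 * math.log(df * math.pi))
    return math.exp(log_norm - (df + 1) / 2 * math.log1p(x * x / df))


def two_sample_t_test(a, b, steps=2000):
    """Pooled-variance two-sample t-test (an assumed variant).

    Returns (t statistic, degrees of freedom, two-sided p-value).
    `steps` must be even because Simpson's rule is used below.
    """
    n_a, n_b = len(a), len(b)
    df = n_a + n_b - 2
    pooled_var = ((n_a - 1) * statistics.variance(a)
                  + (n_b - 1) * statistics.variance(b)) / df
    t = (statistics.mean(a) - statistics.mean(b)) / math.sqrt(
        pooled_var * (1 / n_a + 1 / n_b))

    # Simpson's rule for the integral of the t density over [0, |t|].
    upper = abs(t)
    h = upper / steps
    total = t_pdf(0.0, df) + t_pdf(upper, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    central = total * h / 3

    # Two-sided p-value: probability mass outside [-|t|, |t|].
    p = max(0.0, 1.0 - 2.0 * central)
    return t, df, p


# Synthetic placeholder accuracies for 50 repetitions of two methods.
# These numbers are NOT results from the paper.
rng = random.Random(0)
acc_method_a = [rng.gauss(0.80, 0.02) for _ in range(50)]
acc_method_b = [rng.gauss(0.76, 0.02) for _ in range(50)]

t_stat, dof, p_value = two_sample_t_test(acc_method_a, acc_method_b)
print(f"t = {t_stat:.2f}, df = {dof}, p = {p_value:.3g}")
```

In practice a statistics library would normally be used for the p-value; the numerical integration here only keeps the sketch free of external dependencies. If the two methods have clearly different variances, Welch's unequal-variance form would be the safer choice.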