Abstract

Objectives

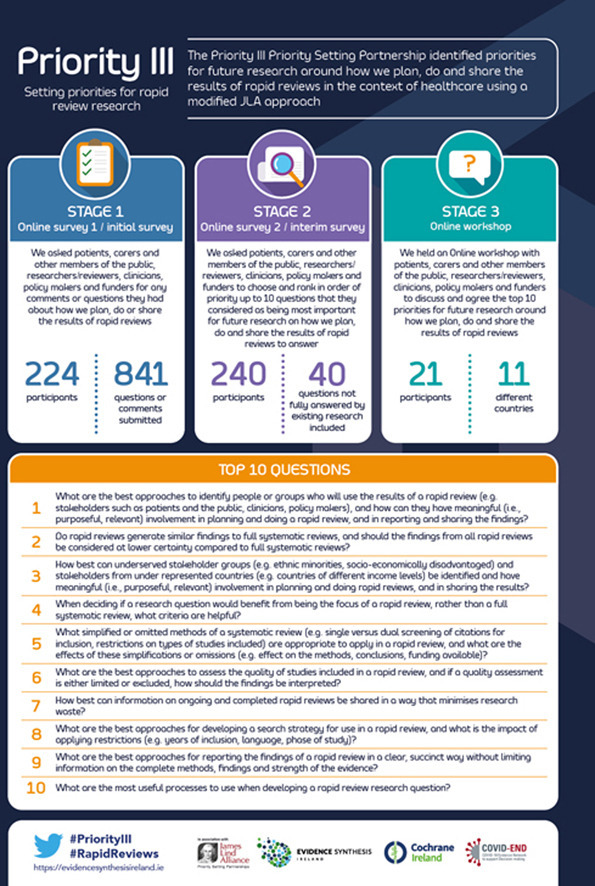

A rapid review is a form of evidence synthesis considered a resource-efficient alternative to the conventional systematic review. Despite a dramatic rise in the number of rapid reviews commissioned and conducted in response to the coronavirus disease 2019 pandemic, published evidence on the optimal methods of planning, doing, and sharing the results of these reviews is lacking. The Priority III study aimed to identify the top 10 unanswered questions on rapid review methodology to be addressed by future research.

Study Design and Setting

A modified James Lind Alliance Priority Setting Partnership approach was adopted. This approach used two online surveys and a virtual prioritization workshop with patients and the public, reviewers, researchers, clinicians, policymakers, and funders to identify and prioritize unanswered questions.

Results

Patients and the public, researchers, reviewers, clinicians, policymakers, and funders identified and prioritized the top 10 unanswered research questions about rapid review methodology. Priorities were identified throughout the entire review process, from stakeholder involvement and formulating the question, to the methods of a systematic review that are appropriate to use, through to the dissemination of results.

Conclusion

The results of the Priority III study will inform the future research agenda on rapid review methodology. We hope this will enhance the quality of evidence produced by rapid reviews, which will ultimately inform decision-making in the context of healthcare.

Keywords: Rapid review, Systematic review, Methodology, Evidence synthesis, Priority Setting Partnership, PPI


What is new?

Key findings

- The top 10 priorities for future research to address how we plan, do, and share the results of rapid reviews have been identified.
- These priorities have been determined by the stakeholder groups most affected by rapid reviews, that is, patients and the public, those who conduct reviews (reviewers), researchers, clinicians, policymakers, and funders.

What this adds to what is known?

- The results of Priority III contribute to minimizing research waste and inefficiency by providing an agenda on which future research can be built to improve how we plan, do, and share the findings of rapid reviews.

What is the implication, what should change now?

- The identified research priorities can guide the allocation of often limited research resources toward answering the questions most important to the stakeholders most affected by planning, doing, and sharing the results of rapid reviews.

1. Introduction

Evidence synthesis brings together information from research studies that have investigated the same specific question to come to an overall understanding of what was found [1]. In the context of healthcare, the products of evidence synthesis are used to inform decisions by patients and the public, clinicians, researchers, reviewers, policymakers, and funders. Systematic reviews are viewed as the gold standard in evidence synthesis to inform decision-making [2]. However, conducting a conventional systematic review often requires significant resources such as time, expertise, and funding. The need for a resource-efficient alternative to systematic reviews resulted in the emergence of rapid reviews, which are completed through the omission or simplification of certain steps of a conventional systematic review process [3].

Reference to rapid reviews in the literature can be traced back to 1997 [4]. The volume of rapid reviews being commissioned and conducted has risen steadily since then, with demand escalating dramatically following the coronavirus disease 2019 (COVID-19) pandemic [5]. The increased demand for rapid reviews in response to the pandemic is attributable to the urgent need to provide evidence in a resource-efficient manner, which cannot be satisfied through conventional systematic reviews [6]. As demand for rapid reviews has grown, however, so too have uncertainty and debate about their methodology. Despite the name suggesting that rapid reviews are conducted faster than conventional systematic reviews, this is not always the case. Many high-quality systematic reviews can be, and have been, completed within a time-critical window when, for example, additional expertise and funding are available or when few, if any, studies are identified for inclusion in the review [7].

A 2015 scoping review of 82 rapid reviews identified 50 unique methods of simplifying or omitting steps of systematic reviews, including the omission of grey literature, having one reviewer screen titles and abstracts, the application of language restrictions, and the absence of risk of bias/quality appraisal [8]. The need for research on rapid review methodology is evident, with the Cochrane Rapid Review Methods Group stating that:

While the concept of rapid evidence synthesis, or rapid review (RR), is not novel, it remains a poorly understood and as yet ill-defined set of diverse methodologies supported by a paucity of published, available scientific literature. The speed with which RRs are gaining prominence and are being incorporated into urgent decision-making underscores the need to explore their characteristics and use further. While rapid review producers must answer the time-sensitive needs of the health decision-makers they serve, they must simultaneously ensure that the scientific imperative of methodological rigor is satisfied. In order to adequately address this inherent tension, a need for methodological research and standard development has been identified. [9]

The Priority III study was conducted to provide an agenda for this research by identifying and prioritizing the top 10 unanswered questions for future research on how we plan, do, and share the results of rapid reviews in healthcare. The study was conducted in collaboration with the James Lind Alliance (JLA) [10], a nonprofit organization that brings multiple stakeholders together in a transparent and evidence-based Priority Setting Partnership (PSP) to identify and prioritize the most important evidence uncertainties, or unanswered research questions, about specific topics. The Priority III study is the first PSP on rapid review methodology.

The prioritization of research questions helps minimize research waste by providing a focus for future research resources [11]. The conduct of research in response to the Priority III results will inform how future rapid reviews are planned, done, and their findings shared, ultimately enhancing the use of high-quality synthesized evidence to inform healthcare policy and practice. The prioritization of research questions by the multiple stakeholders that are impacted most by rapid review methodology aligns with the suggestion by the World Health Organization that the methods of rapid reviews should be tailored to the decision-makers’ needs [12].

Evidence Synthesis Ireland conducted the Priority III PSP in collaboration with the JLA. Evidence Synthesis Ireland is an all-Ireland initiative funded by the Health Research Board in Ireland and the Health and Social Care, Research and Development Division of the Public Health Agency in Northern Ireland.

2. Methods

The study used a modified PSP based on the methods of the JLA [10] and the PRioRiTy I [13] and PRioRiTy II [14] studies, as set out in the protocol [15]. PRioRiTy I and II identified methodology uncertainties in recruitment and retention within clinical trials. JLA PSPs have ordinarily focussed on health conditions or settings. PRioRiTy I, II and Priority III differ because they identify and prioritize uncertainties about research methods. Based on this difference, it was necessary for the JLA approach to be modified slightly by the inclusion of a wider variety of stakeholder groups, for example, researchers, in the PSP process.

The results of Priority III are reported following the REporting guideline for PRIority SEtting of health research (REPRISE) criteria [16].

2.1. Establishing the steering group

An international steering group was established to guide the PSP process. Membership of the steering group reflected preidentified stakeholder groups. It comprised 26 members from 5 different countries: 5 patient and public partners, 6 researchers or reviewers, 6 clinicians, 4 policymakers, 3 funders, and 2 JLA representatives. All members were identified either through professional connections or through groups such as the Cochrane Consumer Network and the Cochrane Rapid Reviews Methods Group. The steering group purposively included people with widely varying levels of experience in the commissioning, conduct, and use of rapid reviews. Particular attention was also given to building a steering group with diverse skills that could inform the PSP process, including members with survey methodology experience, information specialists, and those experienced in conducting a PSP. Steering group meetings were held approximately every 2-3 months throughout the study. Before each steering group meeting, a separate meeting was held with the five patient and public partners on the steering group. This meeting gave these partners an opportunity to discuss the study and any aspects of rapid review methodology that might be unfamiliar to them, and so facilitated their meaningful contribution to the steering group meetings. Patient and public partners were compensated for their time spent on steering group activities. Other steering group members (researchers, reviewers, clinicians, policymakers, funders) were not compensated.

2.2. Adaptation of definitions and development of an animation

The first steering group meeting, held in July 2019, identified a need to develop definitions of rapid reviews, evidence synthesis, systematic reviews, and healthcare for use throughout the study. Patient and public partners on the steering group considered these definitions imperative to the study’s clarity and success and stressed that they should be finalized before the initial survey.

Definitions for each of the four terms were drafted by adapting existing definitions. Adapted definitions were reviewed by four steering group members with expertise in rapid reviews, followed by a review by the five patient and public partners on the steering group. The steering group then discussed and signed off the final definitions before their use. The definitions were presented to participants at each stage of involvement. Please see Table 1 for the definitions used throughout the study.

Table 1.

Definitions adapted, original sources referenced

| Term | Definition adapted for use in Priority III | Origin |
|---|---|---|
| Rapid review | A rapid review is a type of evidence synthesis that brings together and summarises information from lots of different research studies to produce evidence for people such as the public, researchers, policymakers and funders in a systematic, resource-efficient manner. This is done by speeding up the ways we plan, do and/or share the results of conventional structured (systematic) reviews, by simplifying or omitting a variety of methods that should be clearly defined by the authors | Slight modification of definition used by the Cochrane Rapid Review Methods Group [17] |
| Systematic review | A systematic review is a type of evidence synthesis that brings together information from multiple studies to help answer a clear question. It uses systematic and specific methods to identify, select and quality assess included studies, followed by the collection and analysis of information. Statistical methods (meta-analysis) may or may not be used to analyse and summarise the results of the included studies | Definition adapted from the Cochrane glossary [18] |
| Evidence synthesis | Evidence synthesis uses specific, rigorous methods to bring together information from multiple studies that have looked at the same topic and provide an account of all that is known about the topic | Definition adapted from the Evidence Synthesis International [19] website and the Evidence Synthesis Ireland video |
| Healthcare | We define healthcare as being related to the treatment, control or prevention of disease, illness, injury or disability, and the care or aftercare of a person with these needs (whether or not the tasks involved have to be carried out by a health professional) | Definition adapted from the UK Department of Health and Social Care guidance document titled “National Framework for NHS Continuing Healthcare and NHS-funded Nursing Care” [20] |

These definitions were also used to inform the development of a video animation specifically for use within Priority III that described evidence synthesis, rapid reviews, and an introduction to the Priority III study and its purpose (https://www.youtube.com/watch?v=miikDRzrKAI&t=3s).

2.3. Identifying and inviting potential partners

Under JLA guidance, PSP partners are individuals or groups affected by the PSP topic. Partners were asked to participate in and promote the PSP process and to encourage the groups or members they represent to participate as well. The steering group determined which stakeholder groups were affected by rapid review methodology and would therefore be engaged throughout the study (initial survey, interim survey, and prioritization workshop), as follows:

- Patients and the public
- Researchers or reviewers
- Clinicians (inclusive of allied health professionals)
- Policymakers
- Funders

Steering group members identified potential partners from each of these stakeholder groups through peer knowledge and consultation. Each potential partner was then contacted directly by a member of the steering group with information on the study and the role of partners, and asked for their support of the project.

2.4. Initial survey: development of survey and gathering uncertainties

The initial online survey sought to gather questions or comments from participants on rapid review methodology.

Within the survey, the rapid review process was broken down into three main stages: planning, doing, and sharing results. Breaking down the review process in this way allowed participants to answer questions on one, two, or all three stages, depending on their level of comfort and knowledge. The steering group agreed that characterizing the rapid review process using the framing of “plan, do, and share” would make the methodology accessible and therefore adopted this description for the remainder of the study.

The survey was built in QuestionPro [21] and, following a consent and demographics section, contained four open-ended questions concerning planning, doing, and sharing the results of rapid reviews (see Table 2).

Table 2.

Questions included in the initial survey

| No. | Question |
|---|---|
| 1 | What questions or comments do you have about improving the process needed to plan a rapid review successfully? |
| 2 | What questions or comments do you have about improving how rapid reviews are carried out? |
| 3 | What questions or comments do you have about how the findings of rapid reviews are communicated to people? |
| 4 | Do you have any other questions or comments on how we plan, do and share the results of rapid reviews? |

Demographic questions were included to monitor the geographic spread and stakeholder groups of participants, informing the focus of additional recruitment where needed. We also asked participants whether they were interested in being contacted about subsequent stages of the Priority III study. The survey was piloted with the steering group.

The survey ran for 4 weeks, and participants were recruited through contact with our PSP partners in addition to a social media campaign targeted at achieving participation from a geographical spread of participants. No formal sample size was determined.

2.5. Initial survey: data processing and verifying uncertainties

Comments and questions submitted in the initial survey were reviewed and rewritten as research questions. Three reviewers completed this process. C.B. drafted the initial summary questions, which C.H. then reviewed and revised before D.D. conducted a final review. Longer responses were broken down into multiple questions where necessary. A question was regarded as out of scope if it did not relate to planning, doing, and sharing the results of rapid reviews within the healthcare setting. Once every submission had been reviewed and initial research questions formulated, a second review was completed by two reviewers (C.B. and D.D.) to merge questions that focused on common themes into “summary questions” and to organize the summary questions into categories. In line with JLA guidance, these summary questions are generally broad overarching questions from which researchers are invited to identify and answer more specific questions.

Steering group members then reviewed the summary questions in pairs to ensure they were a true reflection of initial submissions and were worded clearly. Twelve members of the steering group volunteered their time to take part in this review. Each pair included individuals with contrasting experiences in rapid reviews to accommodate how different audiences might interpret each question. The questions for review were divided equally between the pairs. Questions were then amended based on the recommendations received.

Each question was checked to ensure it was a true uncertainty and not already fully answered by existing research. The steering group considered a question unanswered if a synthesis gap was apparent following a search of all relevant systematic reviews published in the previous 3 years. The 3-year timeframe was chosen in line with the JLA recommendation that an up-to-date systematic review is <3 years old [10]. A review was judged to be systematic when explicit methods were used to search, select, critically appraise, and synthesize individual studies. The PubMed bibliographic database was searched for systematic reviews published from 2018 to the time of searching (2021) using a search strategy developed specifically for Priority III by an experienced information specialist on the steering group (A.B.). Where there was evidence that an identified systematic review had entirely answered a question, the quality of that systematic review was evaluated. Suggested areas for future research, as previously generated by the Cochrane Rapid Review Methods Group, were also consulted.

Once a question was verified as unanswered, its wording was reviewed for clarity by an experienced health journalist before inclusion in the interim survey.

2.6. Interim survey: development of survey and interim priority setting

Having been verified as unanswered, 75 questions from the initial survey were deemed eligible for inclusion in the interim survey. The interim survey asked participants to identify the summary questions (arising from the initial survey) that they considered most important for future research on how we plan, do, and share the results of rapid reviews. The steering group discussed the format of the interim survey to identify a format that would minimize the time burden on participants while presenting the most reliable results. Following discussion, the steering group decided that, rather than carrying all 75 questions forward, it would be better for fewer questions to be included.

The number of questions to take forward to the interim survey required balancing the burden on participants with the importance of maintaining a question pool that remained true to participants’ views from the original stakeholder groups. Following extensive discussion and a combination of adapting the PRioRiTy I [13] inclusion criteria pro-rata, further merging of some of the 75 questions and an online survey conducted with the steering group to determine and agree on questions for inclusion, a total of 40 questions were included in the interim survey.

It was decided that participants would be asked to choose up to 10 of the 40 questions presented that they considered the most important for future research to answer, and to rank those questions in order of priority. The survey was built in QuestionPro [21]. Different formats for choosing and ranking questions were piloted for ease of use with respondents outside the steering group, and their feedback informed the final format. Before the consent page of the survey, participants were advised that the highest rated questions from the survey would be brought to the consensus meeting.

Demographic questions were included in the survey to monitor the geographic spread and stakeholder groups of participants and inform the need for additional recruitment efforts. We also asked participants if they were interested in being contacted about the final stage of the Priority III study, the prioritization workshop. The finalized interim survey was piloted with the steering group before dissemination.

The survey ran for 4 weeks. Participants were recruited through contact with our PSP partners and a social media campaign, again targeted at a wide demographic and geographical spread of participants. All those who consented to future contact were emailed a link to the interim survey. No formal sample size was determined.

2.7. Interim survey: data processing and ranking survey items

All responses to the interim survey were itemized by stakeholder group. This ensured that stakeholder groups with fewer participants (e.g., funders) were given equal weighting to stakeholder groups with high response numbers (e.g., researchers or reviewers). Following the JLA approach of using ranked weighted scores across stakeholder groups, a reverse scoring system was applied, and summed scores were calculated separately for each stakeholder group. For each stakeholder group, the highest-ranked question was then allocated a maximum score of 40, the next-highest a score of 39, and so on, with the lowest-ranked question receiving a score of 1. Questions with the same total were ranked jointly. To obtain an overall ranking, the scores for each question from each stakeholder group were added together.

To preserve the diversity of responses by stakeholder group, the steering group considered both the overall rankings and each stakeholder group’s top scores when determining which questions to bring forward to the workshop for prioritization, acknowledging that, although some questions were rated highly by all groups, others were rated highly by one group alone.
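The scoring described in this section can be sketched in Python. This is a minimal illustration under stated assumptions, not the tooling actually used by the JLA or the steering group. We assume that a question placed at position r in a participant's top 10 earns 11 - r points (the text says only that a reverse scoring system was applied), that tied totals within a group share the higher score, and that questions nobody in a group picked share that group's bottom score. The function names and the toy data are hypothetical.

```python
from collections import defaultdict

N_QUESTIONS = 40  # questions shown in the interim survey
MAX_PICKS = 10    # each participant chose and ranked up to 10 questions


def reverse_score(position: int) -> int:
    """Assumed reverse score: position 1 earns 10 points, position 10 earns 1."""
    return MAX_PICKS + 1 - position


def group_scores(responses: list[list[int]]) -> dict[int, int]:
    """Turn one stakeholder group's rankings into 40..1 scores per question."""
    totals: dict[int, int] = defaultdict(int)
    for ranking in responses:  # question ids, most important first
        for position, qid in enumerate(ranking, start=1):
            totals[qid] += reverse_score(position)
    # Order all 40 questions by summed total; unpicked questions total 0.
    # sorted() is stable, so tied questions keep question-id order.
    ordered = sorted(range(1, N_QUESTIONS + 1), key=lambda q: totals[q], reverse=True)
    scores: dict[int, int] = {}
    for index, qid in enumerate(ordered):
        previous = ordered[index - 1] if index > 0 else None
        if previous is not None and totals[previous] == totals[qid]:
            scores[qid] = scores[previous]  # joint rank: tied totals share a score
        else:
            scores[qid] = N_QUESTIONS - index  # 40 for the top question, then 39, ...
    return scores


def overall_ranking(by_group: dict[str, list[list[int]]]) -> list[tuple[int, int]]:
    """Add each group's 40..1 scores so every group carries equal weight."""
    overall: dict[int, int] = defaultdict(int)
    for responses in by_group.values():
        for qid, score in group_scores(responses).items():
            overall[qid] += score
    return sorted(overall.items(), key=lambda item: item[1], reverse=True)


# Hypothetical toy data: one funder and two patient/public respondents.
example = {
    "funders": [[1, 2, 3]],
    "patients": [[2, 1], [2, 3]],
}
print(overall_ranking(example)[:3])  # [(1, 79), (2, 79), (3, 77)]
```

Rescaling each group's totals onto the same 40..1 range before summing is what lets a single funder's ranking count as much as many patient or researcher responses; summing raw point totals across all participants would instead let the largest group dominate.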

2.8. Final prioritization workshop

The final prioritization meeting took place online on 27 and 28 May 2021 to determine the top 10 priorities for future research to address how we plan, do, and share the results of rapid reviews. The workshop was held online because of the COVID-19 pandemic and the resulting restrictions on travel. To facilitate a wide geographical spread, it was held over two half-days to accommodate different time zones. The workshop was facilitated both on-screen and off-screen by the JLA and combined whole-group sessions, used to share general information and to “check in” with the group, with small group discussion sessions to rank the questions. There were two small group discussions on day 1 and one small group discussion on day 2. In the first small group discussion, each participant was asked to share the three questions they felt were most important and the three they felt were least important for future research on rapid reviews to answer; the purpose of this discussion was to highlight similarities or differences between individual rankings. In the remaining two small group discussions, participants ranked the questions in order of priority, taking into account the arguments for prioritization made by each participant. Membership of the discussion groups remained unchanged throughout day 1, and the groups were refreshed for day 2. In the final small group discussion on day 2, each small group was asked to prioritize all of the questions. The JLA team then combined the scores from each small group, and all participants were brought together in one final group to discuss the final results.

Prior JLA experience suggested that 24 participants would be optimal for online workshops. Recruitment for the workshop was multifaceted and aimed at a broad geographical and stakeholder spread. A substantial number of interim survey participants indicated possible interest in attending the consensus workshop. Once these data had been collected from the interim survey, further information on the workshop and an expression of interest form were sent to each of these individuals, who were asked to confirm their interest in attending. Recruitment also targeted authors of prominent research papers relevant to the topic and international groups with members or contacts who might contribute to the workshop discussion, including the World Health Organization, the Cochrane Consumers Network, and the Africa Centre for Evidence. Members of the steering group could volunteer to attend the workshop as participants and as a source of information, if needed, to clarify any points raised within the small groups about the processes adopted by Priority III.

Following the workshop, the JLA sent participants a survey to gather feedback on the workshop’s format and on the top 10 research priorities that had been identified.

3. Results

3.1. Initial survey: demographic information

In total, 224 participants consented to participate in, and completed, the initial online survey. Researchers and reviewers formed the largest group of respondents; the number and proportion of participants in each stakeholder group are presented in Table 3. Most participants stated that they lived in Canada; however, a geographical spread was achieved, with the remaining participants distributed across countries with different income levels, including Argentina, Belgium, the Republic of Ireland, Northern Ireland, Spain, Australia, Brazil, Malaysia, and the United States.

Table 3.

Initial survey respondent groups

| Stakeholder group | Number (%) |
|---|---|
| Patients and the public | 39 (18) |
| Researchers or reviewers | 155 (69) |
| Clinicians | 18 (8) |
| Policymakers | 7 (3) |
| Funders | 5 (2) |
| Total | 224 (100) |

3.2. Initial survey: collating themes and merging questions

In all, 841 questions or comments were submitted in the initial survey. Participants were given the opportunity to submit comments or questions to any or all of the stages of interest: planning, doing, or sharing of results. Table 4 presents the number of individual questions submitted for each stage. Many questions could be applied across more than one stage. Questions from each of the three stages were combined where appropriate and grouped into summary questions to be answered by research.

Table 4.

Number of individual questions submitted in response to questions posed in the initial survey

| No. | Question | No. of individual comments or questions received |
|---|---|---|
| 1 | What questions or comments do you have about improving the process needed to plan a rapid review successfully? | 260 |
| 2 | What questions or comments do you have about improving how rapid reviews are carried out? | 245 |
| 3 | What questions or comments do you have about how the findings of rapid reviews are communicated to people? | 233 |
| 4 | Do you have any other questions or comments on how we plan, do and share the results of rapid reviews? | 103 |
| Total | | 841 |

This grouping resulted in a total of 78 questions. Following feedback from steering group pairs, additional questions were combined, and some were made more granular. This resulted in 75 questions that were all reviewed to ensure they were not already answered by research. We identified that all were unanswered, and therefore all were eligible for inclusion in the interim survey. Consultation of the suggested areas for future research generated by the Cochrane Rapid Review Methods Group established that all areas suggested by the group were included within the 75 summary questions.

Following extensive discussion, a combination of adapting the PRioRiTy I [13] inclusion criteria pro-rata, further merging of some of the 75 questions, and an online survey conducted with the steering group, the group decided to carry forward 40 of the 75 questions to the interim survey.

3.3. Interim survey: demographic information

In total, 240 participants completed the interim survey by choosing and ranking what they considered the most important questions to be addressed by future research. Researchers and reviewers again formed the largest group of respondents; the proportion of participants from each stakeholder group is presented in Table 5. Although most participants stated that they lived in the Republic of Ireland, a geographical spread was again achieved in the interim survey. The remaining participants lived in countries including England, Canada, Italy, Argentina, Uganda, the United States, India, Switzerland, and Zimbabwe.

Table 5.

Interim survey respondent groups

| Stakeholder group | Number | Percentage (%) |
|---|---|---|
| Patients and the public | 46 | 19 |
| Researchers or reviewers | 161 | 67 |
| Clinicians | 22 | 9 |
| Policymakers | 7 | 3 |
| Funders | 4 | 2 |
| Total | 240 | 100 |

3.4. Interim survey ranking

Based on their experience of online prioritization workshops, the JLA suggested a maximum of 18 questions for discussion at the virtual workshop. Following discussion, the steering group voted to bring forward to the workshop any question ranked in the top 6 by any of the stakeholder groups in the interim survey. Based on this decision, 17 questions were brought to the workshop for prioritization.
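The selection rule above amounts to taking the union of each stakeholder group's top 6 questions, so a question placed highly by a single group still reaches the workshop. The following is a minimal sketch, assuming each group's ranking is available as an ordered list of question ids with ties already resolved. The function name, the check against the JLA-suggested maximum of 18, and the example rankings are all hypothetical.

```python
def select_for_workshop(
    group_rankings: dict[str, list[int]], top_n: int = 6, cap: int = 18
) -> list[int]:
    """Union of every group's top-n questions, kept in first-seen order."""
    selected: list[int] = []
    for ranking in group_rankings.values():
        for qid in ranking[:top_n]:
            if qid not in selected:  # a question in several groups' top 6 counts once
                selected.append(qid)
    # Hypothetical sanity check against the suggested workshop maximum.
    if len(selected) > cap:
        raise ValueError(f"{len(selected)} questions exceed the suggested cap of {cap}")
    return selected


# Hypothetical group rankings (question ids, highest-ranked first).
rankings = {
    "patients": [1, 2, 3, 4, 5, 6, 7],
    "researchers": [2, 3, 8, 9, 10, 11, 1],
    "clinicians": [12, 1, 2, 13, 14, 15],
}
shortlist = select_for_workshop(rankings)
print(len(shortlist), shortlist)  # 14 [1, 2, 3, 4, 5, 6, 8, 9, 10, 11, 12, 13, 14, 15]
```

With five stakeholder groups the shortlist can hold at most 30 questions, and overlap between groups shrinks it, which is consistent with a rule of this kind yielding 17 questions under the suggested maximum.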

3.5. Final prioritization workshop

The final prioritization workshop comprised 21 participants: 7 patients or members of the public, 7 researchers/reviewers, 4 clinicians, 1 funder, and 2 policymakers. Four of the workshop participants were members of the steering group. A geographical spread of participants was achieved, with participants attending from 11 different countries: Cameroon, Syria, the Republic of Ireland, England, Canada, the United States, Kenya, Brazil, Spain, Northern Ireland, and South Africa. The JLA team facilitating the workshop consisted of five people: one chair, three facilitators for the small groups, and a technical support person. There were three observers from the steering group. The small group discussions on day 1 of the workshop focused on discussing each question individually. On the second day, the small group discussion ranked each of the 17 questions by priority. The small groups achieved almost complete agreement on the top 10 questions based on the combined scores (Table 6). Questions ranked 11-17 are presented in Supplementary Material 1. This list required no further edits following feedback from the workshop participant survey, distributed by the JLA after the workshop.

Table 6.

Top 10 questions prioritized

| Priority rank | Question |
|---|---|
| 1 | What are the best approaches to identify people or groups who will use the results of a rapid review (e.g. stakeholders such as patients and the public, clinicians, policymakers), and how can they have meaningful (i.e., purposeful, relevant) involvement in planning and doing a rapid review, and in reporting and sharing the findings? |
| 2 | Do rapid reviews generate similar findings to full systematic reviews, and should the findings from all rapid reviews be considered at lower certainty compared to full systematic reviews? |
| 3 | How best can underserved stakeholder groups (e.g. ethnic minorities, socio-economically disadvantaged) and stakeholders from under represented countries (e.g. countries of different income levels) be identified and have meaningful (i.e., purposeful, relevant) involvement in planning and doing rapid reviews, and in sharing the results? |
| 4 | When deciding if a research question would benefit from being the focus of a rapid review, rather than a full systematic review, what criteria are helpful? |
| 5 | What simplified or omitted methods of a systematic review (e.g. single versus dual screening of citations for inclusion, restrictions on types of studies included) are appropriate to apply in a rapid review, and what are the effects of these simplifications or omissions (e.g. effect on the methods, conclusions, funding available)? |
| 6 | What are the best approaches to assess the quality of studies included in a rapid review, and if a quality assessment is either limited or excluded, how should the findings be interpreted? |
| 7 | How best can information on ongoing and completed rapid reviews be shared in a way that minimises research waste? |
| 8 | What are the best approaches for developing a search strategy for use in a rapid review, and what is the impact of applying restrictions (e.g. years of inclusion, language, phase of study)? |
| 9 | What are the best approaches for reporting the findings of a rapid review in a clear, succinct way without limiting information on the complete methods, findings and strength of the evidence? |
| 10 | What are the most useful processes to use when developing a rapid review research question? |

3.6. Availability of the research question list

The final list of 17 questions is available on a dedicated webpage (www.evidencesynthesisireland.ie/priority-iii/). The webpage was designed and developed based on the PRioRiTy I and PRioRiTy II website (www.priorityresearch.ie). Supplementary documentation has been made publicly available on the Open Science Framework (https://doi.org/10.17605/OSF.IO/R6VFX).

4. Discussion

Priority III identified the top 10 priorities for future research on how to plan, do, and share the results of rapid reviews. The priorities were determined by participants recruited internationally from the stakeholder groups most affected by the planning, doing, and sharing of the results of rapid reviews, that is, patients and the public, researchers, reviewers, clinicians, policymakers, and funders.

The conduct of Priority III using a modified JLA PSP approach was heavily influenced and assisted by the adaptations made in both PRioRiTy I [13] and PRioRiTy II [14]. The modified JLA PSP methodology gave the Priority III steering group guidance on working with what was often challenging material, and the scope to work with that material in a way that reflected the unique needs of the modified PSP and the diversity of the stakeholders involved. The research teams from PRioRiTy I [13] and PRioRiTy II [14] were an invaluable source of information on the challenges they encountered and the lessons they learned when conducting their respective PSPs. The processes and rationale adopted by the PRioRiTy I and PRioRiTy II studies informed many discussions held by the Priority III steering group throughout the PSP process, for example, on the criteria for including questions in the interim survey. The influence of PRioRiTy I and PRioRiTy II, together with the heterogeneous backgrounds of the Priority III steering group members and the diverse opinions that arose from that heterogeneity, helped Priority III to maintain equality, diversity, and inclusion in line with the JLA’s principle of equal involvement.

The value of the JLA’s principle of equal involvement is evident from the Priority III results. Two of the top three prioritized questions ask how people or groups who will use the results of a rapid review (e.g., stakeholders such as patients and the public, clinicians, policymakers), underserved stakeholder groups (e.g., ethnic minorities, socioeconomically disadvantaged), and stakeholders from underrepresented countries (e.g., countries of different income levels) can be identified and have meaningful involvement in planning and doing a rapid review, and in reporting and sharing the findings. These questions received equal weightings from people who identify with these stakeholder groups. This highlights both the need for these groups to be involved in rapid review methodology and their willingness to be involved.

Given that the topic of Priority III was rapid review methodology, it was clear from the outset that considerable effort would be needed to understand and incorporate the views of patients and the public. Five patient and public partners were members of the Priority III steering group, three of whom had previously served on either the PRioRiTy I or PRioRiTy II steering group. The five partners had varying knowledge and experience of rapid reviews. To support each partner's meaningful contribution to the study, the partners and the core Priority III team met before each steering group meeting. These “pre-meetings”, held separately from the main steering group meetings, provided a space for questions on all aspects of the PSP and rapid review methodology. Their agendas mirrored the main steering group agendas, with the addition of any topic the partners wished to discuss. The five partners contributed significantly to the conduct of Priority III, and an item was added to each steering group meeting agenda in which the partners provided an update on their most recent activities and influence.

A concrete example of this influence came when the patient and public partners identified a need for definitions of rapid reviews, evidence synthesis, systematic reviews, and healthcare, and recognized the value of an animation explaining evidence synthesis and rapid reviews and introducing the Priority III study and its purpose. Although developing these resources affected the study timeline, they were invaluable in achieving meaningful involvement, particularly from patients and the public, who may have used the results of a rapid review without any prior understanding of rapid review methodology. These resources gave greater insight into both the background of rapid reviews and the PSP process itself. Given the extent of the patient and public partners' influence on the conduct of Priority III, a separate evaluation study was undertaken in parallel to explore that influence from the perspectives of both the partners themselves and the researchers. We will report the results of that study separately.

Although the conduct of Priority III benefited greatly from the lessons learned in the PRioRiTy I and PRioRiTy II studies, new challenges inevitably arose. As expected, the COVID-19 pandemic significantly affected the conduct of Priority III. In response to the pandemic, and unlike previous PSPs, Priority III was conducted online. Although initially challenging, the online format benefited the study considerably by facilitating widespread international engagement, which strengthens the international significance and relevance of its findings. A targeted recruitment campaign achieved a geographical spread of participants in both online surveys. The effect on international engagement is most evident in the final online prioritization workshop, which brought together 21 participants from 11 countries of different income levels; this would not have been possible with a traditional in-person workshop. Furthermore, feedback from our PSP partners and numerous participants suggested that the ongoing pandemic also increased interest in planning, doing, and sharing the results of rapid reviews among members of each stakeholder group involved. Improved recruitment to each stage of the study was likely due, at least in part, to the increased commissioning, conduct, and use of rapid reviews to inform decision-making during the COVID-19 pandemic.

5. Conclusion

Priority III engaged patients and the public, researchers, reviewers, clinicians, policymakers, and funders to identify and prioritize the top 10 unanswered research questions about rapid review methodology. In line with previous PSPs, these are generally broad, overarching questions, within which researchers are asked to identify more specific questions to answer. As the final priorities make clear, considerable uncertainty surrounds almost every aspect of how we plan, do, and share the results of rapid reviews. The results of Priority III provide an essential agenda for future research, and we encourage researchers to collaborate in answering these priorities. We also ask funders to incorporate these priorities into their research agendas and strategies to directly improve how rapid reviews are planned, done, and shared.

Acknowledgments

The authors would like to thank every participant in the Priority III initial survey, interim survey, and workshop. The authors are deeply indebted to them for their input. Thank you to all the partners and collaborators (in particular, Dr Jeremy Grimshaw and COVID-END) for help with recruitment to the initial survey, interim survey, and workshop. Thank you to Professor Ruth Stewart (Africa Centre for Evidence & Africa Evidence Network) for the time and effort she spent helping the authors recruit for the workshop. The authors would like to thank Dr Claire O Connell, the health journalist who reviewed the questions before the interim survey to clarify their wording. Her input was very much appreciated. Thank you to steering group member Dr Andrew Booth for the time and effort spent suggesting methodologies for identifying answered, and hence unanswered, questions and for developing a specific search strategy for use within this study. Thank you to the James Lind Alliance team for their support throughout the study and, in particular, for their exceptional facilitation and management of the final prioritisation workshop.

Footnotes

Funding: Evidence Synthesis Ireland conducted the Priority III PSP in collaboration with the JLA. Evidence Synthesis Ireland is an all-Ireland initiative funded by the Health Research Board Ireland and the Health and Social Care, Research and Development Division of the Public Health Agency in Northern Ireland.

Ethical Approval: Ethical approval was granted by the National University of Ireland Galway Research Ethics Committee (reference: 20-Apr-02).

Conflict of interest: Nikita Burke is paid in full from Evidence Synthesis Ireland, which is a capacity-building initiative funded by the Health Research Board (Ireland) and the HSC R&D Division of the Public Health Agency (Northern Ireland). Claire Beecher is paid in part from Evidence Synthesis Ireland, which is a capacity-building initiative funded by the Health Research Board (Ireland) and the HSC R&D Division of the Public Health Agency (Northern Ireland). Declan Devane is paid in part from Evidence Synthesis Ireland, which is a capacity-building initiative funded by the Health Research Board (Ireland) and the HSC R&D Division of the Public Health Agency (Northern Ireland). Andrea C. Tricco is paid in part by a Tier 2 Canada Research Chair in Knowledge Synthesis.

Grant information: Health Research Board Ireland CBES-2018-001.

Author Contributions:

- Conceptualization: CB, ET, BM, CW, DCS, AW, JE, MS, TT, BB, TM, MK, BL, CG, PH, CH, AB, CG, JT, ACT, NNB, CK, DD. Ideas; formulation or evolution of overarching research goals and aims.
- Methodology: CB, ET, BM, CW, DCS, AW, JE, MS, TT, BB, TM, MK, BL, CG, PH, CH, AB, CG, JT, ACT, NNB, CK, DD. Development or design of methodology; creation of models.
- Software: CB, DD. Programming, software development; designing computer programs; implementation of the computer code and supporting algorithms; testing of existing code components.
- Validation: CB, ET, DCS, AW, JE, MS, TT, TM, PH, AB, CG, JT, ACT, NNB, CK, DD. Verification, whether as a part of the activity or separate, of the overall replication/reproducibility of results/experiments and other research outputs.
- Formal analysis: CB, ET, DCS, AW, JE, MS, TT, TM, PH, AB, CG, JT, ACT, NNB, CK, DD. Application of statistical, mathematical, computational, or other formal techniques to analyze or synthesize study data.
- Investigation: CB, ET, BM, CW, DCS, AW, JE, MS, TT, BB, TM, MK, BL, CG, PH, CH, AB, CG, JT, ACT, NNB, CK, DD. Conducting a research and investigation process, specifically performing the experiments, or data/evidence collection.
- Resources: CB, DD. Provision of study materials, reagents, materials, patients, laboratory samples, animals, instrumentation, computing resources, or other analysis tools.
- Data Curation: CB, DD. Management activities to annotate (produce metadata), scrub data and maintain research data (including software code, where it is necessary for interpreting the data itself) for initial use and later reuse.
- Writing - Original Draft: CB, DD. Preparation, creation and/or presentation of the published work, specifically writing the initial draft (including substantive translation).
- Writing - Review & Editing: CB, ET, BM, CW, DCS, AW, JE, MS, TT, BB, TM, MK, BL, CG, PH, CH, AB, CG, JT, ACT, NNB, CK, DD. Preparation, creation and/or presentation of the published work by those from the original research group, specifically critical review, commentary or revision, including pre- or post-publication stages.
- Visualization: CB, DD. Preparation, creation and/or presentation of the published work, specifically visualization/data presentation.
- Supervision: DD. Oversight and leadership responsibility for the research activity planning and execution, including mentorship external to the core team.
- Project administration: CB, DD. Management and coordination responsibility for the research activity planning and execution.
- Funding acquisition: DD, ET, NNB. Acquisition of the financial support for the project leading to this publication.

Supplementary data to this article can be found online at https://doi.org/10.1016/j.jclinepi.2022.08.002.

Appendix A. Supplementary Data

References

1. Cochrane.org. Evidence Synthesis - what is it and why do we need it? 2022. Available at: https://www.cochrane.org/news/evidence-synthesis-what-it-and-why-do-we-need-it.

2. Haby M.M., Chapman E., Clark R., Barreto J., Reveiz L., Lavis J.N. What are the best methodologies for rapid reviews of the research evidence for evidence-informed decision making in health policy and practice: a rapid review. Health Res Policy Syst. 2016;14(1):83. doi: 10.1186/s12961-016-0155-7.

3. Hamel C., Michaud A., Thuku M., Skidmore B., Stevens A., Nussbaumer-Streit B., et al. Defining Rapid Reviews: a systematic scoping review and thematic analysis of definitions and defining characteristics of rapid reviews. J Clin Epidemiol. 2021;129:74–85. doi: 10.1016/j.jclinepi.2020.09.041.

4. Best L., Stevens A.J., Colin-Jones D. Rapid and responsive health technology assessment: the development and evaluation process in the South and West region of England. 2nd ed. J Clin Effect. 1997;2:51–56.

5. Hunter J., Arentz S., Goldenberg J., Yang G., Beardsley J., Lee M.S., et al. Choose your shortcuts wisely: COVID-19 rapid reviews of traditional, complementary and integrative medicine. Integr Med Res. 2020;9(3):100484. doi: 10.1016/j.imr.2020.100484.

6. Garritty C., Gartlehner G., Nussbaumer-Streit B., King V.J., Hamel C., Kamel C., et al. Cochrane Rapid Reviews Methods Group offers evidence-informed guidance to conduct rapid reviews. J Clin Epidemiol. 2021;130:13–22. doi: 10.1016/j.jclinepi.2020.10.007.

7. Hamel C., Michaud A., Thuku M., Affengruber L., Skidmore B., Nussbaumer-Streit B., et al. Few evaluative studies exist examining rapid review methodology across stages of conduct: a systematic scoping review. J Clin Epidemiol. 2020;126:131–140. doi: 10.1016/j.jclinepi.2020.06.027.

8. Tricco A.C., Antony J., Zarin W., Strifler L., Ghassemi M., Ivory J., et al. A scoping review of rapid review methods. BMC Med. 2015;13(1):224. doi: 10.1186/s12916-015-0465-6.

9. Cochrane Rapid Reviews Methods Group. Welcome to the Cochrane Rapid Reviews Methods Group (RRMG) website. 2022. Available at: https://methods.cochrane.org/rapidreviews/welcome.

10. James Lind Alliance. The James Lind Alliance Guidebook. Southampton, United Kingdom: James Lind Alliance, University of Southampton; 2020.

11. Chalmers I., Bracken M.B., Djulbegovic B., Garattini S., Grant J., Gülmezoglu A.M., et al. How to increase value and reduce waste when research priorities are set. Lancet. 2014;383:156–165. doi: 10.1016/S0140-6736(13)62229-1.

12. World Health Organization, Alliance for Health Policy and Systems Research. Rapid reviews to strengthen health policy and systems: a practical guide. Tricco A.C., Langlois E.V., Straus S., editors. Geneva: World Health Organization; 2017.

13. Healy P., Galvin S., Williamson P.R., Treweek S., Whiting C., Maeso B., et al. Identifying trial recruitment uncertainties using a James Lind alliance priority setting partnership - the PRioRiTy (prioritising recruitment in randomised trials) study. Trials. 2018;19(1):147. doi: 10.1186/s13063-018-2544-4.

14. Brunsdon D., Biesty L., Brocklehurst P., Brueton V., Devane D., Elliott J., et al. What are the most important unanswered research questions in trial retention? A James Lind alliance priority setting partnership: the PRioRiTy II (prioritising retention in randomised trials) study. Trials. 2019;20(1):593. doi: 10.1186/s13063-019-3687-7.

15. Beecher C., Toomey E., Maeso B., Whiting C., Stewart D.C., Worrall A., et al. What are the most important unanswered research questions on rapid review methodology? A James Lind Alliance research methodology Priority Setting Partnership: the Priority III study protocol. HRB Open Res. 2021;4:80. doi: 10.12688/hrbopenres.13321.1.

16. Tong A., Synnot A., Crowe S., Hill S., Matus A., Scholes-Robertson N., et al. Reporting guideline for priority setting of health research (REPRISE). BMC Med Res Methodol. 2019;19:243. doi: 10.1186/s12874-019-0889-3.

17. Garritty C., Gartlehner G., Kamel C., King V.J., Nussbaumer-Streit B., Stevens A. Cochrane Rapid Reviews. Interim Guidance from the Cochrane Rapid Reviews Methods Group. Ottawa Methods Centre, the Ottawa Hospital Research Institute (OHRI) and Cochrane Austria; 2020.

18. Cochrane glossary. Systematic review. 2021. Available at: https://community.cochrane.org/glossary#letter-S.

19. Evidence Synthesis International. About evidence synthesis. 2021. Available at: https://evidencesynthesis.org/.

20. NHS Continuing Healthcare and NHS-funded Nursing Care team. National Framework for NHS continuing healthcare & NHS-funded nursing care. In: Department of Health and Social Care. London, United Kingdom: NHS Continuing Healthcare and NHS-funded Nursing Care team; 2018.

21. QuestionPro Inc. QuestionPro survey software. Dallas, United States: QuestionPro Inc.; 2021.
