Abstract

The existing slime mould algorithm mimics the oscillation phase of slime mould behavior but converges slowly in the local search space because of its weak exploitation phase. This research work seeks the best solution of an objective function by combining the slime mould algorithm with the simulated annealing algorithm for better variation of parameters; the result is named the hybridized slime mould algorithm–simulated annealing algorithm (hSMA-SA). The simulated annealing algorithm improves and accelerates the slime mould technique and helps it escape from local optima. To validate the worth and usefulness of the introduced strategy, nonconvex and nonlinear standard benchmarks and typical interdisciplinary engineering design problems were analyzed. The proposed technique is evaluated on six unimodal, five multimodal, and five fixed-dimension benchmark functions, as well as 11 kinds of interdisciplinary engineering design problems. The technique’s outcomes were compared with the results of other available optimization methods, and the experimental results show that the suggested approach outperforms the other optimization techniques.

Keywords: CEC-2005, Hybrid search algorithms, Metaheuristics search, Engineering optimization

Introduction

Metaheuristic algorithms are now widely used in numerous applied fields because, across different optimization problems, they perform better and need less computing time than deterministic algorithms [1]. Simple concepts suffice to achieve good results, and the algorithms are easy to transfer to different disciplines. In addition, the lack of randomness in the later stages of some deterministic algorithms makes them prone to falling into local optima, whereas the random parameters in metaheuristics let the algorithms explore the whole search space for optima and therefore escape local optima efficiently. According to [2], stochastic algorithms are less efficient than gradient descent algorithms, which exploit gradient information, and gradient descent algorithms converge faster than metaheuristic methods. On the other hand, a metaheuristic algorithm typically begins the optimization procedure from randomly generated solutions and does not require gradient information, which makes it well suited to practical problems in which the derivative data are unknown. In reality, the solution space of many problems is often unknown or infinite, so it may be impossible to find optimum solutions by exhaustively searching the solution space under existing conditions. Metaheuristic algorithms find a near-optimum solution by randomly sampling a huge solution space in a guided way, so as to discover or produce better solutions to the optimization problem under limited conditions or computational capacity [3].

In the context of the above discussion, this research work introduces a hybrid variant of the slime mould optimization algorithm that incorporates the simulated annealing technique. The suggested hybrid population-based metaheuristic search technique was examined on standard benchmark problems: unimodal, multimodal, and fixed-dimension functions. In addition, the suggested optimizer’s performance was evaluated on engineering design and optimization problems for a more thorough investigation.

Prior to this article, a few researchers introduced hybrids and variants of the same algorithm; however, the underlying ideas and handling schemes of those algorithms differ considerably from the one suggested here. In [4], a hybrid optimizer combined adaptive β hill climbing with the slime mould algorithm; there, the slime mould algorithm is additionally strengthened with Brownian motion and tournament selection to improve exploration, which yields better-quality outcomes in the exploitation phase. Zheng-Ming Gao et al. [5] introduced the grey wolf optimizer–slime mould algorithm (GWO-SMA) to reduce the influence of uncertainty as far as possible; it suits a few benchmark functions but is not suggested for engineering design problems. Juan Zhao et al. [6] proposed an improved slime mould algorithm with Levy flights and observed that the improved SMA performed well with a uniformly distributed factor but became stuck in local minima with a Gauss-distributed factor. Juan et al. [7] proposed the chaotic SMA–Chebyshev map and found it to be a better, more stable, and faster choice for real engineering problems. The Levy flight–slime mould algorithm (LF-SMA) [8] is inspired by the behavior of slime mould and is further combined with the Levy distribution; it excelled in the exploration phase. The improved slime mould algorithm (ISMA) extends the traditional SMA in two respects: the exploration phase is aided by a modified opposition-based learning technique, and the exploitation phase by the Nelder–Mead simplex search method. It was used to tune the parameters of a fractional-order PID (FOPID) controller that controls the speed of a DC motor, and of a PID plus second-order derivative (PIDD2) controller that keeps an automatic voltage regulator (AVR) at its terminal voltage level [9]. The combination of slime mould and Harris hawks optimization (HHO-SMA) implemented in [10] proved a better technique for improving slow convergence speed. The improved version of slime mould with cosine controlling parameters in [11] could eliminate errors and gave better outcomes in engineering design problems.

Other work has drawn on slime mould behavior in different ways. The method in [12] solves a single-objective optimization problem by replicating the five life-cycle stages of the amoeba Dictyostelium discoideum (vegetative, aggregative, mound, slug, and dispersal), using ε-ANN to build the initial stage. To assess Pareto-optimal solutions, the authors of [13] suggested the multi-objective slime mould algorithm (MOSMA), which achieves better convergence in association with the elitist non-dominated sorting method. Considering two forms of slime mould network, [14] introduced a technique for building a wireless sensor network that correlates two distinct local routing protocols. The Physarum network was united with the ant colony model to enhance the technique’s ability to escape local optima and so treat the travelling salesman problem efficiently [15]. Motivated by the diffusion of slime mould, Schmickland [16] suggested a bio-inspired navigation method designed for swarm robotics. Low-cost, fault-tolerant graphs based on the foraging process of slime mould were generated in [17]. As this discussion shows, most slime-mould-inspired methods have been applied in graph theory and in network generation, so the nature of this organism has mainly influenced scholars in the field of graph optimization [18]. Monismith et al. [12] did simulate the life cycle of the amoeba Dictyostelium discoideum and used the resulting algorithm for optimization, but with little experimental evidence.

The hybrid combination hSMA-SA introduced in this work follows a distinct pattern: it primarily mimics the foraging behavior and morphological changes of slime mould. The adaptive weights in SMA simulate the positive and negative feedback produced throughout foraging, which creates three different morphotypes. The simulated annealing algorithm is incorporated to strengthen the exploitation phase of the classical SMA, which improves the results of the suggested hSMA-SA technique and makes it perform better than existing techniques. Overall, this approach is easier to use than prior population-based algorithms and requires fewer operators and less computational effort. The remainder of the article is organized as follows. The next sections give a literature review and the background of the suggested work. The third section discusses the concepts of the conventional slime mould algorithm (SMA), the simulated annealing algorithm (SA), and the suggested hybrid hSMA-SA technique. The fourth section describes the standard benchmark functions. The fifth section presents the findings and compares them with those of other methods. The sixth section reports tests on 11 engineering-based optimization design problems, and the last section gives the paper’s conclusion, limitations, and future scope.

Literature review

Metaheuristic algorithms are of two types: single-solution-based and population-based. As the names suggest, the former maintains only one solution throughout the optimization process, whereas the latter develops a set of solutions in every iteration. In population-based techniques, an optimal or suboptimal solution may be found that lies at or near the optimum. These population-based metaheuristics frequently emulate natural phenomena. They usually start the optimization procedure by generating a set of individuals (the population), in which every individual represents a candidate optimum solution. The population then evolves iteratively, using stochastic operators to replace the present population with a newly generated one. This procedure continues until the termination criterion is satisfied.
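The generic population-based loop described above can be sketched as follows. This is a minimal illustration only: the update rule (a random pull toward the best-so-far individual plus Gaussian noise), the `sphere` objective, and all function and parameter names are hypothetical choices made for the sketch and are not taken from any of the cited algorithms.

```python
import random


def sphere(x):
    """Unimodal test objective: sum of squares, minimum 0 at the origin."""
    return sum(v * v for v in x)


def population_search(objective, dim, lower, upper,
                      pop_size=20, max_iter=100, seed=0):
    """Generic population-based minimization loop (illustrative sketch)."""
    rng = random.Random(seed)
    # Each individual is one candidate solution drawn uniformly from the box.
    population = [[rng.uniform(lower, upper) for _ in range(dim)]
                  for _ in range(pop_size)]
    best = min(population, key=objective)
    best_fit = objective(best)
    history = [best_fit]  # best-so-far fitness after each generation

    for _ in range(max_iter):
        new_population = []
        for individual in population:
            # Hypothetical stochastic operator: random pull toward the
            # best-so-far solution plus Gaussian noise, clipped to the bounds.
            candidate = [
                min(upper, max(lower,
                               x + rng.random() * (b - x) + rng.gauss(0.0, 0.1)))
                for x, b in zip(individual, best)
            ]
            new_population.append(candidate)
        # The new population replaces the present one.
        population = new_population
        challenger = min(population, key=objective)
        challenger_fit = objective(challenger)
        if challenger_fit < best_fit:
            best, best_fit = challenger, challenger_fit
        history.append(best_fit)

    # Termination criterion here is simply the iteration budget.
    return best, best_fit, history
```

Calling `population_search(sphere, dim=3, lower=-5.0, upper=5.0)` returns the best individual, its fitness, and a non-increasing best-so-far history. A fixed iteration budget is used as the termination criterion for simplicity; a tolerance on the improvement would serve equally well.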

Metaheuristic algorithms are typically inspired by real-world phenomena and seek improved solutions to optimization problems by imitating physical laws or biological behavior. Physics-based techniques are those that apply mathematical rules or physical principles; they include the sine cosine algorithm (SCA) [19], gravitational search algorithm (GSA) [20], central force optimization (CFO) [21, 22], charged system search (CSS) [23], and multi-verse optimizer (MVO) [24]. The two major classes of nature-inspired metaheuristic algorithms are evolutionary techniques and swarm intelligence methods. The idea behind evolutionary algorithms comes from the process of biological evolution in nature; compared with conventional optimization algorithms, they are global optimization techniques with good robustness and applicability. The prevalent algorithms in the evolutionary group are genetic programming (GP) [25], evolutionary programming (EP) [26], and biogeography-based optimization (BBO) [27], which analyzes the geographical distribution of biological species and explains how it can be used to derive techniques appropriate for optimization. Differential evolution (DE) [28] belongs to the same family of evolutionary algorithms as genetic algorithms, evolution strategies, and evolutionary programming. Evolutionary programming (EP) and the genetic algorithm (GA) [29] are drawn from Darwinian theory, as is the evolution strategy (ES) [30]. The application of EP, ES, and swarm-intelligence techniques in scientific research and real-world problems is expanding rapidly [31].

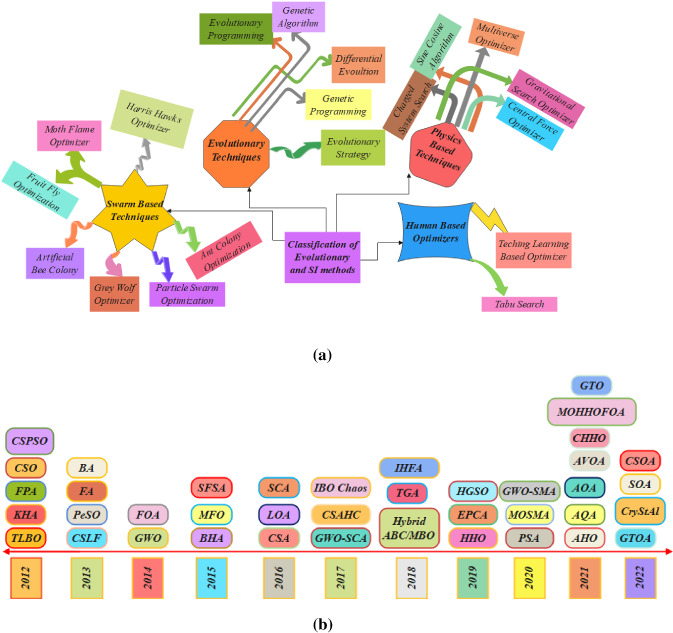

Swarm intelligence (SI) [32] covers collective or social intelligence that artificially simulates the decentralization of a biological cluster in nature or the collective behavior of self-organizing systems. In this group of algorithms, the idea originates from biological communities in nature that show cooperative behavior and intelligence in accomplishing a given task. Established and recent techniques in this set are particle swarm optimization (PSO) [33], moth flame optimization (MFO) [34], artificial bee colony (ABC) [35], Harris hawks optimizer (HHO) [36], fruit fly optimization algorithm (FFOA) [37], ant colony optimization (ACO) [38], and grey wolf optimization (GWO) [39]. Human-based techniques are those that imitate human activities; the inspiration comes from the way humans carry out an assigned task. Examples are teaching–learning-based optimization (TLBO) [40], which imitates the teaching–learning procedure in a classroom, and tabu search (TS) [41]. The categorization of evolutionary and SI techniques is depicted in Fig. 1a, and Fig. 1b displays the timeline of the other metaheuristic algorithms covered in this review. Table 1 showcases investigative works from the last decade (2012–2022) on finding solutions for numerical and engineering design problems.

Fig. 1.

a Categories of SI and evolutionary methods. b Timeline of metaheuristics. TLBO teaching–learning-based optimization, KH krill herd, FP flower pollination, CSO cuckoo search algorithm, CSPSO cuckoo search particle swarm optimization, CSLF cuckoo search–Levy flight, PeSO penguins search optimization, FA firefly algorithm, BA bat algorithm, GWO grey wolf optimizer, FOA forest optimization algorithm, BHA black hole algorithm, MFO moth flame optimizer, SFSA stochastic fractal search algorithm, CSA crow search algorithm, LOA lion optimization algorithm, SCA sine cosine algorithm, GWO-SCA hybrid grey wolf optimizer and sine cosine algorithm, CSAHC cuckoo search algorithm with hill climbing, IBO Chaos improved butterfly algorithm with chaos, Hybrid ABC/MBO artificial bee colony with monarch butterfly optimization, TGA tree growth algorithm, IHFA improved hybrid firefly algorithm, HHO Harris hawks optimizer, EPC emperor penguins colony, HGSO Henry gas solubility optimization, PSA photon search algorithm, MOSMA multi-objective slime mould algorithm, GWO-SMA hybrid grey wolf optimization–slime mould algorithm, AHO archerfish hunting optimizer, AQ Aquila optimizer, AOA arithmetic optimization algorithm, AVOA African vultures optimization algorithm, CHHO chaotic Harris hawks optimizer, MOHHOFOA multi-objective Harris hawks optimization fruit fly optimization algorithm, GTO gorilla troops optimization, GTOA modified group theory-based optimization algorithm, CryStAl crystal structure optimization, SOA seagull optimization algorithm, CSOA criminal search optimization algorithm

Table 1.

A look into population metaheuristics in a nutshell

| Technique and reference | Authors and year | Summary |
|---|---|---|
| Seagull optimization algorithm (SOA) [55] | Yanhui Che and Dengxu He 2022 | This paper proposed an enhanced seagull optimization algorithm to eliminate the defects of the traditional seagull optimizer. The technique is tested on 12 engineering optimization problems |
| Modified group theory-based optimization algorithms for numerical optimization (GTOA) [56] | Li et al. 2022 | This paper studied the applicability of the proposed GTOA to optimization problems by introducing two versions of GTOA, which use binary coding and integer coding. The method achieved a better convergence rate and average accuracy |
| Criminal search optimization algorithm (CSOA) [57] | Srivastava et al. 2022 | This paper introduced the criminal search optimization algorithm, which is based on the intelligence of policemen in catching a criminal. The performance of the technique was evaluated on standard benchmark functions (CEC-2005 and CEC-2020). Five test cases were run to compare the suggested algorithm with other techniques, with good results |
| Crystal structure optimization approach to problem-solving in mechanical engineering design (CryStAl) [58] | Babak Talatahari et al. 2022 | The authors introduced a metaheuristic named the crystal structure algorithm to find solutions for engineering mechanics and design problems. The technique was also examined on 20 benchmark mathematical functions and obtained satisfactory outputs compared with other existing methods |
| African vultures optimization algorithm (AVOA) [59] | Abdollahzadeh et al. 2021 | The authors proposed the African vultures optimization algorithm, which imitates the foraging and navigation behavior of African vultures. The method’s performance is first tested on 36 benchmark functions, and its applicability is demonstrated by finding optimum solutions for 11 engineering design problems |
| Flow direction algorithm (FDA) [60] | Hojat Karami et al. 2021 | This paper proposed a physics-based algorithm named the flow direction algorithm, which imitates flow direction in a drainage basin. The method was tested on 13 classical mathematical benchmark functions, 10 new mathematical benchmark functions, and 5 engineering design problems. The results were better than those of other techniques |
| A new hybrid chaotic atom search optimization based on tree-seed algorithm and Levy flight for solving optimization problems [61] | Saeid et al. 2021 | The authors used a combination of metaheuristic algorithms to solve 7 special engineering problems. The convergence speed of atom search optimization (ASO) is enhanced by chaotic maps and the Levy flight random walk, and the tree-seed method is combined with ASO. These combinations of algorithms yield good results |
| A multi-objective optimization algorithm for feature selection problems (MOHHOFOA) [62] | Abdollahzadeh et al. 2021 | The authors used three different solutions for feature selection. First, the Harris hawks optimization algorithm is multiplied; second, the fruit fly optimization algorithm is multiplied; and in the third stage, these two algorithms are hybridized to find solutions for feature selection problems |
| Arithmetic optimization algorithm (AOA) [63] | Laith et al. 2021 | This paper proposed the arithmetic optimization algorithm and tested its performance on 29 benchmark functions and 7 real-world engineering design problems. The outcomes were better than those of other existing methods |
| Aquila optimizer (AO) [64] | Laith Abualigah et al. 2021 | The authors suggested a population-based optimization method named the Aquila optimizer to solve optimization problems. The technique was evaluated on 23 benchmark functions and 7 real-life engineering design problems. The outcomes are better than those of other methods |
| Artificial gorilla troops optimizer (GTO) [65] | Abdollahzadeh et al. 2021 | The authors proposed the artificial gorilla troops optimizer, which is designed to improve the exploration and exploitation phases. The algorithm is examined on 52 functions and 7 engineering design problems |
| Binary slime mould algorithm (BSMA) [66] | Abdel et al. 2021 | This paper proposed the slime mould algorithm with 4 binary versions for feature selection. All versions were tested on 28 datasets from the UCI repository |
| 1D SMA models (SMAs) [67] | Sonia Marfia et al. 2021 | This paper develops 1D SMA models to describe the response of SMAs in thermomechanical models |
| Slime mould algorithm (SMA) [68] | Davut Izci et al. 2021 | Tested on several benchmark functions. The capability of SMA optimization is enhanced using PID controllers |
| Hybrid improved slime mould algorithm with adaptive β hill climbing (BTβSMA) [4] | Kangjian Sun et al. 2021 | Tested on 16 benchmark functions; suggested to remedy the immature global and local search in standard SMA |
| Archerfish hunting optimizer (AHO) [69] | Farouq Zitouni et al. 2021 | Tested on 10 benchmark functions and 5 engineering problems. AHO replicates the jumping and shooting behavior of archerfish to find closer optimum values |
| WLSSA [70] | Hao Ren et al. 2021 | Tested on 23 benchmark functions. The combination of salp swarm and weight-adaptive Levy flight found finer optimum values |
| Multi-temperature simulated annealing algorithm (MTSA) [71] | Shih-Wei-Lin et al. 2021 | This algorithm is developed to address the scheduling problems that influence the design and optimization of automated systems |
| Self-adaptive salp swarm algorithm (SASSA) [72] | Rohith Salgotra et al. 2021 | The salp swarm algorithm is made self-adaptive through four modifications that improve local search |
| Simulated annealing with Gaussian mutation and distortion equalization algorithm (SAGMDE) [73] | Julian Lee et al. 2020 | Applied to different datasets, this combined algorithm yields better results in the exploratory phase than when only the simulated annealing algorithm is applied |
| Slime mould algorithm (SMA) [3] | Shimin Li et al. 2020 | Tested on 33 benchmark functions. It replicates the characteristics of slime mould. SMA is intended to give better exploration capability, and its application is extended to the kernel extreme learning machine |
| Hybrid grey wolf optimization–slime mould algorithm (GWO-SMA) [5] | Zheng-Ming Gao et al. 2020 | Three types of experiments showed that combining GWO and SMA did not give better results. The SMA equations were found to be unique, effective, and hard to improve |
| Chaotic SMA–Chebyshev map [7] | Juan Zhao et al. 2020 | Tested on standard benchmark functions; the results were better, and the technique performed faster and with stability |
| Improved slime mould algorithm with Levy flight [6] | Juan Zhao et al. 2020 | Worked to reduce the influence of randomness and found that SMA–Levy flight with uniformly distributed parameters gives better results |
| Modified slime mould algorithm via Levy flight [8] | Zhesen Cui et al. 2020 | Tested on 13 benchmark functions and 1 engineering design problem; the results obtained were better and steady |
| Hybridized Harris hawks optimization and slime mould algorithm (HHO-SMA) [10] | Juan Zhao et al. 2020 | The research focused on several updating disciplines, mostly for individuals in swarms |
| Improved slime mould algorithm with cosine controlling parameter [11] | Zheng-Ming Gao et al. 2020 | The research found that the controlling parameters are essential for the technique to perform well, but that not all parameters were acceptably helpful; hence, a more suitable method should be found |
| Multi-objective slime mould algorithm based on elitist non-dominated sorting (MOSMA) [13] | Manoharan Premkumar et al. 2020 | Tested on 41 cases, constrained and unconstrained, as well as on real-life engineering problems. The algorithm produced high-quality, effective solutions for tough multi-objective problems |
| PSA: a photon search algorithm [74] | Y. Liu and Li 2020 | Tested on 23 functions. The algorithm is inspired by the characteristics of photons in physics and has strong global search and convergence capabilities |
| Movable damped wave algorithm [75] | Rizk et al. 2019 | This paper proposed the movable damped wave algorithm, which was examined on 23 benchmark functions and 3 engineering design problems |
| Henry gas solubility optimization: a novel physics-based algorithm (HGSO) [76] | Hashim et al. 2019 | Tested on 47 benchmark functions. It is modeled on Henry’s law. HGSO aims to balance exploration and exploitation of the search space and to avoid local optima |
| Emperor penguins colony (EPC) [77] | Sasan et al. 2019 | This paper proposed a new metaheuristic algorithm named emperor penguins colony, which was tested on 10 benchmark functions |
| Harris hawks optimization (HHO) [78] | Heidari et al. 2019 | Tested on 29 benchmarks and 6 engineering problems. It is introduced to help with various optimization tasks. The strategy is inspired by the cooperative behavior and hunting patterns of Harris’ hawks in nature |
| Tree growth algorithm (TGA) [79] | Armin et al. 2018 | The authors introduced the tree growth algorithm, which is inspired by the competition among trees for light and food. It was examined on 30 benchmark functions and 5 engineering design problems |
| Hybrid artificial bee colony with monarch butterfly optimization [80] | Waheed et al. 2018 | This paper introduced a new algorithm named hybrid ABC/MBO (HAM), which was evaluated on 13 benchmark functions and gave better outcomes |
| An improved hybrid firefly algorithm for solving optimization problems (IHFA) [81] | Fazli Wahid et al. 2018 | This paper introduced a novel method called the GA-FA-PS algorithm, tested it on 3 benchmark functions, and showed that the results are better than those of the firefly algorithm and genetic algorithm |
| An improved butterfly optimization algorithm with chaos [82] | Sankalap Arora et al. 2017 | The authors improved butterfly optimization with chaos to raise its convergence speed and its ability to avoid local optima. The suggested chaotic BOAs are validated on 3 benchmark functions and 3 engineering design problems |
| Cuckoo search algorithm–hill climbing technique (CSAHC) [83] | Shehab et al. 2017 | The authors proposed a new cuckoo search algorithm hybridized with the hill climbing technique to solve optimization problems. It was examined on 13 benchmark functions and proved successful |
| Hybrid GWO-SCA [84] | Singh et al. 2017 | This paper proposed a hybrid of the grey wolf optimizer and the sine cosine technique and tested it on 22 functions, 5 bio-medical datasets, and 1 sine dataset problem |
| SCA: a sine cosine algorithm for solving optimization problems [85] | Seyedali Mirjalili 2016 | The author proposed SCA for solving optimization problems; its efficiency is validated on 19 benchmark functions |
| Lion optimization algorithm (LOA): a nature-inspired metaheuristic algorithm [86] | Maziar Yazdani et al. 2016 | This paper introduced the lion optimization algorithm, which was examined on 30 benchmark functions |
| Crow search algorithm (CSA) [87] | Alireza Askarzadeh 2016 | The author proposed the crow search algorithm and applied it to 6 engineering design problems. The outputs were more promising than those of existing methods |
| Stochastic fractal search: a powerful metaheuristic algorithm (SFS) [88] | Salimi 2015 | Unimodal, multimodal, and fixed-dimension functions and engineering problems were all tested |
| Moth flame optimization algorithm: a novel nature-inspired heuristic paradigm (MFO) [34] | Mirjalili 2015 | Tested on 29 benchmarks and 7 engineering designs. This optimizer follows the navigation tactic of moths. The outcomes of this method were better than those of existing techniques |
| Solving optimization problems using black hole algorithm (BHA) [89] | Masoum Farahmandian et al. 2015 | This paper suggested the black hole algorithm, which was checked on 19 benchmark functions. The results were better than those of PSO and GA |
| Forest optimization algorithm FOA [90] | Ghaemi et al. 2014 | This technique determines maximum and minimum values in a practical application and demonstrates that FOA can generally find acceptable solutions |
| Grey wolf optimizer (GWO) [39] | Mirjalili, Mirjalili, and Lewis 2014 | The researchers tested 29 benchmark functions and 3 engineering optimization problems. The algorithm is a swarm-intelligence optimizer inspired by grey wolves; their social structure and hunting behavior were used to develop the suggested model |
| Cuckoo search algorithm using Lèvy flight: a review [91] | Sangita Roy et al. 2013 | The authors discussed the cuckoo search algorithm using Levy flight and found that its performance is superior to the particle swarm optimizer and genetic algorithm when examined on 10 benchmark functions |
| Firefly algorithm: recent advances and applications (FA) [92] | Xin-She Yang et al. 2013 | This paper presented the firefly algorithm and its fundamentals and explained the balancing of the exploration and exploitation phases. In addition, the technique was tested on higher-dimensional optimization problems |
| Bat algorithm: literature review and applications (BA) [93] | Xin-She Yang 2013 | The author presented a literature review and applications of the bat methodology, which is efficient for solving optimization problems |
| Penguins search optimization algorithm (PeSOA) [94] | Youcef Gheraibia et al. 2013 | This paper presented the penguins search optimization algorithm, tested it on 3 benchmark functions, and obtained better results |
| Teaching–learning-based optimization (TLBO) [40] | Rao et al. 2012 | Applied in a power system, TLBO has two stages: a teaching stage and a student stage. Solutions are modified through interaction in both stages until the problem is solved |
| Krill herd (KH) [95] | A. H. Gandomi et al. 2012 | This paper proposed the krill herd algorithm, a biologically inspired algorithm. Tested on several benchmark functions |
| Flower pollination algorithm (FPA) [96] | Xin-She Yang 2012 | The author proposed the flower pollination method, which is motivated by the pollination process of flowers. The technique was tested on 10 benchmark functions and 1 nonlinear design problem. The results were better than those of PSO and GA |
| A hybrid CS/PSO algorithm for global optimization [97] | Ghodrati et al. 2012 | The authors presented a hybrid CS/PSO method to solve optimization problems. The technique was examined on many benchmark functions and proved better than other techniques |
| Biogeography-based optimization (BBO) [27] | Simon 2008 | Tested on 14 typical benchmark functions. The BBO method, which analyzes the spatial distribution of biological species, may be used to derive optimization algorithms |
| A new heuristic optimization technique: harmony search (HS) [98] | Geem, Kim, and Loganathan 2001 | This algorithm is inspired by an analogy with the music creation process. HS may not require starting values for the decision variables |
| Differential evolution (DE) [99] | Storn et al. 1997 | It shows how to minimize possibly nonlinear and non-differentiable continuous-space functions. It needs only a few robust control variables drawn from a predetermined numerical range |
| Tabu search-part I (TS) [100] | Fred Glover 1989 | This originated as a method for solving combinatorial real-world scheduling and covering problems |

Although the different types of metaheuristic algorithms differ in detail, they share two phases in the progression of the search: exploration and exploitation [42]. The exploration stage is the procedure of searching the solution space as broadly, randomly, and globally as feasible. The exploitation stage is the ability of the technique to search more precisely in the region identified by the exploration stage; its randomness decreases while its accuracy rises. When the exploration capacity of the technique dominates, it searches the solution space at random to generate more diverse solutions and converge quickly. When the exploitation capacity dominates, it performs more local checks so that the quality and accuracy of the results are enhanced. Moreover, enhancing the exploration capability reduces the exploitation capability, and vice versa. A further challenge is that the right balance between these two abilities differs from problem to problem. Consequently, it is comparatively difficult to achieve a balance between the two aspects that is efficient for all optimization problems.
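One common way to shift a search from exploration to exploitation is to shrink the move size as iterations proceed. The sketch below illustrates that idea under stated assumptions: the linear decay schedule, its `start` and `end` values, the greedy acceptance rule, and the Rastrigin test function are hypothetical choices for illustration and do not describe any specific algorithm from the literature above.

```python
import math
import random


def rastrigin(x):
    """Multimodal test objective with many local minima; minimum 0 at the origin."""
    return 10 * len(x) + sum(v * v - 10 * math.cos(2 * math.pi * v) for v in x)


def step_scale(iteration, max_iter, start=1.0, end=0.01):
    """Linearly decay the relative step size from `start` to `end`."""
    frac = iteration / max(1, max_iter - 1)
    return start + (end - start) * frac


def shrinking_step_search(objective, dim, lower, upper, max_iter=200, seed=1):
    """Single-solution random search whose moves shrink over time."""
    rng = random.Random(seed)
    current = [rng.uniform(lower, upper) for _ in range(dim)]
    current_fit = objective(current)
    for t in range(max_iter):
        # Early iterations: large steps (exploration).
        # Late iterations: small steps around the incumbent (exploitation).
        scale = step_scale(t, max_iter) * (upper - lower)
        candidate = [min(upper, max(lower, v + rng.uniform(-scale, scale)))
                     for v in current]
        fit = objective(candidate)
        if fit <= current_fit:  # greedy acceptance: keep only non-worse moves
            current, current_fit = candidate, fit
    return current, current_fit
```

A slower decay keeps the search exploratory for longer, while a faster decay commits to local refinement earlier. Because the suitable schedule depends on the objective, no single setting balances the two phases for every problem.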

Despite the success of traditional and recent metaheuristic algorithms, no technique can guarantee finding the global optimum for all optimization problems. The no-free-lunch (NFL) theorem proves this logically [43]. Accordingly, no single optimization technique has so far been shown to solve each and every optimization problem [44]. This theorem prompted several researchers to propose new algorithms and find efficient solutions for new classes of problems. Huang et al. [45] blended the crow search and particle swarm optimization algorithms. The spotted hyena optimizer (SHO) [46] is a metaheuristic approach inspired by spotted hyenas’ collective behavior in hunting, circling, and attacking prey. The whale optimization approach (WOA) [47] is a hybrid metaheuristic approach that uses whale and swarm human-based optimizers to obtain good exploration and convergence capabilities. MOSHO [48] is a multi-objective spotted hyena optimizer that minimizes several objective functions. Hu et al. [49] proposed a modified adaptive butterfly optimization algorithm (BOA), which relies on how butterflies produce scent as they migrate from one food source to another. Balakrishna et al. [50] applied a metaheuristic optimization method, HHO-PS, a new variant of Harris hawks optimization for local and global search. The binary spotted hyena optimizer (BSHO) [51] is a metaheuristic approach for discrete optimization problems based on spotted hyena hunting behavior. The Bernstrain-search differential evolution method (EBSD) [52] is a universal differential evolution algorithm that depends on mutation and crossover operators. The reliability-based design optimization method (RBDO) [53] addresses issues such as global convergence and intricate design variables. Ayani Nandi et al. [54] coupled the behavior of Harris hawks with the mathematical concepts of sine and cosine to strengthen the hybrid Harris hawks–sine cosine method (HHO-SCA) in the exploration and exploitation phases.

Background of suggested work

Nature contains many organisms, each with its own characteristic behavior; the behavior of some of them is attractive because it can be adopted directly and modeled mathematically to tackle nonconvex and unconstrained problems. This adaptability has led many researchers to imitate such behavior when developing computational methods and algorithms. On this basis, slime moulds have received growing attention over the past few years. Slime mould (historically described as a fungus) lives in cool, humid places and stretches its venous network to reach food sources. As it moves, a fan-shaped structure forms at the front and is connected to a tail-like structure that acts as an interconnection through which cytoplasm flows. Slime moulds use their venous structures to search for and surround multiple food points, and they creep more actively when food is scarce; these observations help to characterize how they search for, move toward, and capture food in a changing environment. Slime mould adjusts positive and negative feedback according to its distance from the food in order to reach it more efficiently, which shows that it follows a definite path to a food source, always targeting rich food spots and responding to the food stock and to environmental changes. The slime mould balances speed against accuracy when it decides to leave a region and start a fresh search. It decides to search for new food centers based on the available information and leaves the current region during foraging. Slime mould can also divide its biomass among several resources to capture richer food even when it currently has abundant food, and it adapts to the available food while reaching its target. Despite its good global search capability, slime mould is weak in local search and convergence. To enhance local search ability and convergence speed, the slime mould method is combined in this article with simulated annealing, which is good at local search. The proposed algorithm aims to increase the convergence rate and improve the local search of the slime mould algorithm by means of simulated annealing; thus, hSMA-SA is introduced.

Researchers draw inspiration from physics, genetics, the environment, and sociology to develop state-of-the-art metaheuristic algorithms. In the suggested work, the authors sought to address these issues by heuristically combining two strong algorithms for improved exploration and exploitation, as well as enhanced search capability. The following existing techniques, which take a long time to approach the global minimum and carry an increased computational burden, were selected from the literature: animal migration optimization (AMO) algorithm [101], sine cosine algorithm (SCA) [102], group search optimizer (GSO) algorithm [103], interior search algorithm (ISA) [104], electro search optimization (ESO) algorithm [105], tunicate swarm algorithm (TSA) [106], orthogonally designed adapted grasshopper optimization (ODAGO) algorithm [107], photon search algorithm (PSA) [74], gradient-based optimizer (GBO) [108], transient search optimizer (TSO) [109], dynamic group-based cooperative optimizer (DGBO) [110], central force optimization (CFO) algorithm [21], electromagnetic field optimization (EFO) algorithm [111], and harmony search algorithm (HS) [98]. Some hybrid and improved algorithms are the manta ray foraging optimization (MRFO) algorithm [112], life choice-based optimizer (LCBO) [113], improved fitness-dependent optimizer algorithm (IFDO) [114], incremental grey wolf optimizer and expanded grey wolf optimizer (I-GWO and Ex-GWO) [115], hybrid crossover oriented PSO and GWO (HC-PSOGWO) [116], self-adaptive differential artificial bee colony (SA-DABC) [117], and multi-objective heat transfer search algorithm (MHTSA) [118].

The remainder of this section describes the latest survey of SMA variants and SA variants, the novelty of the proposed technique, and the background of the suggested work.

Literature survey on slime mould algorithm variants and simulated annealing algorithm variants

This section surveys recent advances in SMA variants, together with approaches newly developed by various researchers. By imitating the food-seeking behavior of slime mould, researchers have developed a broad range of metaheuristic and hybrid versions of SMA to tackle many types of stochastic problems, as the cited studies show. Heuristic methods have been applied to real-world problems including network foraging, engineering design, image segmentation, optimum power flow, structural machines, fault-tolerant transportation, and feature selection. The accuracy of any algorithm's solution depends on its ability to strike a proper balance between intensification and diversification. Studies report that slow convergence is a frequent issue with many heuristic techniques, and computing efficiency falls as a result. Hybrid algorithms are therefore becoming increasingly popular for improving solutions effectively. Many researchers have successfully used various SMA methods to optimize particular objective functions; the eventual goal of these techniques is to find the best solution to a problem. Researchers have recently developed new SMA versions for a variety of applications. In the chaotic slime mould algorithm (CSMA) [119], a sinusoidal chaotic function is merged with the traditional SMA to improve its exploitation capability. The hybrid arithmetic optimizer–slime mould algorithm (HAOASMA) [120] addresses limited internal memory and slow convergence at local optima by alternately running the arithmetic optimizer and the slime mould algorithm, which improves the population and accelerates convergence. The slime mould algorithm with Levy flights (SMALF) [6] enhances search ability by replacing random weights with Levy flights. The OBLSMAL method [121] adds two search techniques to the basic SMA: first, opposition-based learning is used to boost the convergence rate of SMA, and then SMA is assisted by a Levy flight distribution to improve the exploration and exploitation phases. OBLSMAL thus proved better in convergence rate and search strategy than other algorithms. The hybrid slime mould salp swarm algorithm (HSMSSA) [122] was developed to improve convergence speed and search ability. LSMA [123] is reported to be successful in terms of both multilayer threshold precision and time. For both exploration and exploitation, it is desirable to keep the number of computations low.

Some variants of simulated annealing are as follows. Simulated annealing with adaptive neighborhood search (SA-ANS) [124] was developed to find new solutions when the algorithm is stuck at the same solution; that is, a larger number of solutions is explored at every iteration. Harris hawks optimization with simulated annealing [125] is used for feature selection; adding the SA algorithm to HHO reduces the time consumed, and the approach was applied to a complex optimization problem in detecting COVID-19 from CT scans. To reduce the high time complexity of capacitated vehicle routing problems, an enhanced simulated annealing algorithm combined with a crossover operator (ISA-CO) was suggested in [126] to improve convergence. Dynamic simulated annealing using a hidden Markov model (HMM) was introduced in [127]; the integrated HMM adapts the neighborhood structure at every iteration of SA, so that the most suitable neighborhood function is chosen depending on the history of the search. Overall, across the algorithms reviewed, many cases exhibit premature convergence in their simulation results.

The Lévy flight distribution and simulated annealing algorithm (LFDSA) [128] has a balanced structure in both the exploration and exploitation phases and performed well in tests on unimodal and multimodal benchmark functions and in non-parametric statistical tests. This enhanced capability helped to obtain optimum values of the fractional-order proportional-integral-derivative (FOPID) controller parameters, improving the closed-loop output voltage control of a buck converter in terms of time- and frequency-domain response as well as disturbance rejection. The improved atom search optimization algorithm (IASO) [129], a recently developed hybrid approach built by integrating atom search optimization and simulated annealing, was used to optimize a power system stabilizer function for a single-machine infinite-bus power system. In that study, the improved approach was used to find optimal controller settings for a power system stabilizer damping controller, demonstrating the potential and improved performance of the method on a difficult practical engineering problem; it compared favorably with other algorithms. The atom search algorithm with simulated annealing (hASO-SA) [130] is used to solve various optimization problems: the simulated annealing technique helps ASO avoid local minima and increases diversity in the search for the optimal solution. This hybrid algorithm combines the fast optimum-searching ability and hill-climbing behavior of the ASO and SA techniques, which adds to its ability to solve different optimization problems. hASO-SA was subsequently applied to training a multilayer perceptron (MLP) in three different ways using metaheuristic techniques. The first discovers the connection weights and biases that minimize the error of an MLP. The second discovers a suitable structural design for an MLP to handle a specific problem. In the third, parameters of the gradient-based learning algorithm, such as the learning rate and momentum, are tuned.

Finding solutions to optimization problems remains a pressing challenge, and the difficulty of a problem grows with the number of optimization variables. Furthermore, several deterministic methods are prone to becoming trapped in local optima and converge slowly. Nature-inspired metaheuristic optimization methods are used to tackle such problems. Two major characteristics of these methods are that they require no initial assumptions and that they are population based. No optimization method has yet been identified that can address all optimization problems [44]. This motivated the development of the hybrid slime mould–simulated annealing algorithm (hSMA-SA), a hybrid metaheuristic optimizer.

The newly proposed hybridized SMA variant improves on numerous population-based metaheuristic techniques in the following three ways.

First, two established procedures are combined to develop a simple and efficient simulation method that, compared with other current methods, performs complex mathematical computations faster. Standard SMA features are passed as initial parameters to the SA technique, which optimizes these values in order to improve the ability of standard SMA to find the optimal value of an optimization problem. This is accomplished without the use of complex procedures.

Second, in terms of results, the suggested method outperformed the classic SMA. The empirical outputs in the results section serve as evidence, confirming its numerical and experimental performance, and this sets the suggested approach apart from other methods. Most techniques fail to find an optimal solution as the number of iterations increases because of inherent limits. The proposed technique provides a standard strategy for managing this issue by assessing the operational phases of the approach, and this strategy may be used by other optimization approaches.

Third, the hSMA-SA technique aims to increase the optimization strength of traditional SMA in order to obtain optimal values while keeping the algorithm's complexity low. Combining the SA algorithm with the regular SMA yields the proposed optimization approach. Each of the two mathematical models discussed above has its own framework for dealing with optimization, and computational methods are applied to convert the principles of one algorithm into those of the other. Consequently, in this work, the SMA oscillation mode is mapped into SA parameters, and the SA features are translated back into SMA. New operators have been suggested in this approach to handle the complexity of hybrid variants. Sixteen benchmark functions along with 11 engineering optimization problems are investigated to evaluate the proposed hybrid version hSMA-SA with various parameter choices. The results outperform those of other algorithms currently in use. The most important new contributions are the following:

- (i) The simulated annealing algorithm is applied to improve the local search ability of SMA.
- (ii) The SA approach improves the quality of the initial population.
- (iii) To preserve the character of SMA, its parameters are left unchanged.
- (iv) The hSMA-SA strategy has been successfully tested on 6 standard unimodal, 5 standard multimodal, and 5 standard fixed-dimension benchmark functions, and on 11 multidisciplinary engineering design problems, to assess its effectiveness.
- (v) The performance of the newly introduced technique is verified with the Wilcoxon rank test.
- (vi) According to the comparison analysis in the results section, the suggested approach performed very well in terms of fitness evaluation as well as solution precision.

Proposed hybridized slime mould algorithm-simulated annealing algorithm

To obtain an efficient algorithm, this research work proposes a new hybrid metaheuristic algorithm named the hybridized slime mould-simulated annealing algorithm. As discussed in the introduction of this paper, the method is based on the dispersion and foraging behavior of slime mould. Mathematically, the structure of the propagation wave is modeled to find a better way of connecting food sources, with strong exploratory capability and exploitation tendency.

The traditional SMA is hybridized with the simulated annealing algorithm to further improve its performance. New methods typically arise because existing ones have drawbacks that prevent them from solving many difficult optimization problems, in which mathematical reformulations limit the efficiency of the methods. The suggested method is more beneficial than other algorithms, including the conventional SMA and SA algorithms. In the conventional SMA, premature convergence makes the convergence rate inefficient. The suggested algorithm uses simulated annealing to enhance the local search ability and improve the convergence of the traditional SMA, to solve various problems, and to optimize their objective functions. The simulated annealing algorithm lets SMA adjust the starting parameters of the search and hence avoids the trapping of slime moulds in local optima. It is well known that some methods are weak in global search. Every algorithm needs to maintain a balance between local and global search to perform well. In the suggested work, no complex operators are used to balance local and global search requirements. The computational time for the simulation results is low. In addition, the suggested method avoids becoming trapped in local optima.

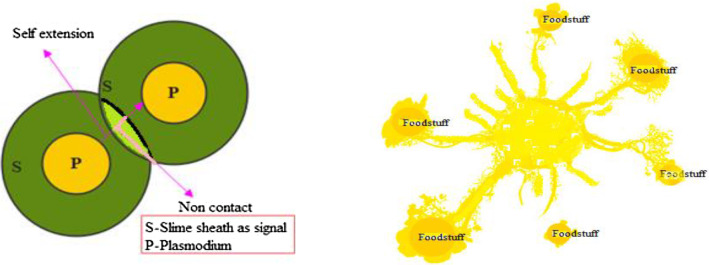

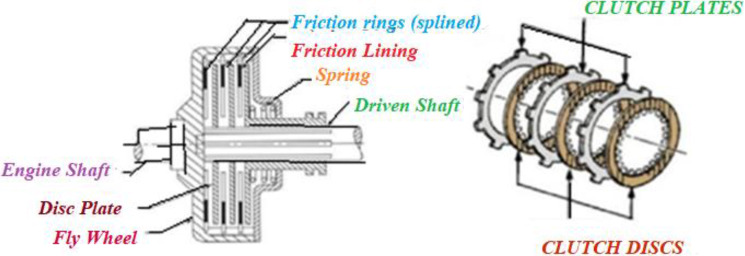

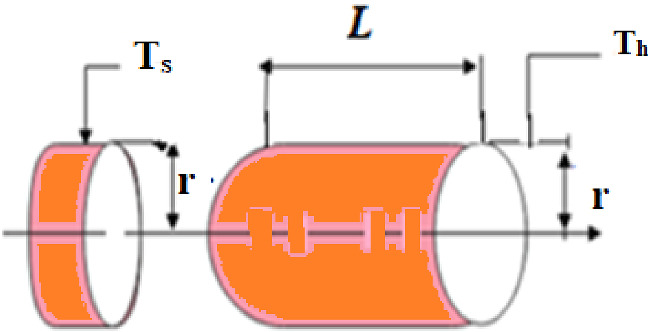

Slime mould algorithm

Physarum polycephalum is the scientific name of slime mould. In a 1931 article [131], Howard classified it as a fungus, studied its life cycle, and named it “slime mould”. Slime mould grows and lives in cool and moist places. Plasmodium, the active and dynamic phase of slime mould, is its main feeding phase. During this phase, the organic matter of the slime mould searches for food, surrounds it, and secretes enzymes to digest it. As depicted in Fig. 2, during migration the front end extends into a fan shape, followed by an interconnected venous network that allows cytoplasm to flow through it [132]. With their unique venous network, slime moulds can exploit several food sources at once and secrete enzymes to capture the food points. Depending on the food available in the environment, slime mould can grow to more than 900 m² [131].

Fig. 2.

Morphology of a growing slime mould

Slime mould is regarded as a model organism [133] because it can easily be cultured on agar and oatmeal. Kamiya et al. [134] studied the cytoplasm flow of slime mould in great detail, which helped to clarify how slime mould obtains food from its surroundings. When a vein reaches a food source, the bio-oscillator sends out a propagation wave that speeds up the cytoplasm flow within the vein [135]. The faster the cytoplasm flows, the thicker the vein becomes. Through this combination of positive and negative feedback, the slime mould can build an optimal path to connect to the food in a comparatively better way. As a result, slime mould has been used as a mathematical model in graph theory and route networks [136].

The venous structure of slime mould develops along with the phase difference of the contraction mode [135], and three relationships have been found between the morphological changes of the venous structure and the contraction mode of slime mould.

- (i) As the contraction progresses from outside to inside, thick veins form along the radius.
- (ii) Anisotropy appears when the contraction mode is unstable.
- (iii) The venous structure ceases to form once the contraction mode of slime mould is no longer ordered in time or space.

The relationship between the venous structure and the contraction mode remains consistent as cells develop naturally. In [137], the thickness of each vein is determined using the Physarum solver and the feedback of the cytoplasm flow.

An increase in the cytoplasm flow leads to an increase in vein diameter, and when the flow decreases, the vein contracts and its diameter drops. Slime mould establishes a stronger route where the food concentration is higher, thus ensuring that it obtains the maximum concentration of nutrients. Recent research indicates that the foraging of slime mould follows optimization theory [138]. Slime mould can select food sources with higher concentration depending on food availability and environmental changes. However, it must also weigh speed against risk when deciding whether to leave an area and forage elsewhere, and it makes swift decisions to avoid harm from its environment [139]. Careful observation shows that when slime mould decides quickly, it takes very little time to reach a new region with rich food centers. Hence, when choosing a food source, the slime mould must strike a balance between speed and accuracy. Experiments have shown that slime mould is less likely to leave an area where it obtains high-quality food [140]. Owing to its unique biological characteristics, however, slime mould can exploit several food sources at once: even when it has discovered a superior food source, it may detach part of its biomass to exploit both sources at the same time [137]. Slime mould also adjusts its search pattern dynamically according to the quality of the available food. When the quality of the food sources is high, it adopts a region-limited search strategy [141], focusing its search on the food sources already found. When the density of available food is low, slime mould leaves the region in search of other food sources [142]. This adaptive search strategy is even more evident when food patches of varying quality are scattered over a region. The physics and features of the slime mould are described mathematically in the sections that follow.

Mathematical modeling of slime mould algorithm

The slime mould algorithm is a metaheuristic algorithm modeled on the foraging behavior of slime moulds. Slime mould uses biological oscillation to change the cytoplasmic flow through its veins and move toward better food sources; it then surrounds the food and secretes enzymes to digest it. The mathematical model represents this behavior of slime mould in obtaining food. The mathematical modeling of the slime mould algorithm and its food-searching stages is described below.

Approaching food. Slime mould locates a food point by following the odor present in the air. To describe this approach behavior and the associated contraction mode mathematically, the following equations are provided:

$$\vec{X}(t+1) = \vec{X}_b(t) + \vec{vb}\cdot\left(\vec{W}\cdot\vec{X}_A(t) - \vec{X}_B(t)\right), \quad r < p \tag{1}$$

$$\vec{X}(t+1) = \vec{vc}\cdot\vec{X}(t), \quad r \ge p \tag{2}$$

Here, $\vec{vb}$ and $\vec{vc}$ are two parameter variables: $\vec{vc}$ drops from one to zero and $\vec{vb}$ lies within the limits $[-a, a]$. $t$ indicates the current iteration. $\vec{X}_b$ is the location of the individual where the odor concentration is highest, $\vec{X}$ is the position of the slime mould, $\vec{X}_A$ and $\vec{X}_B$ are two individuals chosen at random from the population, and $\vec{W}$ is the weight of the slime mould.

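The limit $p$ is described by the following equation:

$$p = \tanh\left|S(i) - BF\right| \tag{3}$$

where $i = 1, 2, \ldots, n$, $S(i)$ is the fitness of the $i$-th slime mould, and $BF$ is the best fitness obtained over all iterations. $\vec{vb}$ is expressed by the following equations:

$$\vec{vb} = [-a,\ a] \tag{4}$$

$$a = \operatorname{arctanh}\left(-\left(\frac{t}{t_{\max}}\right) + 1\right) \tag{5}$$

where $t_{\max}$ is the maximum number of iterations.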
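As a rough illustration of Eqs. (3)–(5), the sketch below computes the switching limit p and the oscillation bound a, assuming the standard SMA forms p = tanh|S(i) − BF| and a = arctanh(1 − t/max_t). The function names and the convention of counting t from 1 to max_t (which keeps arctanh finite) are assumptions made for this example and are not taken from the paper.

```python
import math
import random

def switch_probability(fitness_i, best_fitness):
    """p = tanh|S(i) - BF|; equals 0 when an agent already has the best fitness."""
    return math.tanh(abs(fitness_i - best_fitness))

def oscillation_bound(t, max_t):
    """a = arctanh(1 - t/max_t) for 1 <= t <= max_t (t = 0 would be infinite)."""
    if not 1 <= t <= max_t:
        raise ValueError("t must satisfy 1 <= t <= max_t")
    return math.atanh(1.0 - t / max_t)

def sample_vb(t, max_t, rng=random):
    """Draw vb uniformly from [-a, a]."""
    a = oscillation_bound(t, max_t)
    return rng.uniform(-a, a)

if __name__ == "__main__":
    # The bound shrinks toward zero as the iterations progress.
    for t in (1, 250, 500):
        print(t, round(oscillation_bound(t, 500), 4))
```

Because a shrinks to zero at the last iteration, the range from which vb is drawn narrows over time, which is how the search moves from exploration toward exploitation.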

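The expression for $\vec{W}$ is given as

$$\vec{W}(\text{StenchIndex}(i)) = \begin{cases} 1 + r\cdot\log\left(\dfrac{PF - S(i)}{PF - lF} + 1\right), & \text{condition} \\[2ex] 1 - r\cdot\log\left(\dfrac{PF - S(i)}{PF - lF} + 1\right), & \text{others} \end{cases} \tag{6}$$

$$\text{StenchIndex} = \operatorname{sort}(S) \tag{7}$$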

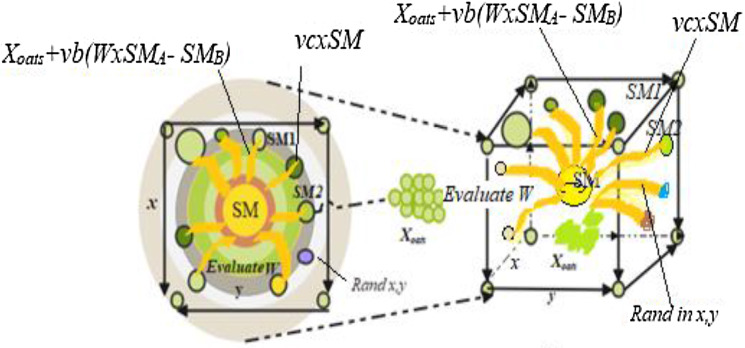

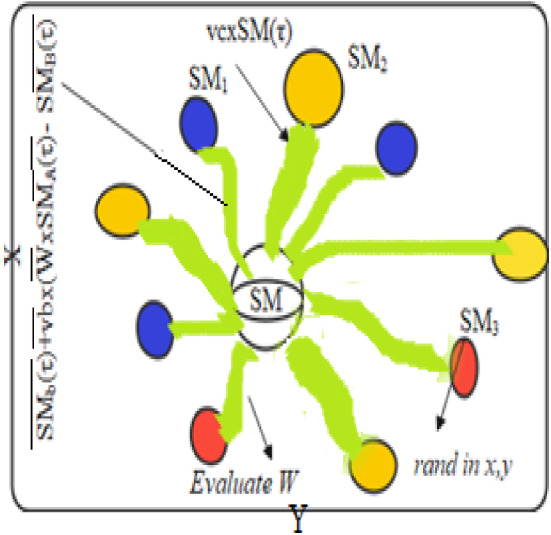

Here, the condition indicates that $S(i)$ ranks in the first half of the population, $r$ is a random number in the interval [0, 1], PF is the best fitness obtained in the current iteration, lF is the worst fitness obtained in the current iteration, and the stench index is the sequence of sorted fitness values. Figures 3 and 4 depict the outcomes of Eqs. (1) and (2) and the probable locations of slime mould in 2D and 3D views. The location of an individual $\vec{X}$ may be updated toward the best position $\vec{X}_b$ currently obtained, and adjusting $\vec{vb}$, $\vec{vc}$, and $\vec{W}$ changes the location of the individual.
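The weight described above can be sketched as follows for a minimization problem. This is a minimal illustration, assuming the standard SMA form W = 1 ± r·log((PF − S(i))/(PF − lF) + 1) with a base-10 logarithm; the function name `slime_weights` and the handling of the degenerate case PF = lF are assumptions of this example.

```python
import math
import random

def slime_weights(fitness, rng=random):
    """Return one weight per agent from its rank in the sorted fitness list."""
    n = len(fitness)
    # Stench index: agent indices sorted by fitness, best (smallest) first.
    order = sorted(range(n), key=lambda i: fitness[i])
    pf, lf = fitness[order[0]], fitness[order[-1]]   # best and worst fitness
    weights = [0.0] * n
    for rank, i in enumerate(order):
        # Ratio lies in [0, 1]; it is taken as 0 if all fitness values are equal.
        ratio = (pf - fitness[i]) / (pf - lf) if pf != lf else 0.0
        term = rng.random() * math.log10(ratio + 1.0)
        # The better half of the population gets 1 + term, the rest 1 - term.
        weights[i] = 1.0 + term if rank < n // 2 else 1.0 - term
    return weights

if __name__ == "__main__":
    random.seed(0)
    print(slime_weights([3.0, 1.0, 2.0, 4.0]))
```

The best agent always receives a weight of exactly 1, since its ratio is zero, while agents in the worse half receive weights below 1.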

Fig. 3.

View in two dimensions of a probable position

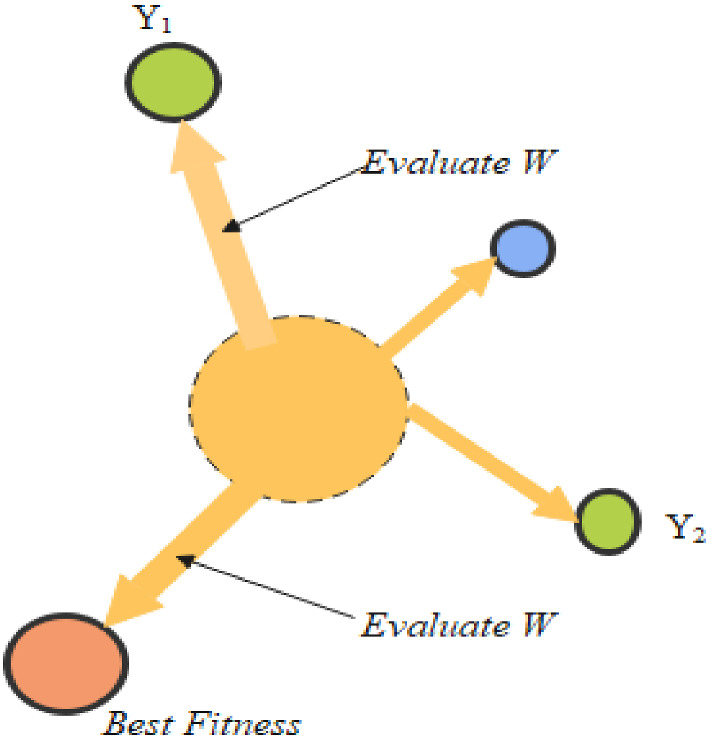

Fig. 4.

Fitness evaluation

Wrapping food. This part mathematically simulates the contraction mode of the venous tissue structure of slime mould. The higher the food concentration contacted by a vein, the stronger the wave generated, the faster the cytoplasm flows, and the thicker the vein becomes, as described by Eq. (6), which models the positive and negative feedback between the vein thickness of slime mould and the food concentration explored. The r in Eq. (6) represents the uncertainty of the venous contraction mode. The logarithm reduces the rate of change of the numerical value so that the contraction frequency does not change too much. The condition simulates how slime mould adjusts its search pattern according to the quality of the available food. The weight of a region increases where the food concentration is high; when the food concentration falls, the weight of that region decreases, and the slime mould turns to explore other sites. Figure 4 portrays the fitness evaluation procedure of a slime mould.

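The new location of the slime mould is given mathematically as

$$\vec{X}^{*} = rand\cdot(UB - LB) + LB, \quad rand < z \tag{8.1}$$

$$\vec{X}^{*} = \vec{X}_b(t) + \vec{vb}\cdot\left(\vec{W}\cdot\vec{X}_A(t) - \vec{X}_B(t)\right), \quad r < p \tag{8.2}$$

$$\vec{X}^{*} = \vec{vc}\cdot\vec{X}(t), \quad r \ge p \tag{8.3}$$

The upper and lower limits of the search range are specified as $UB$ and $LB$, $rand$ and $r$ are random values in the interval [0, 1], and $z$ is the threshold below which an individual is re-initialized at random.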

Food grabble. Slime mould relies on the propagation wave produced by its biological oscillator to vary the cytoplasm flow through its veins, which allows it to absorb food more effectively. To imitate the changes in venous width of slime mould, $\vec{W}$, $\vec{vb}$, and $\vec{vc}$ are used to realize the variations. The weight $\vec{W}$ represents the oscillation frequency with which slime mould creeps toward different food locations: it reaches the food quickly when food is abundant and slowly when food is scarce. This property enables slime mould to find an optimal food source. The value of $\vec{vb}$ varies randomly within $[-a, a]$ and approaches zero as the iterations increase.

The value of $\vec{vc}$ lies in the interval [−1, 1] and gradually drops to zero as the iterations increase. To find the best place with abundant food, slime mould keeps exploring for richer food sources, which yields better solutions, and keeps moving toward the optimum solution.
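The three-branch position update of Eqs. (8.1)–(8.3) can be sketched for a single agent as below. This is an illustrative sketch only: the function name `sma_update`, the scalar weight `w`, the final clamping to the search range, and the default restart threshold `z = 0.03` are assumptions of this example and are not stated in the text.

```python
import random

def sma_update(x, x_best, x_a, x_b, w, p, vb_bound, vc_bound,
               lb, ub, z=0.03, rng=random):
    """Return the new position of one agent (lists of floats, scalar bounds)."""
    if rng.random() < z:
        # Eq. (8.1): random re-initialization inside the search range.
        return [lb + rng.random() * (ub - lb) for _ in x]
    new_x = []
    for j in range(len(x)):
        if rng.random() < p:
            # Eq. (8.2): move around the best position found so far.
            vb = rng.uniform(-vb_bound, vb_bound)
            new_x.append(x_best[j] + vb * (w * x_a[j] - x_b[j]))
        else:
            # Eq. (8.3): contract the current position.
            vc = rng.uniform(-vc_bound, vc_bound)
            new_x.append(vc * x[j])
    # Keep the agent inside [lb, ub] (assumed boundary handling).
    return [min(max(v, lb), ub) for v in new_x]

if __name__ == "__main__":
    rng = random.Random(0)
    print(sma_update([2.0, -3.0], [1.0, 1.0], [0.5, 0.5], [0.1, 0.1],
                     w=1.0, p=0.5, vb_bound=1.0, vc_bound=0.8,
                     lb=-10.0, ub=10.0, rng=rng))
```

Two limiting cases show the intent: with `vc_bound = 0` and `p = 0` the agent collapses to the origin, and with `vb_bound = 0` and `p = 1` it jumps to the best position.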

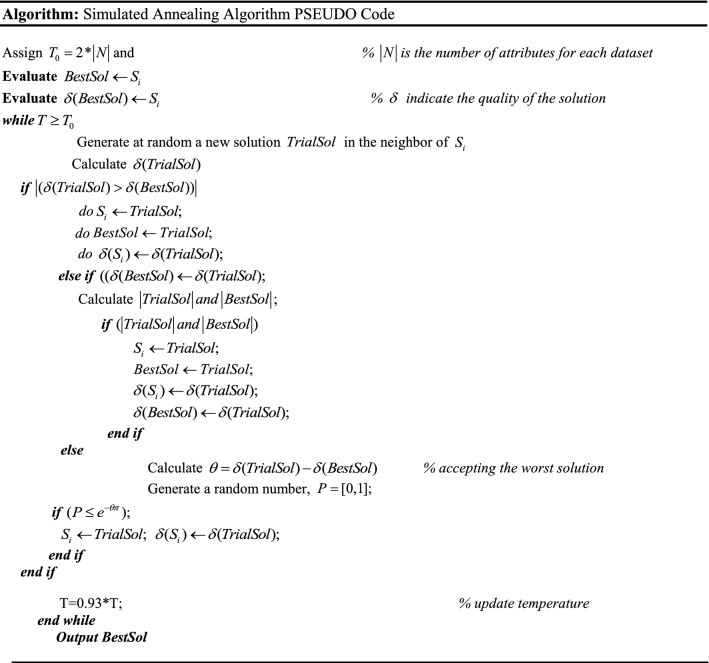

Simulated annealing algorithm

Simulated annealing (SA) is a probabilistic technique for approximating the global optimum of a function. This metaheuristic approaches the global optimal value of a given optimization problem in a large search space, and it is typically used when the search space is discrete. Simulated annealing may be preferable to procedures such as gradient descent or branch and bound for problems in which finding an approximate global optimum within a limited time is more important than finding a precise local optimum. The term annealing derives from the metallurgical procedure of heating and cooling a material to increase crystal size and remove defects. Scott Kirkpatrick was the first to develop this approach, which was named the simulated annealing algorithm. When exact approaches fail, simulated annealing can be used to tackle very complex computational optimization problems; although it offers only an approximate solution to the global minimum, this may suffice in many practical cases.

The algorithm first generates a random solution vector, known as the initial solution; a predefined neighborhood structure then generates a neighbor solution, which is evaluated using the objective function. If the neighbor is better than the initial solution, the improving move is always accepted; a worse neighbor, however, is accepted with a certain probability determined by the Boltzmann probability in the following equation:

$$P = e^{-\theta / T} \tag{9}$$

where $\theta$ is the difference between the fitness of the generated neighbor solution and that of the best solution, and $T$ is the temperature, which decreases according to the cooling schedule (with a cooling constant of 0.93 in this study). The steps for simulated annealing are given as follows.
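A minimal Python sketch of these steps is shown below. It uses the standard Metropolis rule for minimization, measuring the fitness difference against the current solution rather than the best one, and a geometric cooling constant of 0.93. The function names, the initial temperature of 1.0, the step count, and the toy objective are assumptions of this example, not the authors' implementation.

```python
import math
import random

def accept(delta, temperature, rng=random):
    """Accept improvements always; accept worse moves with exp(-delta / T)."""
    if delta <= 0:
        return True
    return rng.random() < math.exp(-delta / temperature)

def simulated_annealing(objective, start, neighbor, t0=1.0, alpha=0.93,
                        steps=500, rng=random):
    """Minimize `objective`, starting at `start` and cooling geometrically."""
    current, f_cur = start, objective(start)
    best, f_best = current, f_cur
    temperature = t0
    for _ in range(steps):
        cand = neighbor(current, rng)            # propose a neighbor solution
        f_cand = objective(cand)
        if accept(f_cand - f_cur, temperature, rng):
            current, f_cur = cand, f_cand
            if f_cur < f_best:                   # remember the best ever seen
                best, f_best = current, f_cur
        temperature *= alpha                     # cooling step
    return best, f_best

if __name__ == "__main__":
    rng = random.Random(42)
    step = lambda x, r: x + r.uniform(-0.5, 0.5)
    print(simulated_annealing(lambda x: x * x, 5.0, step, rng=rng))
```

Early on, the high temperature lets the search accept some worse moves and leave local optima; as the temperature falls the rule becomes almost purely greedy.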

Slime mould-simulated annealing algorithm

In the suggested hybridized slime mould-simulated annealing algorithm, the position vector resulting from Eqs. (8.1), (8.2), and (8.3) is updated by the hSMA-SA technique, and the updated position vector is used by the slime mould to evaluate food sources in three stages: approaching food, wrapping food, and food grabble. Simulated annealing is merged with the slime mould algorithm to strengthen the exploitation phase of the original slime mould algorithm, which has excellent global search ability but poor local search ability. Conversely, the simulated annealing algorithm has strong local search ability but weak global search ability. To combine SMA and SA, a heuristic strategy is chosen in which the simulated annealing method is applied immediately after the slime mould step. It is observed that the local search ability of the traditional slime mould algorithm in the exploitation phase improves, yielding better results, when it is blended with simulated annealing. In the suggested algorithm, the initial temperature is set according to the number of attributes of the individual dataset.

The cooling schedule for the simulated annealing method is given by the following equation:

$$T_{k+1} = \alpha\, T_k \tag{10}$$

where $T_k$ is the temperature at iteration $k$. The temperature T controls the evolution of the system state S according to its sensitivity to variations in system energy. When T is large, the evolution of S is sensitive to coarse energy variations; when T is small, it is sensitive to finer energy variations. The temperature starts at 1.0 and is multiplied by a constant α at the end of each iteration to reduce it. The constant α ranges between 0.8 and 0.99; in this study, α = 0.93.
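To give a feel for the effect of α, the sketch below evaluates a geometric cooling schedule that starts at 1.0 and is multiplied by α after each iteration, as described above. The helper names and the target temperature of 0.01 are illustrative assumptions.

```python
import math

def temperature(k, t0=1.0, alpha=0.93):
    """Temperature after k cooling steps: t0 * alpha**k."""
    return t0 * alpha ** k

def iterations_to_reach(t_target, t0=1.0, alpha=0.93):
    """Smallest k for which t0 * alpha**k drops to t_target or below."""
    return math.ceil(math.log(t_target / t0) / math.log(alpha))

if __name__ == "__main__":
    # Compare the ends of the admissible range with the value used in the study.
    for alpha in (0.80, 0.93, 0.99):
        print(alpha, iterations_to_reach(0.01, alpha=alpha))
```

A value of α close to 0.99 cools slowly and keeps accepting worse moves for longer, while a value close to 0.8 makes the search greedy after only a few iterations.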

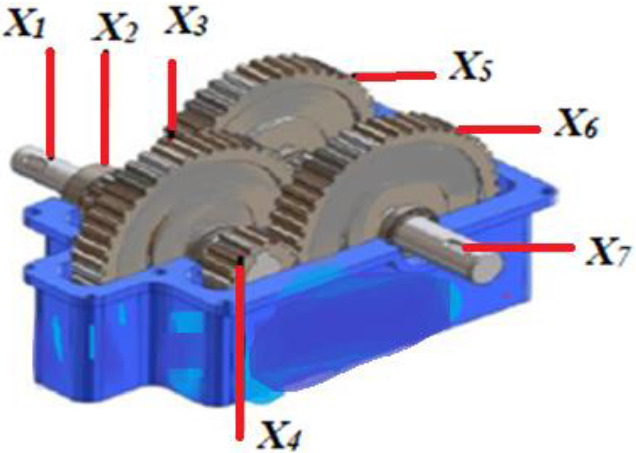

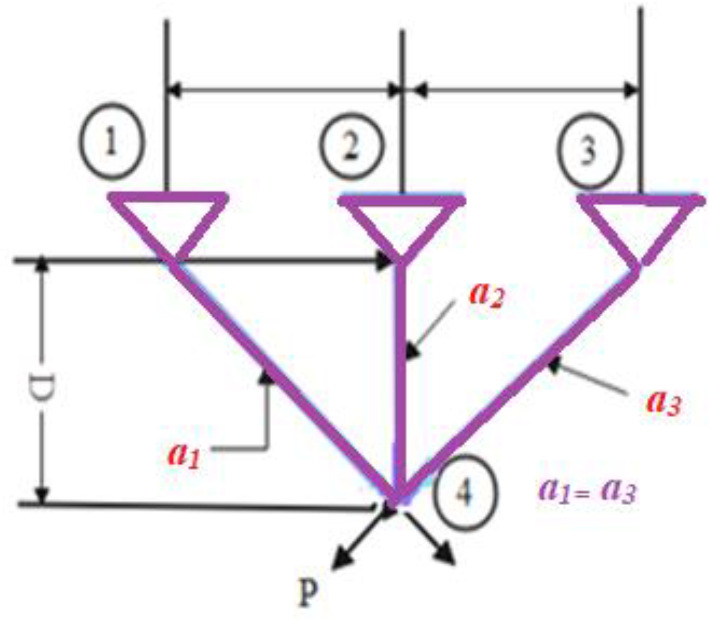

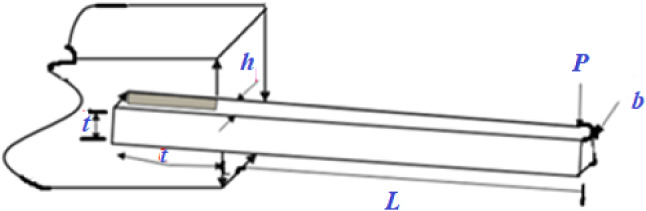

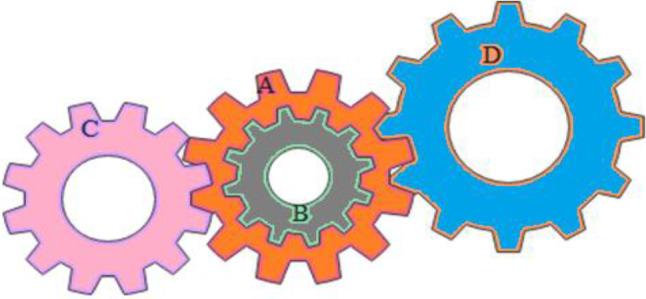

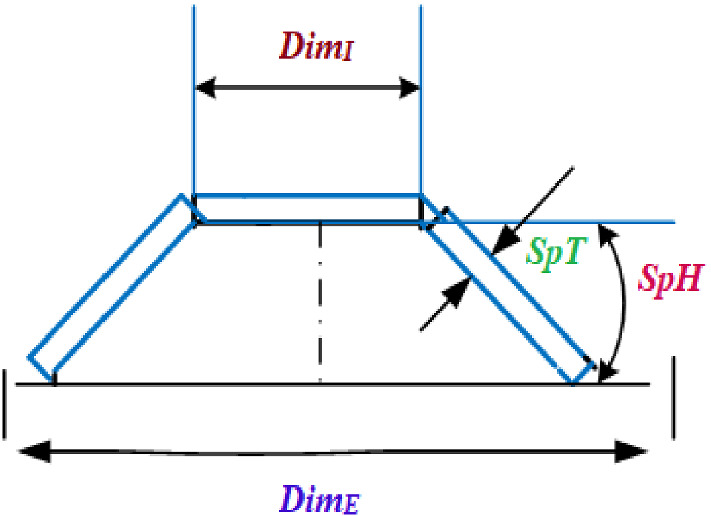

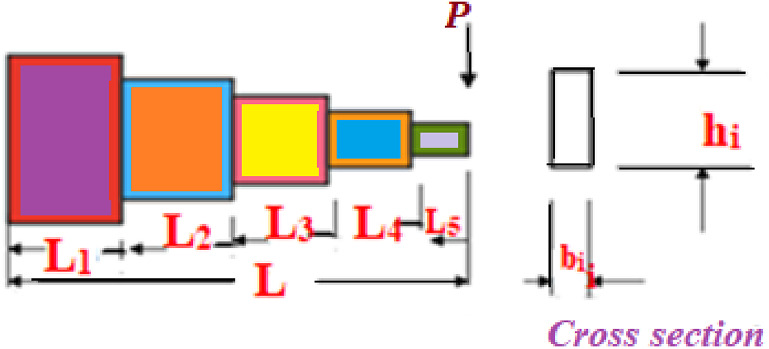

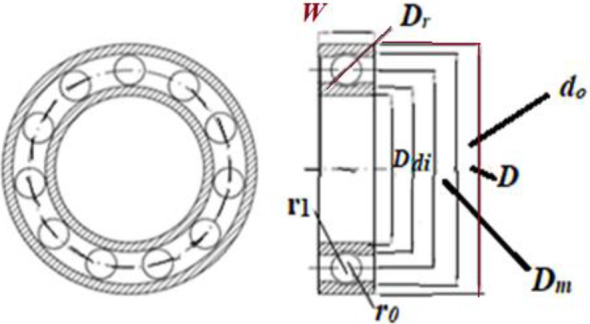

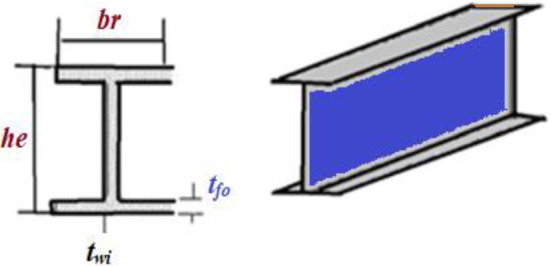

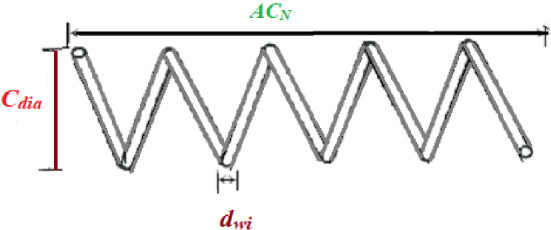

In Fig. 5, a slime mould at (X, Y) adjusts its location according to the most recently obtained position vectors, as shown in 2D and 3D views; the figure also shows the location of the food (X*, Y*) and how the search region develops. Improved positions are obtained by adjusting the vectors $\vec{vb}$ and $\vec{vc}$. The exploration phase of hSMA-SA is the same as that of the traditional SMA. The vectors $\vec{vb}$ and $\vec{vc}$ are used to search globally in the mathematical model of divergence (Fig. 6). The slime mould spreads through the environment in search of food when the vector $\vec{vb}$ is greater than 1 (Fig. 7). The pseudocode of the developed hSMA-SA algorithm is shown in Fig. 8, and Fig. 9 displays the flow chart.
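The overall idea of applying SA immediately after each SMA step can be sketched as follows. This is a simplified illustration and not the authors' pseudocode: the Gaussian neighbor move (standard deviation of 1% of the range), the injection of an accepted SA candidate into the last population slot, the restart threshold `z = 0.03`, the base-10 logarithm, and the toy `sphere` objective are all assumptions of this example.

```python
import math
import random

def sphere(x):
    """Toy objective with minimum 0 at the origin (demo only)."""
    return sum(v * v for v in x)

def hsma_sa(objective, dim, lb, ub, agents=30, max_t=500,
            z=0.03, t0=1.0, alpha=0.93, seed=0):
    rng = random.Random(seed)
    clip = lambda x: [min(max(v, lb), ub) for v in x]
    pop = [[rng.uniform(lb, ub) for _ in range(dim)] for _ in range(agents)]
    best_x, best_f = None, math.inf
    temperature = t0
    for t in range(1, max_t + 1):
        fit = [objective(x) for x in pop]
        order = sorted(range(agents), key=lambda i: fit[i])  # stench index
        pf, lf = fit[order[0]], fit[order[-1]]               # best, worst
        if pf < best_f:
            best_x, best_f = pop[order[0]][:], pf
        a = math.atanh(1.0 - t / max_t)      # bound of vb
        b = 1.0 - t / max_t                  # bound of vc
        new_pop = []
        for rank, i in enumerate(order):
            ratio = (pf - fit[i]) / (pf - lf) if pf != lf else 0.0
            term = rng.random() * math.log10(ratio + 1.0)
            w = 1.0 + term if rank < agents // 2 else 1.0 - term
            p = math.tanh(abs(fit[i] - best_f))
            if rng.random() < z:             # random restart
                x_new = [rng.uniform(lb, ub) for _ in range(dim)]
            else:                            # approach or contract, per dimension
                xa, xb = rng.choice(pop), rng.choice(pop)
                x_new = [
                    best_x[j] + rng.uniform(-a, a) * (w * xa[j] - xb[j])
                    if rng.random() < p
                    else rng.uniform(-b, b) * pop[i][j]
                    for j in range(dim)
                ]
            new_pop.append(clip(x_new))
        pop = new_pop
        # SA step applied right after the SMA update: perturb the best solution.
        sigma = 0.01 * (ub - lb)
        cand = clip([v + rng.gauss(0.0, sigma) for v in best_x])
        f_cand = objective(cand)
        delta = f_cand - best_f
        if delta <= 0 or (temperature > 0
                          and rng.random() < math.exp(-delta / temperature)):
            pop[-1] = cand                   # let the candidate join the swarm
            if f_cand < best_f:
                best_x, best_f = cand, f_cand
        temperature *= alpha                 # geometric cooling
    return best_x, best_f

if __name__ == "__main__":
    print(hsma_sa(sphere, dim=5, lb=-100.0, ub=100.0, max_t=200))
```

The best solution is stored separately from the population, so accepting a worse SA candidate adds diversity to the swarm without losing the best point found so far.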

Fig. 5.

Probable positions in 2D and 3D

Fig. 6.

SMA pseudocode

Fig. 7.

Simulated annealing algorithm pseudocode

Fig. 8.

Pseudocode for hSMA-SA algorithm

Fig. 9.

Flow chart for hSMA-SA algorithm

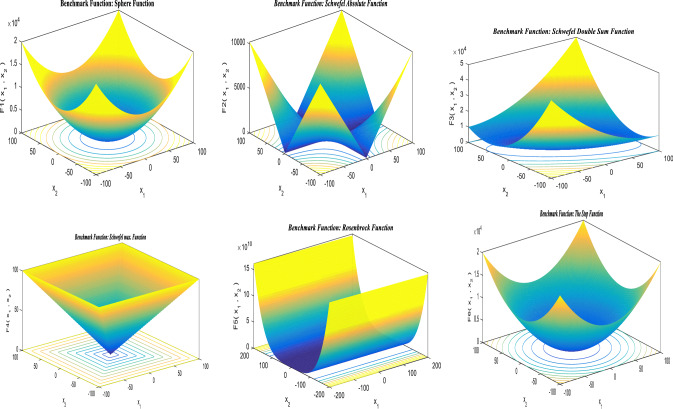

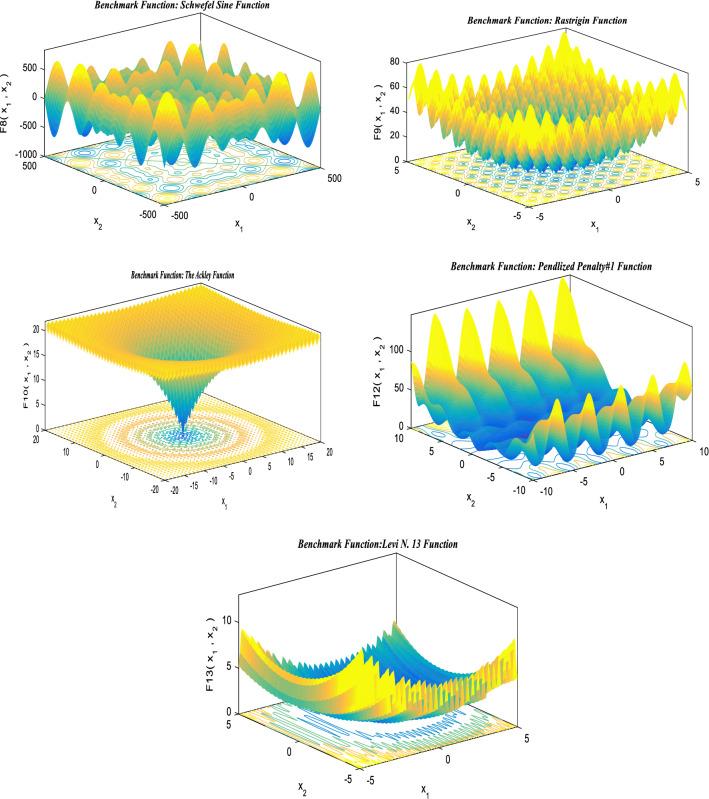

Standard benchmark functions

The suggested hSMA-SA optimization strategy is tested on a set of distinct benchmark functions [143]. The standard benchmarks are divided into three categories: unimodal (UM), multimodal (MM), and fixed-dimension (FD). For each benchmark function, the dimension, range limits, and optimal value of the objective (fmin) are specified. Tables 2, 3, and 4 give the mathematical formulations of the UM, MM, and FD functions, respectively, and the findings are given in the results section. The performance on the benchmark functions is evaluated over 30 trial runs. The parameter settings for the proposed method are shown in Table 5.
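For orientation, the sketch below implements three widely used benchmark functions of the kind referred to here: the sphere function (unimodal) and the Rastrigin and Ackley functions (multimodal). Their pairing with specific table rows is an assumption of this example; the dimensions, ranges, and fmin values in the `BENCHMARKS` dictionary follow the usual definitions of these functions.

```python
import math

def sphere(x):
    """Unimodal: sum of squares, minimum 0 at the origin."""
    return sum(v * v for v in x)

def rastrigin(x):
    """Multimodal: many regularly spaced local minima, global minimum 0 at 0."""
    return sum(v * v - 10.0 * math.cos(2.0 * math.pi * v) + 10.0 for v in x)

def ackley(x):
    """Multimodal: nearly flat outer region with a deep central basin."""
    n = len(x)
    s1 = sum(v * v for v in x) / n
    s2 = sum(math.cos(2.0 * math.pi * v) for v in x) / n
    return -20.0 * math.exp(-0.2 * math.sqrt(s1)) - math.exp(s2) + 20.0 + math.e

# name -> (function, dimension, (lower, upper), fmin); values are assumptions.
BENCHMARKS = {
    "sphere": (sphere, 30, (-100.0, 100.0), 0.0),
    "rastrigin": (rastrigin, 30, (-5.12, 5.12), 0.0),
    "ackley": (ackley, 30, (-32.0, 32.0), 0.0),
}

if __name__ == "__main__":
    for name, (f, dim, (lo, hi), fmin) in BENCHMARKS.items():
        print(name, f([0.0] * dim), f([hi] * dim))
```

Each entry bundles the function with its dimension, range, and known minimum, so an optimizer can be run over the whole set in a loop and its result compared against fmin.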

Table 2.

Unimodal standard benchmark functions

| Functions | Dimensions | Range | fmin |
|---|---|---|---|
| | 30 | [−100, 100] | 0 |
| | 30 | [−10, 10] | 0 |
| | 30 | [−100, 100] | 0 |
| | 30 | [−100, 100] | 0 |
| | 30 | [−38, 38] | 0 |
| | 30 | [−100, 100] | 0 |
| | 30 | [−1.28, 1.28] | 0 |

Table 3.

Multimodal standard benchmark functions

| Multimodal benchmark functions | Dim | Range | fmin |
|---|---|---|---|
| | 30 | [−500, 500] | −418.98295 |
| | 30 | [−5.12, 5.12] | 0 |
| | 30 | [−32, 32] | 0 |
| | 30 | [−600, 600] | 0 |
| | 30 | [−50, 50] | 0 |
| | 30 | [−50, 50] | 0 |

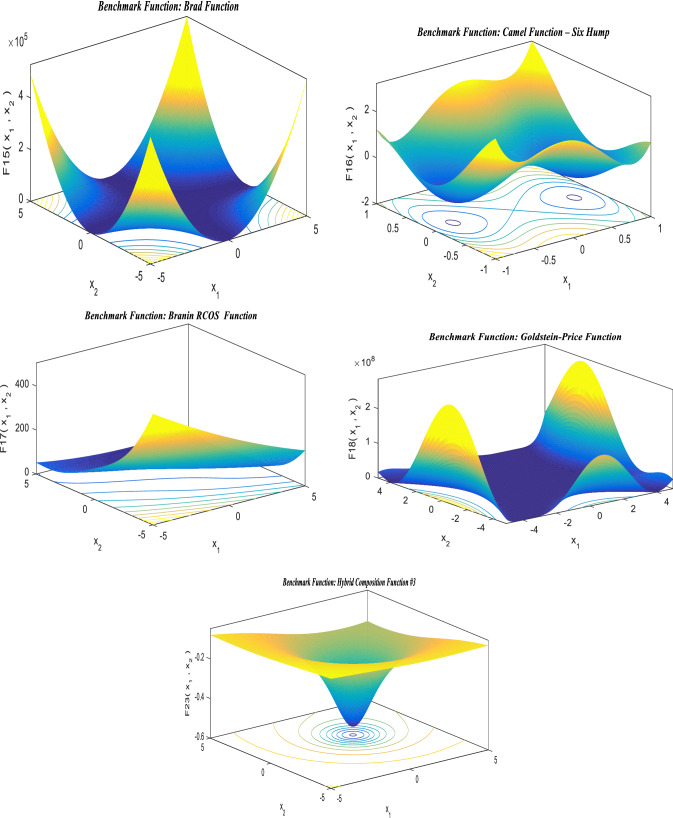

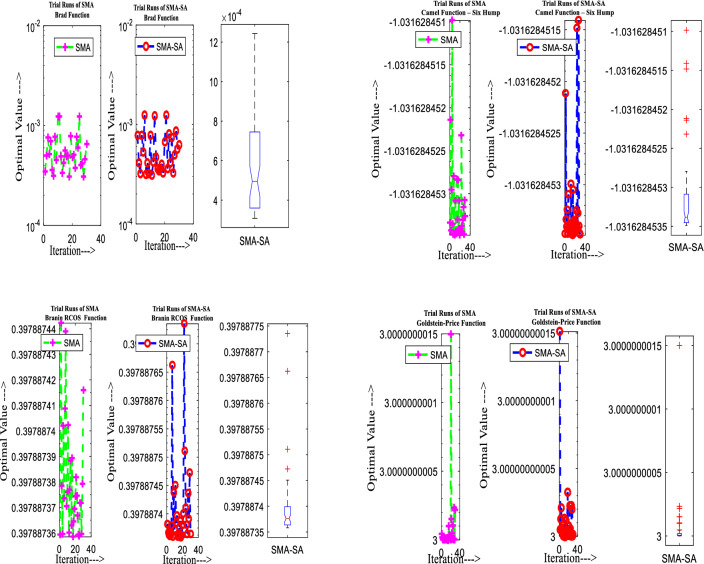

Table 4.

Fixed-dimension benchmark functions

| Fixed-dimension (FD) benchmark functions | Dimension | Range | fmin |
|---|---|---|---|
| | 2 | [−65.536, 65.536] | 1 |
| | 4 | [−5, 5] | 0.00030 |
| | 2 | [−5, 5] | −1.0316 |
| | 2 | [−5, 5] | 0.398 |
| | 2 | [−2, 2] | 3 |
| | 3 | [1, 3] | −3.32 |
| | 6 | [0, 1] | −3.32 |
| | 4 | [0, 10] | −10.1532 |
| | 4 | [0, 10] | −10.4028 |
| | 4 | [0, 10] | −10.5363 |

Table 5.

Parameter constraints for the suggested technique

| Parameter setting | hSMA-SA |
|---|---|
| Search agents | 30 |
| Count of iterations for benchmark problems (unimodal, multimodal and fixed dimension) | 500 |
| Count of iterations for engineering optimal designs | 500 |
| Count of trial runs for each function and engineering optimal designs | 30 |

Thirty search agents are used throughout the study, with a maximum of 500 iterations. The proposed hSMA-SA was evaluated in MATLAB R2016a on a laptop with a 7th-generation Intel Core i3 processor and 8 GB of RAM.

According to the results of the comparative study, the proposed technique significantly improves the rate of convergence and strengthens its ability to escape quickly from local stagnation.

Results and analysis

In this study, the proposed slime mould-simulated annealing method is assessed on three main classes of standard benchmark functions to validate the performance of the hSMA-SA technique. Unimodal benchmark functions, which have a single optimal solution, are used to assess the exploitation ability and convergence rate of hSMA-SA. Multimodal functions, as the name indicates, have several optima, so they can be used to test exploration and the ability to avoid settling on a local optimum. Multimodal and fixed-dimension benchmark functions differ in their design variables: in fixed-dimension functions the design variables are fixed, which provides a different search space against which the multimodal results can be compared.

For a detailed study, the outcomes of the proposed hSMA-SA technique are documented in tables that report the statistical results, the computation time, and the comparison with other techniques, all obtained with 500 iterations and 30 runs.
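The reporting protocol described above (repeat the optimizer for a number of independent runs, then summarize the final fitness values) can be sketched in Python as follows. This is only an illustrative sketch: `random_search` is a placeholder optimizer and not hSMA-SA, the function names are hypothetical, and the use of the sample standard deviation is an assumption, since the text does not state which estimator was used.

```python
import random
import statistics


def random_search(objective, dim, lower, upper, agents, iterations, rng):
    """Placeholder optimizer (NOT hSMA-SA): best of uniformly sampled points."""
    best = float("inf")
    for _ in range(iterations):
        for _ in range(agents):
            x = [rng.uniform(lower, upper) for _ in range(dim)]
            best = min(best, objective(x))
    return best


def summarize(final_fitness):
    """Statistics of the kind reported per function: mean, std, best, worst, median."""
    return {
        "mean": statistics.mean(final_fitness),
        "std": statistics.stdev(final_fitness),  # sample std (assumption)
        "best": min(final_fitness),              # minimization problems
        "worst": max(final_fitness),
        "median": statistics.median(final_fitness),
    }


def run_trials(objective, dim, lower, upper,
               runs=30, agents=30, iterations=500, seed=0):
    """Run the optimizer `runs` times and summarize the final fitness values."""
    rng = random.Random(seed)
    finals = [random_search(objective, dim, lower, upper, agents, iterations, rng)
              for _ in range(runs)]
    return summarize(finals)
```

The default arguments mirror the settings in Table 5 (30 search agents, 500 iterations, 30 trial runs); any real optimizer with the same call shape could be substituted for the placeholder.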

Evaluation of unimodal functions (exploitation)

The search for the best position depends on the ability of the search agents to move closer to the food source. During the search, agents may become trapped either far from or near the optimum, depending on the balance between the exploration and exploitation phases. Exploration corresponds to global search, whereas exploitation refers to local search. The results for the unimodal functions are analyzed statistically in terms of the search record, the convergence behavior, and the average fitness of the population. The search record in the trial-runs graph shows the locations of the slime mould. The convergence graph shows how the position of the slime mould varies during the optimization procedure. The average fitness of the population describes how the population average varies over the whole optimization procedure. The improved convergence confirms the effectiveness of the proposed algorithm. The low p values shown in Table 9, obtained with the Wilcoxon rank sum test and the t test to examine the detailed behavior of the proposed algorithm, indicate that the algorithm converges better and is more effective. The h value reports the decision of the parametric test at the 5% significance level (95% confidence level). If h = 1, the null hypothesis is rejected at the alpha significance level. If h = 0, the test fails to reject the null hypothesis at the alpha significance level.
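To make the relationship between a p value and the h decision concrete, the following Python sketch implements a two-sided Wilcoxon rank sum test using the large-sample normal approximation. It is an illustrative assumption rather than the procedure used in the study: it applies neither a continuity correction nor a tie correction to the variance, the function names are hypothetical, and the resulting p values will therefore not reproduce those reported in the tables.

```python
from statistics import NormalDist


def average_ranks(values):
    """Return 1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the block of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2.0 + 1.0
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def rank_sum_test(a, b, alpha=0.05):
    """Two-sided rank sum test (normal approximation). Returns (p, h)."""
    n1, n2 = len(a), len(b)
    ranks = average_ranks(list(a) + list(b))
    w = sum(ranks[:n1])                      # rank sum of the first sample
    mu = n1 * (n1 + n2 + 1) / 2.0            # mean of w under the null
    sigma = (n1 * n2 * (n1 + n2 + 1) / 12.0) ** 0.5
    z = (w - mu) / sigma
    p = 2.0 * (1.0 - NormalDist().cdf(abs(z)))
    h = 1 if p < alpha else 0                # h = 1: reject the null at alpha
    return p, h
```

With `alpha=0.05`, two clearly separated samples of 30 runs each yield a very small p and h = 1, whereas two identical samples yield p = 1 and h = 0.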

Table 9.

Test results for multimodal functions using hSMA-SA technique

| Function | Mean | Standard deviation | Best fitness value | Worst fitness value | Median | Wilcoxon rank sum test p value | t test p value | t test h value |
|---|---|---|---|---|---|---|---|---|
| Schwefel sine function (F8) | −12,569.02623 | 0.43623993 | −12,569.48529 | −12,567.9831 | −12,569.15434 | 1.73E−06 | 4.22E−131 | 1 |
| Rastrigin function (F9) | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 1 |
| The Ackley function (F10) | 8.88E−16 | 0 | 8.88E−16 | 8.88E−16 | 8.88E−16 | 4.32E−08 | 0 | 1 |
| Penalized penalty#1 function (F12) | 0.012678845 | 0.012483005 | 9.69E−05 | 0.039727727 | 0.007266933 | 1.73E−06 | 5.31E−06 | 1 |
| Levi N. 13 function (F13) | 0.002689783 | 0.001733379 | 0.000388677 | 0.007871366 | 0.002737656 | 1.73E−06 | 2.30E−09 | 1 |

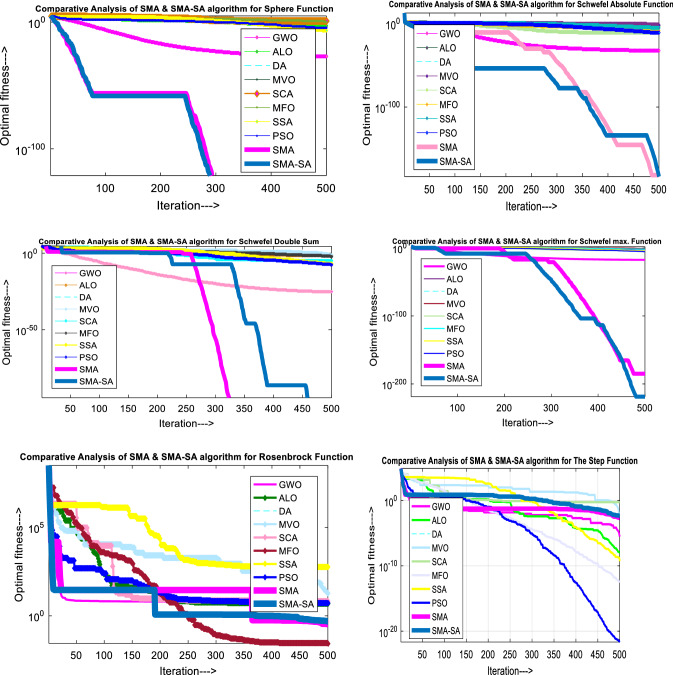

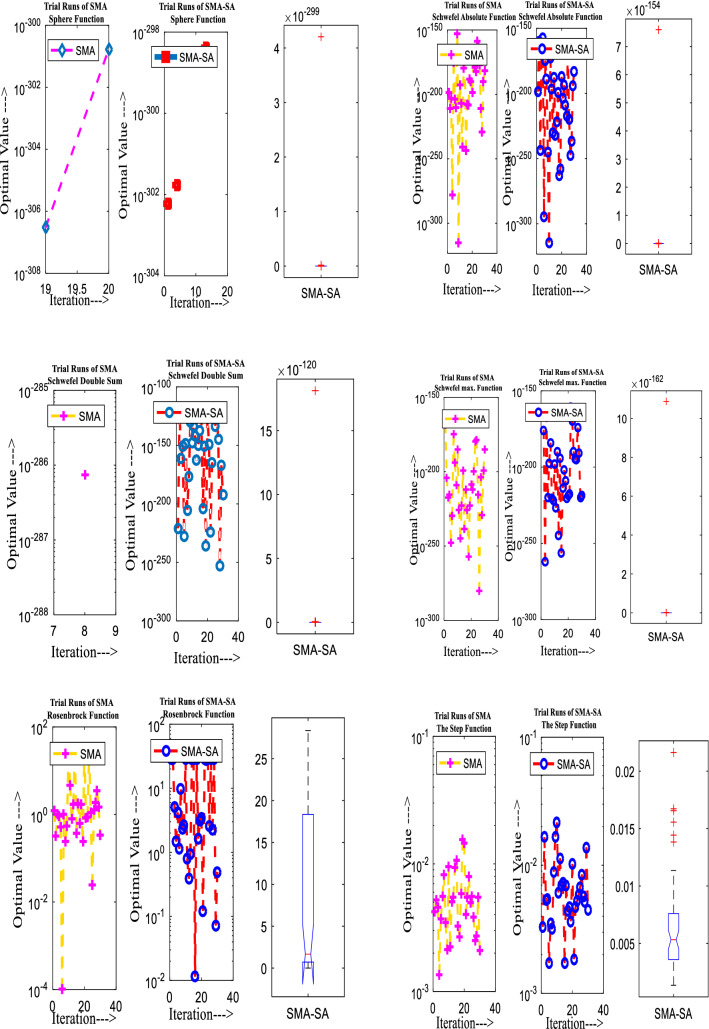

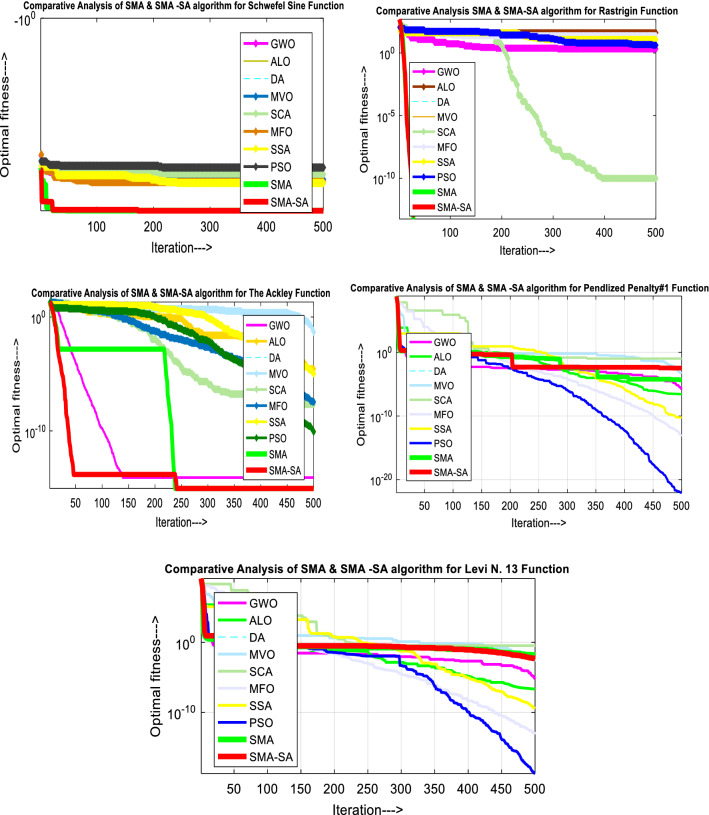

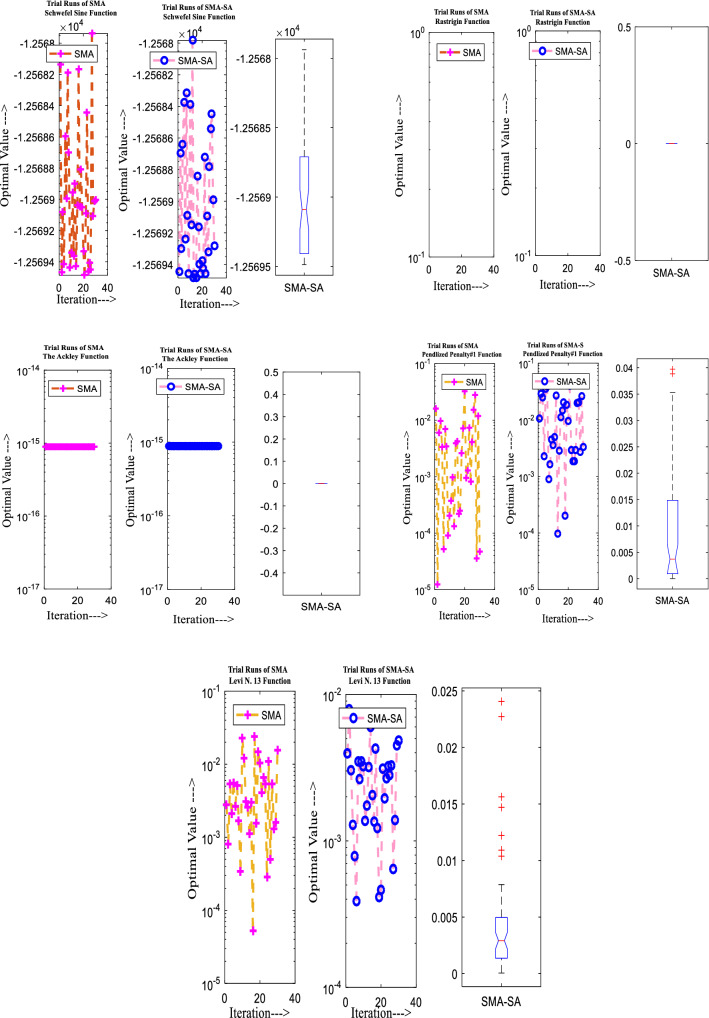

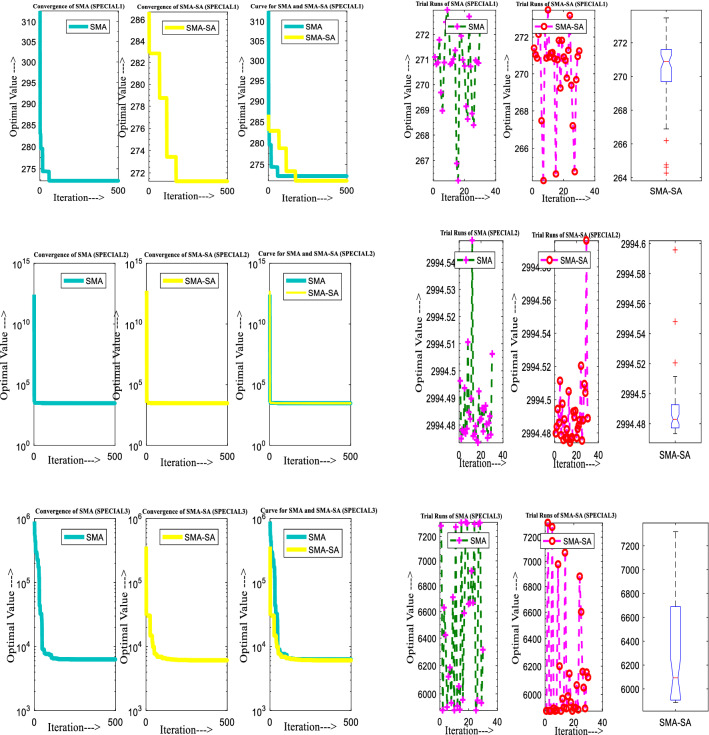

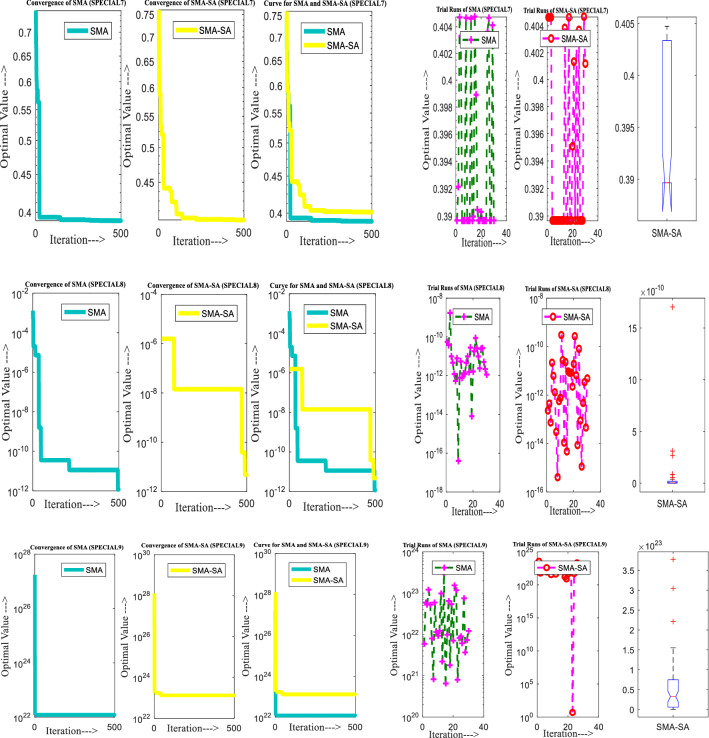

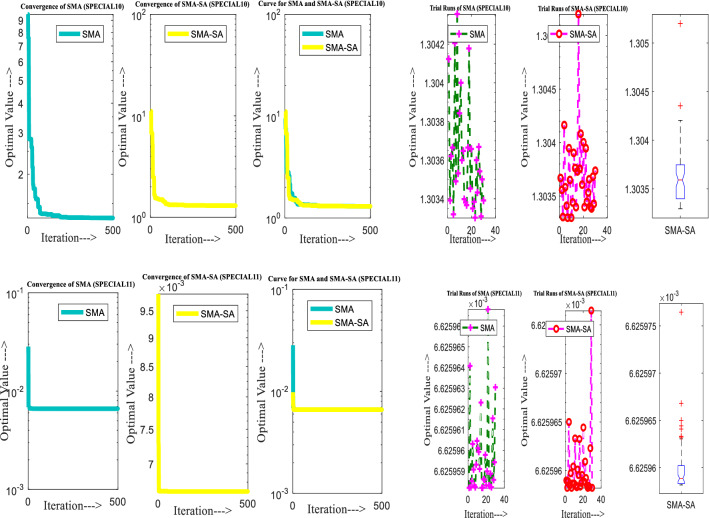

Figure 10 shows the characteristic curves of the unimodal benchmark functions, and Fig. 11 compares hSMA-SA with other algorithms. The convergence curves show that the proposed algorithm converges to the optimum very quickly. To confirm the suitability of the proposed technique, every test function is also evaluated with SMA and SA. Table 6 reports the statistical results for the unimodal functions in terms of mean, standard deviation, best fitness value, worst fitness value, median, and the p values of the Wilcoxon rank sum test and t test. Some regions of the search space contain global optima, while in others the search becomes trapped in local optima. Global search corresponds to the exploration phase, while local search corresponds to the exploitation phase. Any technique is judged by its ability to reach the maximum or minimum within a short computation time; Table 7 lists the best, average, and worst computation times.

Table 8 compares the hSMA-SA technique with existing methods, namely LSA [144], SCA [102], BRO [145], DA [146], OEGWO [147], MFO [34], PSA [74], HHO-PS [50], SSA [148], SHO [46], GWO [149], HHO [78], MVO [24], ECSA [150], PSO [151], TSO [109], ALO [152], and LF-SMA [8], in terms of standard deviation and average value. The benchmark functions differ in their characteristics and therefore test different search abilities in exploration and exploitation. In this context, six unimodal benchmark functions are examined. The results for each function are reported as the average and standard deviation over 30 trial runs of 500 iterations. To examine the influence of SA on the solutions of hSMA-SA, a scalability assessment is carried out. The statistical results in Table 8 reveal a significant gap between hSMA-SA and the other techniques.

Table 8 makes clear that injecting the SA technique strengthens both the exploration and exploitation phases of SMA. Compared with SCA, ALO, PSA, SSA, MVO, BRO, PSO, MFO, DA, and GWO, hSMA-SA performs notably well on the F3, F5, and F6 test functions in terms of standard deviation and average value. The convergence curves in Fig. 11 show that the optimality results improve with the enhanced efficacy, whereas the earlier approaches converge prematurely. Moreover, to demonstrate the success of the proposed method, the independent trial runs for every benchmark function are shown in Fig. 12. The comparison shows that the SA algorithm intensifies the local search phase.
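The local-search role attributed to SA above rests on the Metropolis acceptance rule: improvements are always accepted, while worse candidates are accepted with a probability that shrinks as the temperature falls. The following Python sketch shows a generic simulated annealing refinement of a single solution. It is not the authors' hybrid scheme, whose coupling to SMA is given in the pseudocode of Fig. 8; the parameter names and default values (`t0`, `cooling`, `steps`, `step_size`) are hypothetical.

```python
import math
import random


def simulated_annealing(objective, start, lower, upper,
                        t0=1.0, cooling=0.95, steps=200,
                        step_size=0.1, seed=0):
    """Generic SA refinement of one solution (minimization)."""
    rng = random.Random(seed)
    current = list(start)
    current_fit = objective(current)
    best, best_fit = list(current), current_fit
    temperature = t0
    for _ in range(steps):
        # Propose a neighbour: Gaussian perturbation, clipped to the bounds.
        candidate = [
            min(upper, max(lower, xi + rng.gauss(0.0, step_size) * (upper - lower)))
            for xi in current
        ]
        cand_fit = objective(candidate)
        delta = cand_fit - current_fit
        # Metropolis rule: accept improvements, sometimes accept worse moves.
        if delta <= 0 or rng.random() < math.exp(-delta / temperature):
            current, current_fit = candidate, cand_fit
            if current_fit < best_fit:
                best, best_fit = list(current), current_fit
        temperature *= cooling  # geometric cooling schedule
    return best, best_fit
```

In a hybrid setting, a routine of this kind would typically be applied to the best position found by the population-based search, and because the best solution is tracked separately, the returned fitness is never worse than that of the starting point.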

Fig. 10.

3D view of unimodal functions

Fig. 11.

Convergence curve of hSMA-SA with known algorithms for F1–F6 functions

Table 6.

Test results for unimodal functions using hSMA-SA technique

| Function | Mean | Standard deviation | Best fitness value | Worst fitness value | Median | Wilcoxon rank sum test p value | t test p value | t test h value |
|---|---|---|---|---|---|---|---|---|
| Sphere function (F1) | 1.4E−300 | 0 | 0 | 4.2059E−299 | 0 | 0.125 | 0 | 1 |
| Schwefel absolute function (F2) | 1.9E−159 | 1.0209E−158 | 0 | 5.5926E−158 | 4.6226E−200 | 1.7344E−06 | 0.32386916 | 0 |
| Schwefel double sum function (F3) | 6.1E−121 | 3.309E−120 | 0 | 1.8126E−119 | 2.5788E−151 | 2.56308E−06 | 0.324043719 | 0 |
| Schwefel max. function (F4) | 3.6E−163 | 2.2228E−162 | 1.0007E−262 | 1.088E−161 | 4.4998E−199 | 1.7344E−06 | 0.378435872 | 0 |
| Rosenbrock function (F5) | 11.77822 | 12.89147042 | 0.011463167 | 28.35510393 | 3.286513444 | 1.7344E−06 | 2.50694E−05 | 1 |
| The step function (F6) | 0.007059 | 0.004749705 | 0.001719961 | 0.021628358 | 0.005572663 | 1.73E−06 | 5.63E−09 | 1 |

Table 7.

Execution time for unimodal functions using hSMA-SA technique

| Function | Best time | Average time | Worst time |
|---|---|---|---|
| Sphere function (F1) | 190.3906 | 219.1385417 | 297.39063 |
| Schwefel absolute function (F2) | 122.7188 | 133.9958333 | 155.51563 |
| Schwefel double sum function (F3) | 137.2188 | 144.546875 | 162.70313 |
| Schwefel max. function (F4) | 111.875 | 176.4671875 | 307.20313 |
| Rosenbrock function (F5) | 90.25 | 112.4067708 | 150.95313 |
| The step function (F6) | 195.25 | 212.9197917 | 327.8125 |

Table 8.

Evaluation for unimodal problems

| Algorithm | Parameter | Sphere function (F1) | Schwefel absolute function (F2) | Schwefel double sum function (F3) | Schwefel max. function (F4) | Rosenbrock function (F5) | The step function (F6) |
|---|---|---|---|---|---|---|---|
| Lightning search algorithm (LSA) [144] | Avg | 4.81067E−08 | 3.340000000 | 0.024079674 | 0.036806544 | 43.24080402 | 1.493275733 |
| | S. deviation | 3.40126E−07 | 2.086007800 | 0.005726198 | 0.156233023 | 29.92194448 | 1.302827039 |
| Dragonfly algorithm (DA) [146] | Avg | 2.850E−19 | 1.490E−06 | 1.290E−07 | 9.88E−04 | 7.6 | 4.170E−17 |
| | S. deviation | 7.160E−19 | 3.760E−06 | 2.100E−07 | 2.78E−03 | 6.79 | 1.320E−16 |
| Battle Royale optimization algorithm (BRO) [145] | Avg | 3.0353E−09 | 0.000046 | 54.865255 | 0.518757 | 99.936848 | 2.8731E−08 |
| | S. deviation | 4.1348E−09 | 0.000024 | 16.117329 | 0.403657 | 82.862958 | 1.8423E−08 |
| Multi-verse optimizer (MVO) [24] | Avg | 2.08583 | 15.9247 | 453.200 | 3.12301 | 1272.13 | 2.29495 |
| | S. deviation | 0.64865 | 44.7459 | 177.0973 | 1.58291 | 1479.47 | 0.63081 |
| Opposition-based enhanced grey wolf optimization algorithm (OEGWO) [147] | Avg | 2.49E−34 | 4.90E−25 | 1.01E−01 | 1.90E−05 | 2.72E+01 | 1.40E+00 |
| | S. deviation | 7.90E−34 | 6.63E−25 | 3.21E−01 | 2.43E−05 | 7.85E+01 | 4.91E−01 |
| Particle swarm optimization (PSO) [151] | Avg | 1.3E−04 | 0.04214 | 7.01256E+01 | 1.08648 | 96.7183 | 0.00010 |
| | S. deviation | 2.0E−04 | 0.04542 | 2.1192E+01 | 3.1703E+01 | 6.01155E+01 | 8.28E−05 |
| Photon search algorithm (PSA) [74] | Avg | 15.3222 | 2.2314 | 3978.0837 | 1.1947 | 332.6410 | 19.8667 |
| | S. deviation | 27.3389 | 1.5088 | 3718.9156 | 1.0316 | 705.1589 | 33.4589 |
| Sine–cosine algorithm (SCA) [102] | Avg | 0.000 | 0.000 | 0.0371 | 0.0965 | 0.0005 | 0.0002 |
| | S. deviation | 0.000 | 0.0001 | 0.1372 | 0.5823 | 0.0017 | 0.0001 |
| Hybrid Harris hawks optimizer–pattern search algorithm (hHHO-PS) [50] | Avg | 9.2E−17 | 8.31E | 5.03E−20 | 6.20E−54 | 2.18E−09 | 3.95E−14 |
| | S. deviation | 5E−106 | 4.46E−53 | 1.12E−19 | 1.75E−53 | 6.38E−10 | 3.61E−14 |
| Ant lion optimizer (ALO) [152] | Avg | 2.59E−10 | 1.84E−06 | 6.07E−10 | 1.36E−08 | 0.3467724 | 2.56E−10 |
| | S. deviation | 1.65E−10 | 6.58E−07 | 6.34E−10 | 1.81E−09 | 0.10958 | 1.09E−10 |
| Spotted hyena optimizer (SHO) [46] | Avg | 0 | 0 | 0 | 7.78E−12 | 8.59E+00 | 2.46E−01 |
| | S. deviation | 0 | 0 | 0 | 8.96E−12 | 5.53E−01 | 1.78E−01 |
| Moth flame optimizer (MFO) [34] | Avg | 0.00011 | 0.00063 | 696.730 | 70.6864 | 139.1487 | 0.000113 |
| | S. deviation | 0.00015 | 0.00087 | 188.527 | 5.27505 | 120.2607 | 9.87E−05 |
| Harris hawks optimizer (HHO) [78] | Avg | 1.06E−90 | 6.92E−51 | 1.25E−80 | 4.46E−48 | 0.015002 | 0.000115 |
| | S. deviation | 5.82E−90 | 2.47E−50 | 6.63E−80 | 1.70E−47 | 0.023473 | 0.000154 |
| Grey wolf optimizer (GWO) [149] | Avg | 6.590E−29 | 7.180E−18 | 3.20E−07 | 5.610E−08 | 26.8125 | 0.81657 |
| | S. deviation | 6.3400E−07 | 0.02901 | 7.91495E+01 | 1.31508 | 69.9049 | 0.00012 |
| Enhanced crow search algorithm (ECSA) [150] | Avg | 7.4323E−119 | 5.22838E−59 | 3.194E−102 | 3.04708E−52 | 7.996457081 | 0.400119079 |
| | S. deviation | 4.2695E−118 | 2.86361E−58 | 1.7494E−101 | 1.66895E−51 | 0.661378213 | 0.193939866 |
| Salp swarm algorithm (SSA) [148] | Avg | 0.000 | 0.2272 | 0.000 | 0.000 | 0.000 | 0.000 |
| | S. deviation | 0.000 | 1.000 | 0.000 | 0.6556 | 0.000 | 0.000 |
| Transient search optimization (TSO) [109] | Avg | 1.18E−99 | 8.44E−59 | 3.45 × 1041 | 1.28E−53 | 8.10E−02 | 3.35E−03 |
| | S. deviation | 6.44E−99 | 3.93E−58 | 1.26E−41 | 6.58E−53 | 11 | 6.82E−03 |
| LF-SMA [8] | Avg | 1.58E−156 | 2.74E−171 | 5.2412 | 0.0006 | 5.90E−05 | 0.0008 |
| | S. deviation | 7.53E−156 | 0 | 10.229 | 0.0002 | 6.38E−05 | 0.0008 |
| Proposed algorithm hSMA-SA | Avg | 1.4E−300 | 1.9E−159 | 6.1E−121 | 3.6E−163 | 11.77822 | 0.007059 |
| | S. deviation | 0 | 1.0209E−158 | 3.309E−120 | 2.2228E−162 | 12.89147042 | 0.004749705 |

Fig. 12.

Trial runs of SMA and hSMA-SA for F1–F6 functions

Evaluation of a few multimodal functions (exploration)