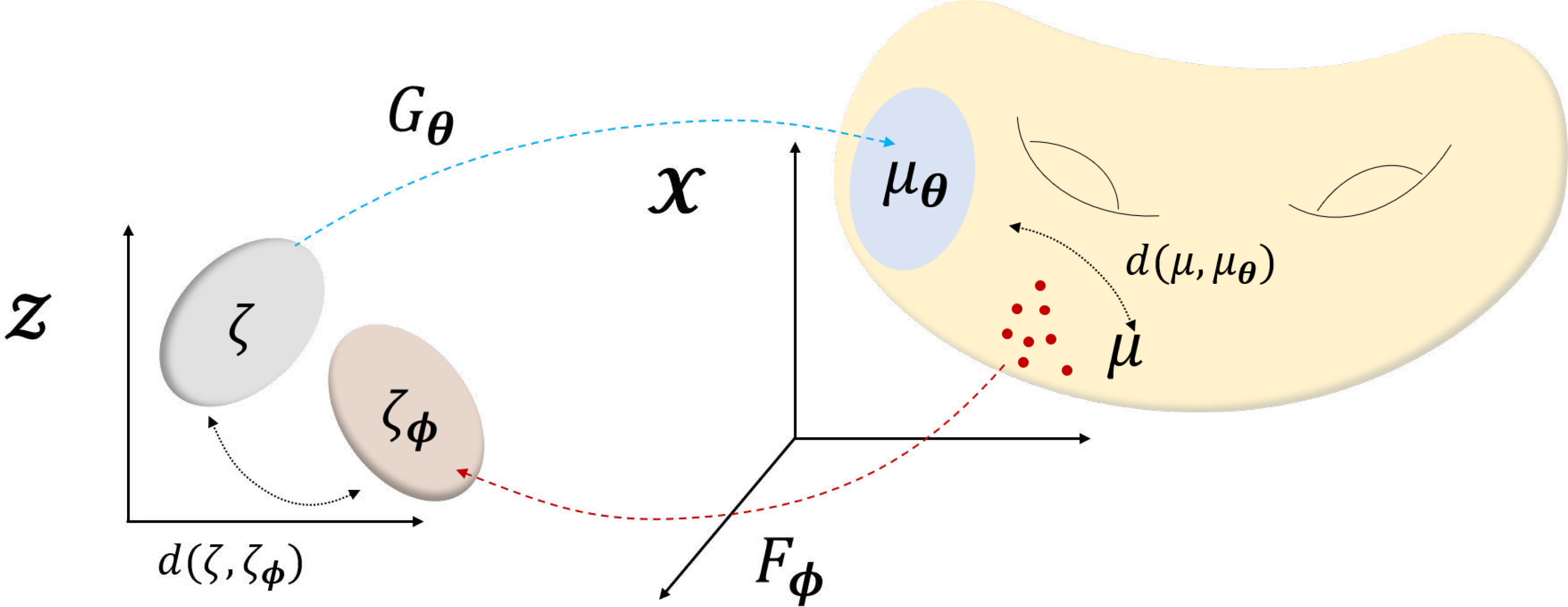

Fig. 5. Geometric view of deep generative models. A fixed distribution $\zeta$ on the latent space is pushed forward by the network $G_\theta$ to $\mu_\theta$ on the data space, so that the mapped distribution $\mu_\theta$ approaches the real data distribution $\mu$. In a VAE, $G_\theta$ acts as a decoder that generates samples, while $F_\phi$ acts as an encoder that additionally constrains $\zeta_\phi$ to be as close as possible to $\zeta$. From this geometric viewpoint, auto-encoding generative models (e.g., VAE) and GAN-based generative models can both be seen as variants of this single illustration.
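The pushforward picture can be made concrete with a toy 1-D sketch. It rests on several assumptions that the caption does not fix. The latent distribution $\zeta$ is taken to be $\mathcal{N}(0,1)$. The generator is a hypothetical affine map $G_\theta(z) = az + b$. The discrepancy between $\mu_\theta$ and $\mu$ is measured with the empirical 1-Wasserstein distance, one possible choice among many. The encoder constraint uses the closed-form KL divergence from a Gaussian $\zeta_\phi = \mathcal{N}(m, e^{s})$ to $\zeta$, which is the usual choice in Gaussian VAEs. All function names are illustrative.

```python
import math
import random


def sample_latent(n, rng):
    # Samples from the fixed latent distribution zeta, assumed here to be N(0, 1).
    return [rng.gauss(0.0, 1.0) for _ in range(n)]


def generator(z, theta):
    # Hypothetical 1-D generator G_theta(z) = a * z + b with theta = (a, b).
    a, b = theta
    return a * z + b


def pushforward(zs, theta):
    # Empirical mu_theta: push each latent sample through G_theta.
    return [generator(z, theta) for z in zs]


def wasserstein1_1d(xs, ys):
    # Empirical W1 between two equal-size 1-D samples:
    # the mean absolute difference between their sorted values.
    if len(xs) != len(ys):
        raise ValueError("samples must have equal size")
    return sum(abs(x - y) for x, y in zip(sorted(xs), sorted(ys))) / len(xs)


def kl_gaussian_to_standard(mean, log_var):
    # KL( N(mean, exp(log_var)) || N(0, 1) ): pulls an encoder output zeta_phi toward zeta.
    return 0.5 * (math.exp(log_var) + mean ** 2 - 1.0 - log_var)


if __name__ == "__main__":
    rng = random.Random(0)
    real = [rng.gauss(3.0, 2.0) for _ in range(5000)]  # toy "real" distribution mu
    zs = sample_latent(5000, rng)
    far = wasserstein1_1d(pushforward(zs, (1.0, 0.0)), real)   # untrained theta
    near = wasserstein1_1d(pushforward(zs, (2.0, 3.0)), real)  # theta matching mu
    print(f"W1 far: {far:.3f}, W1 near: {near:.3f}")
    print(kl_gaussian_to_standard(0.0, 0.0))  # zeta_phi equals zeta, so KL is 0
```

Training a GAN or VAE amounts to adjusting $\theta$ (and $\phi$) so that the distance between $\mu_\theta$ and $\mu$ shrinks. In this toy the matching parameters $\theta = (2, 3)$ are simply written down instead of learned.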