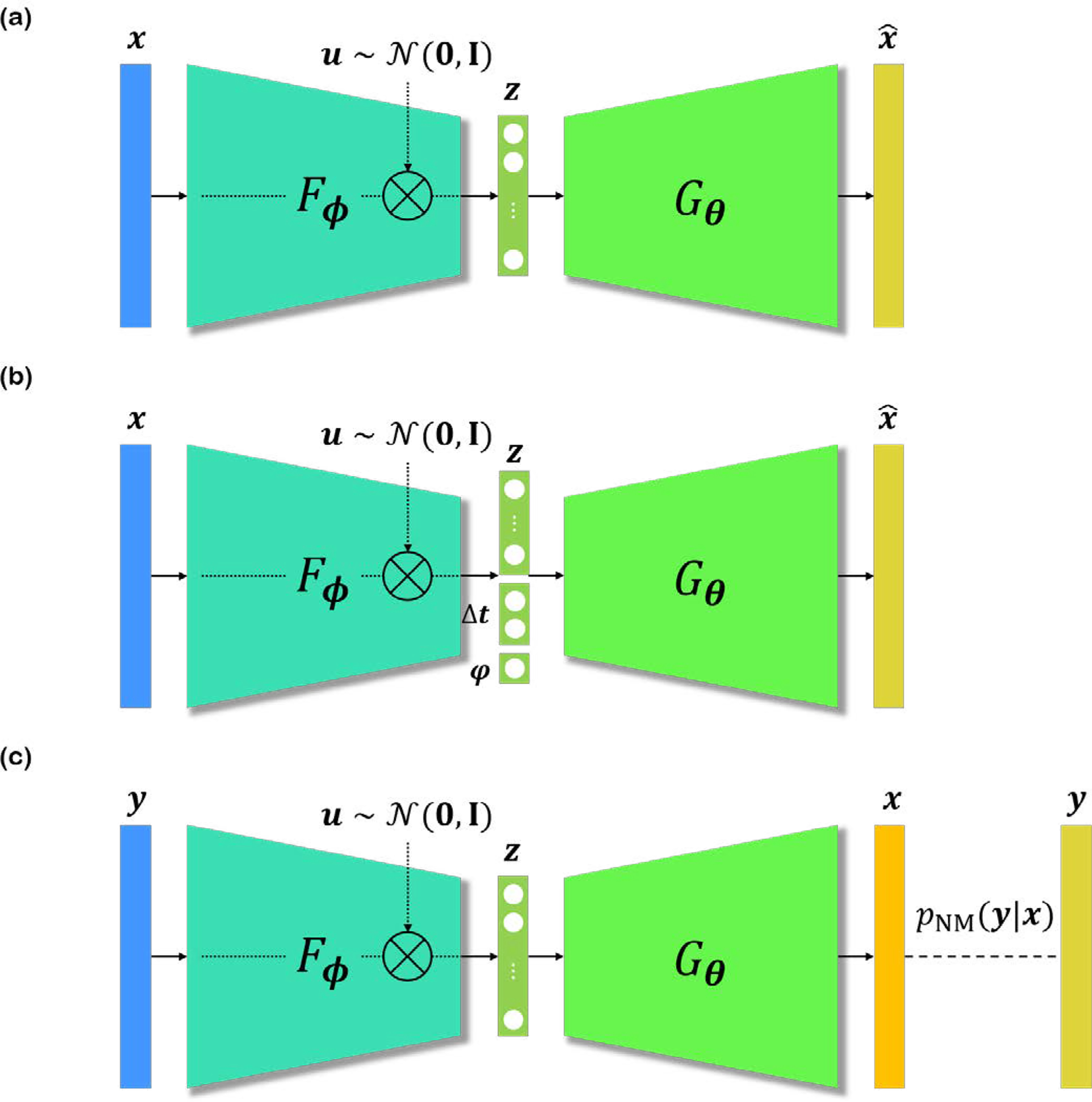

Fig. 6.

VAE architecture. The encoder Fϕ encodes x, and its output is combined with a random sample u to produce the latent vector z. The decoder Gθ decodes z to obtain the reconstruction x̂. u is sampled from the standard normal distribution, as required by the reparameterization trick. (a) VAE. (b) spatial-VAE [19], which disentangles translation/rotation features from the other semantic features. (c) DIVNOISING [20], which enables supervised or unsupervised training of a denoising generative model by leveraging the noise model pNM(y|x).
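The data flow of panel (a) can be sketched as follows. This is a minimal illustration, assuming toy linear maps for Fϕ and Gθ and a diagonal Gaussian latent. The names `encoder_F_phi`, `decoder_G_theta`, `reparameterize`, and the weight lists are hypothetical and do not come from [19] or [20]. The sketch shows how the sample u ~ N(0, I) enters through the reparameterization trick z = μ + σ ⊙ u, which keeps z a differentiable function of the encoder outputs.

```python
import math
import random

def encoder_F_phi(x, w_mu, w_logvar):
    """Hypothetical toy encoder F_phi: two linear maps give the mean and
    log-variance of a diagonal Gaussian over the latent z."""
    mu = [sum(w * xi for w, xi in zip(row, x)) for row in w_mu]
    logvar = [sum(w * xi for w, xi in zip(row, x)) for row in w_logvar]
    return mu, logvar

def reparameterize(mu, logvar, u):
    """Reparameterization trick: z = mu + sigma * u with u ~ N(0, I).
    The randomness lives only in u, so z is differentiable in (mu, logvar)."""
    return [m + math.exp(0.5 * lv) * ui for m, lv, ui in zip(mu, logvar, u)]

def decoder_G_theta(z, w_dec):
    """Hypothetical toy decoder G_theta: linear map from z to x_hat."""
    return [sum(w * zi for w, zi in zip(row, z)) for row in w_dec]

def vae_forward(x, w_mu, w_logvar, w_dec, rng):
    """One forward pass: x -> (mu, logvar) -> z (using u) -> x_hat."""
    mu, logvar = encoder_F_phi(x, w_mu, w_logvar)
    u = [rng.gauss(0.0, 1.0) for _ in mu]  # u sampled from N(0, I)
    z = reparameterize(mu, logvar, u)
    x_hat = decoder_G_theta(z, w_dec)
    return x_hat, z

# Usage with a 2-D input and a 1-D latent (toy weights, chosen arbitrarily).
rng = random.Random(0)
x_hat, z = vae_forward([1.0, 2.0], [[0.5, 0.0]], [[0.0, 0.0]], [[1.0], [2.0]], rng)
print(z, x_hat)
```

A real VAE would replace the linear maps with neural networks and train Fϕ and Gθ jointly; the spatial-VAE and DIVNOISING variants in panels (b) and (c) change what the decoder models, not how u is injected.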