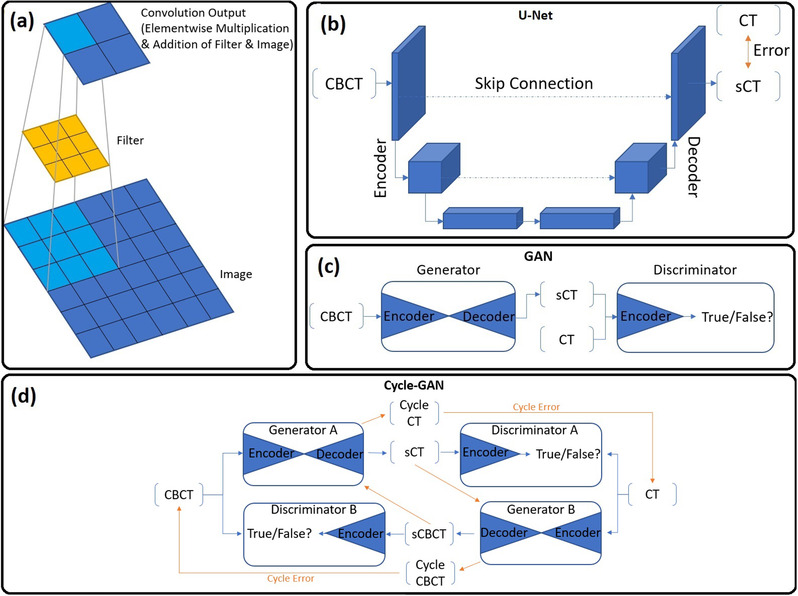

FIGURE 1.

(a) The convolution output (feature map) results from element‐wise multiplication of the filter with each image patch, followed by summation. Note how image information is encoded into a reduced spatial dimension. (b) Depiction of the U‐Net architecture. Note how the input spatial dimensions are progressively reduced, whereas the feature dimension increases with network depth. (c) The GAN architecture, comprising a generator and a discriminator. Generators are typically U‐Net‐type architectures with encoder/decoder arms, whereas discriminators are encoder classifiers. (d) The Cycle‐GAN network, comprising two generators and two discriminators, capable of unpaired image translation via computation of the cycle error. The orange arrows indicate the backward synthesis cycle path.
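
As an illustration of the operation in panel (a), the following is a minimal sketch in plain Python, assuming no padding and a stride of 1. The function name `conv2d_valid`, the 4×4 image, and the 2×2 filter are hypothetical values chosen for the example and are not taken from the figure. As is common in deep learning, the filter is not flipped, so the operation is strictly a cross‐correlation.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map."""
    img_h, img_w = len(image), len(image[0])
    k_h, k_w = len(kernel), len(kernel[0])
    # Without padding, each output dimension shrinks by (kernel size - 1).
    out_h, out_w = img_h - k_h + 1, img_w - k_w + 1

    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Element-wise multiplication of the filter with the image patch,
            # followed by summation into a single output value.
            total = sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(k_h)
                for dj in range(k_w)
            )
            row.append(total)
        feature_map.append(row)
    return feature_map


# Hypothetical 4x4 image and 2x2 filter, for illustration only.
image = [
    [1, 2, 3, 0],
    [0, 1, 2, 3],
    [3, 0, 1, 2],
    [2, 3, 0, 1],
]
kernel = [
    [1, 0],
    [0, 1],
]

feature_map = conv2d_valid(image, kernel)
for row in feature_map:
    print(row)
```

Under these assumptions the 4×4 input yields a 3×3 feature map, which shows the reduction in spatial dimension noted in the caption.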