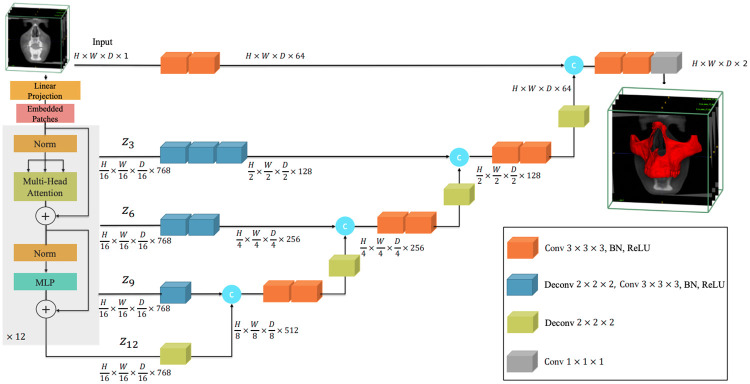

Fig 3. Overview of the UNETR used.

A 128x128x128x1 volume cropped from the input CBCT is divided into a sequence of non-overlapping 16x16x16 patches, which are projected into an embedding space by a linear layer. A 768-dimensional position embedding is added to the sequence before it is fed to the transformer. The decoder then extracts the encoded representations from different transformer layers and merges them via skip connections to produce the final 128x128x128x2 segmentation of the crop.
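
The shape bookkeeping behind the patch embedding step can be checked with a minimal NumPy sketch. It assumes that "16" refers to a cubic patch edge of 16 voxels and that "768" is the embedding dimension, as in the standard UNETR configuration; the caption does not state either explicitly. The helper `patchify`, the random weights, and the random position embedding are hypothetical stand-ins for illustration only, not the trained model.

```python
import numpy as np

def patchify(volume, patch):
    """Split a (D, H, W, C) volume into flattened, non-overlapping cubic patches."""
    d, h, w, c = volume.shape
    assert d % patch == 0 and h % patch == 0 and w % patch == 0
    gd, gh, gw = d // patch, h // patch, w // patch
    # Separate each spatial axis into (grid index, offset inside the patch).
    x = volume.reshape(gd, patch, gh, patch, gw, patch, c)
    # Bring the three grid axes to the front, the in-patch axes to the back.
    x = x.transpose(0, 2, 4, 1, 3, 5, 6)
    # One row per patch, one column per voxel value inside the patch.
    return x.reshape(gd * gh * gw, patch * patch * patch * c)

rng = np.random.default_rng(0)
volume = rng.standard_normal((128, 128, 128, 1)).astype(np.float32)  # stand-in CBCT crop

patch_size = 16   # assumed patch edge length
hidden_dim = 768  # assumed embedding dimension

tokens = patchify(volume, patch_size)  # (8*8*8, 16*16*16*1) = (512, 4096)

# Linear projection with random placeholder weights (not trained values).
weight = (rng.standard_normal((tokens.shape[1], hidden_dim)) * 0.02).astype(np.float32)
bias = np.zeros(hidden_dim, dtype=np.float32)
# Position embedding: one 768-dimensional vector per patch position.
pos_embed = (rng.standard_normal((tokens.shape[0], hidden_dim)) * 0.02).astype(np.float32)

embedded = tokens @ weight + bias + pos_embed  # sequence passed to the transformer
print(tokens.shape, embedded.shape)
```

Under these assumptions the transformer receives a sequence of 512 tokens, one per 16x16x16 patch, each represented by a 768-dimensional vector.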