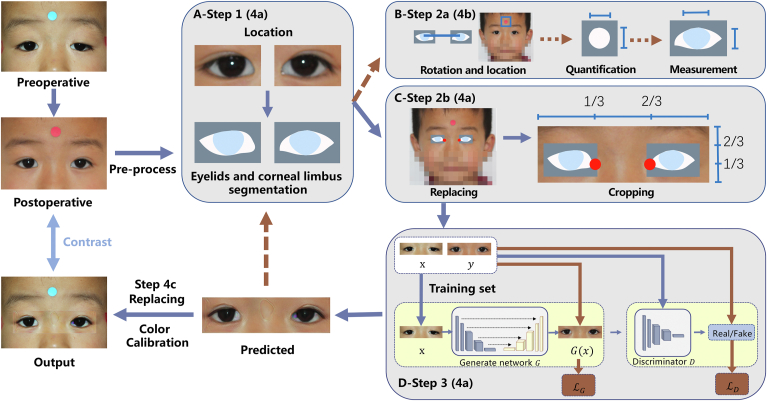

Figure 1.

Flowchart of the fully automatic postoperative appearance prediction system (POAP) for ptosis. A, Ocular detection module (steps 1, 4a): A pair of preoperative and postoperative training images was input into the ocular detection module developed in our previous work. B, Analyzing module (steps 2a, 4b): The mask output by the ocular detection module was used to determine the rotation angle that levels the eyes, and the round sticker was then detected and segmented at the pixel level to quantify the eyelids. C, Data processing module (steps 2b, 4a): The mask output by the ocular detection module was mapped back onto the original images, and the medial canthi were detected and used as anchor points. The original images were then cropped into strip images in which the anchor points lay horizontally at the trisection points and vertically on the lower trisection line of each image. D, Prediction module (steps 3, 4a): During training, the preprocessed strip images were passed through a generator and a discriminator. The test images, after passing through the ocular detection module and the analyzing module, were then fed into the trained prediction model, which output predicted pieces. These generated pieces were passed through the ocular detection module and the analyzing module again to measure the predicted eyes automatically; at the same time, they were pasted back onto the original preoperative images and color-calibrated to produce the final output (step 4c).
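The leveling step in panel B derives a rotation angle from the ocular masks. The caption does not say how the angle is computed, so the sketch below assumes one plausible approach: take the centroid of each eye mask and measure the tilt of the line joining them. The names `centroid`, `tilt_angle_deg`, and `level_point` are hypothetical, and masks are assumed to be binary lists of rows in image coordinates (y grows downward).

```python
import math
from typing import List, Tuple

Mask = List[List[int]]  # binary mask indexed as mask[row][col]; nonzero = eye pixel


def centroid(mask: Mask) -> Tuple[float, float]:
    """Return the (x, y) centroid of the nonzero pixels of a binary mask."""
    sx = sy = 0.0
    n = 0
    for y, row in enumerate(mask):
        for x, value in enumerate(row):
            if value:
                sx += x
                sy += y
                n += 1
    if n == 0:
        raise ValueError("mask contains no eye pixels")
    return sx / n, sy / n


def tilt_angle_deg(left_mask: Mask, right_mask: Mask) -> float:
    """Angle (degrees) of the line from the image-left eye centroid to the image-right one.

    Image coordinates are assumed (x to the right, y downward), so a positive
    angle means the image-right eye sits lower than the image-left eye.
    """
    (xl, yl), (xr, yr) = centroid(left_mask), centroid(right_mask)
    return math.degrees(math.atan2(yr - yl, xr - xl))


def level_point(p: Tuple[float, float], center: Tuple[float, float],
                angle_deg: float) -> Tuple[float, float]:
    """Rotate point p about center by -angle_deg, which removes the measured tilt."""
    t = math.radians(angle_deg)
    dx, dy = p[0] - center[0], p[1] - center[1]
    return (center[0] + dx * math.cos(t) + dy * math.sin(t),
            center[1] - dx * math.sin(t) + dy * math.cos(t))
```

When the same angle is handed to an image-rotation routine, that routine's sign convention has to be checked, because libraries differ on whether a positive angle means clockwise or counter-clockwise rotation.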
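Panel B also uses a segmented round sticker to quantify the eyelids. A common way to turn such a reference object into a scale is to compare its pixel size with a known physical size. The sketch below assumes the sticker's true diameter is known (the value is a hypothetical parameter, not taken from the caption) and estimates the pixel diameter from the segmented area as the diameter of a circle with the same area. The function names are hypothetical.

```python
import math


def sticker_mm_per_pixel(sticker_area_px: float, sticker_diameter_mm: float) -> float:
    """Millimetres per pixel from the pixel area of a segmented round sticker.

    Assumes the sticker is a circle of known physical diameter; the pixel
    diameter is taken as that of a circle with the same segmented area.
    """
    if sticker_area_px <= 0:
        raise ValueError("sticker area must be positive")
    diameter_px = 2.0 * math.sqrt(sticker_area_px / math.pi)
    return sticker_diameter_mm / diameter_px


def pixels_to_mm(distance_px: float, mm_per_px: float) -> float:
    """Convert an eyelid distance measured in pixels into millimetres."""
    return distance_px * mm_per_px
```

Using the area rather than a single measured width makes the scale less sensitive to ragged mask edges, although it still assumes the sticker faces the camera.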
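Panel C crops strip images around the medial canthi. One reading of "horizontally at the trisection and vertically at the lower trisection" is that the two medial canthi fall on the one-third and two-thirds vertical lines and on the lower one-third horizontal line of the strip. The sketch below computes such a crop box under that interpretation, assuming the eyes have already been leveled. The function name and the `aspect` (width/height) parameter are hypothetical, and image-boundary clipping is not handled.

```python
from typing import Tuple

Point = Tuple[float, float]


def strip_box(left_canthus: Point, right_canthus: Point,
              aspect: float = 3.0) -> Tuple[float, float, float, float]:
    """Crop box (x0, y0, x1, y1) placing the two medial canthi at the horizontal
    trisection points and on the lower trisection line of the strip.

    `aspect` is a hypothetical width/height ratio for the strip.
    """
    (xl, yl), (xr, yr) = left_canthus, right_canthus
    d = xr - xl  # inter-canthal distance in pixels
    if d <= 0:
        raise ValueError("left canthus must lie to the left of the right canthus")
    width = 3.0 * d                      # canthi at x0 + W/3 and x0 + 2W/3
    height = width / aspect
    y_anchor = (yl + yr) / 2.0           # eyes assumed already leveled
    x0 = xl - d
    y0 = y_anchor - 2.0 * height / 3.0   # anchors sit on the lower trisection line
    return x0, y0, x0 + width, y0 + height
```

For canthi at (100, 50) and (160, 50) with the default aspect ratio, the box spans x from 40 to 220 and y from 10 to 70, so the canthi land at x = 100 and x = 160 and at y = 50, two-thirds of the way down.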
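Step 4c color-calibrates the generated pieces after pasting them back onto the preoperative image. The caption does not name the calibration method, so the sketch below swaps in a simple stand-in: per-channel mean and standard-deviation matching of the pasted patch to a reference region, for example surrounding pixels of the original image. The function name and the choice of reference pixels are assumptions.

```python
from statistics import fmean, pstdev
from typing import List, Sequence, Tuple

Pixel = Tuple[float, float, float]  # (R, G, B) in the 8-bit range


def match_color(patch: Sequence[Pixel], reference: Sequence[Pixel]) -> List[Pixel]:
    """Shift and scale each channel of `patch` so its mean and population
    standard deviation match those of `reference`, clipping to [0, 255]."""
    stats = []
    for c in range(3):
        src = [p[c] for p in patch]
        ref = [p[c] for p in reference]
        src_std = pstdev(src)
        # A flat channel has no spread to rescale, so only its mean is shifted.
        scale = pstdev(ref) / src_std if src_std > 0 else 1.0
        stats.append((fmean(src), fmean(ref), scale))
    return [
        tuple(min(255.0, max(0.0, (p[c] - s_mean) * k + r_mean))
              for c, (s_mean, r_mean, k) in enumerate(stats))
        for p in patch
    ]
```

Matching only the first two moments per channel is crude; it corrects overall brightness and contrast shifts but not local lighting differences across the pasted boundary.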