Abstract

Background

For the development of digital solutions, different aspects of user interface design must be taken into consideration. Different technologies, interaction paradigms, user characteristics and needs, and interface design components are some of the aspects that designers and developers should pay attention to when designing a solution. Many user interface design recommendations for different digital solutions and user profiles are found in the literature, but these recommendations have numerous similarities, contradictions, and different levels of detail. A detailed critical analysis is needed that compares, evaluates, and validates existing recommendations and allows the definition of a practical set of recommendations.

Objective

This study aimed to analyze and synthesize existing user interface design recommendations and propose a practical set of recommendations that guide the development of different technologies.

Methods

Based on previous studies, a set of recommendations on user interface design was generated following 4 steps: (1) interview with user interface design experts; (2) analysis of the experts’ feedback and drafting of a set of recommendations; (3) reanalysis of the shorter list of recommendations by a group of experts; and (4) refining and finalizing the list.

Results

The findings allowed us to define a set of 174 recommendations divided into 12 categories, according to usability principles, and organized into 2 levels of hierarchy: generic (69 recommendations) and specific (105 recommendations).

Conclusions

This study shows that user interface design recommendations can be divided according to usability principles and organized into levels of detail. Moreover, this study reveals that some recommendations, as they address different technologies and interaction paradigms, need further work.

Keywords: user interface design, usability principles, interaction paradigm, generic recommendations, specific recommendations

Introduction

In the context of digital solutions, user interface design consists of correctly defining the interface elements so that the tasks and interactions that users will perform are easy to understand [1]. Therefore, a good user interface design must allow users to easily interact with the digital solution to perform the necessary tasks in a natural way [2]. Considering that a digital solution is used by an individual with specific characteristics in a particular context [3-7], when developing a digital solution, designers must pay attention to a large number of components of user interface design, such as color [8], typography [1], navigation and search [9], input controls, and informational components [10].

Digital solutions and their interfaces must be accessible to all audiences and aimed at universal use in an era of increasingly heterogeneous users [3,4,11-17]. Therefore, designers should also be aware of broad and complex issues such as context-oriented design, user requirements, and adaptable and adaptive interactive behaviors [5]. The universal approach to user interface design follows heuristics and principles systematized by different authors over the years [18-20], but these are generic guidelines, and examples of how they can be operationalized in practice are scarce.

The literature presents many user interface design recommendations for varied digital solutions and users [21-25]. However, the absence of a detailed critical analysis that compares, evaluates, and validates existing recommendations is likely to facilitate an increasing number of similar recommendations [12,26-29]. Although existing recommendations refer to specific technologies, forms of interaction, or end users, the content of some recommendations is generic and applicable to varied technologies and users, such as “always create good contrast between text and page background” [30]; “color contrast of background and front content should be visible” [23]; “leave space between links and buttons” [30]; and “allow a reasonable spacing between buttons” [31]. These illustrative examples highlight the need to aggregate, analyze, and validate existing recommendations on user interface design. Accordingly, this study aimed to synthesize existing guidelines into a practical set of recommendations that could be used to guide user interface design for different technologies. This is important because it contributes to the standardization of good practices and will conceivably allow for better interface design achieved at earlier stages of product development.

Methods

Background

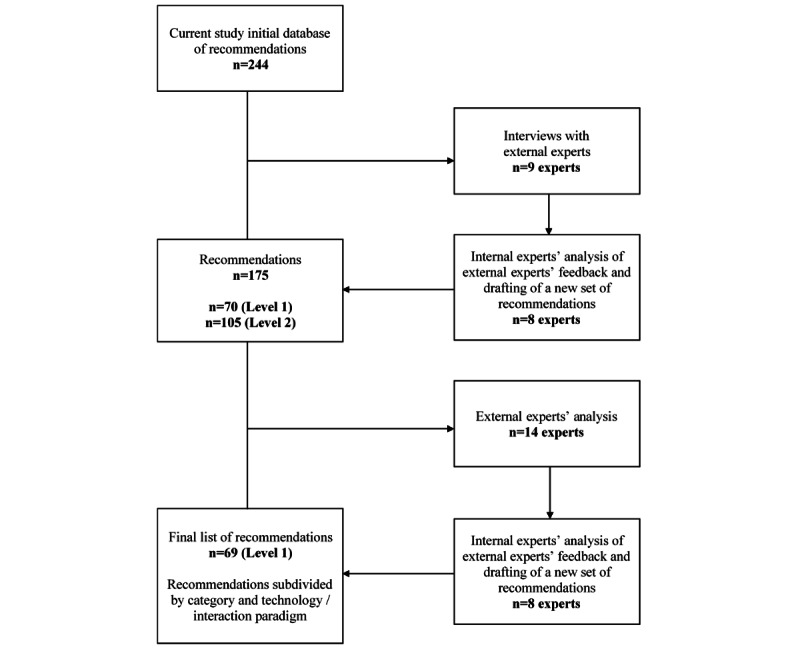

In a previous work, 244 interface recommendations were identified, and they formed the basis for this study [32]. The identification of the 244 recommendations combined multiple sources: (1) our previous work [33], (2) a purposive search of the Scopus database, and (3) inputs provided by experts in the field of interface design. The references identified through all 3 sources were extracted into an Excel (Microsoft) database with a total of 1210 recommendations. We screened this database and looked for duplicated recommendations. During this analysis, very generic recommendations were also deleted, and recommendations addressing similar content were merged. The resulting database, with 194 recommendations, was analyzed by 10 experts in user interface design recruited among SHAPES (Smart and Health Ageing through People Engaging in Supportive Systems) [34] project partners, who added another 62 recommendations, resulting in 256 recommendations. A further analysis identified 12 duplicated recommendations, which were deleted, resulting in a final list of 244 recommendations. This large number of recommendations was deemed impractical, and further action was necessary. Building on this prior research, a set of recommendations on user interface design was generated following 4 steps: (1) interview with user interface design experts, (2) analysis of the experts’ feedback and drafting of a set of recommendations, (3) reanalysis of the shorter list of recommendations by a group of experts, and (4) refining and finalizing the list. Each of these steps is detailed below, and the whole process is illustrated in Figure 1.

Figure 1.

Steps of analysis of the user interface design recommendations.
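The counts reported in the Background paragraph can be checked with a few lines of arithmetic. The following is a minimal sketch in Python; the variable names are illustrative and do not come from the original study, and only figures stated in the text are used.

```python
# Figures stated in the Background paragraph; names are illustrative only.
extracted = 1210          # recommendations extracted from all sources
after_screening = 194     # left after removing duplicates/generic items and merging
added_by_experts = 62     # added by the 10 SHAPES experts
duplicates_removed = 12   # duplicates found in the further analysis

with_expert_additions = after_screening + added_by_experts
final_count = with_expert_additions - duplicates_removed
dropped_in_screening = extracted - after_screening

print(with_expert_additions)  # 256
print(final_count)            # 244
print(dropped_in_screening)   # 1016
```

The last value is derived here rather than reported in the text: it is the number of entries that screening removed or merged away before the expert review.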

Interview With User Interface Design Experts

An Excel file with the 244 user interface design recommendations was sent to external experts in the field of user interface design. For an individual to be considered an expert, they had to meet at least 1 of the following criteria: (1) have designed user interfaces for at least 2 projects/digital solutions or (2) have participated in the evaluation of user interfaces for at least 2 projects/digital products.

An invitation email was sent to experts explaining the objectives of the study, along with a supporting document with the 244 recommendations. They were asked to analyze the recommendations and report on (1) repetition/relevance, (2) wording, (3) organization, and (4) any other aspect they felt relevant. They were given approximately 4 weeks to analyze the 244 recommendations and send their written analysis and comments back to us. Subsequently, they were asked to attend a Zoom (Zoom Video Communications) meeting aimed at clarifying their views and discussing potential contradictory comments. The written comments (sent in advance by the experts) were used to prepare a PowerPoint (Microsoft) presentation where recommendations and respective comments (anonymized) from all experts were synthesized. This presentation was used to guide the Zoom meeting discussion. To maximize the efficiency of the discussion, recommendations without any comments and those that received similar comments from different experts were not included in the presentation. For recommendations with contradictory comments, the facilitator led a discussion and tried to reach a consensus. For recommendations with comments from a single expert, the facilitator asked for the opinion of other experts. Each Zoom meeting was facilitated by one of the authors (AIM), assisted by another (CD), who took notes. The facilitator encouraged the discussion and exchange of opinions from all experts participating in each meeting. The Zoom meetings were recorded, and the experts’ arguments were transcribed and analyzed using content analysis by 2 authors (AIM and AGS) with experience in qualitative data analysis. Written comments sent by the experts as well as comments and relevant notes made during the meeting were transposed into a single file and subjected to content analysis. After transcription, the notes were independently read by both the aforementioned authors and grouped into themes, categories, and subcategories with similar meaning [35]. Themes, categories, and subcategories were then compared, and a consensus was reached by discussion.

Analyzing Experts’ Feedback and Drafting a Set of Recommendations

The authors of this manuscript (internal experts), including individuals with expertise in content analysis and in user interface design and usability, participated in a series of 6 meetings of approximately 2 to 3 hours each. These meetings, which took place between January and April 2021 and were held online, aimed to analyze the comments made by external experts in the previous step. Based on the experts’ comments, each recommendation was either (1) not changed (if no comments were made by the experts), (2) deleted, (3) merged with other complementary recommendations, (4) rewritten, or (5) broken up into more than 1 recommendation. The decisions were based on the number of experts making the same suggestion, alignment with existing guidelines, and coherence with previous decisions for similar recommendations. In addition, based on external experts’ suggestions, the recommendations were organized as follows: (1) hierarchical levels according to level of detail and interdependency, (2) usability principles, and (3) type of technology and interaction paradigm.

Reanalyzing the Shorter List of Recommendations

To further validate decisions made by the internal panel and explore the possibility of reducing the number of recommendations, the set of recommendations resulting from the previous step (and its respective organization according to hierarchical levels and principles) was reanalyzed by an additional external panel of experts. Once again, to be considered an expert, individuals had to meet the previously identified criteria for experts (have designed user interfaces for at least 2 projects/digital products or have participated in the evaluation of user interfaces for at least 2 projects/digital products). An online individual interview was conducted in May 2021 with each expert by one of the authors (CD). Experts had to answer 3 questions about each of the recommendations: (1) Do you consider this recommendation useful? (Yes/No); (2) Do you consider this recommendation mandatory? (Yes/No); and (3) Do you have any observation/comment on any recommendations or on its organization? The first question was used to determine the inclusion or exclusion of recommendations, and the second one was used to inform on the priority of recommendations through the possibility of having 2 sets of recommendations: 1 mandatory and 1 optional. The third question aimed to elicit general comments on both individual recommendations and their organization. Consensus on the first 2 questions was defined as 70% or more of the experts signaling a recommendation with “Yes” and less than 15% of experts signaling the same recommendation with “No.” Qualitative data from the third question was independently analyzed by 2 authors (CD and AGS) using content analysis, as previously described.
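The consensus rule described above (70% or more “Yes” and less than 15% “No”) can be written as a short check. This is a minimal sketch, not the authors’ procedure: the function name `reaches_consensus` is hypothetical, and the possibility that an expert leaves a question unanswered is an assumption made here so that the two thresholds remain independent. Integer arithmetic is used to avoid floating-point edge cases at the thresholds.

```python
def reaches_consensus(yes_votes: int, no_votes: int, n_experts: int) -> bool:
    """Return True if >=70% of experts said "Yes" and <15% said "No".

    Assumption: yes_votes + no_votes may be smaller than n_experts
    (unanswered items); the source does not state how these were handled.
    """
    if n_experts <= 0:
        raise ValueError("n_experts must be positive")
    if yes_votes < 0 or no_votes < 0 or yes_votes + no_votes > n_experts:
        raise ValueError("vote counts are inconsistent with n_experts")
    enough_yes = yes_votes * 100 >= 70 * n_experts
    few_no = no_votes * 100 < 15 * n_experts
    return enough_yes and few_no


# Illustrative vote splits for a hypothetical panel of 14 experts
print(reaches_consensus(12, 2, 14))  # True: 86% "Yes", 14% "No"
print(reaches_consensus(11, 3, 14))  # False: 21% "No" exceeds the limit
print(reaches_consensus(9, 5, 14))   # False: only 64% "Yes"
```

Note that when every expert answers either “Yes” or “No,” the second threshold is the binding one: fewer than 15% “No” already implies more than 85% “Yes.”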

Refining and Finalizing the List of Recommendations

The internal panel of experts (the authors of this study) had an online meeting in which findings of the previous step were presented and discussed, and amendments to the existing list of recommendations were decided to generate the final list of user interface design recommendations.

Ethical Considerations

This study focused on the analysis of previously published research and recommendations; therefore, ethical approval was considered unnecessary.

Results

Interview With User Interface Design Experts

A total of 9 experts participated in this step of the study: 5 females and 4 males with a mean age of 39.1 (SD 4.3) years. The participants were user interface designers (n=3, 33%) and user interface researchers (n=6, 67%) who had a background in design (n=6, 67%), communication and technology sciences (n=2, 22%), or computer engineering (n=1, 11%). A total of 3 meetings with 1 to 3 participants were conducted with a mean duration of 2 hours. Of the 244 recommendations, 166 (68%) were commented on by at least 1 expert.

The analysis of the interviews and of the written information sent by the experts allowed the comments to be aggregated into 2 main themes: (1) wording and content of recommendations and (2) organization of recommendations. The first theme was divided into 5 categories: (1) not changed (if no comments were made by the experts); (2) deletion of recommendations (because they were not useful or were contradictory); (3) merging of recommendations (to address complementary aspects of user interface design); (4) rewriting of recommendations (for clarity and coherence); and (5) splitting recommendations into more than 1 (because they included different aspects of user interface design). Of the 244 recommendations, external experts suggested that 108 should be merged (usually pairs of recommendations, but sometimes more than 2), 29 should be rewritten, 4 should be split into more than 1, and 44 should be deleted. Among the recommendations, 78 received no comment. For 19 recommendations, it was not possible to reach consensus in the interview phase.

The second theme (organization of the recommendations) was divided into 2 categories: (1) hierarchization of recommendations and (2) grouping of recommendations. This last category was subdivided into 2 subcategories: (1) grouping of recommendations according to usability principles and (2) grouping of recommendations according to whether they apply to digital solutions in general or to specific digital solutions/interaction paradigms. Examples of quotations that support these categories and subcategories are presented in Table 1. Regarding the grouping of recommendations according to usability principles, the categories proposed by 5 experts (Table 1) were reorganized and merged into 12 categories: feedback, recognition, flexibility, customization, consistency, errors, help, accessibility, navigation, privacy, visual component, and emotional component. The mapping between the categories proposed by the experts and the 12 categories (named principles) is presented in Multimedia Appendix 1.

Table 1.

Categories and subcategories of the theme “organization of recommendations,” quotations supporting the categories, and number of experts that made comments in each category/subcategory.

| Categories | Subcategories | Citations (examples) | Experts, n (%) |
|---|---|---|---|
| Hierarchization | N/A^a | | 4 (44) |
| Grouping of recommendations | Design principles | Of the 9 experts, 5 suggested categories for grouping recommendations: Feedback; text information; user profile; layout; navigation; content; tasks; errors; consistency; input; ergonomic; emotional; security; gamification. [E2^b, female] | 7 (78) |
| | Generic vs specific to technology/interaction paradigms | Of the 9 experts, 3 suggested categories for grouping recommendations: | 3 (33) |

^a N/A: not applicable.

^b E: expert.

Analysis of Experts’ Feedback and Reanalysis of the Recommendations

Based on the external experts’ comments, the recommendations were reanalyzed. Of the 244 recommendations, 61 (25%) were deleted because they were duplicated or redundant, 48 (19.7%) were merged with other complementary recommendations, 62 (25.4%) were rewritten for clarification and language standardization, 14 (5.7%) were split into 2 or more recommendations, and 59 (24.2%) were not changed. This resulted in a preliminary list of 175 recommendations. Table 2 compares the external experts’ recommendations and the internal experts’ final decision.

Table 2.

Comparison of external experts’ recommendations and internal experts’ decision.

| Type of action | External experts’ recommendations (N=263)^a, n (%) | Internal experts’ decision (N=244), n (%) |
|---|---|---|
| Deleted | 44 (16.7) | 61 (25) |
| Merged | 108 (41.1) | 48 (19.7) |
| Rewritten | 29 (11) | 62 (25.4) |
| Split | 4 (1.5) | 14 (5.7) |
| Not changed | 78 (29.7) | 59 (24.2) |

^a Consensus was not possible for 19 recommendations.
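The percentages in Table 2 follow directly from the counts and the two column totals. The sketch below recomputes them; the dictionary and function names are illustrative, and the counts are copied from the table.

```python
# Counts copied from Table 2; names are illustrative only.
external = {"Deleted": 44, "Merged": 108, "Rewritten": 29, "Split": 4, "Not changed": 78}
internal = {"Deleted": 61, "Merged": 48, "Rewritten": 62, "Split": 14, "Not changed": 59}


def shares(counts):
    """Return the column total and each action's share in percent (1 decimal)."""
    total = sum(counts.values())
    return total, {action: round(100 * n / total, 1) for action, n in counts.items()}


external_total, external_pct = shares(external)
internal_total, internal_pct = shares(internal)

print(external_total, external_pct["Merged"])   # 263 41.1
print(internal_total, internal_pct["Merged"])   # 244 19.7
print(external_total - internal_total)          # 19
```

The difference between the two totals (19) equals the number of recommendations for which consensus was not possible. The text does not say how those 19 were tallied, so this match is an observation about the numbers rather than a documented explanation.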

The 175 recommendations were then categorized into 12 mutually exclusive principles (feedback, recognition, flexibility, customization, consistency, errors, help, accessibility, navigation, privacy, visual, and emotional) and within each principle, organized into 2 levels of hierarchy according to the specificity/level of detail.

Of the 175 recommendations, 70 were categorized as level 1 and were generic recommendations applicable to all digital solutions, and 105 were categorized as level 2, each linked to a level 1 recommendation and subdivided by type of digital solution/interaction paradigm. The recommendations of both levels are linked, as level 2 recommendations detail how level 1 recommendations can be implemented. For example, the level 1 recommendation that “the system should be used efficiently and with a minimum of fatigue” is linked to a set of level 2 recommendations targeted at specific interaction paradigms, such as feet interaction and robotics: (1) “In feet interaction, the system should minimize repetitive actions and sustained effort, using reasonable operating forces and allowing the user to maintain a neutral body position,” and (2) “In robotics, the system should have an appropriate weight, allowing the person to move the robot easily (this can be achieved by using back drivable hardware).” Table 3 shows the distribution of the 175 recommendations.
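One way to picture the link between the two levels is as a parent-child data structure. The sketch below is an illustration only: the class and field names are hypothetical, the study does not describe any software representation, and the recommendation texts are the examples quoted in the paragraph above.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Level2Recommendation:
    paradigm: str  # technology or interaction paradigm, e.g. "robotics"
    text: str


@dataclass
class Level1Recommendation:
    text: str
    details: List[Level2Recommendation] = field(default_factory=list)

    def for_paradigm(self, paradigm: str) -> List[Level2Recommendation]:
        """Return the level 2 recommendations that target one paradigm."""
        return [d for d in self.details if d.paradigm == paradigm]


efficiency = Level1Recommendation(
    text="The system should be used efficiently and with a minimum of fatigue",
    details=[
        Level2Recommendation(
            "feet interaction",
            "In feet interaction, the system should minimize repetitive actions "
            "and sustained effort, using reasonable operating forces and allowing "
            "the user to maintain a neutral body position",
        ),
        Level2Recommendation(
            "robotics",
            "In robotics, the system should have an appropriate weight, allowing "
            "the person to move the robot easily (this can be achieved by using "
            "back drivable hardware)",
        ),
    ],
)

for rec in efficiency.for_paradigm("robotics"):
    print(rec.text)
```

Keeping the level 2 items inside their level 1 parent mirrors the statement that level 2 recommendations detail how a level 1 recommendation can be implemented for a given paradigm.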

Table 3.

Distribution of recommendations by level and category.

| Category | Level 1 (N=70), n | Level 2 (N=105), n | Technology/interaction paradigm | Total (N=175), n |
|---|---|---|---|---|
| Feedback | 6 | 5 | | 11 |
| Recognition | 5 | 12 | | 17 |
| Flexibility | 6 | 10 | | 16 |
| Customization | 7 | 6 | | 13 |
| Consistency | 2 | 2 | | 4 |
| Errors | 5 | 7 | | 12 |
| Help | 3 | 2 | | 5 |
| Accessibility | 8 | 23 | | 31 |
| Navigation | 6 | 6 | | 12 |
| Privacy | 3 | 5 | | 8 |
| Visual component | 16 | 22 | | 38 |
| Emotional component | 3 | 5 | | 8 |
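The row and column totals of Table 3 can be verified with a short script. This is a minimal sketch; the dictionary name and layout are illustrative, and the counts are copied from the table.

```python
# (level 1 count, level 2 count) per category, copied from Table 3
table3 = {
    "Feedback": (6, 5),
    "Recognition": (5, 12),
    "Flexibility": (6, 10),
    "Customization": (7, 6),
    "Consistency": (2, 2),
    "Errors": (5, 7),
    "Help": (3, 2),
    "Accessibility": (8, 23),
    "Navigation": (6, 6),
    "Privacy": (3, 5),
    "Visual component": (16, 22),
    "Emotional component": (3, 5),
}

level1_total = sum(l1 for l1, _ in table3.values())
level2_total = sum(l2 for _, l2 in table3.values())
row_totals = {name: l1 + l2 for name, (l1, l2) in table3.items()}
grand_total = sum(row_totals.values())
largest = max(row_totals, key=row_totals.get)

print(level1_total, level2_total, grand_total)  # 70 105 175
print(largest, row_totals[largest])             # Visual component 38
```

The visual component and accessibility categories together account for 69 of the 175 recommendations, which illustrates the asymmetry between categories.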

Reanalysis of the Shorter List of Recommendations by Experts

A total of 14 experts (8 females and 6 males) with a mean age of 35 (SD 8.8) years provided feedback on the recommendations. Experts were user interface designers (n=6, 43%) and user interface researchers (n=8, 57%) who had a background in design (n=8, 57%) or communication and technology sciences (n=6, 43%). The interviews lasted up to 2 hours each.

All 175 recommendations reached consensus on the usefulness question. For question 2 (Do you consider this recommendation mandatory?), however, there was consensus that only 54 (77%) of the 70 level 1 recommendations were mandatory. The remaining 16 (23%) level 1 recommendations were considered not mandatory by 5 (36%) to 9 (64%) experts. For the 105 level 2 recommendations, there was consensus that 91 (87%) were mandatory, and the remaining 14 (13%) were considered not mandatory by 5 (36%) to 9 (64%) experts.

Experts’ comments were aggregated into 5 main themes: (1) deletion or recategorization of recommendations, (2) consistency, (3) contradiction, (4) asymmetry, and (5) uncertainty. It was suggested that 1 recommendation be deleted (“The system should be free from errors”) and that another be moved from the visual component category to the emotional component category. No other suggestions were made regarding the structure of the recommendations. There were comments related to consistency, particularly regarding the need to use either British or American spelling throughout all recommendations and to consistently refer to “users” instead of “persons” or “individuals.” The remaining comments applied mostly to level 2 recommendations, for which experts identified contradictory recommendations (eg, accessibility: “In robotics, the system should meet the person’s needs, be slow, safe and reliable, small, easy to use, and have an appearance not too human-like, not patronizing or stigmatizing” vs emotional: “In robotics, the system should indicate aliveness by showing some autonomous behavior, facial expressions, hand/head gestures to motivate engagement, as well as changing vocal patterns and pace to show different emotions”). Experts also commented on the asymmetry in the number of level 2 recommendations linked to each level 1 recommendation and in the number of recommendations per type of technology and interaction paradigm. In addition, experts were uncertain about the accuracy of the measures indicated in the recommendations (eg, visual: “In robotics, the system graphical user interface and button elements should be sufficiently big in size, so they can be easily seen and used, about ~20 mm in case of touch screen, buttons” and visual: “In feet interaction, the system should consider an appropriate interaction radius of 20 cm for tap, 30 cm at the front, and 25 cm at the back for kick”).

Refining and Finalizing the List of Recommendations

Based on the experts’ comments and issues raised in the previous step, the term “users” was adopted throughout the recommendations, 1 recommendation was removed, and 1 was moved from the visual component to the emotional component. In addition, all level 1 recommendations for which no consensus was reached on whether they were mandatory were considered not mandatory (identified by using the word “may” in the recommendation). The internal panel also recognized that level 2 recommendations cannot be used to guide user interface design in their current state and that further work is needed. Therefore, a final list of 69 generic recommendations is proposed (Multimedia Appendix 2).

Discussion

Principal Findings

To the best of our knowledge, this is the first study that attempted to analyze and synthesize existing recommendations on user interface design. This was a complex task that generated a large number of interdependent recommendations that could be organized into hierarchical levels of interdependency and grouped according to usability principles. Level 1 recommendations are generic and can be used to inform the user interface design of different types of technology and interaction paradigms. Level 2 recommendations, in contrast, are more specific, and each applies to a particular type of technology or interaction paradigm. Furthermore, the level of detail of level 2 recommendations and the absence of evidence that they had been validated raised doubts about their validity.

The external experts’ suggestions formed the basis for the internal experts’ (our) analysis. However, there is a discrepancy between the analyses of the 2 panels in terms of the number of recommendations that should be deleted, merged, rewritten, split, or not changed. This was because, when analyzing the recommendations, the internal panel found additional repeated or generic recommendations to delete beyond those already identified by the external panel. It is likely that these were missed due to the large number of recommendations, which made the analysis a time-consuming and complex task. Furthermore, changing 1 recommendation in line with external experts’ suggestions meant that other recommendations subsequently had to be changed for coherence and consistency, resulting in a higher number of rewritten recommendations. In addition, there was a lack of consensus among external experts, leaving the final decision to the internal experts (us), further contributing to discrepancies.

Regarding the organization of the recommendations, the division into 2 hierarchical levels based on the specificity/level of detail resulted from the external experts’ feedback and aimed at making the consultation of the list of recommendations easier. This type of hierarchization in levels of detail was also used in previous studies aimed at synthesizing existing guidelines [23,36].

The recommendations were grouped into 12 categories, which closely relate to existing usability principles (feedback, recognition, flexibility, customization, consistency, errors, help, accessibility, navigation, and privacy [18,37-39]). Usability principles are defined as broad “rules of thumb” or design guidelines that describe features of the systems to guide the design of digital solutions [18,40]. Additionally, they are oriented to improve user interaction [3] and impact the quality of the digital solution interface [41]. Therefore, having the recommendations organized in a way that maps these principles helps facilitate a practical use of the recommendations proposed herein, as these usability principles are familiar to designers and are well established, well known, and accepted in the literature [23,42].

The results showed an asymmetry in the number of recommendations categorized into each of the 12 usability principles (eg, for level 1, consistency has 2 recommendations while the visual component has 16 recommendations). This discrepancy suggests that some areas of user interface design, such as the visual component, might be better detailed, more complex, or more valued in the literature, but it can also suggest that the initial search might not have been comprehensive enough, as it included a reduced number of databases [32]. Nevertheless, the heterogeneity between categories does not influence their relevance, as it is the set of recommendations as a whole that influences the user interface design of a digital solution.

The number of level 2 recommendations aggregated under each level 1 recommendation is also uneven. Most of the level 2 recommendations that resulted from this study concern web and mobile technologies because their utilization is widespread among the population [43], and they are therefore more likely to have design recommendations reported in the literature [23,31,44,45]. On the other hand, emerging technologies like robotics and interaction paradigms (eg, gestures, voice, and feet) represent new challenges for researchers, and recommendations are still being formulated, resulting in a lower number of published specific recommendations [46-49]. Moreover, the level 2 recommendations raised doubts among experts, namely regarding (1) the lack of consensus on whether they were mandatory or not, (2) apparent contradictions between recommendations, and (3) uncertainty regarding the accuracy of some recommendations, particularly the very specific ones (eg, the recommendations on the size of the buttons in millimeters). These aspects suggest that level 2 recommendations need further validation in future studies. No data were found on how the authors of the recommendations arrived at this level of detail and how the exact recommendation might vary depending on the target users [50,51], the type of technology [49], interaction paradigm [46], and the context of use [52]. Validation of the level 2 recommendations might be performed by gathering experts’ consensus on the adequacy of recommendations by type of technology/interaction paradigm and by involving real users to test whether user interfaces that fulfill the recommendations improve usability and user experience [50,53].

We believe that level 1 recommendations apply to different users, contexts, and technologies/interaction paradigms and that the necessary level of specificity will be given by level 2 recommendations, which can be further operationalized into more detailed recommendations (eg, creating level 3 recommendations under level 2 recommendations). For example, recommendation 1 from the recognition category states that “the system should consider the context of use, using phrases, words, and concepts that are familiar to the users and grounded in real conventions, delivering an experience that matches the system and the real world,” which is an example of applicability to different contexts such as health or education. Similarly, recommendation 1 from the flexibility category states that “the system should support both inexperienced and experienced users, be easy to learn, and to remember, even after an inactive period,” also showing adaptability to different types of users. Nevertheless, the level of importance of each level 1 recommendation might vary. For example, recommendation 6 of the flexibility category, which states that “the system may make users feel confident to operate and take appropriate action if something unexpected happens,” was not considered mandatory by the panel of external experts. However, one might argue that it should be considered mandatory in the field of health, where the feeling of being in control and acting immediately if something unexpected happens is of utmost importance. Therefore, both level 1 and level 2 recommendations require further validation, not only across different types of technology and interaction paradigms but also for different target users and contexts of use. Investigations are also required to determine whether their use results in better digital solutions and, particularly in the health care field, increases adherence to and effectiveness of interventions.

In summary, although this study constitutes an attempt toward a more standardized approach in the field of user interface design, the set of recommendations presented herein should not be seen as a final set but rather as a guide that should be critically appraised by designers according to the context, type of technology, type of interaction, and the end users for whom the digital solution is intended.

Strengths and Limitations

A strength of this proposed set of recommendations is that it was developed based on multiple sources and multiple rounds of experts’ feedback. However, although several experts were involved in different steps of the study, it cannot be guaranteed that the views of the included experts are representative of the views of a broader community of user interface design experts. Another limitation of this study is that the initial search for recommendations might not have been comprehensive enough. Nevertheless, external experts were given the possibility of adding recommendations to the list, and none suggested the need to include additional recommendations. The list of level 2 recommendations is a work in progress that should be further discussed and changed considering the technology/paradigm of interaction. Finally, some types of technologies and interaction paradigms are not represented in the recommendations (eg, virtual reality), and it would be important to have specific recommendations for all types of technologies and interaction paradigms in the future.

Acknowledgments

This work was supported by the SHAPES (Smart and Health Ageing through People Engaging in Supportive Systems) project funded by the Horizon 2020 Framework Program of the European Union for Research and Innovation (grant agreement 857159 - SHAPES – H2020 – SC1-FA-DTS – 2018-2020).

Abbreviations

- SHAPES: Smart and Health Ageing through People Engaging in Supportive Systems

Multimedia Appendix 1. Mapping of the categories proposed by the experts and the final categories.

Multimedia Appendix 2. Final list of 69 generic recommendations proposed.

Footnotes

Conflicts of Interest: None declared.

