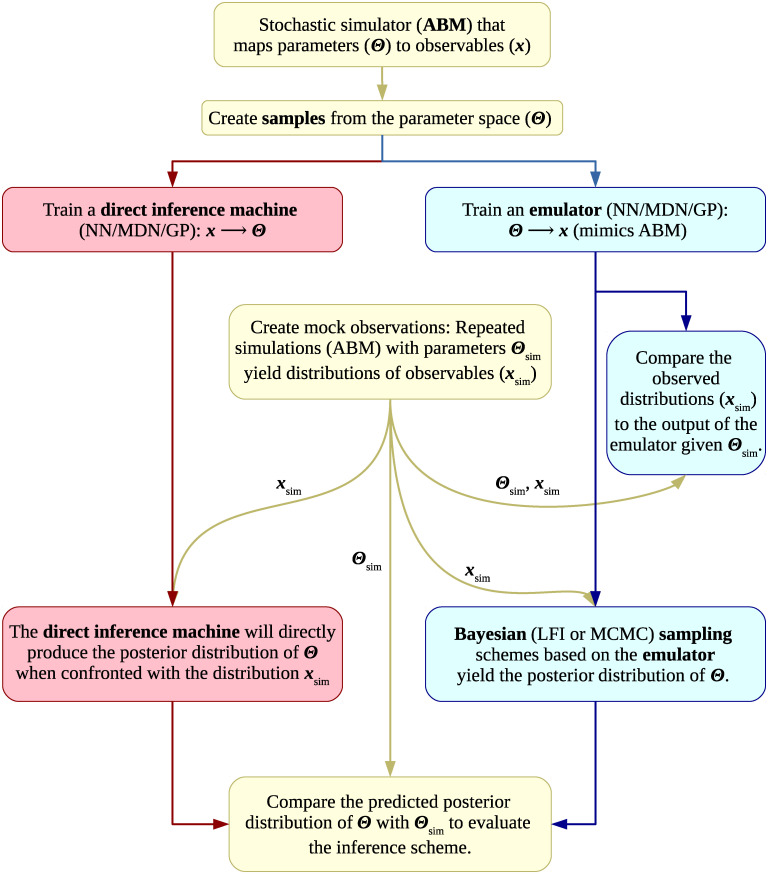

Fig 1. Flowchart summarizing the different steps of our analysis.

We sample the parameter space by creating quasi-random grids of simulations with the agent-based models (ABM). We then use the sampled parameter sets (Θ_sim) and the corresponding simulation output (x_sim) to train different machine learning (ML) methods: neural networks (NN), mixture density networks (MDN) and Gaussian processes (GP). These ML methods can be used either as emulators or as direct inference machines. Emulators mimic the simulations at low computational cost and can be employed in Bayesian sampling schemes, such as Markov chain Monte Carlo (MCMC) and likelihood-free inference (LFI), to infer model parameters from the observations. Direct inference machines can be seen as black boxes that, given the observations, produce samples from the posterior distribution of the model parameters. We use synthetic data obtained from the ABM, which allows us to compare the obtained posterior distributions of the model parameters with the ground truth. For both the emulators and the direct inference machines, we thus perform a classical inference task that amounts to a self-consistency test. Moreover, we quantify in separate comparisons how well the emulators capture the behaviour of the ABMs.
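
The emulator route through the flowchart can be sketched in a few lines of Python. This is a minimal illustration under loud assumptions, not the analysis code: `toy_abm` is a hypothetical one-parameter stand-in for an ABM, a one-dimensional van der Corput sequence stands in for the quasi-random grid, a Gaussian kernel regression stands in for the NN or GP emulator, and the uniform prior and the likelihood width `sigma` are chosen purely for illustration.

```python
import math
import random


def van_der_corput(i, base=2):
    """Return the i-th point of the van der Corput (1-D Halton) sequence."""
    q, denom = 0.0, 1.0
    while i > 0:
        i, rem = divmod(i, base)
        denom *= base
        q += rem / denom
    return q


def toy_abm(theta, n_agents=2000, rng=random):
    """Hypothetical ABM stand-in: each agent switches state with probability
    theta**2; the summary statistic is the fraction of switched agents."""
    p = theta ** 2
    return sum(rng.random() < p for _ in range(n_agents)) / n_agents


def make_emulator(thetas, xs, bandwidth=0.05):
    """Kernel-regression emulator (assumed stand-in for an NN or GP):
    a Gaussian-weighted average of the training outputs."""
    def emulate(theta):
        w = [math.exp(-0.5 * ((theta - t) / bandwidth) ** 2) for t in thetas]
        return sum(wi * xi for wi, xi in zip(w, xs)) / sum(w)
    return emulate


def metropolis(log_post, start, n_steps=5000, step=0.05, rng=random):
    """Random-walk Metropolis sampler for a one-dimensional log-posterior."""
    chain, cur, cur_lp = [], start, log_post(start)
    for _ in range(n_steps):
        prop = cur + rng.gauss(0.0, step)
        prop_lp = log_post(prop)
        # Accept with probability min(1, exp(prop_lp - cur_lp)).
        if rng.random() < math.exp(min(0.0, prop_lp - cur_lp)):
            cur, cur_lp = prop, prop_lp
        chain.append(cur)
    return chain


rng = random.Random(0)

# 1) Quasi-random grid of simulations: parameters theta_sim, outputs x_sim.
theta_sim = [van_der_corput(i) for i in range(1, 65)]
x_sim = [toy_abm(t, rng=rng) for t in theta_sim]

# 2) Train the emulator on (theta_sim, x_sim).
emulate = make_emulator(theta_sim, x_sim)

# 3) Synthetic observation with a known ground truth.
theta_true = 0.6
x_obs = toy_abm(theta_true, rng=rng)

# 4) MCMC over the emulator instead of the expensive simulator.
sigma = 0.02  # assumed observational scatter, for illustration only


def log_post(theta):
    if not 0.0 < theta < 1.0:  # uniform prior on (0, 1)
        return -math.inf
    return -0.5 * ((x_obs - emulate(theta)) / sigma) ** 2


chain = metropolis(log_post, start=0.5, rng=rng)
posterior = chain[1000:]  # discard burn-in
post_mean = sum(posterior) / len(posterior)
print(f"posterior mean: {post_mean:.3f}, ground truth: {theta_true}")
```

Because the observation is itself generated by the simulator, comparing `post_mean` with `theta_true` is a small-scale version of the self-consistency test described in the caption. A direct inference machine would instead be trained to map `x_sim` back to `theta_sim` and would skip the sampling step.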