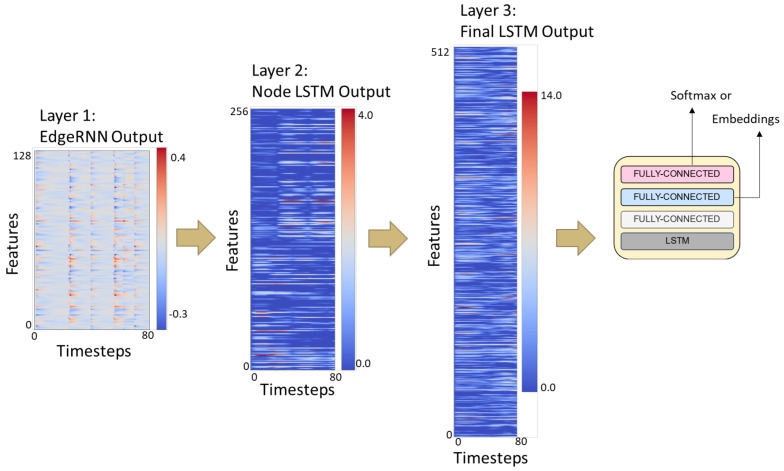

Figure 6.

Example of ST-DeepGait featurization at each layer, shown as the LSTM feature output of that layer. Each first-layer EdgeRNN produces a feature output of size sequence length by number of hidden neurons, as does each second-layer NodeRNN. The final layer concatenates all NodeRNNs and again produces an output of size sequence length by number of hidden neurons. This final LSTM featurization is then used to produce the embeddings or a softmax distribution. The features are not human-interpretable, but they show that the network learns oscillating feature patterns.
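The feature shapes described in the caption can be traced with a minimal NumPy sketch. It is not the ST-DeepGait implementation. The sizes (`T`, `H`, `N_NODES`, `EDGES_PER_NODE`, `EDGE_DIM`, `N_CLASSES`), the random weights, the helper `lstm_forward`, and the way edge outputs are joined before each NodeRNN are all assumptions made only for illustration.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def lstm_forward(x, hidden, rng):
    """Run a single-layer LSTM with random weights over x of shape (T, D).

    Returns the hidden state at every time step, shape (T, hidden).
    """
    T, D = x.shape
    # One stacked weight matrix for the input, forget, cell, and output gates.
    W = rng.standard_normal((D + hidden, 4 * hidden)) * 0.1
    b = np.zeros(4 * hidden)
    h = np.zeros(hidden)
    c = np.zeros(hidden)
    outputs = np.empty((T, hidden))
    for t in range(T):
        z = np.concatenate([x[t], h]) @ W + b
        i, f, g, o = np.split(z, 4)
        c = sigmoid(f) * c + sigmoid(i) * np.tanh(g)
        h = sigmoid(o) * np.tanh(c)
        outputs[t] = h
    return outputs


rng = np.random.default_rng(0)

# Hypothetical sizes, chosen only to make the shapes easy to follow.
T, H = 50, 8                                  # sequence length, hidden neurons
N_NODES, EDGES_PER_NODE, EDGE_DIM = 2, 2, 3
N_CLASSES = 4

node_features = []
for _ in range(N_NODES):
    # Layer 1: each EdgeRNN maps a (T, EDGE_DIM) input to (T, H).
    edge_feats = [
        lstm_forward(rng.standard_normal((T, EDGE_DIM)), H, rng)
        for _ in range(EDGES_PER_NODE)
    ]
    # Assumption: edge outputs are concatenated along the feature axis.
    node_in = np.concatenate(edge_feats, axis=1)         # (T, EDGES_PER_NODE * H)
    # Layer 2: each NodeRNN again yields (T, H).
    node_features.append(lstm_forward(node_in, H, rng))

# Final layer: concatenate all NodeRNN outputs, then one more LSTM.
concat = np.concatenate(node_features, axis=1)           # (T, N_NODES * H)
final = lstm_forward(concat, H, rng)                     # (T, H)

# Softmax over class scores computed from the last time step.
logits = final[-1] @ rng.standard_normal((H, N_CLASSES))
exp = np.exp(logits - logits.max())
probs = exp / exp.sum()

print(concat.shape, final.shape, probs.shape)
```

Every stage in this sketch keeps the full time axis, so each layer's output can be plotted as a sequence-length by hidden-neurons map like the panels in the figure.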