Background:

Data from surveys of patient care experiences are a cornerstone of public reporting and pay-for-performance initiatives. Recently, increasing concerns have been raised about survey response rates and how to promote equity by ensuring that responses represent the perspectives of all patients.

Objective:

Review evidence on survey administration strategies to improve response rates and representativeness of patient surveys.

Research Design:

Systematic review adhering to the Preferred Reporting Items for Systematic reviews and Meta-Analyses guidelines.

Study Selection:

Forty peer-reviewed randomized experiments of administration protocols for patient experience surveys.

Results:

Mail administration with telephone follow-up provides a median response rate benefit of 13% compared with mail-only or telephone-only. While surveys administered only by web typically result in lower response rates than those administered by mail or telephone (median difference in response rate: −21%, range: −44%, 0%), the limited evidence for a sequential web-mail-telephone mode suggests a potential response rate benefit over sequential mail-telephone (median: 4%, range: 2%, 5%). Telephone-only and sequential mixed modes including telephone may yield better representation across patient subgroups by age, insurance type, and race/ethnicity. Monetary incentives are associated with large increases in response rates (median increase: 12%, range: 7%, 20%).

Conclusions:

Sequential mixed-mode administration yields higher patient survey response rates than a single mode. Including telephone in sequential mixed-mode administration improves response among those with historically lower response rates; including web in mixed-mode administration may increase response at lower cost. Other promising strategies to improve response rates include in-person survey administration during hospital discharge, incentives, minimizing survey language complexity, and prenotification before survey administration.

Key Words: patient experience survey, patient survey, CAHPS, response rate

Patient experience survey data are a cornerstone of national public reporting and pay-for-performance initiatives.1 Some health care providers, payers, and other stakeholders have expressed concerns that response rates to patient experience surveys are declining and that responses may not be representative of all patients, particularly underserved groups.2–5 Stakeholders have proposed several strategies to address these concerns, including using monetary incentives, reducing the length of survey instruments, and administering surveys via the web, on mobile devices, or at the point-of-care.

Ideally, patient experience surveys can be self-administered by patients (or family caregivers acting as proxies, when needed).6,7 Patient experience surveys also must accommodate patients who are recovering from illness or injury or experiencing long-term cognitive and physical impairments. They need to elicit comparable responses across heterogeneous populations, including those that differ by education; literacy; access to technology; age; race and ethnicity; and geographic region. They should produce results that can be used to fairly compare health care providers. Furthermore, in the context of accountability initiatives that use multiple survey vendors,8 strategies to enhance response to patient surveys must be feasible for vendors with varying technical capabilities.

For decades, researchers have studied strategies to promote survey response rates and representativeness.9–14 However, surveys about patient care experiences have distinctive features that may influence the effectiveness of these strategies. We conducted a systematic review of the peer-reviewed literature to document the evidence for strategies designed to enhance response rates and representativeness of patient experience surveys in particular.

STUDY DATA AND METHODS

We adhered to the Preferred Reporting Items for Systematic reviews and Meta-Analyses (PRISMA) guidelines.15,16

IDENTIFICATION AND SELECTION OF STUDIES

We included peer-reviewed empirical studies that examined the effects of strategies to improve response rates to patient experience surveys. To ensure that we reviewed only studies that were conducted with a high level of rigor, we included only those with experimental designs in which either provider entities or patients in the study sample were randomized to study arms. We excluded nonempirical articles, studies with observational designs, studies conducted outside the United States, and studies that assessed response strategies for surveys designed for consumers other than patients, or for health care providers.

We searched MEDLINE and Scopus for English-language studies conducted in the United States and published between 1995 and December 2020 using search terms outlined in Appendix Exhibit A1 (Supplemental Digital Content 1, http://links.lww.com/MLR/C536). We chose 1995 as it was the year the Agency for Healthcare Research and Quality’s predecessor, the Agency for Health Care Policy and Research, funded development of the first Consumer Assessment of Healthcare Providers and Systems (CAHPS) survey—the national standard for collecting, tracking, and benchmarking patient care experiences across settings.17 We also obtained input from experts to identify articles not identified by the database searches, reviewed the reference lists from included articles, and collected references from a prior white paper on a related topic.18 One author (R.A.P.) conducted a preliminary screening of titles and abstracts to determine whether they met inclusion criteria. Full-text screening of the resulting studies was conducted by 3 authors working independently. Discrepancies were resolved through discussion until consensus was reached.

DATA EXTRACTION AND SYNTHESIS

Using standardized coding to systematically abstract information about each included study, 1 author (A.U.B.) extracted information regarding study design and analysis, survey sample population, setting and size, response rate strategies tested, study outcomes, and major limitations. Data extraction was verified independently by a second author (R.A.P. or D.D.Q.). We grouped study results by strategy. Two authors (R.A.P. and D.D.Q.) assessed the quality of the included studies.19 Unless otherwise noted, study results described in the text are statistically significant at P<0.05. When the reviewed article did not report statistical significance, we calculated significance based on response rates and sample sizes using a simple binomial test of 2 proportions.20 We summarized response rate effects for each strategy by calculating the median and range of response rate differences across all studies, including all results regardless of statistical significance.20
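As an illustration of the significance calculation described above, the following is a minimal sketch of a two-sided, pooled z-test for the difference between 2 response rates. It is one common form of a 2-proportion test and is not necessarily the exact procedure used in this review. The function name and the sample sizes (500 per arm) are hypothetical and chosen only for illustration.

```python
import math


def two_proportion_z_test(resp_a, n_a, resp_b, n_b):
    """Two-sided pooled z-test for a difference between two response rates.

    resp_a, resp_b: number of respondents in each arm.
    n_a, n_b: number of sampled patients in each arm.
    Returns (difference in proportions, z statistic, two-sided p value).
    """
    p_a = resp_a / n_a
    p_b = resp_b / n_b
    # Pooled response rate under the null hypothesis of no difference.
    pooled = (resp_a + resp_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / se
    # Two-sided tail area of the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return p_a - p_b, z, p_value


# Hypothetical arms: 295 of 500 respond (59%) vs. 205 of 500 respond (41%).
diff, z, p_value = two_proportion_z_test(295, 500, 205, 500)
print(f"difference = {diff:.2f}, z = {z:.2f}, p = {p_value:.2g}")
```

With small arms, the same percentage-point difference can fail to reach P<0.05, which is why sample size appears among the factors that vary across studies.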

RESULTS

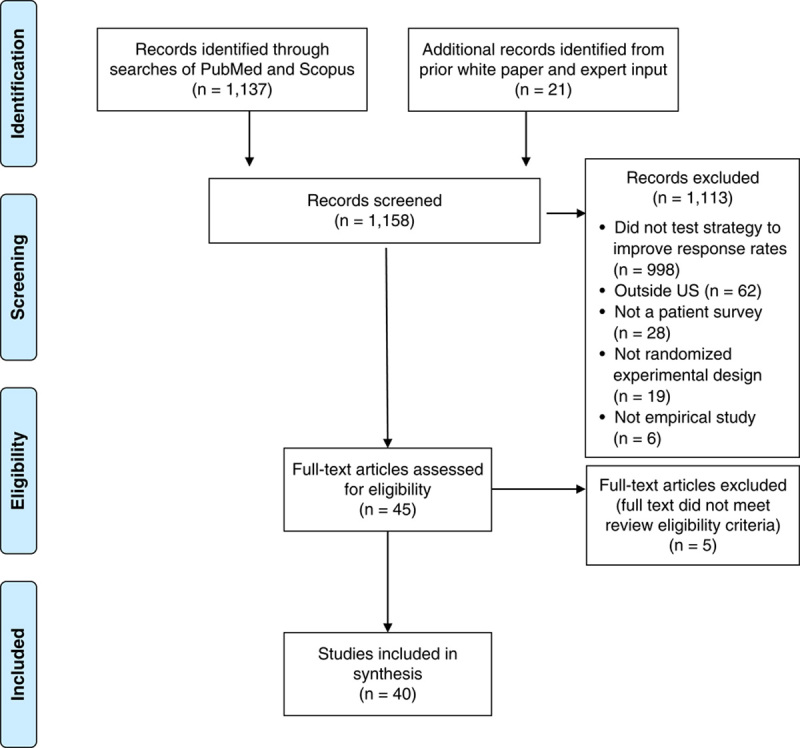

The searches identified 1137 unique studies; 21 additional articles were identified by subject matter experts. Of these 1158 articles, 45 met inclusion criteria after review of their titles and abstracts. After review of full text, 40 were included (Fig. 1).

FIGURE 1.

Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) flow diagram. From Moher et al.16 For more information, visit www.prisma-statement.org.

Twenty of the included studies (50%) assessed response rates to CAHPS surveys and 20 used other patient experience surveys. Twenty-four studies (55%) assessed response rates for subgroups or reported the representativeness of respondents in comparison to sampled patients. Sample sizes ranged from 63 to 294,877, with a median of 1900. Twenty of the studies were published between 1995 and 2005, 10 between 2006 and 2015, and 10 between 2016 and 2020.

Detailed information about each study is in Appendix Table 1 (Supplemental Digital Content 2, http://links.lww.com/MLR/C537). Table 1 provides an overview of results for each strategy that the included studies tested to promote response rates.

TABLE 1.

Summary of Response Rate Effects by Strategy

| Tested Strategy | Reference Strategy | No. Studies: All | No. Studies Reporting Statistically Significant Findings: Tested Strategy Has Higher Response Rate Than Reference Strategy | No. Studies Reporting Statistically Significant Findings: Tested Strategy Has Lower Response Rate Than Reference Strategy | Median Response Rate Difference Between Tested Strategy and Reference Strategy (Range) | Factors Contributing to Varying Effects Across Studies |
|---|---|---|---|---|---|---|
| Survey administration by telephone or IVR | | | | | | |
| Telephone only | Mail only | 10 (refs 21–24,26,28,29,34,43,45) | 4 (refs 21,29,34,43) | 2 (refs 26,28) | 6% (−11%, 22%) | Patient/respondent characteristics (eg, hospital inpatients, emergency department patients, or outpatients; bereaved family caregivers versus patients) |
| IVR only | Mail only | 4 (refs 25–27,30) | 0 | 3 (refs 25,26,30) | −14% (−17%, 1%) | Use of speech-enabled IVR |
| Mail-telephone | Mail only | 5 (refs 26,28,29,41,45) | 5 (refs 26,28,29,41,45) | 0 | 10% (4%, 15%) | Availability of telephone numbers |
| Mail-telephone | Telephone only | 5 (refs 26,28,29,31,33) | 4 (refs 26,28,29,31) | 0 | 13% (2%, 15%) | Patient/respondent characteristics |
| Mail-IVR | Mail only | 2 (refs 25,30) | 1 (ref 25) | 0 | 2.5% (2%, 3%) | Sample size |
| Survey administration by web | | | | | | |
| Web only | Mail only | 7 (refs 23–25,27,32,39,43) | 0 | 5 (refs 24,25,27,32,39) | −20% (−33%, 0%) | Availability of email addresses; mode of survey invitation (ie, email vs. mailed letter) |
| Web only | Telephone only | 3 (refs 23,24,43) | 0 | 2 (refs 24,43) | −22% (−44%, −20%) | Availability of email addresses |
| Web only | IVR only | 2 (refs 25,27) | 0 | 2 (refs 25,27) | −19% (−21%, −16%) | Availability of email addresses |
| Web only | In-person | 2 (refs 38,42) | 0 | 1 (ref 42) | −10% (−14%, −5%) | Care setting |
| Web-mail | Mail only | 2 (refs 25,32) | 0 | 0 | −2% (−2%, −2%) | Mode of survey invitation (ie, email versus mailed letter with printed link) |
| Web-mail-telephone | Mail-telephone | 2 (refs 42,44) | 1 (ref 42) | 0 | 4% (2%, 5%) | Availability of email addresses |
| In-person survey administration | | | | | | |
| In-person | Mail only | 4 (refs 35–37,40) | 2 (refs 37,40) | 1 (ref 35) | 5% (−18%, 55%) | Mail follow-up for nonresponders; care setting (eg, inpatient discharge with extended wait time) |
| In-person | Web only | 2 (refs 38,42) | 1 (ref 42) | 0 | 10% (5%, 14%) | Care setting |
| Other strategies | | | | | | |
| Incentive* | No incentive | 4 (refs 46–49) | 4 (refs 46–49) | 0 | 12% (7%, 20%) | Nature of incentive (eg, cash vs. other, such as gift certificate or phone card); size of incentive (eg, $1, $2, $5, vs. $10); population (eg, Medicaid beneficiaries vs. commercially insured); unconditional vs. conditional upon receipt of completed survey |
| Strategies to enhance response from subgroups with traditionally low response rates | | 5 (refs 30,31,47,48,57) | 3 (refs 47,48,57) | 0 | 4% (1%, 9%) | Respondent characteristics (eg, language, literacy) |
| Formatting and layout | | 3 (refs 41,53,54) | 1 (ref 54) | 0 | 1% (0%, 6%) | Complexity of survey content; size and color of mail materials |
| Prenotification† | No prenotification | 1 (ref 55) | 1 (ref 55) | 0 | 18% (NA) | Respondent characteristics (eg, race or ethnicity) |

Median and range are calculated among all studies, including all results regardless of statistical significance. For studies that test multiple versions of a given strategy, we include the highest response rate difference in our calculations.

*An additional 3 studies compared types of incentives.

†An additional study compared types of prenotification letters. As there is only one study that tested this strategy, no range is presented.

IVR indicates interactive voice response; NA, not available.

Mode of Administration

Twenty-five studies21–45 assessed the effectiveness of alternative modes of survey administration.

Telephone or Interactive Voice Response Modes

Of the 10 studies that compared telephone-only to mail-only mode, 4 reported telephone-only response rates higher than mail-only rates21,29,34,43 and 4 found comparable response rates for the 2 modes (or did not have sufficient power to detect differences; Table 1).22–24,45 A study of bereaved family caregivers of hospice patients and another study of hospital patients found lower response rates to telephone-only surveys than to mail-only surveys (38% phone-only vs. 43% mail-only; 27% phone-only vs. 38% mail-only, respectively).26,28

Interactive voice response (IVR) is an automated phone system technology in which survey responses are captured by touch-tone keypad selection or speech recognition. Of the 4 studies comparing IVR-only to mail-only mode, 3 found that IVR-only yielded lower response rates than mail-only,25,26,30 while 1 study that tested speech-enabled IVR, in which respondents were transferred by a live interviewer to an IVR system allowing for verbal responses (rather than use of a telephone keypad), found response rates comparable to mail-only.27

Nine studies assessed mixed-mode survey administration that added a telephone or IVR component to mail. Seven of the 9 studies found that these mixed modes yielded substantially higher response rates than mail, telephone, or IVR alone (Table 1).25,26,28,29,31,41,45 One found comparable response rates between telephone-only and mail with telephone follow-up,33 and another found comparable response rates between mail-only and mail with IVR follow-up.30

The 6 studies that assessed the representativeness of respondents across modes found that telephone promoted higher response among groups with historically lower response rates. One reported that telephone respondents were more diverse with regard to race and ethnicity,29 2 found that those with Medicaid were more likely to respond to telephone than to other modes,21,43 and 5 reported that a higher proportion of younger patients responded to telephone than to mail or web modes (Appendix Table 1, Supplemental Digital Content 2, http://links.lww.com/MLR/C537).21,34,41,43,44

With regard to IVR, one study found that in comparison to mail respondents, IVR respondents were less likely to have completed high school and were less likely to be Asian (Appendix Table 1, Supplemental Digital Content 2, http://links.lww.com/MLR/C537).25 Another study found that respondents to speech-enabled IVR were more likely to be English-preferring, and less likely to be age 74 or older.27

Web-based Modes

Nine studies23–25,27,32,38,39,42,43 compared web-only administration to other single modes. All 9 studies found that web-only resulted in lower response rates than the alternative modes (Table 1). Tested alternatives included on-site paper surveys,38,42 mail-only surveys,23–25,27,32,39 and telephone-only or IVR-only surveys.23–25,27,43

Four studies assessed sequential mixed-mode survey administration that included a web component. Two of these found that among primary care patients, web followed by mail yielded a response rate similar to mail alone (51% mail-only vs. 49% web with mail follow-up25; 43% mail-only vs. 41% web-mail; Table 1).32 Among emergency department patients, 2 studies found that a sequential mixed administration by web, mail, and telephone resulted in a response rate similar to or higher than a mail-telephone administration (26% for mail-telephone vs. 27% email-mail-telephone; 25% for mail-phone vs. 31% for email-mail-phone, respectively).42,44

Two studies compared web-based administrations that either sent survey links by mail or by email; both found notably lower response rates for mailed survey links than for emailed links.42,44 One study found that using a combination of text and email invitations/reminders instead of only email invitations/reminders yielded higher response rates.44 Another study found similar response rates to a web-based survey, regardless of whether respondents were required to log in to a patient portal to complete the survey or could complete the survey directly from their email via a hyperlink (17% portal, 20% email),32 although those age 65+ were significantly less likely to respond when they had to go through a portal than when they could go directly to the survey from the email request; no significant differences were found by education, race and ethnicity, or sex.

Compared with mail respondents, 2 studies found that web respondents were younger,25,44 1 found that they were more educated,25 1 that they had fewer medical conditions and reported better health status,25 and others that they were less likely to be Black27 or Hispanic.25 One study did not find differences across web and mail modes by education or race and ethnicity, but noted that the study population had high levels of education and email use.32

In-person Mode

Unlike other survey administration modes, the protocols for in-person survey administration vary by care setting. Of the 6 studies assessing response rates to surveys administered in person at a clinical site, 4 tested distribution of paper surveys in ambulatory care settings.35–38 Two of these found lower response rates among patients who received a paper survey in person than among those who were sent mailed surveys (40% on-site vs. 58% for mailed surveys35; and 70% in-person vs. 76% mail, not statistically significant; Table 1)36; notably, in both studies, the mail mode included an additional mailed survey to nonrespondents, while the in-person mode did not. In contrast, one study compared paper surveys distributed in-office to mailed surveys with a reminder postcard (but no additional mailed survey), and found higher response rates to the in-person survey (73% in-person vs. 57% mail).37 Finally, one study compared in-clinic paper survey distribution to a web survey sent to those who provided email addresses before checking out of the clinic; the response rate to the in-clinic survey substantially exceeded that of the email survey, although the difference was not statistically significant given the small sample size (72% in-clinic vs. 58% email).38

The remaining 2 studies of in-person mode were conducted in hospital settings. One study found that on-site distribution of patient surveys was difficult in emergency departments, possibly resulting in selection bias. The overall response rate to the on-site survey was very low, but exceeded that of a web-based survey (10% for on-site vs. 5% web survey).42 Another study tested the use of tablets to administer in-person surveys to parents of hospitalized children while they were waiting to be discharged, and found a substantially higher response rate for this protocol than for mail administration (71% tablet administration vs. 16% mail).40 Tablet respondents were significantly more likely to be fathers, more likely to have a high school education or less, less likely to be White, and more likely to be publicly insured than mail respondents.

Incentives

Seven studies tested the use of incentives, including 4 studies that compared incentives to no incentive46–49 and 3 that compared types of incentives to one another.50–52 Three studies found that small cash incentives for Medicaid enrollees can result in a substantial increase in response rates: 1 distributed a $2 unconditional incentive along with initial mailings (43% incentive vs. 33% no incentive),46 1 sent $2 or $1 cash incentives solely to those who had not responded to 2 prior mailings (50% for $2 and 48% for $1 incentive, respectively, vs. 37% for no incentive),47 and 1 found a response rate of 64% for those provided $10 conditionally upon receipt of completed surveys versus 44% for no incentive (Table 1).48 One study of adult medical center patients found that conditional incentives resulted in higher response rates (57% for $5 cash or Target e-certificate vs. 50% for no incentive,49 with most respondents choosing the cash option).

Effects on response are dependent on the size and nature of the incentive. One study found that larger unconditional cash incentives resulted in higher response from health plan enrollees (74% for $5 vs. 67% for $2).52 Among childhood cancer survivors and their parents, a $10 cash incentive resulted in substantially higher response rates when included in the initial mailing rather than conditionally upon survey response (64% unconditional vs. 45% conditional).50 Another study found no differences in response rates among prostate cancer patients offered either an unconditional or conditional incentive of a 30-minute prepaid phone card.51
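To make the summary calculation concrete, the sketch below recomputes the median and range of response rate differences from the 4 incentive versus no-incentive comparisons reported in this section, keeping the largest difference when a study tested more than one incentive arm. It assumes the rounded percentages given in the text; a calculation based on unrounded rates could differ slightly. The variable names are illustrative only.

```python
from statistics import median

# Response rates (%) for the incentive vs. no-incentive comparisons in the text.
# Each entry: (reference number, no-incentive rate, rates for each incentive arm).
comparisons = [
    (46, 33, [43]),      # $2 unconditional, with initial mailing
    (47, 37, [50, 48]),  # $2 and $1 sent to nonrespondents
    (48, 44, [64]),      # $10 conditional on completed survey
    (49, 50, [57]),      # $5 cash or e-certificate, conditional
]

# For studies with several incentive arms, keep the largest difference.
differences = [max(rates) - control for _, control, rates in comparisons]

# Median and range across studies, regardless of statistical significance.
summary = (median(differences), min(differences), max(differences))
print(differences)
print(summary)
```

The resulting median of 11.5 percentage points, with a range of 7 to 20, is consistent with the 12% (7%, 20%) entry for incentives in Table 1 after rounding.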

Formatting and Layout

Three studies tested the formatting and layout of survey materials.41,53,54 One found that small white and large blue questionnaire booklets yielded higher response rates than small blue or large white booklets.54 Another found comparable response rates for a 16-page food frequency questionnaire with fewer items overall but many more items per page versus a 36-page questionnaire with more survey items but designed to be cognitively easier.53 Finally, one study found no significant differences in response rates among patients at a university health center in response to surveys that used a 4-point response scale versus those that used a 6-point response scale.41

Prenotification

Two studies tested the effects of prenotification.55,56 One study directly assessed the effects of an advance letter sent to primary care patients age 50 or older 2 weeks before a mailed survey, and found large differences in response rates between those who did and did not receive the advance letter (59% advance letter vs. 41% no advance letter; Table 1),55 with greater benefits observed among those who were White than among those who were Black. The other study compared an ethnically tailored letter and envelope inviting Black and Hispanic primary care patients to complete a phone survey to an untailored letter and envelope; there were no statistically significant differences in response rates across the 2 groups.56

Strategies to Enhance Response From Subgroups With Traditionally Low Response Rates

Five studies30,31,47,48,57 tested a range of strategies designed to promote response from subgroups with historically low response rates. One tested enhanced procedures for identifying phone and address contact information for Medicaid enrollees (ie, use of additional directories and vendors for lookup of contact information), and found a 4 percentage point improvement in response rates (not statistically significant).31 Another tested the effects of including both English and Spanish-language surveys rather than only English surveys in mailings to Medicare beneficiaries with predicted probabilities of Spanish response of at least 10%, and found that the bilingual protocol increased response rates by 4 percentage points (40% for bilingual mailing vs. 36% for English mailing).57 Finally, another study reported that sending follow-up surveys by certified mail rather than by priority mail increased response rates among nonrespondents by 7 percentage points (28% for certified mail vs. 21% for priority mailing).47

Of 2 studies that tested strategies for increasing responses from low-literacy Medicaid enrollees,30,48 one found no difference in response rates among Medicaid enrollees between a survey with a traditional print format and an illustration-enhanced format that used pictures to depict the key elements within each survey item.30 In contrast, another study tested a standard survey mailing compared with a user-friendly, low-literacy version for Medicaid households and found higher response rates for the low-literacy survey (44% low literacy vs. 35% standard).48

DISCUSSION

Our systematic review of 40 experimental studies identified support for several strategies to improve the response rates and representativeness of patient surveys. Administering patient surveys in sequential mixed modes is the most effective strategy for achieving high response rates. The most common such approach, with the most supporting evidence, is mail with telephone follow-up. Mail surveys are included in most multimode protocols because mailing addresses are typically available for all sampled patients, and mail mode response rates are consistently as high as or higher than those of other modes, particularly among older adults. The studies we reviewed find that adding a web mode can further increase the response rates achieved by mail-telephone by 2–5 percentage points. Administering patient surveys first by web (with invitation by text or email) in a sequential mixed-mode survey administration may reduce costs (with lower-cost web outreach replacing higher-cost administration by mail or telephone) and improve the timeliness with which survey responses are received,58 potentially increasing their usefulness for quality improvement. Notably, surveys administered using only web-based modes resulted in consistently lower response rates than those administered by mail or telephone. For web surveys to result in cost savings, the additional costs of setting up and administering the web survey need to be lower than the savings in other costs.59 The potential cost savings depend, in part, on the response rate to the web survey.
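The cost condition described above can be expressed as a simple break-even calculation. The sketch below is a deliberately simplified illustration, not a cost model drawn from the reviewed studies: it assumes that every web response avoids exactly 1 mailed questionnaire, it ignores telephone follow-up, and all function names and dollar amounts are hypothetical.

```python
def web_first_net_savings(n_sampled, web_response_rate, cost_per_mail_packet,
                          web_setup_cost, cost_per_web_invite):
    """Net savings from adding a web phase before the first mailing.

    Simplifying assumption: each web respondent avoids one mailed packet.
    """
    mail_savings = n_sampled * web_response_rate * cost_per_mail_packet
    web_costs = web_setup_cost + n_sampled * cost_per_web_invite
    return mail_savings - web_costs


def break_even_web_rate(n_sampled, cost_per_mail_packet,
                        web_setup_cost, cost_per_web_invite):
    """Web response rate at which added web costs equal mail savings."""
    web_costs = web_setup_cost + n_sampled * cost_per_web_invite
    return web_costs / (n_sampled * cost_per_mail_packet)


# Hypothetical inputs: 10,000 sampled patients, $3.00 per mailed packet,
# $2,000 web setup cost, and $0.05 per email or text invitation.
rate_needed = break_even_web_rate(10_000, 3.00, 2_000, 0.05)
savings_at_20 = web_first_net_savings(10_000, 0.20, 3.00, 2_000, 0.05)
savings_at_05 = web_first_net_savings(10_000, 0.05, 3.00, 2_000, 0.05)
print(f"break-even web response rate: {rate_needed:.1%}")
print(f"net savings at 20% web response: ${savings_at_20:,.0f}")
print(f"net savings at 5% web response: ${savings_at_05:,.0f}")
```

Under these hypothetical inputs, the web phase saves money only when the web response rate exceeds the break-even rate, which illustrates why potential savings depend on web response.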

Promoting representativeness of patient survey respondents is important for several reasons. First, patients who are Black, Hispanic, or of low socioeconomic status are less likely to respond to care experience surveys,60,61 underrepresenting these patients in overall assessments of care and hampering efforts to measure health care equity. In addition, evidence from numerous patient experience surveys indicates that lower response rates under-represent patients with poorer experiences,26,62–64 whose assessments are critical for informing quality improvement. We find that sequential mixed mode protocols that include telephone may promote representation across subgroups of patients by age, insurance status, and race and ethnicity, and that web administration can improve the representativeness of respondents across age groups by increasing response by younger adults. However, in the studies we reviewed, web administration did not improve the representation of other groups that often have lower likelihood of response, such as patients who are low income, Asian, Black, or Hispanic.

In-person survey administration at the point of care offers the potential advantages of capturing patients’ experiences when they are most salient and eliminating the need for accurate and comprehensive patient contact information. In the studies we reviewed, however, in-person administration of paper surveys following ambulatory care and emergency department visits yielded lower response rates than mail survey protocols that included follow-up with another copy of the survey. In contrast, tablet-based survey administration while parents awaited their child’s discharge from a children’s hospital showed improved response rates and representativeness of respondents.40 High response rates in this setting may be contingent on extended waiting periods during hospital discharge, during which patients and families have fewer competing demands than they do when they are invited to complete a survey at home. In addition, presentation of tablets by hospital staff members may be appealing to families and convey a sense of importance about the data collection effort.

Ensuring that a representative sample of eligible patients is invited to participate in point-of-service surveys is necessary to prevent systematic biases in the survey sample, but has proven challenging, even in experimental settings.35,42,65 When health care staff are responsible for recruiting respondents, they may intentionally or unintentionally bias who is sampled and how they respond. For example, 3 of the 5 studies that compared responses to in-person surveys to those collected in other modes found that patients invited to participate in a survey by clinical staff at the point of service gave more favorable responses than patients responding via other modes.35–37 These results could also be due to socially desirable response pressures associated with completing surveys at the site of care,34 or the more general tendency to report more favorably when surveyed nearer to the time of care.66,67 In the hospital setting, however, it may be possible to overcome this limitation by integrating survey administration into the standardized discharge process.

In keeping with findings from the larger survey literature,9,10 our review found 1 article that reported substantial benefits of prenotification on response rates, 2 articles reporting notable benefits of certified or overnight mail delivery (for Medicaid and general patient populations, respectively47,68), and several articles reporting large benefits of incentives (7–20 percentage points in the reviewed studies). Incentives provided with the initial mail survey invitation yielded higher response rates than those provided conditionally upon receipt of completed surveys, and cash incentives were generally preferred to other incentives. Of note, however, some survey sponsors may be reluctant to offer incentives due to cost constraints, ethical reasons, such as perceived coercion of respondents, or out of concern that over time, provision of incentives may erode respondents’ intrinsic motivation to complete surveys.69

Only one study meeting our review criteria examined the effects of survey length on response rates53; this study simultaneously varied length and complexity and found similar response rates for a 16-page, more complex survey and a 36-page, less complex survey. This finding is consistent with prior observational and unpublished research that found lower response rates to shorter surveys presented with more complex or less attractive layouts than to longer surveys with less complex and more attractive layouts.70,71 One study also found that a survey with a lower required literacy level yielded a higher response rate among Medicaid beneficiaries than a same-length survey at a higher literacy level.48 A comprehensive review of the broader survey literature reported that, assuming surveys of similar complexity and interest to potential respondents, shorter survey length is associated with somewhat higher response rates.72 These benefits may be particularly apparent when comparing surveys with substantial proportionate differences in length (eg, when comparing survey length of 4 pages to 7 pages rather than 4 pages to 5 pages).73 Prior research on CAHPS surveys collected from Medicare beneficiaries using mixed-mode administration found that surveys that are 12 questions shorter are associated with response rates that are 2.5 percentage points higher.70,74 Reducing the number of questions on a patient survey results in a loss of information; however, reducing complexity is a viable strategy for enhancing response rates and representativeness without losing information that may be useful for quality monitoring and improvement.

Limitations

Our review has several potential limitations. First, there was substantial heterogeneity in the survey administration procedures and outcomes assessed across our included studies. Therefore, we present a range of results for each strategy of interest, rather than conducting a formal meta-analysis. Second, some studies included in our review were conducted 10 or more years ago, likely yielding higher response rates for telephone modes in particular than might be expected more recently75,76; importantly, however, studies included in our review—even the most recent—continue to underscore the usefulness of telephone administration for promoting response from underserved groups. The shift from landlines to cell phones over time may also result in changes in response patterns for telephone modes. Third, when an article compared multiple versions of a given strategy (eg, incentives of $1 and of $2), we used the largest difference in response rates to represent the article’s response rate results. While this approach has the benefit of highlighting the largest potential benefit of a given strategy, it may result in overestimation of effects. Finally, to ensure that our findings are based on the highest quality of evidence available, we included only experimental studies reported in the peer-reviewed literature. By doing so, however, we excluded observational studies and grey literature that may provide relevant insights. Where possible, we referenced these studies in our discussion of findings for context.

CONCLUSIONS

Data collection strategies focused solely on reducing burden, such as very short surveys or web-only administration, may result in loss of important information and a reduction in the representativeness of patient survey responses. In contrast, sequential mixed-mode survey administration promotes the highest response rates to patient surveys and increases representation of hard-to-reach and underserved populations in assessments of patient care. Including telephone follow-up in mixed-mode administration enhances response among those with historically lower response rates, but may be avoided due to its relatively higher cost. Including web-based modes in mixed-mode administration may increase response among those with web access at low cost. Other promising strategies include in-person survey administration during hospital discharge, provision of incentives, minimizing the complexity of survey wording, special mail delivery, and prenotification before survey administration.

ACKNOWLEDGMENTS

The authors gratefully acknowledge Joan Chang for her assistance in abstracting articles, and Caren Ginsberg, Sylvia Fisher, and Floyd J. Fowler, Jr for their insightful comments on earlier drafts of the manuscript.

Footnotes

This work was supported by a cooperative agreement from the Agency for Healthcare Research and Quality (AHRQ) [Contract number U18 HS025920].

The authors declare no conflict of interest.

Supplemental Digital Content is available for this article. Direct URL citations appear in the printed text and are provided in the HTML and PDF versions of this article on the journal's website, www.lww-medicalcare.com.

Contributor Information

Rebecca Anhang Price, Email: ranhangp@rand.org.

Denise D. Quigley, Email: quigley@rand.org.

J. Lee Hargraves, Email: Lee.Hargraves@umb.edu.

Joann Sorra, Email: JoannSorra@westat.com.

Alejandro U. Becerra-Ornelas, Email: becerra@prgs.edu.

Ron D. Hays, Email: drhays@ucla.edu.

Paul D. Cleary, Email: paul.cleary@yale.edu.

Julie Brown, Email: julieb@rand.org.

Marc N. Elliott, Email: elliott@rand.org.

REFERENCES

- 1. NEJM Catalyst. What is pay for performance in healthcare? 2018. Available at: https://catalyst.nejm.org/doi/full/10.1056/CAT.18.0245. Accessed September 3, 2021.

- 2. Godden E, Paseka A, Gnida J, et al. The impact of response rate on Hospital Consumer Assessment of Healthcare Providers and System (HCAHPS) dimension scores. Patient Exp J. 2019;6:6.

- 3. Evans R, Berman S, Burlingame E, et al. It’s time to take patient experience measurement and reporting to a new level: Next steps for modernizing and democratizing national patient surveys. Health Aff. 2020. Available at: https://www.healthaffairs.org/do/10.1377/forefront.20200309.359946/full/. Accessed October 6, 2022.

- 4. Damberg CL, Paddock SM. RAND Medicare Advantage (MA) and Part D Contract Star Ratings Technical Expert Panel 2018 Meeting. Santa Monica, CA: RAND Corporation; 2018.

- 5. Salzberg CA, Kahn CN, Foster NE, et al. Modernizing the HCAHPS Survey: Recommendations From Patient Experience Leaders. American Hospital Association; 2019.

- 6. Crofton C, Lubalin JS, Darby C. Consumer Assessment of Health Plans Study (CAHPS). Foreword. Med Care. 1999;37:MS1–MS9.

- 7. Agency for Healthcare Research and Quality. Principles underlying CAHPS surveys. Content last reviewed January 2020. Available at: https://www.ahrq.gov/cahps/about-cahps/principles/index.html. Accessed July 28, 2021.

- 8. Giordano LA, Elliott MN, Goldstein E, et al. Development, implementation, and public reporting of the HCAHPS survey. Med Care Res Rev. 2010;67:27–37.

- 9. Edwards P, Roberts I, Clarke M, et al. Increasing response rates to postal questionnaires: systematic review. BMJ. 2002;324:1183.

- 10. Mercer A, Caporaso A, Cantor D, et al. How much gets you how much? Monetary incentives and response rates in household surveys. Public Opin Q. 2015;79:105–129.

- 11. Medway RL, Fulton J. When more gets you less: a meta-analysis of the effect of concurrent web options on mail survey response rates. Public Opin Q. 2012;76:733–746.

- 12. Shih T-H, Fan X. Comparing response rates from web and mail surveys: a meta-analysis. Field Methods. 2008;20:249–271.

- 13. Dillman DA, Smyth JD, Christian LM. Internet, Phone, Mail and Mixed-Mode Surveys: The Tailored Design Method. Hoboken, NJ: John Wiley; 2014.

- 14. Dillman DA. Chapter 2. Towards survey response rate theories that no longer pass each other like strangers in the night. In: Brenner P, ed. Understanding Survey Methodology: Sociological Theory and Applications. Springer; 2020.

- 15. Liberati A, Altman DG, Tetzlaff J, et al. The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate healthcare interventions: explanation and elaboration. BMJ. 2009;339:b2700.

- 16. Moher D, Liberati A, Tetzlaff J, et al. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA Statement. PLoS Med. 2009;6:e1000097.

- 17. Khanna G, Ginsberg C. CAHPS®: 25 years of putting the patient first. 2020. Available at: https://www.ahrq.gov/news/blog/ahrqviews/cahps-25yrs.html. Accessed July 28, 2021.

- 18. Tesler R, Sorra J. CAHPS Survey Administration: What We Know and Potential Research Questions. Rockville, MD: Agency for Healthcare Research and Quality; 2017.

- 19. Guyatt GH, Oxman AD, Vist G, et al. GRADE guidelines: 4. Rating the quality of evidence—study limitations (risk of bias). J Clin Epidemiol. 2011;64:407–415.

- 20. Pagano M, Gauvreau K. Principles of Biostatistics. Australia: Duxbury; 2000.

- 21. Burroughs TE, Waterman BM, Cira JC, et al. Patient satisfaction measurement strategies: a comparison of phone and mail methods. Jt Comm J Qual Improv. 2001;27:349–361.

- 22. Couper MP, Peytchev A, Strecher VJ, et al. Following up nonrespondents to an online weight management intervention: randomized trial comparing mail versus telephone. J Med Internet Res. 2007;9:e16.

- 23. Harewood GC, Yacavone RF, Locke GR, III, et al. Prospective comparison of endoscopy patient satisfaction surveys: e-mail versus standard mail versus telephone. Am J Gastroenterol. 2001;96:3312–3317.

- 24. Harewood GC, Wiersema MJ, de Groen PC. Utility of web-based assessment of patient satisfaction with endoscopy. Am J Gastroenterol. 2003;98:1016–1021.

- 25. Rodriguez HP, von Glahn T, Rogers WH, et al. Evaluating patients’ experiences with individual physicians: a randomized trial of mail, internet, and interactive voice response telephone administration of surveys. Med Care. 2006;44:167–174.

- 26. Elliott MN, Zaslavsky AM, Goldstein E, et al. Effects of survey mode, patient mix, and nonresponse on CAHPS hospital survey scores. Health Serv Res. 2009;44:501–518.

- 27. Elliott MN, Brown JA, Lehrman WG, et al. A randomized experiment investigating the suitability of speech-enabled IVR and Web modes for publicly reported surveys of patients’ experience of hospital care. Med Care Res Rev. 2013;70:165–184.

- 28. Parast L, Elliott MN, Hambarsoomian K, et al. Effects of survey mode on Consumer Assessment of Healthcare Providers and Systems (CAHPS) hospice survey scores. J Am Geriatr Soc. 2018;66:546–552.

- 29. Parast L, Mathews M, Tolpadi A, et al. National testing of the Emergency Department Patient Experience of Care Discharged to Community Survey and implications for adjustment in scoring. Med Care. 2019;57:42–48.

- 30. Shea JA, Guerra CE, Weiner J, et al. Adapting a patient satisfaction instrument for low literate and Spanish-speaking populations: comparison of three formats. Patient Educ Couns. 2008;73:132–140.

- 31. Brown JA, Nederend SE, Hays RD, et al. Special issues in assessing care of Medicaid recipients. Med Care. 1999;37:MS79–MS88.

- 32. Fowler FJ, Jr, Cosenza C, Cripps LA, et al. The effect of administration mode on CAHPS survey response rates and results: A comparison of mail and web-based approaches. Health Serv Res. 2019;54:714–721.

- 33. Hepner KA, Brown JA, Hays RD. Comparison of mail and telephone in assessing patient experiences in receiving care from medical group practices. Eval Health Prof. 2005;28:377–389.

- 34. Ngo-Metzger Q, Kaplan SH, Sorkin DH, et al. Surveying minorities with limited-English proficiency: does data collection method affect data quality among Asian Americans? Med Care. 2004;42:893–900.

- 35. Anastario MP, Rodriguez HP, Gallagher PM, et al. A randomized trial comparing mail versus in-office distribution of the CAHPS Clinician and Group Survey. Health Serv Res. 2010;45:1345–1359.

- 36. Burroughs TE, Waterman BM, Gilin D, et al. Do on-site patient satisfaction surveys bias results? Jt Comm J Qual Patient Saf. 2005;31:158–166.

- 37. Gribble RK, Haupt C. Quantitative and qualitative differences between handout and mailed patient satisfaction surveys. Med Care. 2005;43:276–281.

- 38. Labovitz J, Patel N, Santander I. Web-based patient experience surveys to enhance response rates: a prospective study. J Am Podiatr Med Assoc. 2017;107:516–521.

- 39. Bergeson SC, Gray J, Ehrmantraut LA, et al. Comparing web-based with mail survey administration of the Consumer Assessment of Healthcare Providers and Systems (CAHPS®) clinician and group survey. Prim Health Care. 2013;3:1000132.

- 40. Toomey SL, Elliott MN, Zaslavsky AM, et al. Improving response rates and representation of hard-to-reach groups in family experience surveys. Acad Pediatr. 2019;19:446–453.

- 41. Drake KM, Hargraves JL, Lloyd S, et al. The effect of response scale, administration mode, and format on responses to the CAHPS Clinician and Group survey. Health Serv Res. 2014;49:1387–1399.

- 42. Mathews M, Parast L, Tolpadi A, et al. Methods for improving response rates in an emergency department setting—a randomized feasibility study. Surv Pract. 2019;12:12.

- 43. Schwartzenberger J, Presson A, Lyle A, et al. Remote collection of patient-reported outcomes following outpatient hand surgery: a randomized trial of telephone, mail, and e-mail. J Hand Surg Am. 2017;42:693–699.

- 44. Parast L, Mathews M, Elliott M, et al. Effects of push-to-web mixed mode approaches on survey response rates: evidence from a randomized experiment in emergency departments. Surv Pract. 2019;12:1–26.

- 45. Fowler FJ, Jr, Gallagher PM, Nederend S. Comparing telephone and mail responses to the CAHPS survey instrument. Consumer Assessment of Health Plans Study. Med Care. 1999;37:MS41–MS49.

- 46. Beebe TJ, Davern ME, McAlpine DD, et al. Increasing response rates in a survey of Medicaid enrollees: the effect of a prepaid monetary incentive and mixed modes (mail and telephone). Med Care. 2005;43:411–414.

- 47. Gibson PJ, Koepsell TD, Diehr P, et al. Increasing response rates for mailed surveys of Medicaid clients and other low-income populations. Am J Epidemiol. 1999;149:1057–1062.

- 48. Fredrickson DD, Jones TL, Molgaard CA, et al. Optimal design features for surveying low-income populations. J Health Care Poor Underserved. 2005;16:677–690.

- 49. Brown JA, Serrato CA, Hugh M, et al. Effect of a post-paid incentive on response rates to a web-based survey. Surv Pract. 2016;9:1–7.

- 50. Rosoff PM, Werner C, Clipp EC, et al. Response rates to a mailed survey targeting childhood cancer survivors: a comparison of conditional versus unconditional incentives. Cancer Epidemiol Biomarkers Prev. 2005;14:1330–1332.

- 51. Evans BR, Peterson BL, Demark-Wahnefried W. No difference in response rate to a mailed survey among prostate cancer survivors using conditional versus unconditional incentives. Cancer Epidemiol Biomarkers Prev. 2004;13:277–278.

- 52. Shaw MJ, Beebe TJ, Jensen HL, et al. The use of monetary incentives in a community survey: impact on response rates, data quality, and cost. Health Serv Res. 2001;35:1339–1346.

- 53. Subar AF, Ziegler RG, Thompson FE, et al. Is shorter always better? Relative importance of questionnaire length and cognitive ease on response rates and data quality for two dietary questionnaires. Am J Epidemiol. 2001;153:404–409.

- 54. Beebe TJ, Stoner SM, Anderson KJ, et al. Selected questionnaire size and color combinations were significantly related to mailed survey response rates. J Clin Epidemiol. 2007;60:1184–1189.

- 55. Napoles-Springer AM, Fongwa MN, Stewart AL, et al. The effectiveness of an advance notice letter on the recruitment of African Americans and Whites for a mailed patient satisfaction survey. J Aging Health. 2004;16:124S–136S.

- 56. Napoles-Springer AM, Santoyo J, Stewart AL. Recruiting ethnically diverse general internal medicine patients for a telephone survey on physician-patient communication. J Gen Intern Med. 2005;20:438–443.

- 57. Elliott MN, Klein DJ, Kallaur P, et al. Using predicted Spanish preference to target bilingual mailings in a mail survey with telephone follow-up. Health Serv Res. 2019;54:5–12.

- 58. Hunter J, Corcoran K, Leeder S, et al. Is it time to abandon paper? The use of emails and the Internet for health services research—a cost-effectiveness and qualitative study. J Eval Clin Pract. 2013;19:855–861.

- 59. Couper MP. Web surveys: a review of issues and approaches. Public Opin Q. 2000;64:464–494.

- 60. Klein DJ, Elliott MN, Haviland AM, et al. Understanding nonresponse to the 2007 Medicare CAHPS survey. Gerontologist. 2011;51:843–855.

- 61. Kahn KL, Liu H, Adams JL, et al. Methodological challenges associated with patient responses to follow-up longitudinal surveys regarding quality of care. Health Serv Res. 2003;38:1579–1598.

- 62. Saunders CL, Elliott MN, Lyratzopoulos G, et al. Do differential response rates to patient surveys between organizations lead to unfair performance comparisons?: evidence from the English Cancer Patient Experience Survey. Med Care. 2016;54:45–54.

- 63. Barron DN, West E, Reeves R, et al. It takes patience and persistence to get negative feedback about patients’ experiences: a secondary analysis of national inpatient survey data. BMC Health Serv Res. 2014;14:153.

- 64. Zaslavsky AM, Zaborski LB, Cleary PD. Factors affecting response rates to the Consumer Assessment of Health Plans Study survey. Med Care. 2002;40:485–499.

- 65. Edgman-Levitan S, Brown J, Fowler FJ, et al. Feedback Loop: Testing a Patient Experience Survey in the Safety Net. Oakland, California: California Health Care Foundation; 2011.

- 66. Hargraves JL, Cosenza C, Elliott MN, et al. The effect of different sampling and recall periods in the CAHPS Clinician & Group (CG-CAHPS) survey. Health Serv Res. 2019;54:1036–1044.

- 67. Setodji C, Burkhart Q, Hays R, et al. Differences in Consumer Assessment of Healthcare Providers and Systems (CAHPS) Clinician and Group Survey Scores by Recency of the Last Visit: implications for comparability of periodic and continuous sampling. Med Care. 2019;57:1.

- 68. Renfroe EG, Heywood G, Foreman L, et al. The end-of-study patient survey: methods influencing response rate in the AVID Trial. Control Clin Trials. 2002;23:521–533.

- 69. Groves RM, Singer E, Corning A. Leverage-saliency theory of survey participation: description and an illustration. Public Opin Q. 2000;64:299–308.

- 70. Burkhart Q, Orr N, Brown JA, et al. Associations of mail survey length and layout with response rates. Med Care Res Rev. 2021;78:441–448.

- 71. LeBlanc J, Cosenza C, Lloyd S. The effect of compressing questionnaire length on data quality. Presentation at the 68th American Association for Public Opinion Research Annual Conference. Boston, MA. 2013.

- 72. Edwards PJ, Roberts I, Clarke MJ, et al. Methods to increase response to postal and electronic questionnaires. Cochrane Database Syst Rev. 2009:MR000008.

- 73. Iglesias C, Torgerson D. Does length of questionnaire matter? A randomised trial of response rates to a mailed questionnaire. J Health Serv Res Policy. 2000;5:219–221.

- 74. Beckett MK, Elliott MN, Gaillot S, et al. Establishing limits for supplemental items on a standardized national survey. Public Opin Q. 2016;80:964–976.

- 75. Davern M, McAlpine D, Beebe TJ, et al. Are lower response rates hazardous to your health survey? An analysis of three state telephone health surveys. Health Serv Res. 2010;45:1324–1344.

- 76. Czajka JL, Beyler A. Declining Response Rates in Federal Surveys: Trends and Implications. Princeton, NJ: Mathematica Policy Research; 2016.
