Abstract

We present a biologically inspired recurrent neural network (RNN) that efficiently detects changes in natural images. The model features sparse, topographic connectivity (st-RNN), closely modeled on the circuit architecture of a “midbrain attention network.” We deployed the st-RNN in a challenging change blindness task, in which changes must be detected in a discontinuous sequence of images. Compared with a conventional RNN, the st-RNN learned 9x faster and achieved state-of-the-art performance with 15x fewer connections. An analysis of low-dimensional dynamics revealed putative circuit mechanisms, including a critical role for a global inhibitory (GI) motif, for successful change detection. The model reproduced key experimental phenomena, including midbrain neurons' sensitivity to dynamic stimuli, neural signatures of stimulus competition, as well as hallmark behavioral effects of midbrain microstimulation. Finally, the model accurately predicted human gaze fixations in a change blindness experiment, surpassing state-of-the-art saliency-based methods. The st-RNN provides a novel deep learning model for linking neural computations underlying change detection with psychophysical mechanisms.

SIGNIFICANCE STATEMENT For adaptive survival, our brains must be able to accurately and rapidly detect changing aspects of our visual world. We present a novel deep learning model, a sparse, topographic recurrent neural network (st-RNN), that mimics the neuroanatomy of an evolutionarily conserved “midbrain attention network.” The st-RNN achieved robust change detection in challenging change blindness tasks, outperforming conventional RNN architectures. The model also reproduced hallmark experimental phenomena, both neural and behavioral, reported in seminal midbrain studies. Lastly, the st-RNN outperformed state-of-the-art models at predicting human gaze fixations in a laboratory change blindness experiment. Our deep learning model may provide important clues about key mechanisms by which the brain efficiently detects changes.

Keywords: change blindness, deep learning, global inhibition, midbrain attention network, saliency map, superior colliculus

Introduction

Detecting critical changes in the environment is essential for adaptive survival. Forebrain regions in the prefrontal and parietal cortex, and the medial temporal lobe are all known to be involved in change detection (Beck et al., 2001; Pessoa and Ungerleider, 2004; Reddy et al., 2006). In comparison, the role of the midbrain in change detection is relatively unknown. Here, we develop a model of change detection inspired by the neural architecture of an evolutionarily conserved “midbrain attention network,” which comprises the superior colliculus (SC) and an associated inhibitory nucleus [isthmi pars magnocellularis (Imc); Knudsen, 2011].

Primarily studied for its role in the control of eye movements (Paré and Wurtz, 2001; Port and Wurtz, 2003) the SC plays an important role also in the detection of salient changes in sensory stimuli (Krauzlis et al., 2013; Sridharan et al., 2014; Herman and Krauzlis, 2017). For instance, neurons in the SC, and its extensively characterized nonmammalian vertebrate homolog, the optic tectum (OT), fire robustly in response to changes in luminance and size of stimuli (Knudsen, 2011; Liu et al., 2011; Barker et al., 2021; Heap et al., 2018). Many studies have shown that the SC/OT is involved in the detection of salient change events, in a variety of species, including frogs (Gaillard, 1990), birds (Wu et al., 2005), and rats (Comoli et al., 2003). More recent studies have shown that neurons in the primate SC produce phasic bursts of activity in response to near-threshold changes in stimulus color saturation (Herman and Krauzlis, 2017). Moreover, reversibly inactivating the primate SC produces deficits with detecting changes in motion-direction change detection tasks (Zénon and Krauzlis, 2012), and microstimulating the SC enhances the ability to detect changes (Cavanaugh and Wurtz, 2004; Cavanaugh et al., 2006). The SC/OT along with the inhibitory (GABAergic) nucleus Imc (Fig. 1A) are hypothesized to play a key role in signaling of the highest priority stimulus in dynamic environments (Knudsen, 2018).

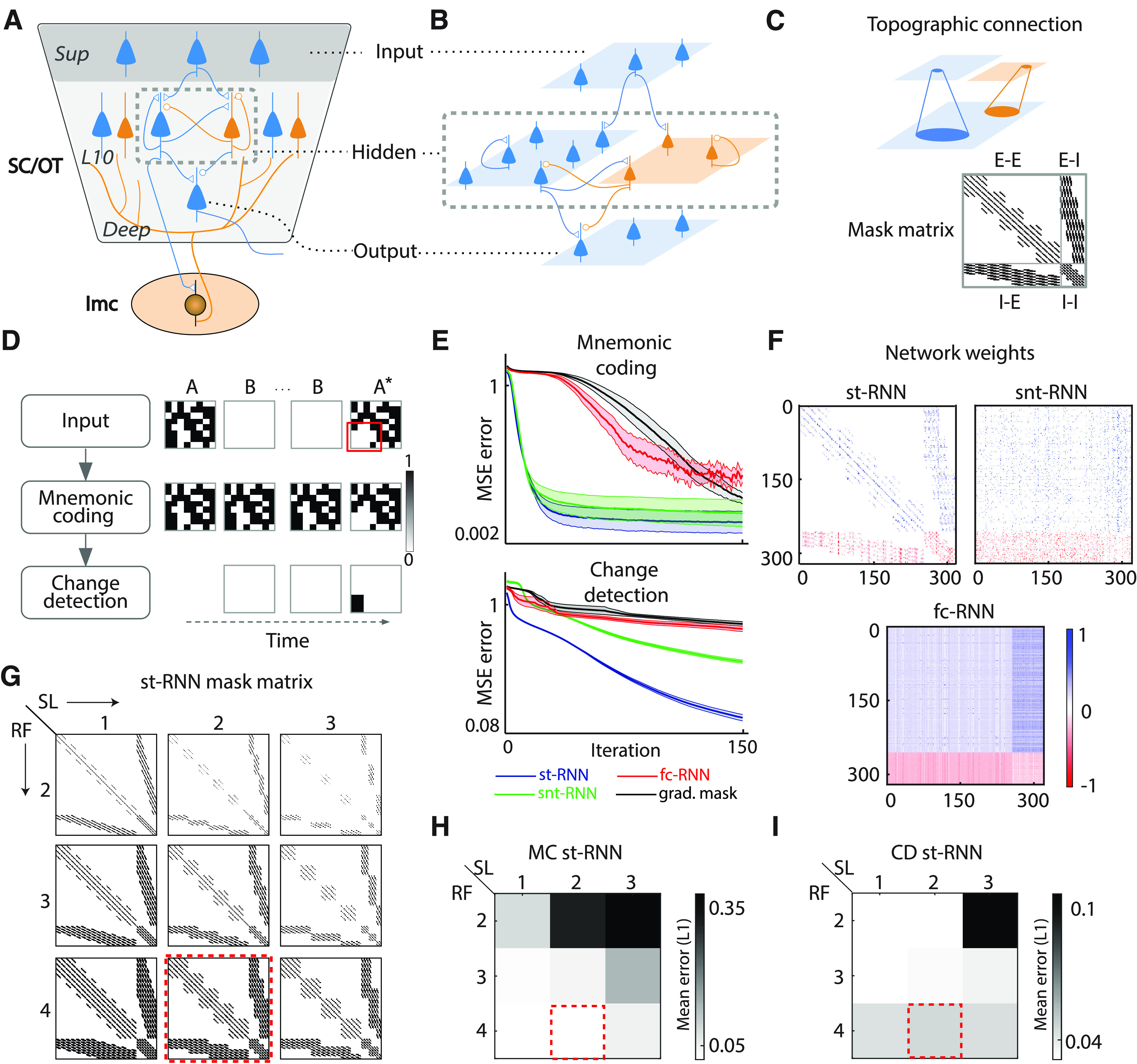

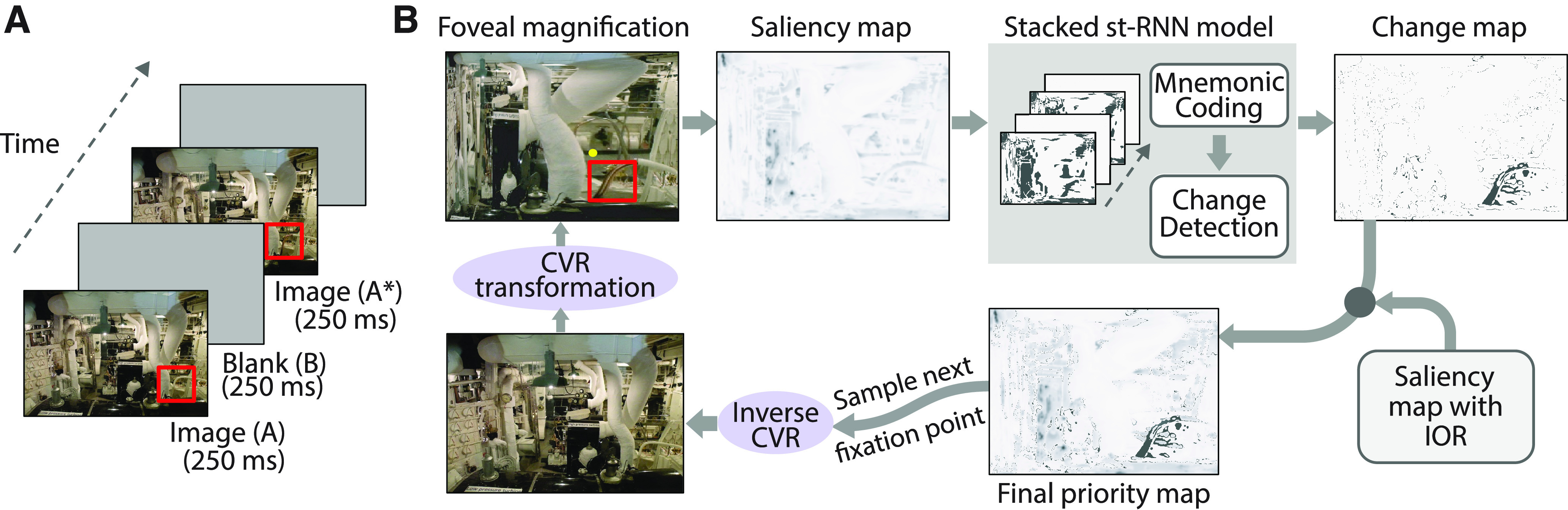

Figure 1.

A midbrain-inspired st-RNN model. A, Schematic showing key components of the midbrain attention network (Knudsen, 2018). The SC, or its non-mammalian vertebrate homolog, the OT, is a multilayered structure with recurrently connected excitatory (E; blue) and inhibitory (I; orange) neurons. Superficial layers (Sup) receive visual inputs whereas intermediate and deep layers (L10, Deep) project to other brain regions and to oculomotor centers. Dashed gray rectangle, Neurons in layer 10 that project topographically to a midbrain GABAergic nucleus (Imc; lower ellipse), which provides global inhibition across the SC/OT neural representation (diffuse orange connections). B, Schematic of st-RNN, with connectivity inspired by the midbrain network. Blue: E-neurons, orange: I-neurons, triangles: E-synapses, circles: I-synapses. Input layer (top) and output layer (bottom) comprise 8 × 8 E-neurons with feedforward connections. Dashed gray rectangle: hidden layer comprising 16 × 16 E-neurons and 8 × 8 I-neurons with recurrent connections. C, Top, Schematic of topographic connectivity: each neuron can connect to a spatially restricted neighborhood of neurons only, for both feedforward and recurrent connections. Bottom, Mask matrix (320 × 320) showing permitted (topographic) recurrent connections in the hidden layer. First 256 rows (and columns) correspond to E-neurons and the last 64 rows (and columns) correspond to I-neurons. Each cell (i,j) represents a connection from neuron j to neuron i. E-E and E-I, connections from excitatory to other excitatory or other inhibitory neurons, respectively; I-E and I-I, connections from inhibitory to other excitatory or other inhibitory neurons, respectively. Black: permitted connections, white: disallowed connections. D, Change detection by the st-RNN. Top row, An exemplar binary patch sequence presented as input to the model. A: original image patch; B: blank; A*: changed image patch. Black: “active” pixels; white: “inactive” pixels; red box: location of change (pixels in the lower left). E, Top, Mean squared error (MSE) (log-scale) over the course of training iterations for the mnemonic coding st-RNN (blue), a conventional fc-RNN (red), an RNN with sparse, but not topographic, connectivity (snt-RNN, green), and an fc-RNN with gradients masked with a sparse matrix during learning (black). Shading: SEM across n = 6 different weight initializations and learning rates (Materials and Methods). Bottom, Same as in the top panel, but for the respective change detection RNNs of each network. Other conventions are the same as in the top panel. F, Top left, The final connectivity matrix learned by the mnemonic coding st-RNN. Blue: excitatory synaptic weights (positive values); red: inhibitory synaptic connection weights (negative values); white: no connectivity (synaptic weight of zero). Top right, Same as in the top left panel, but for the snt-RNN. Bottom, Same as in the top left panel, but for the fc-RNN. G, Connectivity masks used for training mnemonic coding (MC) st-RNN and change detection (CD) st-RNN with different sparsity levels (SL) of connectivity and receptive field (RF) sizes of localized, topographic inputs (Materials and Methods). Columns, Left to right, Connectivity patterns with increasing sparsity levels. Rows, Top to bottom, Connectivity patterns with increasing RF sizes. Other conventions are the same as for the mask matrix shown in panel C. H, Performance of the MC st-RNN, quantified with the mean L1 error, during the maintenance epoch, for different sparsity levels (columns) and receptive field sizes (rows) corresponding, respectively, to the connectivity mask matrices shown in panel G. I, Same as in panel H but showing performance of the CD st-RNN. Other conventions are the same as in panel H. G–I, Red dashed squares, Sparsity levels and receptive field sizes used in subsequent model simulations.

We develop a midbrain inspired deep learning model of change detection, specifically, for the challenging scenario of “change blindness”: the surprising inability to detect salient changes in visual scenes when attention is not deployed at the location of change (Rensink et al., 1997; Gibbs et al., 2016). Change blindness is assessed, in laboratory settings, by presenting a pair of alternating images that differ in some important detail, typically, with intervening blank frames (“flicker” paradigm; Rensink et al., 1997). In such tasks, subjects are instructed to scan the images by moving their eyes to different parts of the image to identify the location of change. Both human observers and models must address a key challenge when detecting changes in such change blindness tasks. If the pair of images were presented on consecutive frames, a motion-like signal would occur at the location of change, rendering it relatively easy for human observers to detect the change (Tse, 2004). Similarly, a computational model can accomplish change detection with the relatively trivial operation of computing a difference in input pixel values across successive frames. On the other hand, in change blindness tasks changes occur interspersed by a blank frame, which precludes the appearance of motion signals localized to the change. Consequently, simple operations, like pixel-level differencing of the input, do not suffice to localize the change in change blindness tasks.

To model change detection in this challenging setting, we turn to recurrent neural network (RNN) models. RNNs constitute a versatile class of deep learning models that are routinely deployed for modeling various cognitive behaviors (Sussillo and Barak, 2013; Sussillo, 2014). These include cognitive tasks involving perceptual decision-making, multisensory integration, working memory (Song et al., 2016), delayed estimation, change detection, forced choice comparison (Orhan and Ma, 2019), signal detection and context dependent discrimination (Mastrogiuseppe and Ostojic, 2018), among others. RNNs are also increasingly used for accurate decoding of neural dynamics in brain machine interfaces (Sussillo et al., 2012; Pandarinath et al., 2018), suggesting their potential utility for understanding the link between complex neural dynamics and cognitive states.

Here, we introduce a biologically constrained RNN, which we call a sparse, topographic RNN (st-RNN). The st-RNN is closely modeled on detailed neuroanatomy of the midbrain SC/OT and the Imc (Knudsen, 2018; Fig. 1A). In addition to being trained more rapidly, the st-RNN achieves change detection with far fewer connections than conventional RNN models. Moreover, low-dimensional dynamics of the st-RNN provide essential insights into key neural computations underlying change detection in the midbrain. Lastly, by having the st-RNN model drive an eye movement (saccade) algorithm, we predict human gaze fixations in a laboratory change blindness experiment, surpassing state-of-the-art. In sum, the st-RNN provides a novel deep learning framework that may enable linking neural computations underlying change detection with their associated psychophysical mechanisms (Krauzlis et al., 2013; Sridharan et al., 2014).

Materials and Methods

Ethics declaration

Behavioral data from a change blindness experiment reported previously (Jagatap et al., 2021) were re-analyzed for this study. For that study, informed consent was obtained from all participants; other details are reported elsewhere (Jagatap et al., 2021). Experimental protocols were approved by the Institute of Human Ethics Review board at the Indian Institute of Science (IISc), Bangalore.

st-RNN model

We designed an RNN model, incorporating neurobiological constraints, by modifying the architecture of conventional RNNs. We implemented sparse, topographic connectivity among neurons of every layer, while also incorporating Dale's law, which specifies that each neuron connects with its downstream targets exclusively with either excitatory or inhibitory synapses. Thus, the connection weight matrix between different layers of the network was specified as:

| (1) |

where SM(.) is a sign matrix, whose elements (+1 or −1) determines E versus I connectivity, respectively, among the different neurons, CM(.) is a mask matrix that determines the connections permissible under topographic connectivity constraints (Fig. 1C), × denotes matrix multiplication, denotes element-wise multiplication, and []+ denotes rectification (Song et al., 2016). The topographic connection mask matrix (CM) was specified as follows (Fig. 1C): each hidden layer neuron received input from overlapping tiles of either 4 × 4 (input-hidden layer E and I, hidden layer E-E, I-E, I-I) or 8 × 8 neurons (hidden layer E-I). Similarly, each hidden layer neuron (E and I) projected to tiles of 4 × 4 neurons in the output layer. Neurons proximal to the corners of each layer received input from and projected to only one such tile. Neurons proximal to the edges, either horizontal or vertical, received input from and projected to two overlapping tiles. Neurons in the center of each layer received input from, and projected to, four overlapping tiles. Levels of overlap were different for different connections (one neuron for E-E connections, two neurons for input-E, input-I, I-E, and I-I connections, and four neurons for E-I connections). The mask matrix shown in Figure 1C represents unfolding of the neurons in E and I layers in a column-first manner, with E neurons first, followed by I neurons. Note that this pattern represents constraints on connectivity in the network; the final connection weights were determined following network training (see below, st-RNN training and testing). As the precise ratio of E:I neurons in the SC is not known, we adopted the canonical 4:1 ratio, observed in the neocortex (Xue et al., 2014). Thus, each st-RNN module comprised an input layer (8 × 8 = 64) with only excitatory neurons, a hidden layer comprising separate excitatory (16 × 16 = 256) and inhibitory (8 × 8 = 64) neuron layers, and an output layer (8 × 8 = 64) also comprising only excitatory neurons. Finally, RNN dynamics were simulated with ordinary differential equations, with dynamics discretized in time, as follows:

| (2) |

where xt, rt and ot are the activity of the input, recurrent layer, output neurons at time t, respectively; st represents a latent variable, that can be construed as the net input into the recurrent layer; Ueff, Veff, and Weff are input-hidden, hidden-output and recurrent hidden layer connectivity matrices, bo is output bias, f(.) is the hyperbolic tangent function, and g(.) is a sigmoid nonlinearity. The results presented here were fairly robust to the choice of these nonlinearities; for example, a sigmoid nonlinearity in the hidden layer also produced results similar to those presented here. All simulations were performed with the Tensorflow framework (Abadi et al., 2016).

In addition, we tested the effect of (1) varying the level of sparsity in the connections, and (2) varying the receptive field (RF) size of local, topographic connections, both among the hidden layer units. RF sizes were varied by interconnecting neighboring neurons in blocks of size (RF or r = 2, 3, 4; Fig. 1G). Sparsity levels were controlled, independently of RF sizes, by skipping connections (varying the stride) across adjacent neurons in a block, so that the lowest sparsity level (SL = 1, densest connectivity) contained ∼45% of all possible connections within a block, whereas the intermediate (SL = 2) and highest (SL = 3) sparsity levels contained ∼40% and ∼35% connections, respectively (Fig. 1G).

Architecture incorporating a global inhibitory (GI) layer

In some simulations, we also modeled input to the hidden layer units of each st-RNN module from a GI layer (10 × 8 = 80 neurons; Fig. 4A). The GI layer received strong convergent input from the input layer units, and its output was obtained by topographic spatial convolution of the input layer activity with a box-filter of size 11 × 11, followed by nearest-neighbor downsampling to 10 × 8 resolution, and binarization by rounding. These input weights to the GI layer were not trainable (fixed weights). The resultant 10 × 8 binary-map was treated as the output of GI layer. No recurrent connections occurred in the GI layer. The GI layer projected to the hidden layer neurons (recurrent units) of the st-RNN modules through inhibitory connection weights (Ug; Eq. 3, below). These weights were trainable and were randomly initialized before training. The hidden layer unit activations were, then modeled as:

| (3) |

where is the output of the GI units, and Ug is inhibitory connection matrix from the GI units to the hidden layer units of the st-RNNs. Note that only one st-RNN module was trained along with the GI layer. For modeling images with tiled st-RNNs, GI layer weights were replicated across all, tiled st-RNN modules. Simulations in Figures 4, 7, 8 were performed with this version of the network incorporating the GI layer.

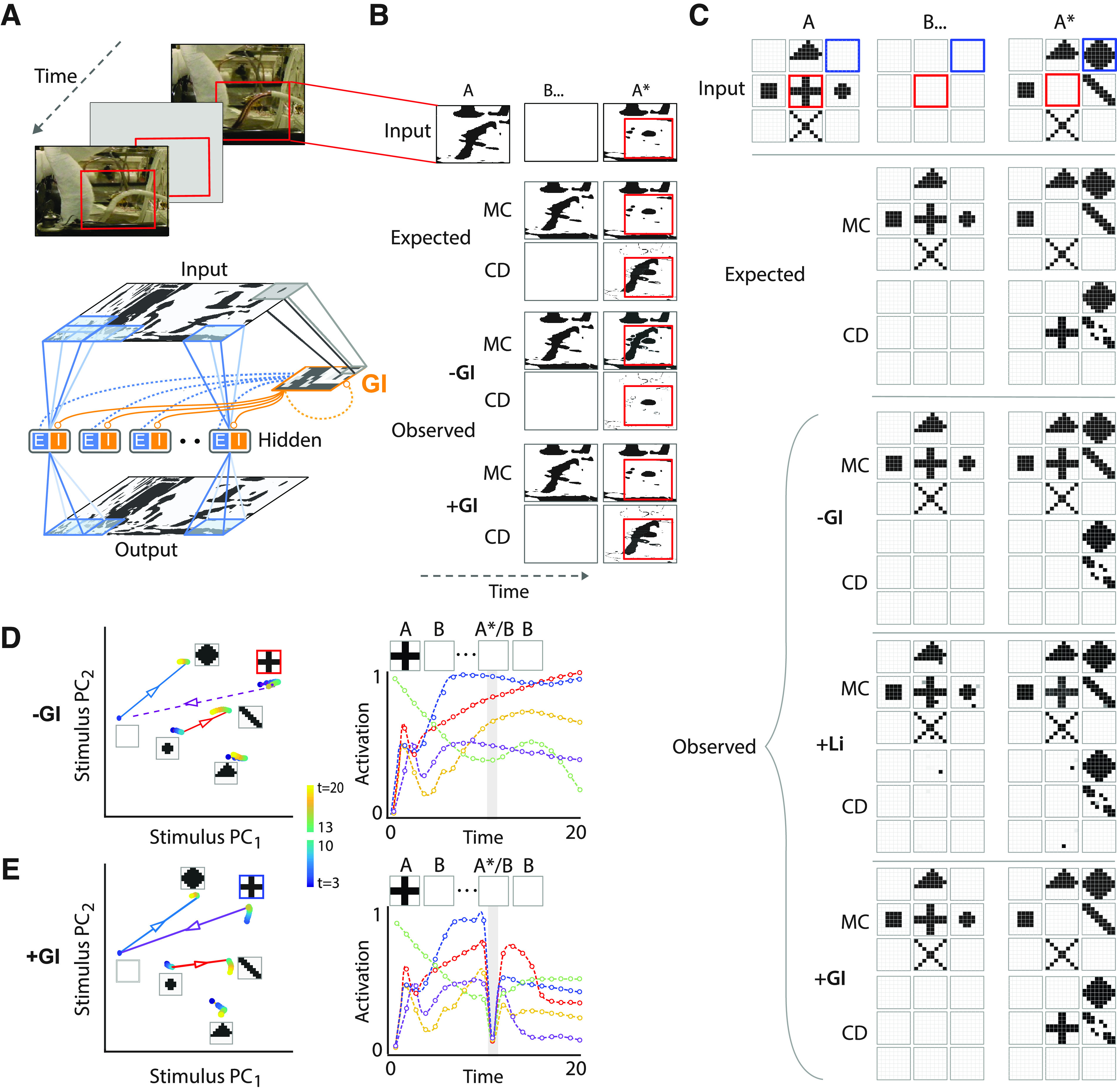

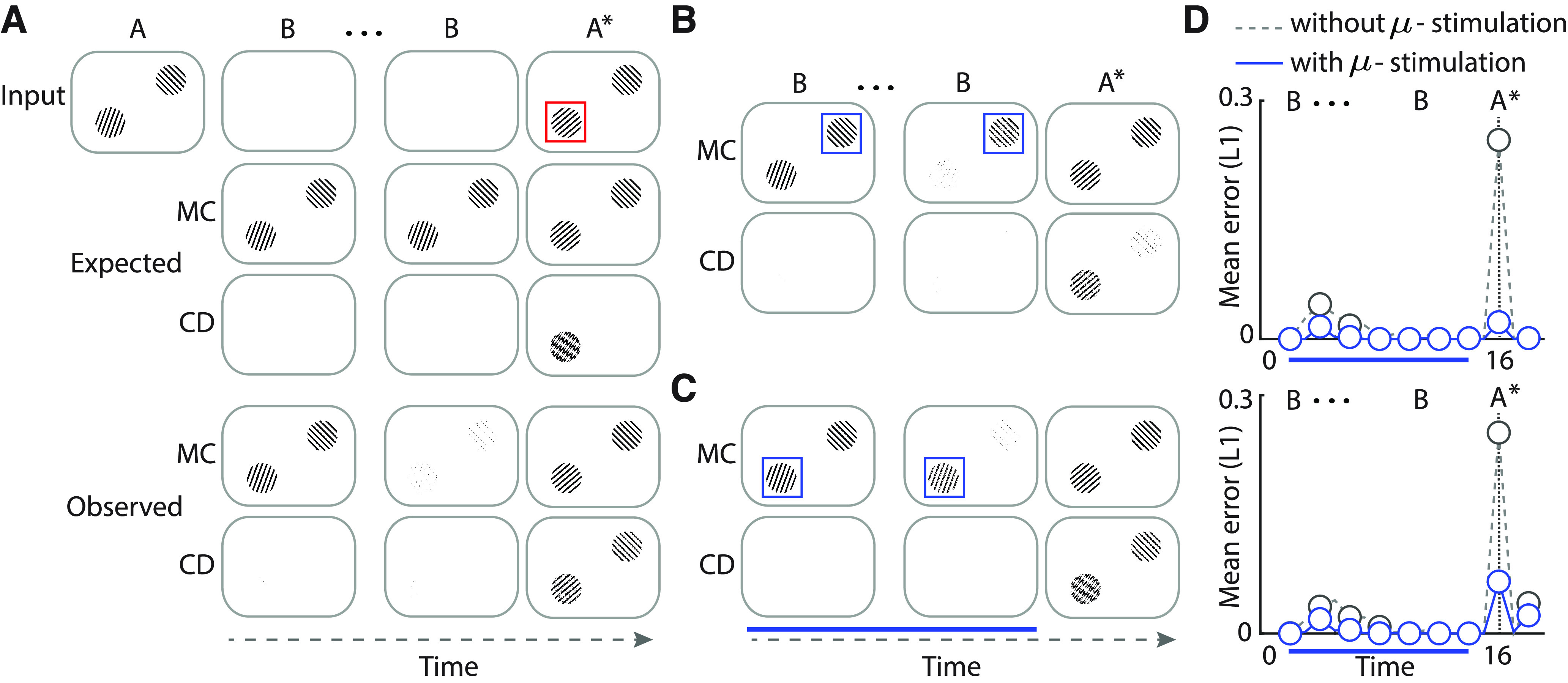

Figure 4.

Global inhibition enables change detection with natural images. A, Top, Schematic of change detection with a representative natural image (resolution: 1024 × 768), interspersed by blanks. Red rectangle: location of change (not part of the image). Bottom, 8 × 8 st-RNN modules tiled to represent the full resolution image (overlapping blue patches in both input and output map). st-RNN modules were tiled with 50% overlap along both horizontal and vertical directions, such that each 4 × 4 patch in the image (except for patches closest to the border) was processed by four different st-RNN modules. Orange outline: global inhibition (GI) layer, mimicking the architecture of the Imc connection (Fig. 1A, orange nucleus). Gray lines: convergent, topographic connections from input to the GI layer; orange circles: inhibitory connections from the GI layer to both E and I neurons in the hidden layer of each st-RNN module; dashed connections: recurrent excitatory connections from E neurons in the hidden layer to neurons in the GI layer (in blue) and recurrent inhibitory connections among neurons in the GI layer (in orange); these connections were implemented in one variant of the network incorporating the GI layer (Materials and Methods). B, Topmost row, Thresholded, binarized saliency map around the region of change (red box; see text for details). Second and third rows, The expected output (ground truth) of mnemonic coding (MC) and change detection (CD) st-RNNs, respectively. Fourth and fifth rows, Output of the trained MC and CD st-RNN models before incorporating the global inhibition layer (−GI). Sixth and seventh rows, Output of the trained MC and CD st-RNN models after incorporating the global inhibition layer (+GI). For all rows, the middle and right columns represent the output of the respective st-RNN during the blank (B) and change image (A*) epochs, respectively. Red outlines: location of change. C, Analysis of a toy-example with nine st-RNN modules tiled in a 3 × 3 square grid, with no overlap. Rows 1–3, Input to the st-RNN modules (1st row), and the expected ouputs of the MC st-RNN (2nd row), and CD st-RNN (3rd row). Rows 4–9, Outputs of the trained MC and CD st-RNN models before incorporating the global inhibition layer (–GI; 4th and 5th rows), after incorporating local (short-range) recurrent interactions (+Li; 6th and 7th rows), and after incorporating the global inhibition layer (+GI; 8th and 9th rows). Other conventions are the same as in panel B. Top row, Red box: on-off transition; blue box: off-on transition. D, Left, Projection of hidden layer activity for each st-RNN (panel C) into the mnemonic subspace, in the absence of global inhibition (−GI). Trajectories begin from the first blank, when the first image was maintained (blue shaded dots; t = 3–10), through a transition corresponding to the presentation of the change image (lines with superimposed arrowheads), followed by the second blank, when the change image was maintained (green to yellow shaded dots; t = 13–20). Insets, Input images for each st-RNN module from the toy example (panel C). The st-RNN failed to accomplish the on-off transition (“plus” shape to blank) successfully (dashed purple arrow). Right, Activity of five representative hidden layer units, each represented by a different color, of the mnemonic coding st-RNN corresponding to the middle pattern (“plus”) from panel C. In absence of global inhibition (–GI) unit activity failed to reset on presentation of the change image (A*). Dots: data for each bin; dashed lines: spline fits; gray shaded bar: time point (t = 11) corresponding to presentation of the change image. E, Same as in panel D but in the presence of global inhibition (+GI). Left, The st-RNN accomplished the on-off transition (“plus” shape to blank) successfully (solid purple arrow). Right, In the presence of global inhibition (+GI) unit activity “reset” on presentation of the change image (gray shading).

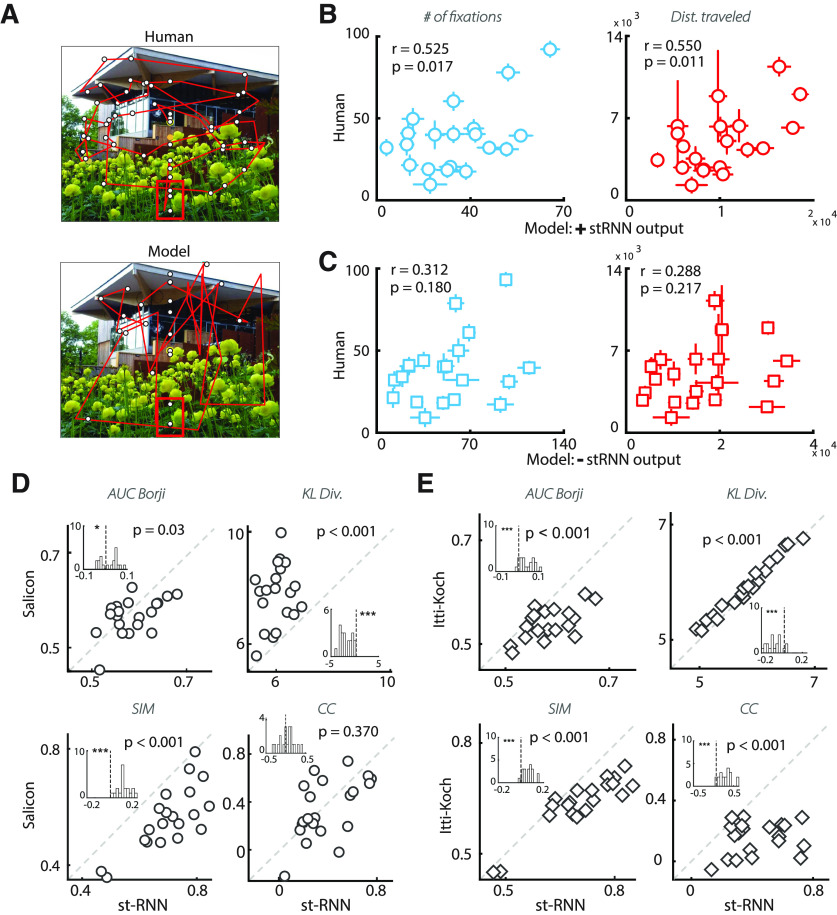

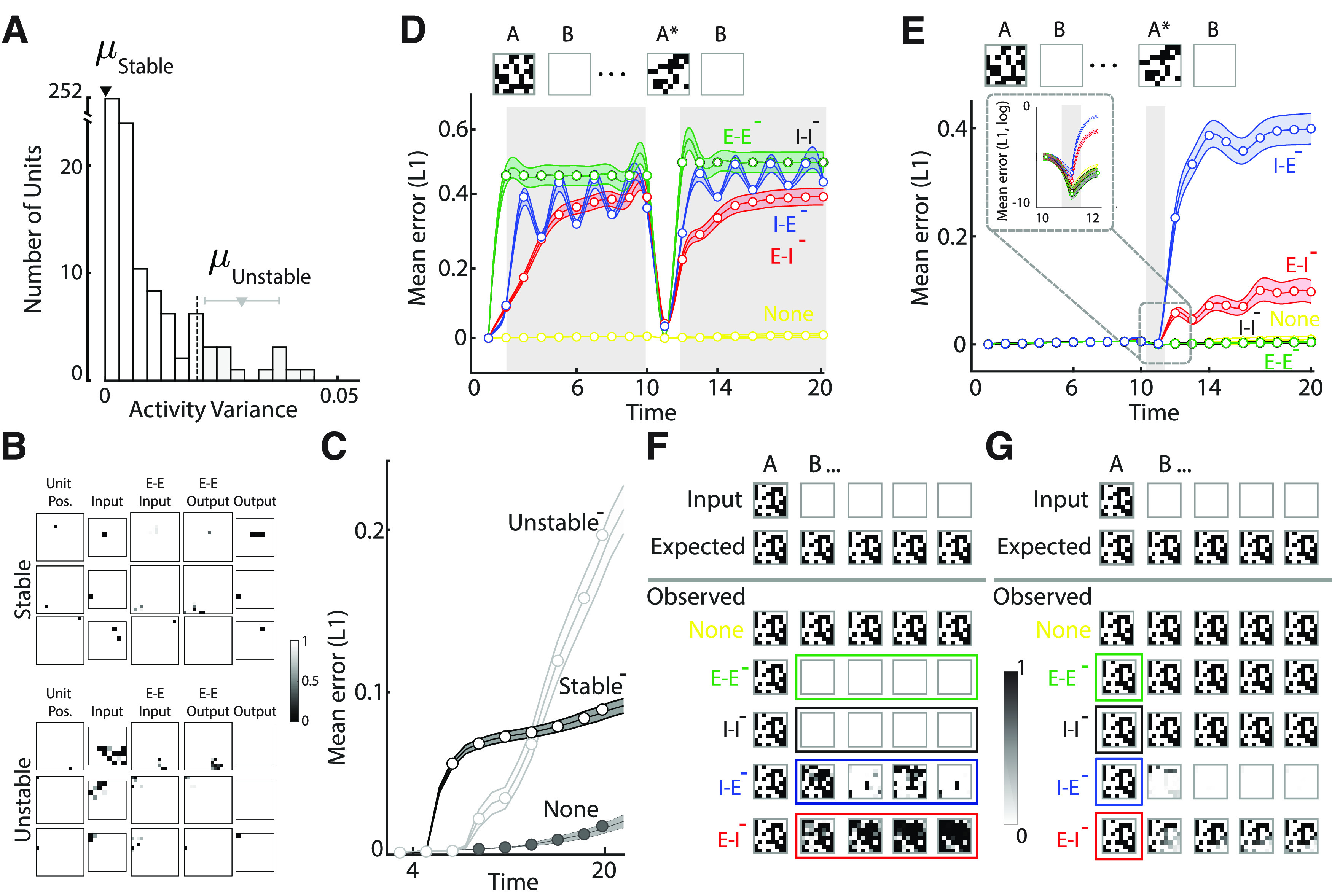

Figure 7.

A gaze model for simulating eye movements with the st-RNN model. A, A representative sequence of stimuli in the change blindness experiment. Images, with a key change between them (red box indicates location of change), were alternated for 250 ms each, with intervening blank frames, also presented for 250 ms (“flicker” paradigm). In the laboratory experiment, participants were required to scan the image and detect the change within a fixed trial duration (60 s). B, Steps involved in simulating sequential fixations with the st-RNN model. Clockwise from top left, Following fixation (yellow dot), the image was foveally magnified with a CVR transform. Following this a bottom-up saliency map was computed (Itti et al., 1998), thresholded and binarized (for details, see Materials and Methods). The temporal sequence of binarized saliency maps was provided to the stacked st-RNN model to obtain the Change map (output of the change detection st-RNN; top right). The Saliency and Change maps were then fused along with an IOR map to obtain the final Priority map (bottom; Materials and Methods). This final map was converted into a probability density and used to sample the next fixation point.

Figure 8.

st-RNN based gaze model mimics and predicts human strategies in a change blindness task. A, Comparison of gaze scan path for a representative human subject (top) versus a model's trial (bottom) on an example image from the change blindness experiment. Red box: location of change. B, Correlation between model (x-axis; average across n = 80 iterations) and human data (y-axis; average across n = 39 participants) for the number of fixations (left) and distance traveled (right) before fixating on the change region. Error bars: SEM. C, Same as in panel B, but for a model in which the priority map was computed after excluding the st-RNN output (see Materials and Methods). D, Distribution of four different saliency comparison metrics across 27 images for the fixation map predicted by the st-RNN model (x-axis) versus the map predicted by the Salicon algorithm (y-axis) (Huang et al., 2015). Clockwise from top: AUC (Borji), KL-divergence, CC and similarity. Diagonal line: line of equality (x = y). For all metrics, except for KL-divergence, a higher value implies better match with human fixations. Insets, Distribution of difference between the st-RNN and Salicon prediction for each metric. E, Same as in panel D but comparing fixation predictions of the st-RNN model (x-axis) against that of the Itti–Koch saliency prediction algorithm (y-axis). Other conventions are the same as in panel D.

We also trained a variant of the network in which the GI layer neurons received topographic excitatory input from the st-RNN hidden layer excitatory (E) neurons; these weights (matrix ; Eq. 4) were trainable. The GI layer comprised of 100 neurons, organized in a 10 × 10 grid and also contained all-to-all recurrent inhibitory connections (matrix ; Eq. 4). As before, GI units inhibited the st-RNN hidden layer units using weight matrix (Eq. 3). The GI layer unit activations were modeled as:

| (4) |

where represents the convergent input from the input layer (10 × 10), is a trainable weight matrix that transforms the input to GI layer dimensions. For modeling high-resolution images with tiled st-RNNs, GI layer unit weights were shared across all tiled st-RNN modules, with representing averaged hidden excitatory unit activations across all the tiled st-RNN modules. Simulations in Figures 5 and 6 were performed with this version of the network.

Figure 5.

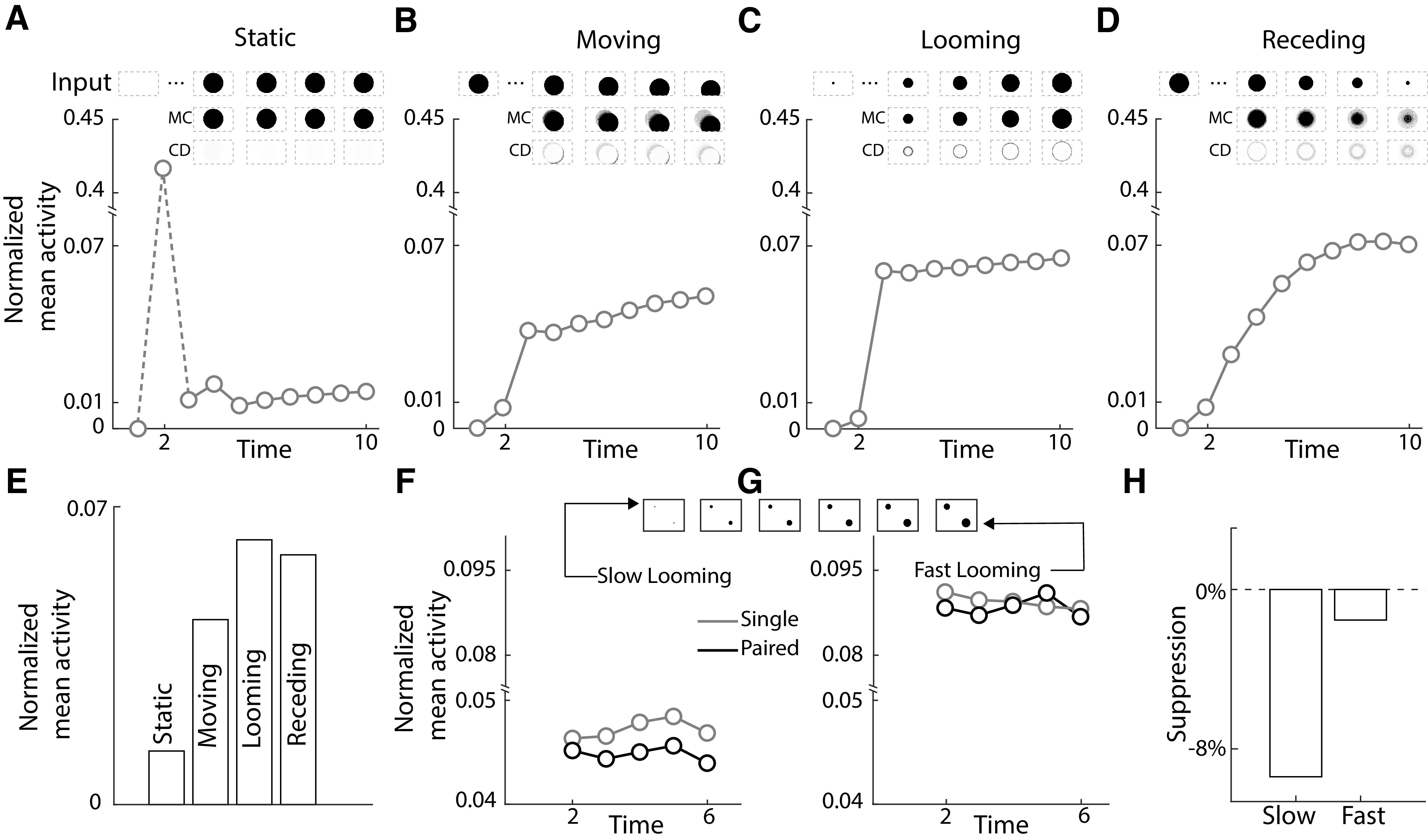

Model unit responses to static, dynamic and competing stimuli. A–D, Normalized mean activity of output units (n = 62,500) of the change detection st-RNN for static (A), moving (B), looming (C), and receding (D) stimuli. Mean activity was normalized by the maximum activation across all four stimulus classes. Insets, Input stimulus patterns for each, respective, simulation. Insets, First row, Input sequence corresponding to each stimulus type (A–D). Insets, Second and third rows, Mnemonic coding (second row) and change detection (third row) outputs corresponding to the respective stimulus type. E, Normalized mean activity of the change detection st-RNN output units averaged across the final seven frames (t = 3 to t = 10) for static (S), moving (M), looming (L), and receding (R) stimuli (see text for details). F, Same as in panels A–D except showing activity evoked by a slow-looming stimulus in the upper left quadrant when presented alone (“Single,” gray) or concurrently with a fast-looming stimulus (“Paired,” black). Inset, Paired input stimulus pattern. Other conventions are the same as in panels A–D. G, Same as in panel F, but showing activity evoked by the fast-looming stimulus. Other conventions are the same as in panel F. H, Suppression of the mean activity (percentage) for paired, as compared with single, across the last five frames (t = 2 to t = 6), for neurons representing the slow-looming (left bar) and fast-looming (right bar) stimuli, respectively.

Figure 6.

Simulated microstimulation rescues change detection deficits. A, Top row, Simulated laboratory change blindness task. Two oriented gratings were presented, one in each visual hemifield. The entire image spanned 1000 × 800 pixels and was encoded with 50,000 overlapping st-RNN modules. Following the blank (B), a new change image occurred in which one of the gratings (here, the grating in the left hemifield) underwent a change in orientation. Middle row, Output of the mnemonic coding (MC) st-RNN. Bottom row, Output of the change detection (CD) st-RNN. Red box: location of change, is shown for illustration only, and is not presented along with the visual input. Other conventions are the same as in Figure 4B. B, The output of the mnemonic coding (first row) and change detection (second row) st-RNNs following simulated, focal microstimulation of the right hemifield (no-change) grating representation alone (see text for details). C, Same as in panel B but following simulated, focal microstimulation of the left hemifield (change) grating representation alone. Other conventions are as in panel B. B, C, Blue box, Location of simulated microstimulation, is shown for illustration only and is not presented along with the visual input. Blue horizontal bar: duration of microstimulation. D, Quantification of change in performance following the simulated microstimulation experiments of panel B (top) and panel C (bottom), respectively. Top, Mean L1 error for units representing the right hemifield (no-change) grating without (gray dashed) or with (blue solid) simulated microstimulation. Dashed vertical lines: time of appearance of the changed image (A*). Other conventions are the same as in panel C. Bottom, Same as in the top but mean L1 error for units representing the left hemifield (change) grating. Other conventions are the same as in the top panel.

st-RNN training and testing

Two st-RNN modules operated, in sequence, to solve the change blindness task (Fig. 1D; see Results). Each st-RNN was trained by learning the following parameters: W, U, Ug, V, bo. Because mnemonic coding (maintenance) and change detection are independent, separable, operations, each st-RNN could be trained independently of the other, and with separate training datasets comprising 200,000 synthetic 8 × 8 binary patches. Each binary patch (A) was generated to provide input patterns of prespecified sparsity (proportion of active pixels) drawn from a uniform random distribution, ranging from 0.1 to 1. For each binary patch A, an alternate (change) patch A* was generated by setting pixels active or inactive randomly with 50% probability, independently for each pixel. During training, the input to each st-RNN comprised a sequence of 10 images, beginning with a binary patch (A), succeeded by a variable number of blanks (median = 2, range = 1–8), followed, finally, by the changed binary image (A*). The distribution of variable blanks was determined during training, by deciding with 50% probability, at each time step, whether a blank or the changed image would be shown. We employed a variable number of blanks to ensure that the RNN learned a general strategy of change detection, which did not depend on the precise temporal interval between the original and changed image. The order of presentation of A and A* were counterbalanced to ensure that the number of “onset” versus “offset” type changes (Fig. 1D) were matched in the training dataset. Each st-RNN was trained, in a supervised manner, to minimize the average mean squared error (MSE) between st-RNN output and expected (ground-truth) activation in the output layer (optimizing the L2-loss function) across time steps. Both st-RNNs were trained until the change in the loss function plateaued (Fig. 1E), and subsequently tested on a validation corpus of 20,000 new image patches each.

st-RNN weights were initialized to ensure that they obeyed the constraints on topography and Dale's law. For this, we adopted truncated Gaussian initialization to initialize connectivity matrices with random positive or negative values; the truncated distribution was chosen to have a mean and standard deviation of 0.2 and 0.01, respectively. During learning weights were masked with the sign mask matrix, SM, to segregate E and I connection types, as described above. Unlike Song et al. (2016), we did not adopt explicit regularization to encourage learning of sparse connections. On the other hand, the topographic constraint, defined by the matrix CM above, enforces sparsity by permitting only spatially local connections to be learned (i.e., to assume a non-zero value). Bias parameters of the output connections were initialized with a constant value of zero, throughout.

Training was performed with minibatches of size 128 using adaptive moment estimation based stochastic gradient descent (i.e., Adam optimizer) with a learning rate (L) of 10−4, and exponential decay rates (Kingma and Ba, 2014; is assigned a small value to prevent division by zero). Training was performed by treating the st-RNN as a deep feedforward architecture with identical layers, and shared parameters, unrolled at each timestep (Pascanu et al., 2013). We also performed backpropagation through time over mini-batches of sequence length 10, which enabled the network to learn long-term relationships between the input stimulus and the expected output. Such an approach is particularly essential for the mnemonic coding st-RNN, which needs to maintain its persistent representation in the absence of input, and over the variable durations of the blank epochs (one to eight blanks).

For simulating the fully connected RNN (fc-RNN) network (Figs. 1E,F), the connectivity equations were the same as above except that we removed the constraint on sparse topographic connectivity (matrix CM; Eq. 1). Other aspects of training and testing were identical to that of the st-RNN network, described above. Both st-RNN and fc-RNN were trained with different learning rates (L = 0.0001, 0.005, 0.001, 0.05, 0.01) and five random weight initializations; the shading in Figure 1E reflects the standard error across these different learning rates and initializations.

We also trained and tested the st-RNN modules with inhibitory input from a global, inhibitory layer (GI layer; Fig. 4A). Training exemplars were chosen similarly as described above for training the model without the GI layer, except that in this case we also included completely blank images as potential change (A*) images. To mimic change in global context (e.g., the appearance of a new image), two 8 × 10 patterns, each with randomly activated pixels (binary maps with sparsities ranging from 0.4 to 0.6) provided GI input to all hidden layer units at two distinct time-steps, at the time of appearance of A and A*, respectively, with blanks in between. While we could have chosen to provide input directly from the input image to the GI layer, random inputs sufficed to illustrate the following idea: for flexible updating, GI layer neurons needed to be activated during the presentation of new images but could remain agnostic to the precise content of those images. All other training and testing steps were the same as described above for the st-RNN module. Again, as before, the st-RNN network with global inhibition was trained with 200,000 training sequences and validated with 20,000 new sequences. When tested with the full, high-resolution image, the 8 × 10 patterns that provided global context, were drawn from the output of the GI layer (Fig. 4A). A similar procedure was used for training the st-RNN modules with recurrent connections to the GI layer (Fig. 4A, dashed connections).

Estimating the total number of connection weights and parameters

Based on the above architecture, we estimated the total number of weights in an st-RNN module (8 × 8 input/output, hidden layer with 16 × 16 E neurons and 8 × 8 I neurons, and a 10 × 8 GI layer) to be 46 080; these included feedforward connections from neurons in every layer to each successive layer (input, hidden, output), feedforward connections from the GI layer, as well as recurrent connections between neurons in the hidden layer. For designing an st-RNN to encode a 1024 × 768 high-resolution image with each neuron in the input layer encoding one image pixel, the number of units in each layer would have to be scaled up by ∼104 times the current configuration. This would yield a scaling up of the total number of weights in the network to ∼3.8 × 1012, a prohibitively large number that is impractical for training. As a result, we trained a single 8 × 8 module and tiled this module (with shared weights) across both x- and y-directions to cover the span of the 1024 × 768 high-resolution image (see Materials and Methods, Modeling change detection with high-resolution images).

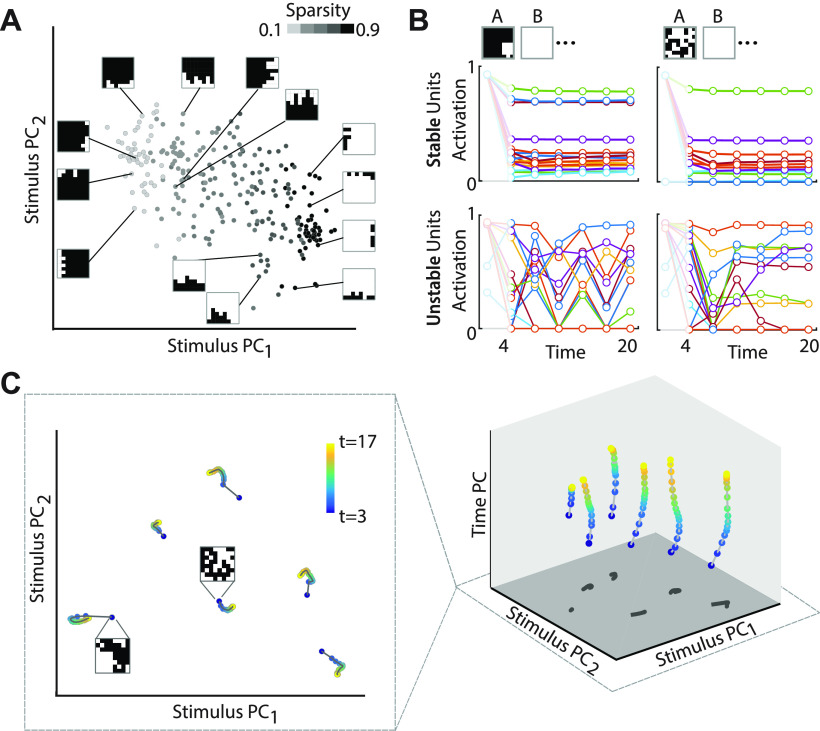

Computing the mnemonic subspace

We examined the representation of the st-RNN hidden layer unit dynamics in a mnemonic subspace (Druckmann and Chklovskii, 2012; Murray et al., 2017). Each unique input pattern is expected to have a corresponding unique latent representation in this mnemonic subspace and trajectories of the latent representation of each input pattern should be stable (and not drift) in the absence of input (Druckmann and Chklovskii, 2012; Murray et al., 2017).

To obtain this mnemonic subspace, we performed principal components analysis (PCA) on the time-averaged activity of the of hidden layer units of the mnemonic coding st-RNN, during the blank period; for this analysis, activity in the first two time bins was excluded, to permit activity in the “stable” neurons to stabilize (Fig. 2B). Thus, PCA was performed on an mxn matrix, where m is the number of unique input patterns and n = 320, including 256 excitatory and 64 inhibitory hidden layer neurons. The first two principal component (PC) vectors were designated as “Stimulus PC1” and “Stimulus PC2”; these vectors span the two-dimensional mnemonic coding subspace (Fig. 2A). The activity of the hidden layer neurons at each time-step was then projected onto this subspace to obtain a two-dimensional trajectory during the maintenance epoch, following each input patch presentation (Fig. 2C, left). Next, a “Time PC” was obtained by computing first principal direction after subtracting the time-averaged activity across each stimulus. Thus, PCA was performed on a (m.t)xn matrix, where t = 17 is the number of time bins during the delay period (excluding the first three time bins). The Time PC was then orthogonalized relative to the Stimulus PCs by finding the closest orthogonal projection to the two-dimensional mnemonic subspace. Finally, the hidden layer neuron activity was projected into this Stimulus+Time PC representation to obtain three-dimensional trajectories of the hidden layer units during the maintenance epoch. 95% of the variance in the temporal dynamics could be explained by as few as 40 time PCs; similarly, 95% of the variance in the stimulus patterns could be explained by as few as 34 stimulus PCs. Given that the full dimensionality of the hidden layer was 320 (256 excitatory and 64 inhibitory neurons), nearly all of the variance (dynamics or patterns) could be accounted for, therefore, with subspaces that were nearly 8- to 10-fold smaller in dimensionality. Unit activities during specific epochs were then projected onto this mnemonic subspace (Figs. 2A,C, 4E,D).

Figure 2.

Stable maintenance occurs in a “mnemonic” subspace despite heterogenous dynamics of individual units. A, Projection of input stimulus patterns in the mnemonic subspace. “Stimulus PC1” and “Stimulus PC2” correspond to the two PC dimensions with the largest variance across time-averaged representations of the mnemonic coding st-RNN hidden layer units. Points with lighter shades of gray represent input patterns of lower sparsity (proportion of active pixels). Insets, Specific 8 × 8 input patterns. B, Top row, Activity dynamics of the top 16 hidden layer units with the most “stable” dynamics (see text for details) in the mnemonic coding st-RNN during the blank epoch (t = 4–20); each color denotes dynamics for a different unit. Dots: data for every third time bin; lines: data for each time bin. Bottom row, Same as in top row but for the top 16 hidden layer units with the most “unstable” dynamics. Left and right columns, Results for two exemplar input patterns. Several units show overlapping activity profiles. C, Left, Low-dimensional trajectories obtained by projecting the hidden layer unit activity onto the mnemonic subspace during the maintenance epoch. Each cluster of points corresponds to a different input 8 × 8 pattern (insets). Blue to yellow: time points ranging from early (t = 3) to late (t = 17) in the blank epoch. Right, Same as in the left panel, but trajectories plotted with an additional dimension along the z-axis indicating a “Time PC” corresponding to a PC dimension with maximal variance in input-averaged activity across time that is also orthogonal to the mnemonic subspace. Dark-gray trajectories on the xy-plane indicate the projection of the trajectories in the mnemonic subspace.

Stable network output despite unstable activity in individual units

We seek to show analytically how stable activity can emerge in our st-RNN network despite unstable dynamics in individual neurons (Fig. 2B,C). We derive below a sufficient condition for stability of the output representation in our model and verify that this condition holds in our simulated network, modifying a previous framework (Druckmann and Chklovskii, 2012). For convenience, we rewrite the equations for the st-RNN module dynamics (Eq. 2) as:

| (5) |

where is a nonlinearity that encapsulates both and the rectification . To ensure that the output of the st-RNN network is stable, must be unchanging with time . In Equation 5 above, biases are constants. Therefore, for stable output, must be unchanging with time. Because ours is a discrete time model, we represent this condition as . We rewrite this condition, based on st-RNN module dynamics equations as:

| (6) |

where we have dropped the superscripts “eff” from the connectivity matrices, for simplicity. During the maintenance epoch . Setting this value, and expanding the matrix multiplications above in terms of their component terms:

| (7) |

where represents the vector of output connection weights from neuron in the hidden layer to all neurons in the output layer and represents the recurrent connection weight from neuron to neuron , both in the hidden layer. To simplify the analysis, we linearize the equation (by removing the nonlinearity ) and write Equation 7 as:

| (8) |

where in the last step, we have exchanged dummy subscripts and in the first term on the left-hand side. Simplifying further:

| (9) |

For this condition to be true for all pattern dynamics , it is sufficient that the term inside the parenthesis in the left-hand side of the Equation 9 is zero, i.e., it can be stated as:

| (10) |

Equation 10 is, therefore, a sufficient condition for stable activity to emerge in the network output in the absence of external input. We test whether this condition was met in our trained st-RNN network. Because of the simplification associated with linearization of the term (Eq. 8), and because the tanh is a saturating nonlinearity, we normalized the vector on either side before comparing their similarities:

| (11) |

where the asterisk denotes unit normalization of the magnitude of the respective vector. In the trained mnemonic coding network, we quantified the similarity between the unit normalized st-RNN output vectors (320 vectors of size ) on the right-hand side and left-hand side of Equation 11 with their, respective, dot products. A dot product of indicates overlap, and lower magnitudes of dot products indicate progressively less overlap. In the case of our st-RNN mnemonic coding network, we discovered that the dot-product magnitude was (median [ confidence intervals]; across n = 108 output vectors with non-zero magnitudes). These results indicate the stability condition (Eq. 11) was reasonably well met in our data, validating our analysis of output stability in the st-RNN network.

Experiments with silencing specific connections, stable or unstable units

We evaluated change detection performance after silencing specific types of recurrent, hidden-layer connections (E-E, E-I, I-E, or I-I), while holding all of the other types of connections intact (Fig. 3D–G). This was achieved by simply setting the respective weights in the Weff matrix to zero. In addition, we examined the relevance of units with different kinds of dynamics, stable and unstable, for robust change detection. For this, we computed the variance of neural activity during the maintenance period (blank epoch) averaged over 500 random input stimulus patterns. Each hidden neuron was then classified along an axis of most “stable” to most “unstable” by sorting them according to its respective averaged variances. We grouped the top and bottom 5th percentile of these neurons into a “stable” (n = 16) and “unstable” (n = 16) set, respectively. Finally, to evaluate the relative importance of these “stable” versus “unstable” neurons for pattern maintenance, we turned off the output activity of the “stable” and “unstable” subsets, separately, and tested the effect on maintenance. As a measure of the fidelity of maintenance, we computed the mean absolute deviation between the expected output (ground truth) and model output at each time-step (Fig. 3C). This analysis was done using st-RNN module including the GI layer.

Figure 3.

Distinct contributions of unit and connection subtypes to change detection. A, Distribution of variance of activity during the maintenance epoch for all hidden layer units of the mnemonic coding st-RNN. Black and gray inverted triangles: mean activity variance of the units exhibiting the most stable and the most unstable dynamics respectively; dashed vertical line: unstable units with top 5th percentile of activity variance. B, Connectivity kernels of representative stable (top panel) and unstable (bottom panel) excitatory units in the mnemonic coding st-RNN. First column, The unit's location in a 16 × 16 grid. Second column, Feedforward connections from the 8 × 8 input layer to the corresponding unit in the respective row. Third column, Recurrent input from other excitatory hidden layer units. Fourth column, Recurrent output to other excitatory hidden layer units. Last column, Feedforward connections to the output layer units. C, Mean absolute error (L1 norm) between the expected and observed output of mnemonic-coding st-RNN during maintenance (t = 4–20) after silencing the top 5% of stable (“Stable−”; black open circles and curve) and top 5% of unstable (“Unstable−”; gray open circles and curve) units. Dashed line and filled circles: mean absolute error with all units intact (“None”); shading: SEM (n = 500 input patterns). D, Mean absolute error (L1 norm) between the expected and observed output of the mnemonic coding st-RNN following selective silencing of each type of recurrent connection: E-E (green), I-I (black), I-E (blue), and E-I (red). Connections were silenced during the maintenance epochs alone (gray shading, t = 2–10 and t = 12–20). Yellow: mean absolute error but with all connections intact (None); dots: data for specific time points; lines: spline fits; shading: SEM (n = 500 input patterns). E, Same as in panel D but following silencing of recurrent connections only during the presentation of the new image, A* (t = 11; gray shading). Inset, Logarithmic scale plot magnified to show mean absolute error at the time of the change. Other conventions are as in panel D. F, Output of the mnemonic-coding st-RNN for a representative input pattern (topmost row) following silencing of each type of recurrent connection: E-E (green outline, fourth row), I-I (black outline, fifth row), I-E (blue outline, sixth row), and E-I (red outline, seventh row). Second and third rows from top, Expected output and observed output with all connections intact (“None”), respectively. The colored outline indicates the time points during which connections were silenced. Other conventions are the same as in Figure 1D. G, Same as in panel F but following silencing of recurrent connections only during the presentation of the new image. Other conventions are as in panel F.

“Unstable” units are important for stable maintenance

We observed that silencing the activity of unstable units also disrupted stable maintenance (Fig. 3C), in a manner that was comparable to silencing stable units. These results could be readily explained by examining the null space of the output weight matrix (; Eq. 5). For simplicity, we are writing as . In order for the activity of the unstable neurons to not affect the network output, their dynamics should be confined to the null space of In other words, if then the network output would remain unchanged despite silencing the unstable neurons.

Contrary to this hypothesis, we observed that, on average, the activity of unstable neurons was not confined to the null space of (= −0.11, averaged across output units and patterns tested) and was, in fact, comparable to the projection of the stable unit activity onto (; where and denote the activity of the hidden layer “unstable” and “stable” units, respectively). The marginally negative projection of the unstable unit activity onto was possibly a consequence of the larger proportion of inhibitory neurons in the unstable unit subset (11/16), as compared with the stable subset (0/16; see Results).

Tiled st-RNNs with local interactions

We tested whether local interactions among st-RNN modules sufficed to achieve robust change detection (Fig. 4C, +Li, sixth and seventh rows). For this we modeled a network by tiling nine st-RNN modules in a 3 × 3 array, and modeled local interactions between every pair of adjacent modules. The equations for an st-RNN module in each of 3 × 3 modules, describing these interactions are as follows:

| (12) |

where is the output from adjacent st-RNN modules at time and is a local connectivity matrix which specifies the connection weights between the output neurons of one st-RNN module to 10% of randomly selected hidden neurons of the adjacent module. These st-RNN modules were trained and tested in a manner identical to that described previously.

Modeling change detection with high-resolution images

For change detection with natural, high-resolution images (1024 × 768), we tiled 49 152 (256 × 192) st-RNN module pairs, each comprising a mnemonic-coding and change-detection st-RNN, to cover the entire extent of the image. Tiling was performed with 50% overlap along both horizontal and vertical directions (Fig. 4A), such that every 4 × 4 pixel patch (barring those closest to the border) was processed by four different st-RNN modules. The high-resolution image underwent three key transformations before being presented as input to the st-RNN network: (1) foveal magnification with the CVR transform (Wiebe and Basu, 1997); (2) saliency computation with the Itti–Koch saliency algorithm (Itti et al., 1998); and (3) thresholding and binarization based on Otsu's algorithm (Otsu, 1979). These operations are elaborated, next.

First, we modeled a key feature of retinal representation: foveal magnification. When a fixation occurs at a particular region of the image, the representation of the fixated region is mapped onto the fovea with considerably greater spatial resolution, as compared with the periphery. We modeled foveal magnification using the Cartesian variable resolution (CVR) transform (Wiebe and Basu, 1997). For a given fixation location (x0, y0) in the original image, the foveally magnified image was obtained with a nonlinear transformation of original image pixel locations (x, y). Let dx = x – x0 and dy = y – y0 be the distance of an arbitrary pixel at location (x, y) from (x0, y0). Then the logarithmic nonlinear transformation is defined as x' = x0 + dvx and y' = y0 + dvy, where dvx = Sfx * ln(β dx + 1) and dvy = Sfy * ln(β dy + 1). Here, the values of the parameters β, Sfx, and Sfy control the extent of central magnification, and the scaling of the transformed image along the azimuth and elevation directions, respectively. β was set to 0.02, whereas Sfx and Sfy were set so as to maintain the foveally magnified image the same overall size as the original image. An illustration of this foveated transformation is shown in Figure 7B.

Second, we modeled a key aspect of image representation in the SC: visual saliency. Recent studies have shown that SC neurons respond to visual saliency (Veale et al., 2017; White et al., 2017); in fact, saliency information appears to reach the SC even before it is available to the visual cortex (White and Munoz, 2017). Thus, input to the network, at each frame, was based on the saliency map for that frame computed with the Itti–Koch algorithm. The Itti–Koch algorithm (Itti et al., 1998) is inspired by neurobiological properties of the visual cortex. Briefly, the map is computed by combining gradients of color, orientation and intensity information at multiple different spatial scales to compute a single, topographical salience map. The algorithm has been widely employed in various studies that seek to model fixation patterns associated with free-viewing of natural sciences (Adeli et al., 2017).

Third, we binarized the saliency map with an adaptive thresholding algorithm (Otsu, 1979). The algorithm tests different threshold values by dividing the image into a background region and a foreground region based on pixel intensities above and below . Defining and as the variance of background and foreground region, the algorithm iteratively searches to find a ζ that minimizes , where and are the number of pixels in the background and foreground respectively. This binarized saliency map, following thresholding, was provided as input to the st-RNN network to detect changes. In neural terms, such a binarizing operation corresponds to filtering the RNN output with a step-like nonlinearity; a property observed in many output neurons in the SC/OT that signal, categorically, the most salient stimuli in the visual environment based on winner-take-all computations (Knudsen, 2018). The foveally magnified, binarized saliency map of high-resolution, natural images was provided as input to the st-RNN for simulations in Figures 4B and 7B.

Modeling visually-evoked responses and stimulus competition

We computed the visually evoked responses of st-RNN model neurons by simulating the presentation of four different kinds of visual stimuli: static, moving, looming and receding. For all of these simulations we tiled 50,000 st-RNN modules with 50% overlap along both x- and y-directions to encode the entire image (1000 × 800).

A static stimulus was simulated as a circle of radius 125 pixels placed at the center of the 1000 × 800 input image across nine frames (t = 2 to t = 10, in this, and all subsequent cases; Fig. 5A, inset). A moving stimulus was simulated by presenting the same input patch as the static case but by moving it by 10 pixels diagonally on each frame, from the center toward the lower right corner of the image (Fig. 5B, inset). A looming stimulus was simulated as an expanding circle of active pixels from a radius of 8 pixels to a radius of 125 pixels (rate of radius increase: 8 pixels per frame) linearly (Fig. 5C, inset). A receding stimulus was simulated as a contracting circle, with an identical set of frames as the looming stimulus, but reversed in sequential order (Fig. 5D, inset). To avoid an onset transient for the moving and receding stimulus cases, the input for first frame (t = 1) was taken to be identical to that of the second frame (t = 2); for the other two cases (static, looming), the first frame was a blank image. The mean activity of a 250 × 250 central patch of output neurons of the change detection st-RNN is plotted in Figure 5A–D. Figure 5E shows the mean steady-state activity, across the final nine frames (t = 3 to t = 10).

To study the effect of stimulus competition in the network we simulated paired looming stimuli (Fig. 5F,G, “paired”): a fast looming and a slow looming stimulus in the lower right and upper left visual quadrants, respectively. The fast and slow looming stimuli were simulated as expanding circles of active pixels beginning with a circle of radius 8 pixels and expanding at a rate of either 8 pixels per frame (fast looming) or four pixels per frame (slow looming). To compare the strength of activity modulation because of stimulus competition, the same simulations were performed with each stimulus presented alone at the same, respective, location (Fig. 5F,G, “single”). To better visualize activity modulations arising from stimulus competition, the output weights of the GI layer units () were scaled up by a factor of 10 for these simulations. The mean activity of the change detection output neurons representing a 250 × 250 patch, centered on the respective stimulus, is plotted in Figure 5F,G. All simulations in Figure 5 were performed with a partially trained model (checkpoint saved at n = 100 training iterations) to model the comparatively volatile mnemonic representations observed in biology.

Mimicking experimental effects of causal manipulations of SC/OT

We simulated the effect of SC/OT microstimulation on a common psychophysical change detection task (Cavanaugh and Wurtz, 2004; Zénon and Krauzlis, 2012). First, to simulate the orientation change detection task (Fig. 6A), we presented two gratings, one in each visual hemifield. Again, we tiled 50,000 st-RNN modules with 50% overlap along both x- and y-directions to encode the entire image (1000 × 800). Both gratings were presented at full contrast. The gratings were presented at random initial orientations (here, 20° and 135° clockwise of vertical for left hemifield and right hemifield stimuli respectively). This was followed by eight blank frames, following which the gratings reappeared for one frame. Upon reappearance the orientation of the grating in the left hemifiled alone changed to 30° (Fig. 6A, top right, red box). We expected the st-RNN output to identify the grating at this location alone as the “change,” whereas the actual output of the model produced false alarms at other locations also (Fig. 6A, bottom right). To mimic focal microstimulation of the SC/OT, we scaled up the recurrent and output weights (1.1 * Weff) of the mnemonic coding st-RNN units encoding the grating in the either the left (change) or right (no change) hemifield, respectively. Our default model was trained to the point where change detection and mnemonic coding were near perfect, and items were maintained for robustly for even the longest epochs (>200 time points) tested. On the other hand, mnemonic representations in biology are comparatively volatile (Goldman, 2009). To model this shortcoming, and to model its rescue with microstimulation, simulations in Figure 6A–C were performed with a partially trained model st-RNN model incorporating Eq. 4 (checkpoint saved at n = 100 training iterations) whose mnemonic representations decayed more rapidly compared with the fully trained model.

Change blindness experiments with human participants

A total of n = 44 participants (20 females; age range 18–55 years) with normal or corrected-to-normal vision performed a change blindness experiment (Jagatap et al., 2021). Data from four participants, who were unable to complete the task for various reasons, were excluded, as was data from one participant that was not stored correctly because of logistic errors. The final analyses were performed on data from 39 participants (18 females). Details of the change blindness experiment have been reported elsewhere (Jagatap et al., 2021).

Briefly, subjects viewed the images on a 19” Dell monitor (1024 × 768 resolution) seated with their heads resting on a chin rest, with their eyes positioned 60 cm from the monitor. Subjects' eye movements were tracked with an iViewX Hi-speed eye-tracker (SensoMotoric Instruments Inc.) with a sampling rate of 500 Hz; the tracker was calibrated for each subject, individually, before the start of the experiment. Each trial of the change blindness task comprised a pair of alternating images, each of 250-ms duration, separated by blank screens, also of 250-ms duration. Each trial began with subjects fixating continuously for 3 s on a central cross; this was done to ensure consistency in the first fixation across subjects. In each experiment, we tested either 26 or 27 pairs of images (Jagatap et al., 2021, their Fig. S1; Supplemental Data). Of these 20 image pairs contained at least one object that differed in key respects across the image pairs, in size, color or occurrence. The remaining six (n = 9 subjects) or seven (n = 30 subjects) image pairs were catch images that consisted of identical images with no distinction between them. Change and catch trials were interleaved in a pseudorandom order across all the subjects. For the analyses presented in this paper, we used only data from change image trials. Subjects were allowed to freely move their eyes across the scene to locate the change. They indicated correctly detecting the change by fixating on the change region for at least 3 s, and such trials were considered a “hit.” If the subjects failed to detect the change within 60 s, the trial timed-out and was considered a “miss.” Each session lasted for roughly 45 min, including time for instruction and eye-tracker calibration.

Change blindness experiments with the st-RNN model

To simulate the change blindness flicker paradigm (Fig. 7A), each image (A) and the corresponding altered version (A*) were displayed, along with interleaved blank images (B), each for two frames. This cycle was repeated until the model detected the change, or the maximum trial duration elapsed. In our experimental data, the maximum permitted time for human participants to detect the change was 60 s, and each image and blank were presented for 250 ms. To match these statistics, the maximum trial length of our model simulations was set to 240 frames, with each frame equivalent to an interval of 125 ms in the original experiment. The model was permitted to make saccades (gaze shifts, see next) at the end of every set of four frames starting with the fifth frame, such that saccades were made after every sequence of the form ABBA* or A*BBA; this intersaccade interval (fixation duration) of 500 ms approximately matches the mean fixation duration of ∼400 ms that we observed in data from human participants performing the change blindness task (Jagatap et al., 2021).

To simulate saccades in the change blindness task, we adopted the following procedure. First, we computed a priority map for saccades based on three different factors: (1) bottom-up saliency; (2) top-down task goals; (3) inhibition of return. The first and third components have been extensively investigated in previous studies, and are known to be important for determining free-viewing saccade strategies (Itti et al., 1998; Adeli et al., 2017). The second component (top-down goal) is specific to our task, in that participants must scan the image with the goal of identifying changes. We computed each of these factors as follows. First, the image Ai in physical image coordinates at frame i was CVR transformed to retinocentric coordinates, based on gaze fixation at location x (see above, Modeling change detection with high-resolution images). We term this transformed image as Ai,x. Next, the bottom up saliency map was computed from the Itti–Koch algorithm, also as described above (Itti et al., 1998). We term this real-valued map B(Ai,x). Then, the top-down goal map, or “change map,” was computed based on the activation of the output layer of the change-detection st-RNN; this corresponds to a map of the likely location(s) of change, as determined by the network. st-RNN modules were tiled to encode the entire image, and activations were averaged over these overlapping tiles to yield the final top-down map. This map was thresholded at 0.5 to yield a binary map. We term this “change” map T(Ai,x). Finally, the inhibition-of-return (IOR) map was computed by specifying a two-dimensional circularly symmetric Gaussian centered at the point of fixation, with a variance of 40 pixels, and summed over the past m = 50 fixations. This map was CVR transformed and normalized between 0 and 1 to yield the final IOR map, I(Ai,x). These three maps [B(Ai,x), T(Ai,x), I(Ai,x)] were combined to determine the final priority map, P(Ai,x), as follows:

| (13) |

| (14) |

where is a piecewise linear mapping, which saturates at a value of 1 for all arguments greater than 1.

As a final step, the priority map was normalized by its sum across all image locations, so as to transform it into a spatial probability density. The next fixation at each time point was sampled from this probability density function. To execute the fixation, the priority map was reverted to physical image coordinates using an “inverse CVR” transform, the new fixation location was selected and the image was transformed again into retinocentric coordinates with the CVR transform, based on the new gaze fixation point (Fig. 7B).

Comparing human and model gaze metrics

To compare gaze metrics of human participants with the model (Fig. 8B), we computed, for each of the 20 change images, the mean number of fixations, on “hit” trials, across n = 39 participants. We also computed the total distance traveled as sum of Euclidean distance (in pixels) between consecutive fixations. We then compared these human gaze metrics with the same metrics computed from model simulations using Pearson correlations; model metrics were computed by simulating the model for n = 80 iterations and computing the average over these iterations. As a control analysis, we performed these same correlations except that the st-RNN output, corresponding to the top-down component [T(Ai,x); Eq. 13] was excluded when computing the priority map. All other parameters were identical for these control simulations.

Comparing fixation predictions across models

We tested how our model would compare with other established algorithms for predicting human gaze fixations. For this, we computed a fixation map as a continuous map across the entire image from the distribution of discrete fixations by convolution with a Gaussian filter describe and applying a standard low-pass filter with cutoff frequency fc = 8 cycles per image (Bylinskii et al., 2019). For comparing performance across models, we tested various metrics, including those based on the correlation coefficient (CC), similarity, Kullback–Leibler (KL)-divergence and area under the curve (AUC). Previous studies on gaze prediction have suggested that multiple metrics should be used for comparing gaze prediction algorithms, because of different potential kinds of bias in each metric (Bylinskii et al., 2019). We briefly describe, below, these evaluation metrics. In the following, G denotes the fixation map obtained from human data, and S denotes the map estimated using a saliency prediction model.

Linear CC. The CC metric between G and S is given by: CC = cov(G, S)/(σG*σS), where σG and σS are the standard deviations of vectorized maps G and S respectively. This metric provides a measure of the linear relationship between the two maps, with a CC of +1 indicating a perfect linear relationship between the maps.

Similarity. Similarity is computed as the sum of the minimum values at each pixel location for the distributions of S and G. , where SN and GN are normalized to be probability distributions so that, for identical maps, the similarity score is +1.

KL-divergence. KL-divergence measures the difference between the two probability distributions S and G as , where is a regularization constant with value = 2.22 × 10−16 (Bylinskii et al., 2019).

AUC. The area under the receiver-operating-characteristic curve (AUC) is computed by thresholding the saliency map at varied values. The true positive (TP) and false positive (FP) proportions are computed at each of these threshold values, and the AUC is calculated. The AUC-Borji (Borji et al., 2013) algorithm defines the proportion of TPs as the number of the saliency map (S) pixels above threshold relative to the total number of fixated pixels in the image, and defines the proportion of FPs as the number of saliency map (S) pixels above threshold relative to a uniform random sample, equal to the number of nonfixated pixel locations.

The comparison of our eye movement model was performed against Salicon with the images used in our human change blindness experiment, and not with the MIT300 dataset. This is because we seek to test our model against ground-truth human fixation data in a change blindness task, whereas the MIT300 dataset is for free-viewing saliency prediction.

Data and code availability

Data and code for reproducing the results in this paper are available in the following repository: https://github.com/yashmrsawant/ChangeDetection_stRNN.

Results

A midbrain-inspired st-RNN model

The SC, and the OT, its homolog in other vertebrates, receives direct visual input from the retina and projects to cortical and subcortical regions involved in controlling attention and eye-movements (Knudsen, 2011; Krauzlis et al., 2013; Fig. 1A). Neurons in the SC/OT exhibit spatially restricted visual receptive fields with topographic connectivity: each neuron encodes only a portion of visual space (typically 5–10°; Cynader and Berman, 1972), and connects predominantly with downstream neurons that encode overlapping regions of space (Knudsen, 2011; Sridharan et al., 2014). In addition, the SC/OT is multilayered, comprising recurrently connected excitatory (E) and inhibitory (I) neural populations (Knudsen, 2018). In particular, layer 10 of the SC/OT contains specialized recurrent E-I neurons that project to a midbrain inhibitory (GABAergic) isthmic nucleus, the Imc (Fig. 1A; Goddard et al., 2012, 2014), which is hypothesized to play a key role in resolving stimulus competition across space (Knudsen, 2018; see next section).

We modeled the recurrent E-I circuit in SC/OT layer 10, employing a RNN with key neurobiological constraints (Fig. 1B). First, the input layer of the RNN was organized topographically, such that each patch of the input, in this case a high-resolution image, was represented by a localized patch of neurons, mimicking spatially localized retinal input to the SC. Second, following Dale's law for biological neurons, each presynaptic neuron in the model provided either excitatory or inhibitory inputs to all of its postsynaptic partners. Recurrent connections occurred both within and across E and I neural populations. Third, each neuron (both E and I) was permitted to connect to only to a small group of neurons in its neighborhood such that mutually connected neurons encoded overlapping regions of the image (Materials and Methods). This connectivity pattern ensured topographic connectivity, while also guaranteeing a sparse weight matrix for all layers (Fig. 1C; Materials and Methods, Eq. 1). We term such a RNN, with sparse, topographic connectivity, as an st-RNN. st-RNN dynamics were simulated with a phenomenological rate model, previously employed in simulations of neural population dynamics (Eq. 2, Materials and Methods; Ganguli and Latham, 2009; Sussillo, 2014). For illustration, we first describe the results of training and testing the st-RNN with simple, 8 × 8 binary image patches as input. In a subsequent section, we test the st-RNN's ability to detect changes in high-resolution, natural images.

Two key operations are necessary for solving the change blindness task (Fig. 1D). First, the network must robustly maintain a representation of the first image (Fig. 1D, A) over the duration of the blank frames (Fig. 1D, B). Second, this maintained information must be compared against the subsequently presented image (Fig. 1D, A*) for detecting and localizing the change successfully. These two operations were achieved with two different st-RNNs, operating in tandem (Fig. 1D). The first st-RNN, the “mnemonic coding” RNN, encoded and maintained the representation of the input image (A) over the duration of one or more blank frames (Fig. 1D). Moreover, this RNN learned to flexibly update its representation when presented with a new input image (A*) following the blank frames (Fig. 1D). The second st-RNN, the “change detection” RNN, monitored variations in output of the mnemonic-coding RNN, which enabled it to detect and localize the change (Fig. 1D). Both st-RNNs were trained independently, with 200,000 8 × 8 binary image patches, and tested with 20,000 validation patches, not used in the training dataset (Materials and Methods). We then compared the performance of the st-RNN against a conventional, fc-RNN, without constraints on sparse or topographic connectivity (Materials and Methods).

The st-RNN maintained its input and detected changes with high accuracy (Fig. 1E, top, blue; mean squared error or MSE: mnemonic coding st-RNN = 1.7 ± 0.02%, change detection st-RNN = 1.6 ± 0.02%, mean ± std at iteration 200; n = 20,000 validation patches). The fc-RNN's also detected changes with high accuracy (Fig. 1E, top, red; MSE: mnemonic coding fc-RNN = 2.6 ± 0.5%; change detection fc-RNN = 2.5 ± 0.1%), but the MSE for the fc-RNN was marginally, albeit significantly higher, as compared with the st-RNN (p < 10−6, n = 20,000 patches, Wilcoxon signed rank test). In addition, the fc-RNN training converged much more slowly (∼7.5–9× longer) both for the mnemonic-coding and change-detection operations (Fig. 1E, bottom, red vs blue curves; mnemonic-coding: st-RNN = 12 epochs, fc-RNN = 90 epochs; change-detection: st-RNN = 10 epochs, fc-RNN = 93 epochs; # iterations to achieve a training MSE of 1%, across 10,000 training samples). Moreover, the proportion of non-zero weights in the hidden layer of the fc-RNN (45.7%) was, far higher than the proportion of non-zero weights in the st-RNN (2.9%; Fig. 1F; p < 10−6; Kolmogorov–Smirnov test for significant differences in weight distributions).

We sought to understand the relative advantages of sparsity versus topographic connectivity for change detection. First, we asked whether the topographic nature of connectivity provided a specific advantage for change detection. We trained a network with random (nontopographic) connectivity, but with weight sparsity identical to the st-RNN; we call this network a sparse, non-topographic RNN (snt-RNN). The weight matrix following training is shown in Fig. 1F. The snt-RNN training converged more slowly (Fig. 1E, green) as compared with the st-RNN (Fig. 1E, blue). Specifically, the number of iterations for convergence (1% training MSE) was more than 2-fold higher for the snt-RNN as compared with the st-RNN (mnemonic-coding: st-RNN = 12 epochs, snt-RNN = 25 epochs; change-detection: st-RNN = 10 epochs, snt-RNN = 22 epochs). Second, we asked whether sparsity of the weight matrix was responsible for faster training. As an alternative model, we tested whether reducing the degrees of freedom, by reducing the number of weight updates, would also achieve faster training; for this, we applied a sparse mask (Materials and Methods, Eq. 1) to the gradient updates, rather than to the full weight matrix itself, during training. In this case, again we observed considerably slower convergence (Fig. 1E, black) as compared with the original st-RNN model (Fig. 1E, blue).

Finally, we performed simulations of the model by varying the level of sparsity in the connections, and with different sizes of local, topographic connections in the hidden layer (Fig. 1G; Materials and Methods). Increasing the level of sparsity led to systematic degradations in performance for both the mnemonic coding and change detection st-RNNs, likely because of the stronger constraints on connectivity imposed by progressively sparser weight matrices (Fig. 1H,I, columns). By contrast, decreasing the receptive field size degraded performance for the mnemonic coding st-RNN but slightly improved performance for the change detection st-RNN (Fig. 1H,I, rows). Because of its fine-grained, spatially local nature, the change detection computation was rendered more challenging when signals were pooled across multiple neighboring neurons.

In summary, our midbrain inspired st-RNN architecture was able to successfully solve the challenging change blindness task. Moreover, the st-RNN accomplished change detection far more efficiently, with considerably fewer connections and significantly faster learning rates, compared with a conventional, fc-RNN. Finally, neither a network with sparse, but unstructured, connectivity nor a network with a comparably simple, but a conceptually different, learning strategy were as fast as the st-RNN model at learning to successfully detect changes. In other words, both sparsity and topographic connectivity were relevant for efficiently learning to detect changes (see Discussion for caveats regarding the biological plausibility of the supervised learning rule).

Mechanisms underlying stable maintenance and flexible updating

We analyzed computational mechanisms by which the st-RNN achieves robust change detection in this change blindness task. The computation performed by the change detection st-RNN is a comparatively simple one: it needs to compute differences between each successive frame of the mnemonic coding st-RNN's output, as long as the latter maintains an active representation of the latest input. The key challenge, then, rests with the mnemonic coding st-RNN, which must not only maintain a stable representation of the first image (Fig. 1D, A) over the course of the blank epoch (Fig. 1D, B), but must also rapidly and flexibly update its representation as soon as the new image is presented (Fig. 1D, A*).

We analyzed, first, low-dimensional dynamics of the mnemonic coding st-RNN to understand how it was able to maintain image information robustly during the blank epoch. For this we identified a “mnemonic” subspace, based on the activity of the hidden layer units of the mnemonic coding st-RNN (Murray et al., 2017); the dimensions of this subspace represent those that capture maximal variance across stimuli during the blank epoch (stimulus PCs; Fig. 2A; Materials and Methods). We then plotted the activity trajectories of the hidden layer units in this mnemonic subspace (Fig. 2C, colored dots with connecting lines).

The mnemonic coding subspace encoded interpretable, and (partially) dissociable, features of stimuli during the stimulus presentation epoch: stimulus PC1 encoded the proportion of “active” pixels (sparsity) in the input image (Fig. 2A, x-axis, dark to light shading) whereas stimulus PC2 encoded the location of these active pixels – in terms of their approximate center of mass along the vertical axis (Fig. 2A, y-axis). Individual hidden layer units exhibited markedly heterogeneous dynamics during the maintenance epoch. Some units (Fig. 2B, top) exhibited stable activity during maintenance, whereas others (Fig. 2B, bottom) exhibited unstable and oscillatory patterns of activity. Despite these wide variations in individual unit dynamics, the projection of hidden layer activity in the mnemonic subspace was remarkably stable over time, even in the absence of sensory input (Fig. 2C; different colors show activity projection at different time points during the blank epoch). Dynamics were primarily confined to a “Time PC,” orthogonal to the stimulus PC axes (Fig. 2C, left; Materials and Methods). In other words, stable coding in the mnemonic subspace emerged despite heterogeneous dynamics in individual units' activities. Each of these characteristics is a hallmark of the “stable subspace model,” a recently proposed framework for stable maintenance of information in the brain (Druckmann and Chklovskii, 2012; Murray et al., 2017). In Materials and Methods, Stable network output despite unstable activity in individual units, we analyze these observations mathematically to show how a stable representation may arise in the network with unstable and heterogenous units (Druckmann and Chklovskii, 2012).